Asking just out of pure curiosity

If I raytraced this scene

would a or b appear on my monitor?

It's this (because your light is an area light):

the path of a beam of light backwards into the camera

Such statements are always bullshit, or at least inaccurate.

Statements like 'rays transport light in a physically accurate way, like in the real world' always reveal that the writers either have no clue, or are using gross simplifications for the audience and treating them as dumb. I kinda hate it.

But it's not easy to express it better. I'll try in two ways...

For the double slit scene you gave, we actually expect a result like this (ignoring quantum confusions):

I tried to draw gradients of white-red-white in Paint.

The reason for the gradients is this:

As we move from pixel to pixel of our image, we can see only parts of the spherical light through the slit.

First we see only the left side of the sphere, so we see only a small area of light, and this lesser light gives us the rosy color.

Then we see the center section of the light, but still just part of it - not the whole sphere. But the section we see has larger area, so we get red.

Then we see the right side of the light and get rosy again.

Illustrating what the left and middle pixel can see through the slit, but too lazy to draw the right. Sorry it's so small, you'd need to magnify:

So we want those smooth gradients because we can see smaller or larger parts of the light.

But RT is a binary visibility test, which cannot provide this information.

We may pick a random point on the light, trace a ray and test if the point is visible through the slit. That's the only information the ray provides.

(There also is NO LIGHT associated with a ray at all. Never. WE need to implement this association in the higher-level algorithm, which uses the ray visibility test among other things.)

To sum it up: The ray allows us to do point samples, but those points have no area. We get the noisy image I showed first from the randomized point samples drawn per pixel from the light sphere.
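To make the 'binary visibility test' idea concrete, here is a minimal sketch in Python. It is not the scene from the picture: I flatten everything to 2D and assume the light is a line segment at y = 2, the occluder with the slit sits at y = 1, and the pixels lie along y = 0. All names and numbers (`SLIT_HALF_WIDTH`, `LIGHT_HALF_WIDTH`, `light_point_visible`, `one_sample_image`) are made up for illustration.

```python
import random

# Assumed 2D toy scene (not the original picture):
SLIT_HALF_WIDTH = 0.1   # slit spans x in [-0.1, 0.1] on the occluder at y = 1
LIGHT_HALF_WIDTH = 0.5  # light spans x in [-0.5, 0.5] at y = 2


def light_point_visible(pixel_x, light_x):
    """Binary visibility test: True if the straight segment from the pixel
    (pixel_x, 0) to the light point (light_x, 2) passes through the slit."""
    # The occluder is halfway between pixel and light, so the segment
    # crosses y = 1 at the midpoint of the two x coordinates.
    crossing_x = 0.5 * (pixel_x + light_x)
    return abs(crossing_x) <= SLIT_HALF_WIDTH


def one_sample_image(pixel_xs, rng):
    """One random light point per pixel: every pixel ends up 0.0 or 1.0."""
    image = []
    for pixel_x in pixel_xs:
        light_x = rng.uniform(-LIGHT_HALF_WIDTH, LIGHT_HALF_WIDTH)
        image.append(1.0 if light_point_visible(pixel_x, light_x) else 0.0)
    return image


rng = random.Random(1)
pixels = [i / 10 - 1.0 for i in range(21)]  # pixel centers from -1.0 to 1.0
noisy = one_sample_image(pixels, rng)
print(noisy)
```

Each pixel is either fully lit or fully black, nothing in between. That is all a single ray can tell us, and it is where the noise comes from.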

To get to the desired results we now have two options:

Blur the noisy image.

Or do MANY point samples per pixel and take the average of all.
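The second option can be sketched like this, again in a simplified 2D setup that I am assuming for illustration (line-segment light at y = 2, slit at y = 1, pixels at y = 0; the names `visible_fraction` and `light_point_visible` are hypothetical). Averaging many binary tests per pixel approximates how much of the light is visible from that pixel.

```python
import random

# Assumed 2D toy scene (not the original picture):
SLIT_HALF_WIDTH = 0.1   # slit spans x in [-0.1, 0.1] on the occluder at y = 1
LIGHT_HALF_WIDTH = 0.5  # light spans x in [-0.5, 0.5] at y = 2


def light_point_visible(pixel_x, light_x):
    """Binary visibility test between pixel (pixel_x, 0) and light point (light_x, 2)."""
    return abs(0.5 * (pixel_x + light_x)) <= SLIT_HALF_WIDTH


def visible_fraction(pixel_x, num_samples, rng):
    """Average of many binary point samples: estimates the visible part of the light."""
    hits = 0
    for _ in range(num_samples):
        light_x = rng.uniform(-LIGHT_HALF_WIDTH, LIGHT_HALF_WIDTH)
        if light_point_visible(pixel_x, light_x):
            hits += 1
    return hits / num_samples


rng = random.Random(1)
for pixel_x in (-0.6, -0.3, 0.0, 0.3, 0.6):
    print(pixel_x, round(visible_fraction(pixel_x, 10000, rng), 2))
```

For this toy setup the exact visible fraction is 0.4 at the center pixel and 0.1 at x = ±0.6, so the averaged values fall off smoothly towards the edges instead of flipping between 0 and 1. A flat light gives a plateau in the middle; a round light like the sphere would give the rosy-red-rosy gradient because its visible area changes everywhere.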

But at this point we already have 3 concepts to generate our image: RT (to do visibility or intersection tests), the idea to use random samples, and some way to integrate those samples somehow so we get an approximation of area.

And we need to explain / mention all those concepts to associate RT with the application of generating images.

To avoid this rhetorical shortcoming you could use the term path tracing instead of ray tracing, for example, because PT defines all those concepts, but RT does not.

Now the second example:

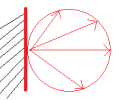

Imagine some light hits the red wall. And if the wall has some diffuse material, the light scatters around like so (no matter where it comes from):

I have drawn some of the infinite number of rays originating from one point on the wall, illustrating the light bouncing off the point on the wall.

Their magnitudes form a lobe. In this case, because we assume a perfectly diffuse material, the lobe has the shape of a sphere.

The important observation here is that a ray can not describe the scattering function. Light goes into many directions, not just one.

So instead of talking about rays, we want to talk about the function which describes the scattering distribution over all directions.

To approximate this function, we can use many rays, but it's clear the finite number of rays only tells a part of the whole story.
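Here is a small sketch of what 'approximating the function with many rays' can look like. I assume a perfectly diffuse (Lambertian) surface with normal (0, 0, 1). Instead of giving rays different magnitudes, I draw ray directions with a density proportional to cos(theta), which describes the same lobe. The helper names `sample_cosine_direction` and `fraction_within` are made up for this example.

```python
import math
import random


def sample_cosine_direction(rng):
    """Random unit direction around the normal (0, 0, 1), with probability
    density proportional to cos(theta) (assumed Lambertian lobe)."""
    u1, u2 = rng.random(), rng.random()
    radius = math.sqrt(u1)          # point on the unit disk ...
    phi = 2.0 * math.pi * u2
    # ... projected up onto the hemisphere gives a cosine-weighted direction.
    return (radius * math.cos(phi), radius * math.sin(phi), math.sqrt(1.0 - u1))


def fraction_within(angle_deg, num_rays, rng):
    """Estimate which fraction of the scattered rays leaves within
    angle_deg of the surface normal, using a finite number of rays."""
    cos_limit = math.cos(math.radians(angle_deg))
    inside = 0
    for _ in range(num_rays):
        if sample_cosine_direction(rng)[2] >= cos_limit:
            inside += 1
    return inside / num_rays


rng = random.Random(0)
for num_rays in (10, 1000, 100000):
    print(num_rays, fraction_within(30.0, num_rays, rng))
```

For this lobe the exact answer is sin²(30°) = 0.25. With 10 rays the estimate is rough, and only with many rays does it settle near that value, so any finite set of rays is just a sampling of the function and not the function itself.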

So again the concept of a ray is not enough to tell anything about optics.

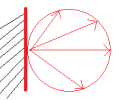

Now the same example again, but it's a perfect mirror now, not a wall:

Light comes in, gets reflected and goes out. Only in this special and theoretical case is a single ray enough to describe the function, because the shape of our lobe is (coincidentally) a line.
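For the mirror, the whole 'scattering function' collapses to the standard reflection formula r = d - 2 (d·n) n. A minimal sketch, assuming `normal` has unit length (the function name `reflect` is just my choice here):

```python
def reflect(direction, normal):
    """Mirror an incoming direction about a unit-length surface normal:
    r = d - 2 (d . n) n"""
    d_dot_n = sum(d * n for d, n in zip(direction, normal))
    return tuple(d - 2.0 * d_dot_n * n for d, n in zip(direction, normal))


incoming = (1.0, -1.0, 0.0)   # travelling down and to the right
normal = (0.0, 1.0, 0.0)      # mirror facing up
print(reflect(incoming, normal))  # (1.0, 1.0, 0.0)
```

One direction in, exactly one direction out. No random samples and no averaging are needed, which is why this case fits the single-ray picture so well.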

And because that's all the very first attempts at RT implemented, the foundation of the current rhetorical issue was laid back then.

If we could figure out a single sentence to say all this, we could probably address the issue...