What is the estimated BOM for the 4080? MSRP of 4080 is 50%* higher than 3080. Does it really cost 50% more to produce? I mean a whole GPU, not just the die.

*Adjusted for inflation. $700 in Sept. 2020 is $800 in Sept. 2022.

Haven't you been listening since the 40 series release? Die size, BOM and historical reference aren't relevant anymore; all that's relevant is what Nvidia decides the price is.
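For anyone who wants to check the 50% figure, here is a rough sketch of the arithmetic. The launch MSRPs of $699 (3080) and $1,199 (4080) are assumptions not stated in the thread, and the inflation factor is simply the post's own rounding of $700 to $800, not an official CPI lookup.

```python
def pct_increase(old: float, new: float) -> float:
    """Percentage increase going from old to new."""
    return (new - old) / old * 100.0

# Assumed launch MSRPs in USD (not given in the thread).
MSRP_3080 = 699.0
MSRP_4080 = 1199.0

# Inflation factor implied by the post: $700 in Sept. 2020 ~ $800 in Sept. 2022.
INFLATION_FACTOR = 800.0 / 700.0

# Nominal increase ignores inflation entirely.
nominal = pct_increase(MSRP_3080, MSRP_4080)

# Real increase compares the 4080 price with the 3080 price in 2022 dollars.
real = pct_increase(MSRP_3080 * INFLATION_FACTOR, MSRP_4080)

print(f"nominal increase: {nominal:.1f}%")
print(f"inflation-adjusted increase: {real:.1f}%")
```

Under those assumptions the nominal jump is a bit over 70% and the inflation-adjusted one lands at roughly 50%, which matches the number in the post.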

- Status

- Not open for further replies.

arandomguy

Veteran

In general I don't feel people should emphasize BOM as it relates to pricing. BOM does affect pricing in the sense that it changes the margins, but these aren't cost-plus pricing models.

Just take the RTX 4080 Super: it uses a more fully enabled AD103 die and faster memory than the RTX 4080, and still launched cheaper. Maybe TSMC 4N has dropped that much in cost over a year or so?

But we have more examples in the past of price drops over a period of just months: GTX 770/780 post release, the GTX 280, the Fury Nano (somehow suddenly cheaper to produce than the Fury X after a few months?) and the 5700/XT (not even released...), just to name some from both sides.

Haven't you been listening since the 40 series release? Die size, BOM and historical reference aren't relevant anymore; all that's relevant is what Nvidia decides the price is.

Eh, when have die size and BOM ever dictated price? Price has always been a function of competition and what people are willing to pay. Basic economics.

Deleted member 2197

Guest

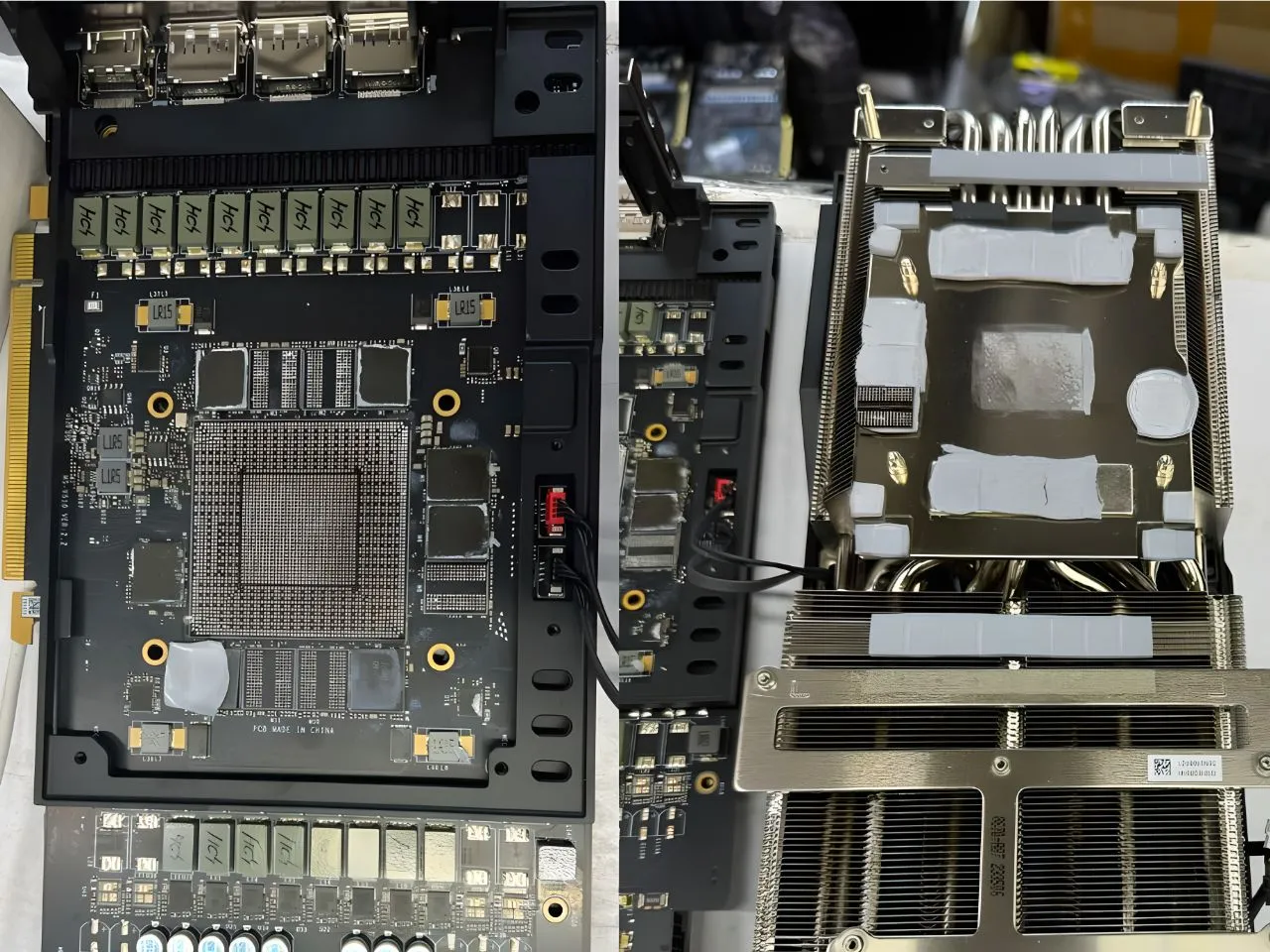

Scammers Selling Used RTX 4090 Graphics Cards without Memory or GPU in China

In recent developments originating from China, a concerning trend of counterfeit RTX 4090 graphics card sales has emerged. These counterfeit cards are notably lacking critical components, including the GPU and memory modules.

One notable case reported in Hong Kong involved an individual who purchased an RTX 4090 for approximately $1,660, only to discover that the card was non-functional. Despite their attempts to seek assistance from local authorities, their efforts proved fruitless, leading them to seek media coverage through specialized Chinese press blogs. Online forums, particularly Chiphell, have seen a surge in similar complaints. Prospective buyers, particularly those interested in used GeForce RTX 4090 cards, often fall victim to enticing pricing without realizing the potential for fraudulent transactions.

Eh, when have die size and BOM ever dictated price? Price has always been a function of competition and what people are willing to pay. Basic economics.

It's that and the fact that the floor price will also be impacted by all of the other R&D costs. When you buy an Nvidia GPU you're paying for all of the graphics researchers, the DLSS training, the engineering hours on the SDKs, the driver team etc. If they just put a low margin on the BOM they'd go out of business.

That's a good point but they won't be going out of business if they lowered gaming GPU prices lol. Their market cap is higher than the GDP of Turkey.

Yah, at this point I guess the gaming business is small to them, and it's not the majority of their operating expenses. Still, there's more to pay for in a product than a bill of materials. It's BOM plus labour, and in tech products labour costs can be enormous once you factor in R&D.

Their market cap is higher than the GDP of Turkey

You do know of course that these things are not comparable. I hope.

Yea I didn't mean to imply that it was. Just giving a sense of scale. It's an ungodly amount of money.

But that's mostly thanks to datacenter revenue, not gaming. If they made less money on gaming, they would naturally invest less and less of their R&D into gaming GPUs, and then everyone would complain even more about the lack of innovation. Alternatively, NVIDIA actually pricing their GPUs aggressively and crushing AMD/Intel to the point where the latter deprioritise discrete graphics in favour of AI is not really in anyone's interests.

Of course, you could argue they are already putting less R&D into gaming: Turing was a huge step forward with an insane amount of graphics-centric innovation, but (arguably) in 4 years they delivered fewer significant architectural changes with Ampere/Ada than some other GPU vendors (including PowerVR at its peak) achieved with just 1 year projects. On the other hand, their tensor cores have been heavily rearchitected every single generation and there's just a huge amount of AI/HPC-specific stuff in Hopper in general.

I guess we'll see how much of a graphics-specific improvement (features and PPA) there is in Blackwell... I'm cautiously optimistic, although I guess if they believe AI is at the core of future 3D graphics, then it might end up a bit disappointing for everything else, we'll see...

DegustatoR

Legend

The fact that Nvidia is making more and more money on DC products puts more pressure on the gaming side of the business to bring in more profits, not less, to keep up with the DC side and be just as profitable. If it were the other way around, the gaming business would just wither and die eventually, which I doubt anyone here wants to happen. The idea that Nvidia would for some reason subsidize the gaming products from their DC profits is just insane; that's not how any commercial company does business.

With limited CoWoS capacity they can't just expand DC stuff, and gaming still brings healthy profit, not to mention synergies in R&D. Why would you shoot a milking cow just because another cow gives more milk, when you can keep both?

DegustatoR

Legend

There aren't many synergies in R&D there nowadays, and CoWoS capacity being limited doesn't mean much when you can sell gaming grade GPUs on AI markets. It's just a running misconception that a company should (why?) sell its products cheaper because it can compensate for the lack of profits in that market with profits from another market which is doing well right now. Nvidia is not a charity foundation; it doesn't make products so it can give them away for free to those who can't buy them.

https://videocardz.com/newz/modder-shrinks-geforce-rtx-4070-ti-to-itx-form-factor

like this small form factor

Cards should be smaller across the board. My baseline MSI RTX 4070 Ventus 3x is gigantic. It barely fits in my case. There's no reason for this. It is hard locked to 200W. Yea I could have gotten the Ventus 2x but I didn't really think about it and it didn't occur to me that they would make something so large for a 200W card.

And then there's the 4090 which is so heavy it needs a kickstand or it might break off the PCIe slot or twist until the core pops off. Ridiculous.

arandomguy

Veteran

There's no reason for this. It is hard locked to 200W. Yea I could have gotten the Ventus 2x but I didn't really think about it and it didn't occur to me that they would make something so large for a 200W card.

Noise tolerance preferences can vary greatly. 200W is actually not simple to cool with just 2 fans. I'm guessing the Ventus 2x will likely need fan speeds closer to 2k RPM (if not higher), which at least for me is not preferable.

Also it might be counterintuitive, but those triple fan long style coolers on the base models are simpler/cheaper than a smaller 2 fan design that achieves the same results. You'll notice the base 3 fan models often don't outperform some smaller 2 fan designs, for example. But those 2 fan designs often have to employ more "complex" heatpipe layouts and fin designs among other things.

Yah, fans and plastic shrouds are a lot cheaper than copper and vapor chambers.

I can't really argue this point, but my GTX 970 consumed around 200W after OC (probably more like 175W) and it wasn't nearly as long and wasn't very loud. It was also way cheaper but I guess that ship has sailed.

Deleted member 2197

Guest

CES 2024 Nvidia booth coverage via KitGuru

DavidGraham

Veteran

NVIDIA secured a $500 million order from India for tens of thousands of H100 and H200 GPUs.

wccftech.com

NVIDIA Secures a Huge AI GPU Order From India, Worth Over Half A Billion Dollars

NVIDIA has secured a huge AI order from Indian data center operator Yotta, as total order valuation is expected to exceed the $ billion mark.
