I didn't clue in, but Gamers Nexus did. The Plague Tale entry on the graph says the game only supports DLSS 3, so the results are likely comparable and down to the architectural differences, because both cards would be using the same frame gen mode. Looks like maybe 30-40% if you flip between the different cards.

TopSpoiler

Regular

Will RTX Mega Geometry be included in DXR specs?

I wonder how much of the power budget increase on the 5080 and lower is eaten up by GDDR7? I'm assuming it would be more efficient for the same bandwidth, but since they've pushed the bandwidth higher it'll draw more power overall.

My RTX 3080 is about 29 TFLOPs, so the 5080 is about double, which is a good upgrade.
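
For anyone wanting to check the "about double" figure, here is a rough sketch of the usual peak FP32 arithmetic (2 FLOPs per CUDA core per clock). The core counts and boost clocks below are assumptions taken from public spec sheets, not from this thread, and real clocks vary by card.

```python
def fp32_tflops(cuda_cores: int, boost_ghz: float) -> float:
    """Peak FP32 throughput, counting one fused multiply-add (2 FLOPs) per core per clock."""
    return 2 * cuda_cores * boost_ghz / 1000.0


# Assumed spec-sheet values (not stated in the thread).
rtx_3080 = fp32_tflops(cuda_cores=8704, boost_ghz=1.710)
rtx_5080 = fp32_tflops(cuda_cores=10752, boost_ghz=2.617)
ratio = rtx_5080 / rtx_3080

print(f"3080: {rtx_3080:.1f} TFLOPS, 5080: {rtx_5080:.1f} TFLOPS, ratio: {ratio:.2f}x")
```

With those assumed numbers the ratio lands a bit under 2x, which is consistent with "about double".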

NVIDIA RTX Neural Rendering Introduces Next Era of AI-Powered Graphics Innovation | NVIDIA Technical Blog

NVIDIA today unveiled next-generation hardware for gamers, creators, and developers—the GeForce RTX 50 Series desktop and laptop GPUs. Alongside these GPUs, NVIDIA introduced NVIDIA RTX Kit…

To be clear, will RTX Neural Shaders be a RTX 50 series exclusive or not? Because that whole texture compression stuff alone already sounds upgrade-worthy.

Is the GeForce Blackwell whitepaper available? What hardware limitations are preventing the 4000 series from supporting DLSS Multi-Frame Generation? It doesn't seem to be due to baseline AI TOPS speed, as the 5070 (FP4) is lower than the 4090 (FP8) in terms of TOPS.

Framegen was limited to 40-series because it required Ada’s upgraded optical flow analyzers to get good results (it ran on 30 series cards, just not particularly well IIRC). If I had to guess there’s something similar going on here.

Honestly I would be very happy with a framegen that actually doubled fps in GPU limited scenarios. If a 5090 can consistently hit that I might consider getting one (a 25% raw upgrade isn’t especially compelling).

I am assuming the 4x framegen will only hit those numbers in CPU limited games.

Based on a quick search, many games using Glide did have other renderer implementations. Sure, you could make an argument that in a few instances Glide renderers were superior to the others, but for the most part it wasn't the only hardware-based option, let alone the only option at all ...

I said that many games in the early days supported only Glide (or, to a much lesser extent, some other proprietary API), not that most Glide-supporting games were exclusives. As I said, in the early days there weren't many alternatives, and most games chose to support the best one, which was Glide. The situation only got better (and quite gradually at that) around the time of Direct3D 5 and better OpenGL support. We had the prevalence of OpenGL support in gaming cards thanks to id Software's insistence on not using proprietary APIs, which is why we had the "GlideGL" wrapper. This allowed Quake to run on the RIVA 128, which had a reasonably good OpenGL implementation and thus a fighting chance before Direct3D matured enough.

This is why I said if you have 90% market share it's really not difficult to get developers to support your exclusive features. Heck, even today, while path tracing is not an exclusive feature in theory, it sort of is in practice, and you see game developers willing to spend resources on it.

Blackwell has an updated display engine for frame pacing:

To address the complexities of generating multiple frames, Blackwell uses hardware Flip Metering, which shifts the frame pacing logic to the display engine, enabling the GPU to more precisely manage display timing. The Blackwell display engine has also been enhanced with twice the pixel processing capability to support higher resolutions and refresh rates for hardware Flip Metering with DLSS 4.
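
NVIDIA doesn't describe how the hardware does this, so the following is only a sketch of what "even pacing" means as a target: the generated frames get flip times spread uniformly between two rendered frames. The function name and the millisecond timestamps are made up for illustration.

```python
def paced_flip_times(t_prev_ms: float, t_next_ms: float, generated: int) -> list[float]:
    """Return evenly spaced flip times for `generated` extra frames plus the next rendered frame.

    t_prev_ms is when the previous rendered frame was shown, t_next_ms is when
    the next rendered frame is due. This is an illustration of the pacing goal,
    not NVIDIA's Flip Metering implementation.
    """
    if generated < 0:
        raise ValueError("generated must be >= 0")
    step = (t_next_ms - t_prev_ms) / (generated + 1)
    return [t_prev_ms + step * i for i in range(1, generated + 2)]


# 4x mode: three generated frames between rendered frames that are 40 ms apart.
print(paced_flip_times(0.0, 40.0, generated=3))
```

Any jitter away from those evenly spaced times is what shows up as uneven frame pacing, which is presumably why the timing was moved out of software and into the display engine.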

NVIDIA DLSS 4 Introduces Multi Frame Generation & Enhancements For All DLSS Technologies

75 DLSS Multi Frame Generation games and apps available on day 0; graphics industry’s first, real-time transformer model enhances image quality for DLSS Ray Reconstruction, Super Resolution, and DLAA.

www.nvidia.com

Interesting that they have replaced the optical flow field from the OFA with an AI model.

GhostofWar

Regular

Bryan seemed to imply in the DLSS 4 video that it's the extra tensor core performance allowing them to generate more frames. I'm not exactly sure how that would pan out with the 4090's tensor performance compared to low/mid-range Blackwell.

Time stamped to the tensor core bit.

He does mention the flip metering as well, like troyan mentions above.

To be clear, will RTX Neural Shaders be a RTX 50 series exclusive or not? Because that whole texture compression stuff alone already sounds upgrade-worthy.

I don’t think it’ll be exclusive but it’ll probably run better.

Really want to see if the new DirectX spec will finally open up some of this technology and make it run across multiple vendors.

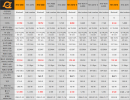

So they claimed 125 TF shader performance on the 5090 at the presentation but it’s actually closer to 105?

I think the 125 TF shader performance probably refers to a "full" RTX Blackwell, along with the "4000 TOPS" and 380 "RT TFLOPS" numbers, where the actual 5090 numbers are 3352 TOPS and 318 RT TFLOPS. This suggests that the "full" Blackwell has ~20% more units (probably ~200 SM units).
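
The ~20% estimate can be reproduced from the numbers quoted above. The sketch below averages the three "full chip"/5090 ratios and scales the 5090's SM count by that. The 170 SM figure for the 5090 is an assumption from public spec sheets (not from this thread), and the scaling assumes equal clocks, which nothing here establishes.

```python
# Ratios of the "full" RTX Blackwell figures to the 5090 figures quoted in the thread.
full_over_5090 = {
    "AI TOPS": 4000 / 3352,
    "RT TFLOPS": 380 / 318,
    "shader TFLOPS": 125 / 105,
}

RTX_5090_SMS = 170  # assumption: spec-sheet SM count, not stated in the thread

avg_ratio = sum(full_over_5090.values()) / len(full_over_5090)
estimated_full_sms = round(RTX_5090_SMS * avg_ratio)

for name, ratio in full_over_5090.items():
    print(f"{name}: {ratio:.3f}x")
print(f"average: {avg_ratio:.3f}x -> roughly {estimated_full_sms} SMs if clocks were equal")
```

All three ratios come out at about 1.19x, so they are at least consistent with each other. If the "full" part simply runs at higher clocks, the implied unit count would be lower than this estimate.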

Just exactly what 'other' proprietary API are you talking about (besides consoles at the time, which were different beasts in nature), and for how long of a period do you define "early days"? A few months at best?

This '90%' figure is only applicable if you ignore console or mobile platforms entirely, and that's not even factoring in the question of performance/compatibility across different HW generations from the same vendor. I can see how developers would justify integrating a better RT implementation when it can be tested against a standardized API, yet how exactly would they rationalize committing to a radically exotic texturing/shading/material pipeline, especially IF they decide to fall back on the current pipeline as well?

Nvidia: We have also sped up the generation of the optical flow field by replacing hardware optical flow with a very efficient AI model. Together, the AI models significantly reduce the computational cost of generating additional frames.

Since Nvidia replaced the hardware optical flow accelerator with an AI model that runs on Tensor Cores in DLSS 4, wouldn’t this open the door for RTX 2000 and 3000 series GPUs to support the Frame Generation feature? Why is it still exclusive to the RTX 4000 and 5000 series?

NVIDIA is saying the DLSS 4 model requires a lot more tensor power than DLSS3 - in which case using it on older cards could actually lower performance in some games rather than increasing it because computing the fake frames would eat up more frametime than you save with a lower internal framerate.
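
A toy model makes the break-even point easier to see. It assumes the GPU is the bottleneck and that rendering and frame generation run back to back on the same GPU, so each generated frame adds its cost to the frame time. The millisecond costs below are hypothetical, not measurements of any card.

```python
def output_fps(render_ms: float, gen_ms: float, generated: int) -> float:
    """Displayed fps when each rendered frame is followed by `generated` generated frames.

    Assumes serial execution on a GPU-limited system: one cycle costs
    render_ms + generated * gen_ms and yields 1 + generated displayed frames.
    """
    cycle_ms = render_ms + generated * gen_ms
    return 1000.0 * (1 + generated) / cycle_ms


baseline = output_fps(render_ms=10.0, gen_ms=0.0, generated=0)   # no frame generation
fast_gen = output_fps(render_ms=10.0, gen_ms=3.0, generated=1)   # cheap generated frame
slow_gen = output_fps(render_ms=10.0, gen_ms=12.0, generated=1)  # generation costs more than rendering

print(f"baseline {baseline:.1f} fps, fast gen {fast_gen:.1f} fps, slow gen {slow_gen:.1f} fps")
```

In this model frame generation only pays off while a generated frame is cheaper than a rendered one. On a card with much less usable tensor throughput the generation cost grows, and past the break-even point the displayed framerate drops below the baseline. The same model also shows why 2x framegen doesn't double fps in GPU limited cases: the generated frame is never free.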

Having to use FP16 tensor operations instead of FP8, like Ada, or FP4, like Blackwell, wouldn't help Turing and Ampere cards either if DLSS 4 requires significantly more operations. But I don't know; maybe they could limit it to generating only one frame, like Ada. At least the 3090/3080 should have sufficient performance. The 4060 has 242 AI TOPS (FP8 with sparsity), while the 3080 has 238 TOPS (FP16 with sparsity).

Just exactly what 'other' proprietary API are you talking about (besides consoles at the time, which were different beasts in nature), and for how long of a period do you define "early days"? A few months at best?

There were S3D (that's for the S3 ViRGE, IIRC), Rendition's V1000, ATI Rage 3D, etc., and it was far from "a few months", because Glide was first released along with Voodoo Graphics on PC, which was around 1995.

The first Direct3D was released a year later and it was very difficult to use. The first really usable Direct3D (D3D 5) was released two years later in 1997, and you have to consider the time for adoption. Many game developers still found Glide easier to use and less problematic than D3D.

OpenGL was another choice, but most of these cards did not have a good enough OpenGL implementation, so there was GlideGL and the so-called "MiniGL", but they were all incomplete and could be problematic at times.

I can see how developers would justify integrating a better RT implementation when it can be tested against a standardized API, yet how exactly would they rationalize committing to a radically exotic texturing/shading/material pipeline, especially IF they decide to fall back on the current pipeline as well?

You can ask the same question for DLSS, SER, Ray reconstruction, OMM and now this RTX hair and mega geometry stuff. Nvidia doesn’t need to get their proprietary tech into every game. They just need to snag a few big ones to maintain the perception of tech leadership and instill sufficient FOMO in the community.

Granted the neural texture pipeline appears to be pretty invasive to developer and artist workflows but with Nvidia’s current market dominance I wouldn’t be surprised if they convinced a few big studios to try it out.