
No DX12 Software is Suitable for Benchmarking *spawn*

- Thread starter trinibwoy


DavidGraham

Veteran

I wanted to draw attention to this again. Just from memory, I have seen this happen on three recent occasions: games that previously worked fine on DX11 became broken the moment they switched to DX12! Call of Duty games are based on the IW engine. In its DX11 form the engine was well balanced across all GPUs; it was last seen in Infinite Warfare (2016), Modern Warfare 1 Remastered (2016) and Modern Warfare 2 Remastered (2020).

- Assassin's Creed worked fine with DX11 (up to Odyssey in 2018), but as soon as Valhalla was released in 2020 as DX12-only, it became significantly slower on NVIDIA hardware compared to AMD hardware.

- Call of Duty games worked fine with DX11 up to Call of Duty WW2 (2017) and Call of Duty Black Ops 4 (2018), with all GPUs in their expected positions relative to each other. But as soon as the series switched to DX12-only with Call of Duty Modern Warfare (2019), it became slightly slower on NVIDIA hardware compared to AMD. Since Black Ops Cold War it has been noticeably slower on NVIDIA: a Vega 64 went toe to toe with a 1080 Ti, and a 5700 XT matched a 2080. In Vanguard, AMD gained even more ground, with the Vega 64 surpassing the 1080 Ti, the 5700 XT surpassing the 2080, and the 6900 XT leaving the 3080 Ti in the dust. Then came Modern Warfare 2, where every AMD GPU moved up a tier above its comparable NVIDIA GPU. You can almost chart the gradual downward spiral of NVIDIA GPUs in this series game by game.

- The third instance is different. The Borderlands series worked fine with DX11 in its previous two games, and was even a bit faster on NVIDIA GPUs. Borderlands 3 was also released with DX11, and it too was a bit faster on NVIDIA hardware. But in 2020 DX12 was patched in, in a beta state, and suddenly all NVIDIA GPUs became noticeably slower than their equivalent AMD GPUs, which remains the case to this day. Later, in 2022, Tiny Tina's Wonderlands was released with DX12 only, but with good performance across all GPUs: the relative positioning of all GPUs is preserved, similar to DX11. So I think this is a case of DX12 being properly optimized for all architectures in Tiny Tina's Wonderlands, in contrast to Borderlands 3.

There may be more instances, but those three are what come to mind right now, so they will have to do for now. The important question is: what the hell is happening? Why are some games still releasing with such an obviously broken DX12 implementation? And why are they still not fixed, even at a later date with more patches?

davis.anthony

Veteran

Any recent examples of DX12 being a net performance win on AMD while being a net loss on Nvidia?

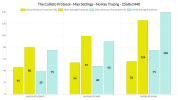

The Callisto Protocol

Again, why are NVIDIA problems "broken games" by default instead of NVIDIA issues, while it's the opposite on AMD?

What makes you so sure they're patchable by game devs, instead of NVIDIA in their drivers?

DegustatoR

Legend

Because it's up to the developers to make sure that their D3D12 implementation is on par with the IHV's D3D11 driver. And vice versa.

But tbf you need to look at each case of D3D12 being faster on AMD separately, because there's more than one issue or reason behind this, and it's not a given that an engine issue is always at play.

davis.anthony

Veteran

> Why are some games still releasing with such an obviously broken DX12 implementation? And why are they still not fixed yet at a later date with more patches?

Hint: If AMD cards are performing fine/better under DX12 then the problem isn't the games

DavidGraham

Veteran

> Again, why are NVIDIA issues "broken games" by default instead of NVIDIA issues, while it's opposite on AMD?

I just explained how the transition to DX12 in these games has been rough on NVIDIA. Before DX12, things were normal. Also, it's hard to imagine the game being broken consistently on Maxwell, Pascal, Turing, Ampere and Ada and to attribute that to NVIDIA alone. NVIDIA tries to fix this through drivers every now and then; recently they released a driver that improved DX12 overhead in several games, but it was obviously still not enough.

> Because it's up to the developers to make sure that their D3D12 implementation is on par with IHV's D3D11 driver. And vice versa.

So game devs are now responsible for IHV drivers too? IHVs still have D3D12 drivers, even though it's a lower-level API and devs have more control over things. Things can be broken in those drivers. Some things might just be slow but not actually broken. Hardware might be slower than expected at some things. There are a gazillion reasons out of game devs' hands.

> Before DX12, things were normal.

By "normal" you mean "NVIDIA had the best, optimal hardware/driver combo for older APIs"? Those are two different things, you know.

Just because NVIDIA is having a harder time with DX12 than with previous APIs doesn't mean it's the game devs' fault. NVIDIA has fixed several things in their drivers and has several more things to fix in the future. So again, why is this the game devs' fault and not an NVIDIA issue?

davis.anthony

Veteran

In The Callisto Protocol the RTX4090 gets a performance increase under DX12, while all the older Nvidia GPUs get a performance decrease.

This tells us one of two things

1. Nvidia are purposefully holding back DX12 performance on the older cards in order to sell more RTX4000 cards

2. The issue is architectural which they've fixed for the RTX4000 series indicating it was an Nvidia problem all along


> In The Callisto Protocol the RTX4090 gets a performance increase under DX12 while all the older Nvidia GPU's get a performance decrease.

It could represent a CPU limitation at play, i.e. the two slower GPUs are GPU-bound and DX12 adds GPU overhead, whereas the 4090 is CPU-bound, so it absorbs the additional GPU overhead while benefiting from the lower CPU overhead DX12 brings. It'd be interesting to see the same test on a slower Ada GPU.
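The CPU-bound vs GPU-bound argument can be illustrated with a simple model in which a pipelined frame takes as long as the slower of its CPU and GPU work. The millisecond figures below are made-up, illustrative numbers, not measurements from The Callisto Protocol or the article; the assumptions are that DX12 trims 2 ms of CPU/driver time and adds 1 ms of GPU time.

```python
def fps(cpu_ms: float, gpu_ms: float) -> float:
    """Frames per second when CPU and GPU work overlap fully,
    so the slower of the two sets the frame time."""
    return 1000.0 / max(cpu_ms, gpu_ms)

# Hypothetical per-frame costs in milliseconds (illustrative only).
CPU_DX11, CPU_DX12 = 10.0, 8.0   # assume DX12 lowers CPU/driver overhead
GPU_OVERHEAD_DX12 = 1.0          # assume DX12 adds ~1 ms of GPU time

# Hypothetical DX11 GPU time per frame for a fast and a slower card.
gpus = {"fast GPU": 6.0, "slower GPU": 14.0}

for name, gpu_dx11 in gpus.items():
    dx11 = fps(CPU_DX11, gpu_dx11)
    dx12 = fps(CPU_DX12, gpu_dx11 + GPU_OVERHEAD_DX12)
    print(f"{name}: DX11 {dx11:.1f} fps, DX12 {dx12:.1f} fps")
# fast GPU: DX11 100.0 fps, DX12 125.0 fps
# slower GPU: DX11 71.4 fps, DX12 66.7 fps
```

Under these assumptions the CPU-bound fast card gains from DX12 while the GPU-bound slower card loses, without any architectural difference between them.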

davis.anthony

Veteran

> It could represent a CPU limitation at play.

A quote from the article:

It’s also worth noting that the GTX980Ti, RTX2080Ti and RTX3080 ran the game faster in DX11. So, if you own an old NVIDIA GPU, we suggest using DX11. However, the RTX4090 runs the game significantly faster in DX12. This is the first time we’re seeing such a thing on NVIDIA’s hardware (we triple-checked our results/benchmarks and yes, DX12 is better on the RTX40 series). The RTX2080Ti was also always crashing at 4K in DX12. As for AMD’s hardware, all of its GPUs performed better in DX12.

It does point to being an architectural problem that Nvidia have now fixed with the 4000 series.

DavidGraham

Veteran

> Hint: If AMD cards are performing fine/better under DX12 then the problem isn't the games

> Just because NVIDIA is having harder time with DX12 than previous APIs doesn't mean it's game devs fault.

When it happens in certain titles, while the vast majority of others seem to work fine, then it's definitely, unquestionably the developers' fault. In my third instance I showed how the developers of Borderlands went from the broken beta mess of DX12 in Borderlands 3 to the solid, working-as-intended implementation in Tiny Tina's Wonderlands.

I don't think it gets any clearer than this.

davis.anthony

Veteran

> When it happens in certain titles, while the vast majority of others seem to work fine, then it's definitely, unquestionably the developers fault.

If it doesn't happen on AMD cards it's Nvidia's fault.

If the RTX4000 series doesn't suffer from the same DX12 regression as the older Nvidia cards, that shows it's Nvidia's fault.

Stop crying so damn hard because Nvidia aren't king shit at everything. If you want good DX12 performance, buy a 4000 series or AMD GPU.

DavidGraham

Veteran

> If the RTX4000 series doesn't suffer with the same DX12 regression as the older Nvidia cards that shows in Nvidia's fault.

RTX 4000 GPUs suffer the same problem in Borderlands 3, the latest Call of Duty games and Valhalla.

> RTX 4000 GPUs suffer the same problem in Borderlands 3, latest Call of Duty games and Valhalla.

Maybe Nvidia only partially fixed their architecture issues with the 40x0 series release. Perhaps those games will run faster under DX12 on the 50x0 series, if they fix more.

> When it happens in certain titles, while the vast majority of others seem to work fine, then it's definitely, unquestionably the developers fault.

Oh right, because all games run the exact same code. Forgot about that.

DegustatoR

Legend

> So game devs are now responsible for IHV drivers too?

No? Re-read what I've said.

> IHVs still have D3D12 drivers too, even when it's lower level API and devs have more control over things.

Sure, but IHVs have considerably less control in D3D12, and if a game isn't using the API properly on some h/w (which is also much easier to do in these explicit APIs), there usually isn't much an IHV can do in the driver to "fix" it. Thus the burden of making sure that your D3D12 (or VK) renderer runs well on all h/w on the market is on the developers, which is something of a reversal of how it was/is in D3D11, where the driver took care of many things by itself.

> Things can be broken in those drivers.

If things are broken in the drivers, then they can be easily fixed. When "things" have been "broken" for 7+ years on 4+ different h/w architectures, how high is the chance that they're in the driver, the only piece of s/w that has been updated monthly for all the h/w in question?

> Some things might just be slow but not actually broken.

This would be true for all APIs.

> Hardware might be slower than expected in some things. There's gazillion reasons out of game devs hands.

There are many reasons, but not a "gazillion", and they can most certainly be grouped into IHV and developer sides.

> In The Callisto Protocol the RTX4090 gets a performance increase under DX12 while all the older Nvidia GPU's get a performance decrease.

Or the 4090 is simply more CPU-limited, and thus more often enjoys the benefits of D3D12's lower driver overhead, while slower GeForces are more GPU-limited, so the inefficiencies of D3D12 GPU rendering show up there more prominently.

DavidGraham

Veteran

> Maybe Nvidia only partially fixed their architecture issues with the 40x0 series release.

What architectural issue are we talking about here, then? It's not like any of these NVIDIA GPUs lack any DX12 feature level features! We've also had several hints pointing towards incompetence from either the developers or, sometimes, Microsoft itself, explaining the discrepancies in such "broken" titles.

The D3D12 binding model causes some grief on Nvidia HW. Microsoft forgot to include STATIC descriptors in root signature (RS) 1.0, which was then fixed in RS 1.1, but no developers use RS 1.1, so in the end Nvidia likely has app profiles or game-specific hacks in their drivers. Mismatched descriptor types are technically undefined behaviour in D3D12, yet there are now cases in games where shaders use sampler descriptors in place of UAV descriptors and somehow it works without crashing! No one has any idea what workaround Nvidia is applying.

It's 'how' developers are 'using' it that makes it defective, since in practice that just means the D3D12 binding model isn't all that different from Mantle's binding model, which was pretty much designed to run only on AMD HW. So it becomes annoying for other HW vendors, who have to emulate this behaviour to stay consistent with their competitor's HW...

Microsoft revised the binding model with Shader Model 6.6, but I don't know if that was in response to what they saw of its potential to be misused in games...

Root-level views in D3D12 exist to cover the use cases of the binding model that would be bad on their hardware, but almost no developers use them because they don't have bounds checking, so for the most part they hate using the feature! This ties in with the last point: instead of using SetGraphicsRootConstantBufferView, some games will spam CreateConstantBufferView just before every draw, which adds even more overhead. It all starts adding up when developers abuse all these defects in D3D12's binding model.
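To make the difference between the two patterns concrete, here is a toy Python model. It is not D3D12 code: the `MockRecorder` class is hypothetical and only counts which D3D12 method names each pattern would invoke. Real costs depend on the driver; the model only shows that the per-draw descriptor pattern does extra descriptor-creation work for every single draw.

```python
from collections import Counter

class MockRecorder:
    """Hypothetical stand-in for a device plus command list; it only logs method names."""
    def __init__(self) -> None:
        self.calls = Counter()

    def call(self, name: str) -> None:
        self.calls[name] += 1

def record_with_root_cbv(rec: MockRecorder, draws: int) -> None:
    # Pattern 1: bind each draw's constant buffer directly in the root
    # signature by GPU virtual address; no descriptor has to be written.
    for _ in range(draws):
        rec.call("SetGraphicsRootConstantBufferView")
        rec.call("DrawIndexedInstanced")

def record_with_per_draw_descriptor(rec: MockRecorder, draws: int) -> None:
    # Pattern 2: write a fresh CBV descriptor into a heap before every draw,
    # then point a root descriptor table at it.
    for _ in range(draws):
        rec.call("CreateConstantBufferView")
        rec.call("SetGraphicsRootDescriptorTable")
        rec.call("DrawIndexedInstanced")

root, per_draw = MockRecorder(), MockRecorder()
record_with_root_cbv(root, draws=1000)
record_with_per_draw_descriptor(per_draw, draws=1000)
print(sum(root.calls.values()), sum(per_draw.calls.values()))  # 2000 3000
```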

Bindless on NV (unlike AMD) has idiosyncratic interactions: they can't use constant memory with bindless CBVs, so they load the CBVs from global memory, which is a performance killer (none of this matters on AMD)...

Microsoft chose a binding model that favors AMD HW, so other vendors don't really have a choice. Similarly, Microsoft standardized dumb features like ROVs, which run badly on AMD. Sometimes Microsoft takes a "no compromise" approach to these things, so hardware vendors are bound to find some features difficult to implement...

Developers could also stop relying on "undefined behavior", because that's what they're doing, so it's not unreasonable to see performance pitfalls or even crashes...

Then again, Nvidia could very well be at fault, since they keep adding hacks to their drivers instead of designing their HW to be more in line with AMD's bindless model...

Vulkan seems to be spared from such issues.

On Vulkan, the binding model isn't too bad on Nvidia HW. The Vulkan validation layers would catch the mismatched descriptor type, preventing a lot of headaches for their driver team compared to D3D12...
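As a rough illustration of the "mismatched descriptor type" problem, the toy model below (hypothetical names, not D3D12 or Vulkan code) stores typed descriptors by slot and performs the kind of check a validation layer does: a slot the shader declares as a UAV but which holds a sampler descriptor gets flagged, whereas without such a check the access is simply undefined behaviour. Note that real D3D12 keeps samplers in a separate heap type; the single list here is a simplification.

```python
from enum import Enum, auto

class DescriptorType(Enum):
    SRV = auto()
    UAV = auto()
    CBV = auto()
    SAMPLER = auto()

def validate_bindings(heap: list[DescriptorType],
                      expected: dict[int, DescriptorType]) -> list[str]:
    """Return the problems a validation layer might report.

    heap: descriptor type stored at each slot (simplified single heap).
    expected: slot -> descriptor type the shader declares for that slot.
    """
    problems = []
    for slot, want in expected.items():
        if slot >= len(heap):
            problems.append(f"slot {slot}: out of bounds")
        elif heap[slot] is not want:
            problems.append(
                f"slot {slot}: shader expects {want.name}, heap holds {heap[slot].name}")
    return problems

heap = [DescriptorType.SRV, DescriptorType.SAMPLER, DescriptorType.CBV]
shader_expects = {0: DescriptorType.SRV, 1: DescriptorType.UAV}
for msg in validate_bindings(heap, shader_expects):
    print(msg)  # slot 1: shader expects UAV, heap holds SAMPLER
```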

lol... I love it. It's either Nvidia's fault... or Nvidia's fault!

Architectures change, drivers change, APIs change, game code changes... There's nothing broken about one or the other. DX12 was only "bad" on Nvidia because their DX11 drivers were "so good"...

I remember the talk, all that time ago, that AMD was going to absolutely dominate Nvidia in DX12 because of the uplift AMD saw over their crappy DX11 drivers... "DX12 just favors AMD hardware design!" ...and now look. It's all meaningless, because by the time DX12 mattered, Nvidia already had better performance overall, regardless of AMD's performance increase relative to previous APIs.

You can't say Nvidia are holding back DX12 on older cards... that's just ridiculous. There's NO reason for them to do that. They were accused of pricing the 40 series high to force customers to buy older 30 series cards... and now they're accused of holding back 30 series performance to sell 40 series cards...

It's all ridiculous. They want the best performance for all of their GPUs. This is simply a difference in architecture, as well as CPU limitations coming into play. Not to mention, the game has been a clusterfuck of performance... and shouldn't be used to judge performance metrics of GPUs or even APIs...


DavidGraham

Veteran

> as well as CPU limitations coming into play. Not to mention, the game has been a clusterfuck of performance... and shouldn't be used to judge performance metrics of GPUs or even APIs...

Yep, The Callisto Protocol is CPU-limited in its current form, even on AMD GPUs.
