Bah, I was actually looking for versions in the article; I should have thought he may have linked by word, doh.

Latest ones. The i7 7700K is downclocked to 1800X frequency though, @4GHz.

http://www.techspot.com/article/1374-amd-ryzen-with-amd-gpu/

Thanks

Looking at the results of this test it seems like the first sentence of the conclusion is contradicting what is shown on the charts, here are some examples:

Thanks guys for eloquently pointing out what should be obvious from the start. Careful though, paranoia runs rampant here; some will have irrational ideas, and accusations might start flying quickly. Better duck for cover.

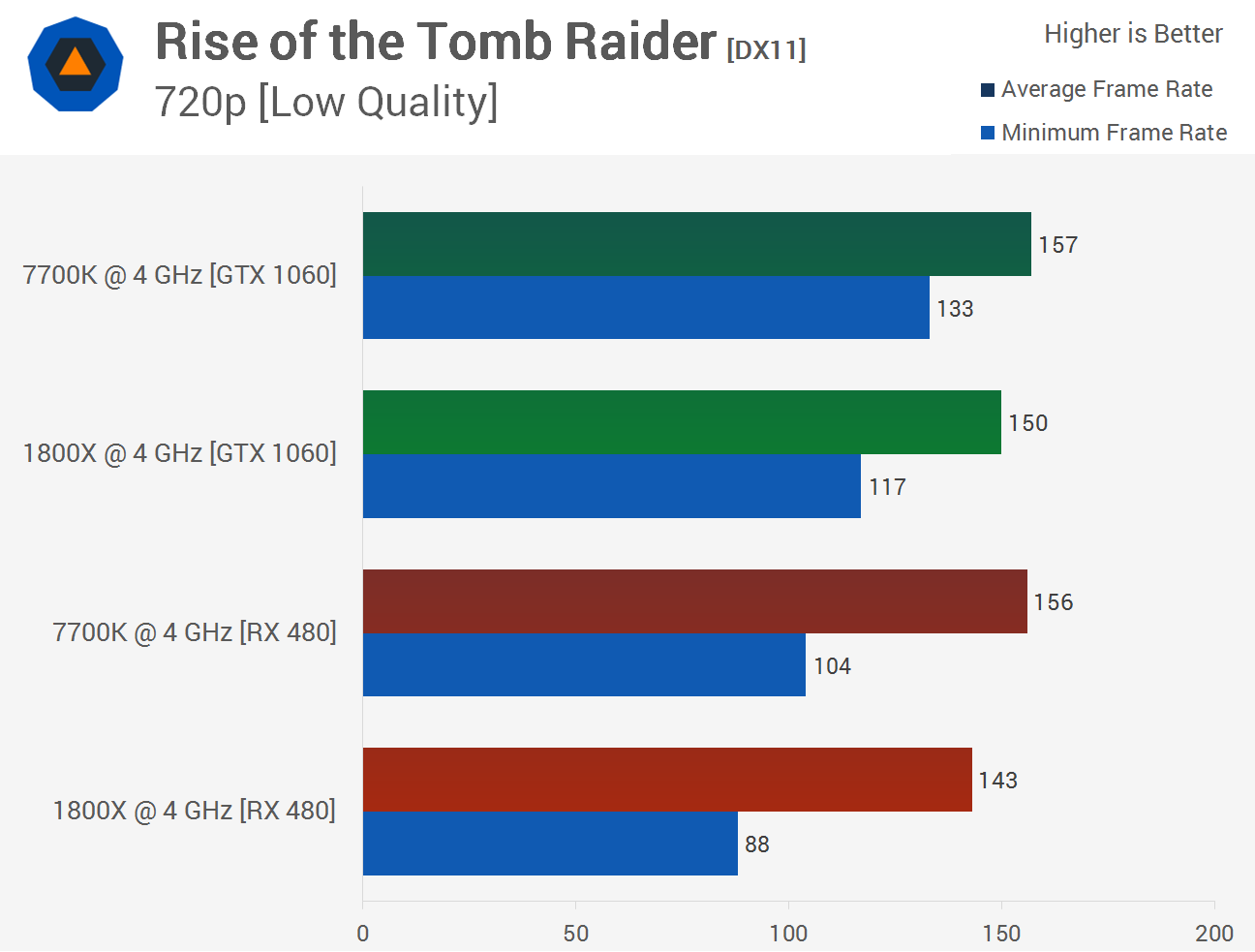

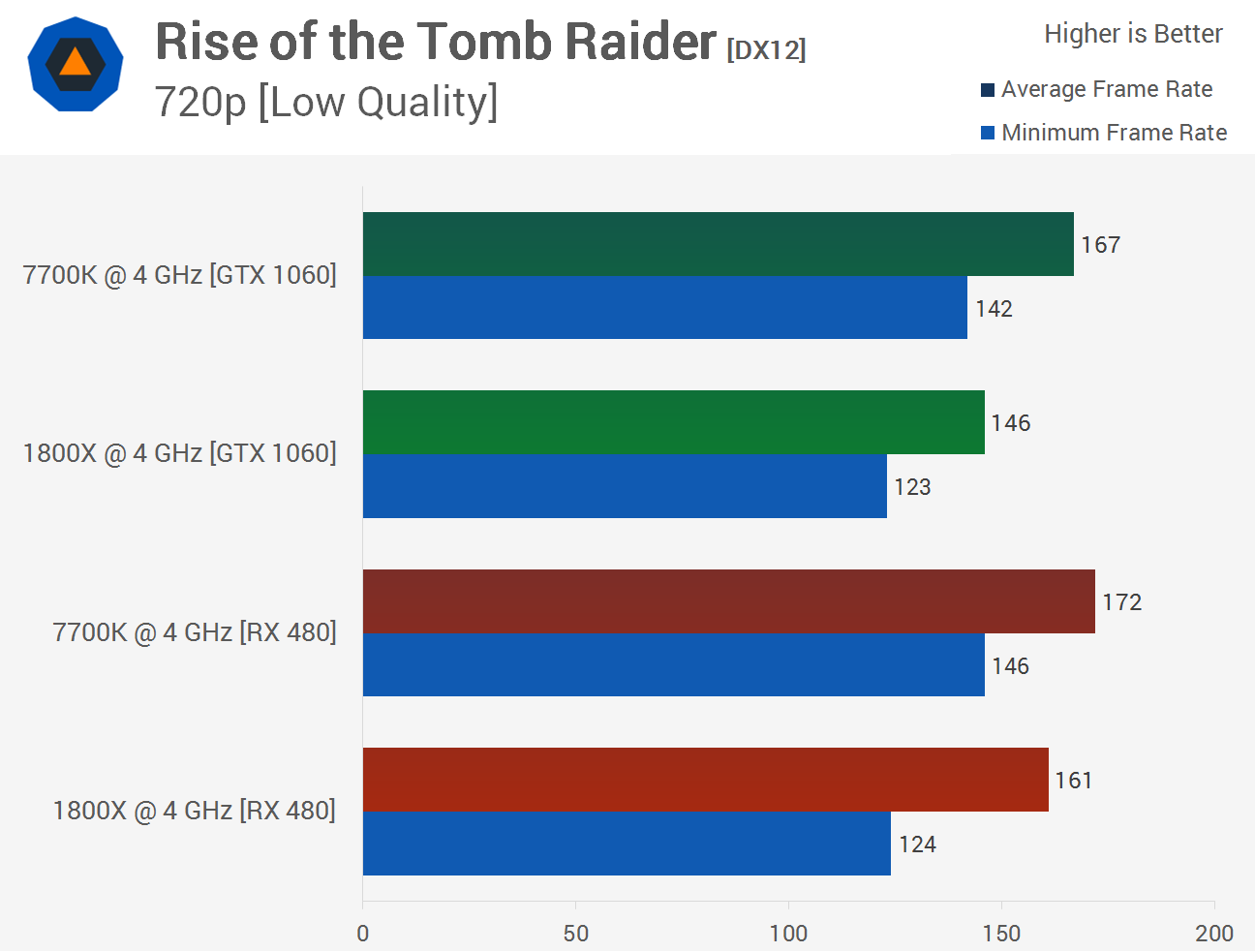

On a more serious note, TechSpot revisited their piece on this; they now have several games tested @720p and low details. In general, in DX11 Ryzen performs worse with AMD's RX 480, while in DX12 Ryzen performs worse with NV's GTX 1060.

http://www.techspot.com/article/1374-amd-ryzen-with-amd-gpu/

So, are Nvidia GPUs limiting Ryzen's gaming performance? Well, we didn't find any evidence of that.

Not wrong there.

Exactly. And these are the "easy" bottlenecks. There's also a whole bunch of harder-to-analyze geometry bottlenecks. When you decrease the rendering resolution, the geometry density (per pixel) increases. Comparing high-end GPU performance at 720p is going to result in surprises. Extremely low resolutions reveal bottlenecks that are not visible at 1080p or 4K. Some of the low-resolution bottlenecks are the same ones that high tessellation levels cause (but not all of them).
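To put rough numbers on the geometry density point, here is a minimal C++ sketch. The triangle count is a made-up figure and `triangles_per_pixel` is a hypothetical helper, not something taken from any engine or from the article.

```cpp
#include <cassert>

// Hypothetical number of visible triangles in a frame, chosen only
// for illustration (not measured from any game).
constexpr double kAssumedVisibleTriangles = 2000000.0;

// Average number of triangles per rendered pixel at a given resolution.
// Ignores culling, LOD and overdraw, so it is only a crude proxy for
// the per-pixel geometry density discussed above.
double triangles_per_pixel(double visible_triangles, int width, int height) {
    assert(width > 0 && height > 0);
    return visible_triangles / (static_cast<double>(width) * height);
}
```

With the assumed 2 million triangles this works out to roughly 2.2 triangles per pixel at 1280x720, about 0.96 at 1920x1080 and about 0.24 at 3840x2160. The scene is the same; only the pixel count changes.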

Looking at the results of this test it seems like the first sentence of the conclusion is contradicting what is shown on the charts, here are some examples:

As you can see above, all DX12 games show that Ryzen performs much closer to the 7700K when paired with the RX 480.

To better illustrate my point, here is a graph with the average excluding AoTS:E, as it has negative scaling (Ryzen is faster):

As you can see from the average, the 7700K is 20.73% faster than Ryzen when paired with the GTX 1060 in DX12 titles, but only 5.89% faster than Ryzen when paired with the RX 480.
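For anyone who wants to redo this kind of average from the charts, a minimal C++ sketch follows. It assumes the figure is the mean of the per-game percentage leads; the post does not say exactly how the average was computed, and both function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Percentage by which frame rate fps_a leads frame rate fps_b.
double advantage_percent(double fps_a, double fps_b) {
    assert(fps_b > 0.0);
    return (fps_a / fps_b - 1.0) * 100.0;
}

// Mean of the per-game leads. Assumption: every game is weighted
// equally; a ratio of summed frame rates would give a different number.
double mean_advantage_percent(const std::vector<double>& fps_a,
                              const std::vector<double>& fps_b) {
    assert(!fps_a.empty() && fps_a.size() == fps_b.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < fps_a.size(); ++i) {
        sum += advantage_percent(fps_a[i], fps_b[i]);
    }
    return sum / static_cast<double>(fps_a.size());
}
```

You would call `mean_advantage_percent` once with the 7700K and Ryzen results on the GTX 1060 and once with the results on the RX 480, then compare the two leads. The frame rates themselves have to be read off the article's charts.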

So looking at the TechSpot article's title (Does Ryzen perform better with AMD GPUs?) we can answer like this:

Ryzen does compare better to the 7700K in DX12 titles when paired with an AMD GPU.

But instead the author writes in the first paragraph of the conclusion:

"So, are Nvidia GPUs limiting Ryzen's gaming performance? Well, we didn't find any evidence of that."

He did not find any evidence? This clearly contradicts the presented data. There is some evidence showing that there are limitations when we pair Ryzen with an Nvidia GPU. It's not decisive, but it's there.

I am not saying that we should sharpen our pitchforks or start putting our tinfoil hats on. I am saying that reviewers should clearly rethink their testing procedures when reviewing Zen-based cores in the future.

Why stop the conclusion at that though? We can also add this for fairness' sake:

So looking at the TechSpot article's title (Does Ryzen perform better with AMD GPUs?) we can answer like this:

Ryzen does compare better to the 7700K in DX12 titles when paired with an AMD GPU.

I would be interested in seeing GCN2 vs GCN4 CPU utilization benchmarks. AMD has improved their hardware schedulers and command processors in GCN3/GCN4. Most likely they can offload some work to the hardware schedulers. It could simply be that the main render thread load is lower with an AMD GPU. This would help an 8-core CPU more than a 4-core CPU. Of course, before we can draw conclusions like this, we need more benchmarks. Preferably also Intel 8-core vs Intel 4-core, to rule out Ryzen architecture specific differences.

Just looking at frame times (or fps counters) isn't going to bring enough information. There are tools available that capture and show timelines of CPU activity, including which threads of which application are running on which core. This gives much better insight into multithreaded scalability issues. It is easy to see how well the code is using all the available cores and whether there's a single-thread bottleneck or not.
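To illustrate why a lighter main render thread could help an 8-core CPU more than a 4-core one, here is a toy model in C++. It assumes the frame time is a serial render-thread part plus a perfectly parallel part split across cores, with identical per-core speed. That is a big simplification, and the function names and millisecond figures are made up for illustration.

```cpp
#include <cassert>

// Toy frame-time model: serial_ms runs on the main render thread,
// parallel_ms is divided evenly over all cores. Assumes identical
// per-core speed and perfect scaling, which real games do not have.
double frame_time_ms(double serial_ms, double parallel_ms, int cores) {
    assert(cores > 0);
    return serial_ms + parallel_ms / cores;
}

// Relative speedup obtained when the render-thread cost drops from
// serial_before_ms to serial_after_ms (e.g. work offloaded to the GPU).
double speedup_from_lighter_render_thread(double serial_before_ms,
                                          double serial_after_ms,
                                          double parallel_ms,
                                          int cores) {
    return frame_time_ms(serial_before_ms, parallel_ms, cores) /
           frame_time_ms(serial_after_ms, parallel_ms, cores);
}
```

With hypothetical numbers of 6 ms serial work dropping to 4 ms and 16 ms of parallel work, the model gives a 1.25x speedup on 4 cores (10 ms down to 8 ms) and about 1.33x on 8 cores (8 ms down to 6 ms). Whether real games behave like this is exactly what the CPU timeline captures would have to show.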

std::thread::hardware_concurrency() in C++, or GetSystemInfo(...) on Windows, for example.

How exactly do applications and games detect a CPU's thread capability? And can we even be sure that application x runs up to 16 threads on both the Intel i7-6900K and Ryzen R7 CPUs? I would check this out personally but I only have an 8-thread CPU. I do know Ashes does run 16 threads on a 16-thread CPU, and 8 threads on an 8-thread CPU, but for example Witcher 3 runs 16 threads no matter what CPU you use (most of the threads are very light).
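A minimal sketch of the `std::thread::hardware_concurrency()` route mentioned above. The two helper functions are hypothetical and only show how an application might size its worker pool; this is not how Ashes or Witcher 3 actually do it.

```cpp
#include <cassert>
#include <thread>

// Number of logical CPUs reported by the standard library.
// hardware_concurrency() is only a hint and may return 0 when the
// value cannot be determined, so fall back to 1 in that case.
unsigned detect_logical_cpus() {
    const unsigned n = std::thread::hardware_concurrency();
    return n == 0 ? 1u : n;
}

// Hypothetical policy: use a hard-coded worker count when one is given
// (a game that always spawns 16 threads), otherwise match the CPU.
unsigned worker_thread_count(unsigned fixed_count = 0) {
    const unsigned count = fixed_count != 0 ? fixed_count : detect_logical_cpus();
    assert(count >= 1);
    return count;
}
```

Calling `worker_thread_count()` scales the thread count with the CPU, while `worker_thread_count(16)` gives the same count on every machine. Those are the two behaviours described in the question.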

Would be interested to see this from RotR DX12 especially.

That's why I stated that Ryzen indeed worked better with AMD GPUs in DX12; however, it worked worse with them in DX11 in several titles. Mind you, this happened with a downclocked 7700K, @720p and low details (see sebbbi's posts above about different bottlenecks @720p). A better comparison should be made with high-end GPUs and Ultra details, preferably with an 8-core Intel CPU as well.

He did not find any evidence? This clearly contradicts the presented data. There is some evidence showing that there are limitations when we pair Ryzen with an Nvidia GPU. It's not decisive, but it's there.

One thing that really surprises me is how users manage to find best-case scenarios for Ryzen that AMD themselves haven't shown. That Tomb Raider bench at 720p would have been a boon for a performance preview prior to launch (I'm talking from the marketing point of view), and some cases would have shown Ryzen in a better light in the initial reviews. AMD really needs to step up the way they launch products and show best-case scenarios to boost sales; they need to find money and that is an easy way to do it (and let's not talk about the review motherboards not running RAM past 2133...).

The problem with that is that the contraposition of the argument has to hold true, and if it doesn't, then it's all wrong.

And guess what: if the contraposition held true, nV's cards would hurt AMD's Ryzen in all APIs! Nope, they don't do that, nor do they do it consistently in DX12. So start making other theories up; we already see BIOS updates giving fewer problems. It could be a platform issue that needs BIOS and microcode fixes....

There are a ton of things to look at. It's not as simple as running the benchmarks and pointing to nV drivers, because we see so many different things affecting performance on the Ryzen platforms. Not only that, we need to see how different manufacturers' platforms differ from each other too at this point.

Now does it look like this is something that is going to be easy to do?

You are correct when we look at DX11: there is a ~2% difference in favour of the Nv GPU, still a far cry from what we can observe in DX12. We do not have data for DX9, OGL and Vulkan, therefore your argument in this part is not valid.

Why stop the conclusion at that though? We can also add this for fairness' sake:

Ryzen does compare better to the 7700K in DX9, DX11, OpenGL and Vulkan titles when paired with an NVidia GPU.

The only definitive conclusion here just reinforces that the Ryzen 1800X is slower than the 7700K in gaming. You can swap Ryzen for any Intel CPU comparably slower than the 7700K @4GHz and the conclusion will still hold true. What's new here? Anybody who has been following the pattern doesn't need to spend hours on analysis, YouTube videos or a bunch of graphs to come up with that.

First of all, I am not making up any theories; I am merely analysing (limited) data from the article.

Secondly, if we consider all APIs, the contraposition is true: on average, in all tested games and APIs, Ryzen does look better vs the 7700K when paired with an AMD GPU. Obviously the difference is marginal and only DX12 shows big differences, but the point still stands. The author admits that there is something going on by saying:

"We'll be sure to throw an AMD GPU into the mix for games such as Deus Ex: Mankind Divided or Total War: Warhammer, though these games are being dropped until we have a more powerful single-GPU graphics card from AMD"

You can also point to DX11 results and claim my assertion is wrong, but I can do the same and point to DX12 results, hence the "all games and APIs" average in the 3rd graph. And please do not try to move the goal posts by throwing in platforms, BIOSes and other issues. I am talking about data from the article and the author's conclusion.

I fully agree with your third paragraph, but I thought I made it clear in my first post (remember the pitchfork/tin foil hat reference?).

And lastly, did I mention somewhere that verification of this finding is going to be easy?

Can someone fix my posts? I was not aware of reply timers and messed them up a bit.
