My laptop ran a lot more smoothly when I tripled the amount of memory in it. If you've got more memory, you don't have to access slower media as often, and you can keep things around longer. It indirectly affects your bandwidth usage, depending on what you're doing.

A larger memory pool does help avert paging to slower media, although you may have experienced other issues as well.

Did adding RAM (extra modules) increase the bandwidth (single-channel to dual-channel)?

Did adding RAM replace poor-quality memory with modules that have better CAS latency or a higher frequency (bandwidth)?

Did adding RAM address a paging issue (e.g. your OS had a large page file cache on the HDD and the extra RAM resulted in more resources stored in RAM)?

Without knowing more about your system it is hard to know what was at issue.
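To put a rough number on the single- vs. dual-channel question, here is a minimal sketch of the theoretical peak bandwidth calculation. The function name and the DDR3-1600 figure are just illustrative assumptions (I don't know what is in your laptop), and real-world throughput will be lower than the peak.

```python
def peak_bandwidth_gb_s(mega_transfers_per_sec, channels, bus_width_bits=64):
    """Theoretical peak bandwidth in GB/s for a DDR-style memory setup.

    Assumes the usual 64-bit (8-byte) data bus per channel.
    """
    bytes_per_transfer = bus_width_bits / 8
    return mega_transfers_per_sec * 1e6 * bytes_per_transfer * channels / 1e9


# Hypothetical example: DDR3-1600 modules (1600 MT/s), one vs. two channels.
single_channel = peak_bandwidth_gb_s(1600, channels=1)
dual_channel = peak_bandwidth_gb_s(1600, channels=2)
print(f"single: {single_channel:.1f} GB/s, dual: {dual_channel:.1f} GB/s")
```

So if the upgrade moved you from one populated channel to two, the peak bandwidth doubled regardless of how much capacity was added.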

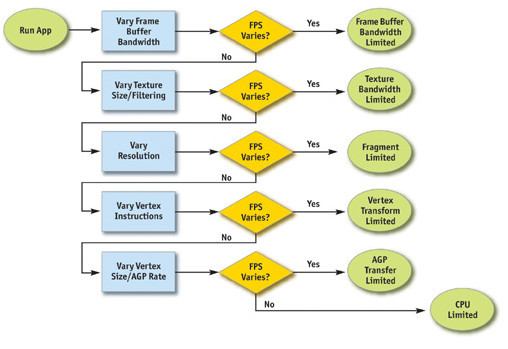

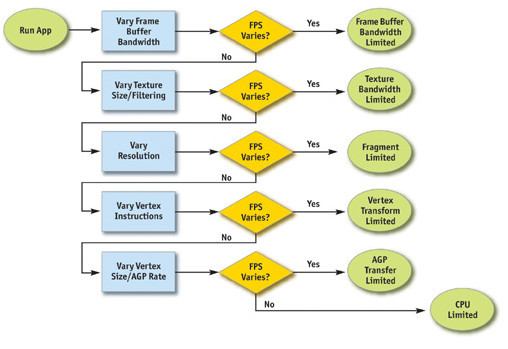

More generally, regarding the PS4 issues and memory that others were talking about: just adding memory (but not bandwidth) isn't going to help performance outside of issues related to memory pressure. Yes, if you are HDD limited, performance will go down, but at 8GB of RAM that should be a rare corner case. The extra memory the PS4 developers have received should help games look better (if there is the computational performance to process the nicer textures), reduce load times, and allow larger, more varied worlds (again, if the hardware can process them), but it won't make the GPU faster. Someone posted an old flow chart, but the idea pretty much stands:

* If the bottleneck is the GPU (shading/texturing/fill/vertex) then adding more memory won't help at all.

* If the bottleneck is memory (VRAM) bandwidth then adding more memory won't help.

* If the bottleneck is memory footprint then adding more memory will help.
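The three cases above boil down to a simple lookup. This is only a sketch of the flow chart's logic; the function name and the category labels are my own, not from the chart.

```python
# Hypothetical labels for the three bottleneck cases listed above.
MORE_MEMORY_HELPS = {
    "gpu": False,        # shading/texturing/fill/vertex bound
    "bandwidth": False,  # memory (VRAM) bandwidth bound
    "footprint": True,   # working set does not fit in memory
}


def more_memory_helps(bottleneck):
    """Return True if adding memory capacity addresses the given bottleneck."""
    try:
        return MORE_MEMORY_HELPS[bottleneck]
    except KeyError:
        raise ValueError(f"unknown bottleneck: {bottleneck!r}") from None


for case in ("gpu", "bandwidth", "footprint"):
    print(case, "->", "helps" if more_memory_helps(case) else "no help")
```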

There are situations where more RAM can improve performance, but there are a *lot* of GPUs with extra memory and no additional performance. This was an especially common tactic in mid-to-low-range discrete GPUs, where adding more memory was often a great selling point that didn't cost a lot but could increase perceived value and thus the price point.

Assuming a 1GB vs. 2GB scenario, there are situations where the 1GB board needs to pull data from system memory, which slows it down. But typically the 2GB board would underwhelm in those situations as well (e.g. the extra memory can store more textures, but if you don't have the texturing performance to enable HQ textures it doesn't help much). Outside of that scenario, the 1GB and 2GB boards perform the same at the same quality levels, so the extra memory helps in a corner case that usually isn't a relevant one.
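Here is a toy model of that 1GB vs. 2GB comparison. Everything in it is an assumption for illustration (the function, the parameter names, and all the numbers are made up, not measurements): frame time is taken as the slower of GPU work and memory traffic, and whatever doesn't fit in VRAM is fetched over a much slower link to system memory.

```python
def frame_time_ms(working_set_gb, vram_gb, gpu_ms,
                  traffic_gb, vram_gb_s, spill_gb_s):
    """Toy frame-time estimate; all inputs are illustrative assumptions.

    working_set_gb: data the frame needs resident
    vram_gb:        board memory capacity
    gpu_ms:         pure GPU compute time for the frame
    traffic_gb:     data actually read per frame
    vram_gb_s:      local VRAM bandwidth
    spill_gb_s:     bandwidth to system memory for spilled data
    """
    resident_gb = min(working_set_gb, vram_gb)
    spilled_fraction = 1 - resident_gb / working_set_gb
    local_ms = traffic_gb * (1 - spilled_fraction) / vram_gb_s * 1000
    spill_ms = traffic_gb * spilled_fraction / spill_gb_s * 1000
    return max(gpu_ms, local_ms + spill_ms)


# Made-up board: 16 ms of GPU work, 100 GB/s VRAM, 8 GB/s to system memory.
board = dict(gpu_ms=16, traffic_gb=1.0, vram_gb_s=100, spill_gb_s=8)

fits_1gb = frame_time_ms(working_set_gb=0.8, vram_gb=1, **board)
fits_2gb = frame_time_ms(working_set_gb=0.8, vram_gb=2, **board)
spills_1gb = frame_time_ms(working_set_gb=1.5, vram_gb=1, **board)
spills_2gb = frame_time_ms(working_set_gb=1.5, vram_gb=2, **board)
print(fits_1gb, fits_2gb, spills_1gb, spills_2gb)
```

When the working set fits on both boards the two estimates are identical; the 1GB board only falls behind once data spills. Note the model holds GPU time fixed, so it says nothing about whether the 2GB board has the texturing performance to use the larger assets in the first place.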

This is less true on a console that has a UMA. Assuming the same bandwidth, 2GB, 4GB, or 8GB should all perform the same if the assets are tailored to the lowest common denominator (2GB), as long as the demo/game isn't caching from the HDD or optical drive, or doing funky decompression or the like, to work around the memory limit.