I'd need to find it again, but I think it varied from 10% to 25% depending on the game. Of course, my memory could be very faulty indeed.

Either way, it's not negligible, so it's not a straightforward case of "We should remove this", even if that did make sense in practice.

In the paper, the performance data is collected by simulating Larrabee cores, so the ~10% cost of Pixel Setup on those graphs reflects whatever ALUs they are planning for Larrabee.

Maybe there is a transcendental ALU and maybe there's an attribute interpolator too, but there's no mention of either of these things so far as I can tell.

Presumably they've got an instruction or two to make it a bit cheaper, but not a dedicated instruction per se?

I can imagine a look-up table instruction to seed the transcendental macros with the data they need to start:

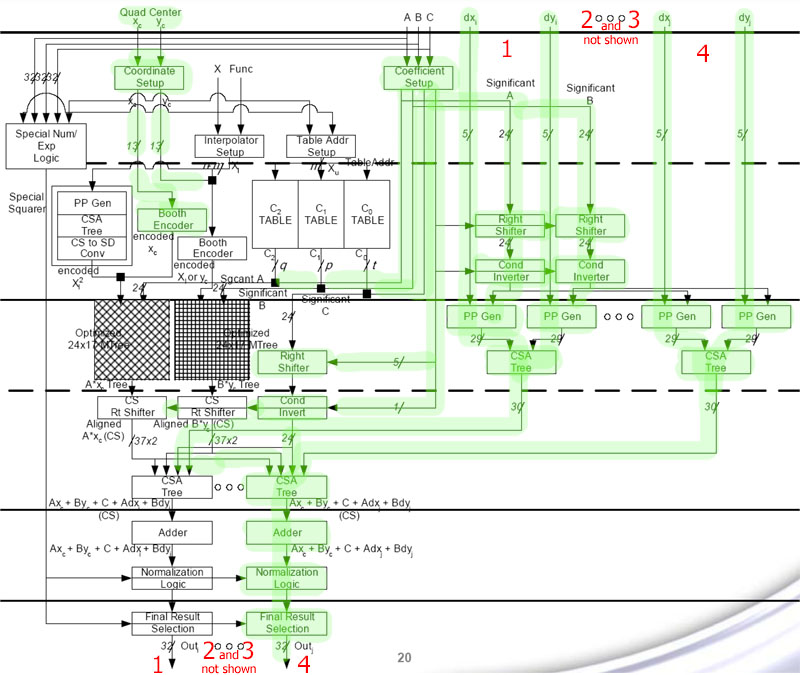

http://developer.intel.com/technology/itj/q41999/pdf/transendental.pdf

There, double-precision vec2 MAD ALUs calculate DP transcendental functions. A vec2-DP ALU can do any transcendental in 52-72 cycles, which is a throughput of 1/104 to 1/144. So in Larrabee a SIMD-16 would have a throughput of 1/26 to 1/36, again for double precision. I dunno how much faster single precision would be. Twice as fast? That would be 1/13 to 1/18.
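The Larrabee estimate is a chain of scalings, so it may help to spell it out. This is a minimal sketch that only reproduces the arithmetic. Two factors are assumptions read back from the numbers rather than anything the paper states: the vec2 figure looks like 1/(2 × cycles), and the step from vec2 to SIMD-16 looks like a factor of 4, which would fit a SIMD-16 that runs double precision as 8 lanes. The final doubling for single precision is just the guess above.

```python
from fractions import Fraction

# Cycle range for one DP transcendental macro on a vec2 DP MAD ALU (from the post).
CYCLES = (52, 72)

# ASSUMPTION: the quoted vec2 throughput is one result per (2 lanes x cycles).
VEC2_LANES = 2
# ASSUMPTION: Larrabee's SIMD-16 behaves like 8 DP lanes, i.e. 4x the vec2 ALU.
SIMD16_DP_SCALE = 4
# Guess from the post: single precision is roughly twice as fast.
SP_SCALE = 2


def vec2_dp(cycles: int) -> Fraction:
    """Throughput of one transcendental macro on the vec2 DP ALU."""
    return Fraction(1, VEC2_LANES * cycles)


vec2 = [vec2_dp(c) for c in CYCLES]
simd16_dp = [t * SIMD16_DP_SCALE for t in vec2]
simd16_sp = [t * SP_SCALE for t in simd16_dp]

for label, rates in (("vec2 DP", vec2), ("SIMD-16 DP", simd16_dp), ("SIMD-16 SP", simd16_sp)):
    # Prints e.g. "vec2 DP 1/104 to 1/144"
    print(label, " to ".join(str(r) for r in rates))
```

Using `Fraction` keeps the results in the same 1/N form as the figures quoted above.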

GT200 currently has 1/4 to 1/8 throughput (maybe there are some things that are 1/16). Halving those again (as you propose) produces 1/8 to 1/16 - very much like the rate for Intel's macros on IA-64, translated onto Larrabee.

Though of course NVidia's pair of SIMDs (MAD-8 and MI-4; an MI-8 is capable of 2 transcendentals or 8 interpolations per clock, so an MI-4 is half that) is more like half the width of Intel's SIMD-16.
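The MI rates can be expressed as a transcendental rate relative to the MAD-8 beside it, which appears to be where GT200's 1/4 comes from, and which shows what halving the MI does to it. A minimal sketch; it assumes the best case of one pass per transcendental, so slower functions would land lower (the 1/8 end of GT200's range).

```python
from fractions import Fraction

MAD_LANES = 8  # MAD-8: 8 MADs per clock per multiprocessor

# Per-clock rates of the MI unit, as given in the post.
MI8 = {"transcendentals": 2, "interpolations": 8}
# The proposed MI-4 is simply half of MI-8.
MI4 = {op: rate // 2 for op, rate in MI8.items()}


def transcendental_ratio(mi: dict) -> Fraction:
    """Transcendentals per clock relative to MADs per clock, best case (one pass)."""
    return Fraction(mi["transcendentals"], MAD_LANES)


print("MI-8:", transcendental_ratio(MI8))  # MI-8: 1/4
print("MI-4:", transcendental_ratio(MI4))  # MI-4: 1/8
```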

But for overall throughput, wouldn't you rather have one SIMD-16 than two MAD-8s and two MI-4s? Sure, transcendentals are faster in the latter, but the SIMD-16 is smaller once you take into account the control overhead of the operand collectors and of 4 SIMDs in NVidia's architecture, versus one in Larrabee.

But that's a much smaller die-size penalty than trying to hide register bandwidth limitations in real-world scenarios. Also, some of that complexity must surely be there anyway for shared memory, as required by CUDA.

Yes to both those points. But there's still dedicated scoreboarding for MI operands - they still have to be scheduled and tracked.

You mean the register file bandwidth? (The others clearly aren't really affected by it.)

Operand and resultant bandwidth has to be managed regardless of the ALU lanes involved.

I'm not sure I agree completely; how many interpolation operations nowadays directly feed the registers used for texture fetches? Interpolation has already moved past that goal and is still useful.

Yes, but D3D10 only allows 16 attributes per vertex, while D3D10.1 allows 32. So, per fragment, the worst-case interpolation is currently 32 32-bit values.

A single G92 cluster can interpolate 16 attributes per clock (2 multiprocessors, each doing 8 per clock). The required rate is set by the rasteriser/fillrate, not by ALU throughput. G92's rasterisation rate of 16 fragments per core clock is supported by an interpolation rate of 128 attributes per ALU clock (8 clusters × 16), so in 9800GTX at 675/1688 MHz (core/ALU), that's a 1:20 ratio, rasterisation:interpolation.

GT200 rasterises at 32 fragments per core clock and interpolates at 240 attributes per ALU clock, so GTX 280 at 602/1296 MHz has a 1:16 ratio.
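Both ratios come from multiplying each side by its own clock domain. A minimal sketch; the cluster and multiprocessor counts in the comments are general background on the two chips rather than something stated above, though they multiply out to the 128 and 240 quoted.

```python
def raster_to_interp_ratio(frags_per_core_clk, core_mhz, interps_per_alu_clk, alu_mhz):
    """Interpolations per fragment: (interp rate x ALU clock) / (raster rate x core clock)."""
    raster = frags_per_core_clk * core_mhz      # Mfragments/s
    interp = interps_per_alu_clk * alu_mhz      # Minterpolations/s
    return interp / raster


# G92 in 9800GTX: 8 clusters x 2 multiprocessors x 8 interpolations = 128 per ALU clock
g92 = raster_to_interp_ratio(16, 675, 8 * 2 * 8, 1688)
# GT200 in GTX 280: 10 clusters x 3 multiprocessors x 8 = 240 per ALU clock
gt200 = raster_to_interp_ratio(32, 602, 10 * 3 * 8, 1296)

print(f"9800GTX 1:{g92:.0f}")   # 9800GTX 1:20
print(f"GTX 280 1:{gt200:.0f}")  # GTX 280 1:16
```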

A speculative, small GT300 with 384 interpolations per clock (384 MAD lanes), say 4:1 ALU:TEX, and say 600/1300 MHz clocks would be at 1:26. Why would it need that much?

ATI gets by with 32 interpolators, or 1:2.
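Run backwards, the 1:26 figure also shows what rasterisation rate the speculative GT300 implicitly assumes, and ATI's 1:2 works the same way. A minimal sketch: the 32 fragments per clock for GT300 is derived from the numbers above, while the 16 fragments per clock for ATI, with interpolators and rasteriser on the same clock, is an assumption chosen because it reproduces 1:2.

```python
# Speculative GT300 figures from the post.
GT300_INTERP_PER_ALU_CLK = 384
GT300_CORE_MHZ, GT300_ALU_MHZ = 600, 1300
GT300_RATIO = 26

# Fragments per core clock that the quoted 1:26 implies.
gt300_frags_per_clk = (GT300_INTERP_PER_ALU_CLK * GT300_ALU_MHZ) / (GT300_RATIO * GT300_CORE_MHZ)
print("GT300 implied rasterisation:", gt300_frags_per_clk, "fragments/clock")  # 32.0

# ATI: 32 interpolators (from the post).
# ASSUMPTION: 16 fragments per clock, same clock domain as the interpolators.
ATI_INTERPOLATORS = 32
ATI_FRAGS_PER_CLK = 16
ati_ratio = ATI_INTERPOLATORS / ATI_FRAGS_PER_CLK
print(f"ATI 1:{ati_ratio:.0f}")  # ATI 1:2
```

In other words, the speculative GT300 keeps GT200's rasterisation rate while its interpolation rate grows with the MAD lane count, which is why the ratio balloons.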

So, yes, my conservative GT300 has way, way too much interpolation rate, so halving the MIs or dropping them entirely really does sound like a good idea.

If deleting MI allows GT300 to go from 4 to 5 multiprocessors per cluster, that would be a bonus, eh?

As you say, the real question is how useful it is, or more precisely, how much does its usefulness *vary*? If it's always not-very-useful, you can just make it less powerful but cheaper. If it's sometimes very useful and sometimes completely useless and wasted, then it starts making some sense to unify that functionality into another block. However, being able to extract a little bit of the MUL clearly already helps with that problem (but only if adding the MUL on its own makes sense; otherwise it's less of a win).

Clearly the MUL was a complete waste of time until GT200 arrived, and the compilation and instruction-dependency scoreboarding issues it generates are hardly a decent payback for the shitty utility.

The reality is that interpolation is bloody cheap the way NVidia's done it, by tacking it on to the side of the transcendental unit. Going forwards, though, it looks like a case of the tail wagging the dog.

So the intriguing question is, does interpolation explode in your face if the MI is dropped? Seeing the stratospheric interpolation rates of even G92, I don't see how NVidia can justify keeping it in future.

(I'm actually wondering if I've radically misunderstood interpolation rates in NVidia's architecture because the numbers are so silly.)

Jawed