> There is no mention of "programmable traversal" anywhere in the links provided. AFAIK all new DX features of 20H1 will be supported on Turing GPUs.

Yeah, I confused 1.1 with the potential future features, sorry. (https://microsoft.github.io/DirectX-Specs/d3d/Raytracing.html#potential-future-features)

Direct3D feature levels discussion

- Thread starter DmitryKo

- Start date

Alessio1989

Regular

I want multiple graphics queue on the same device node.

Ext3h

Regular

> I want multiple graphics queue on the same device node.

Care to explain what for exactly? I mean, short of the comfort of synchronization by fences, there ain't a difference to be expected just by exposing multiple host-side queues per logical engine.

And if you need synchronization between more than one queue, you should still be able to just serialize the schedule in your own code based on CPU side completion events for now, at no significant penalty. The driver couldn't do significantly better either, yet.

At least not until the GPU did expose a second engine for the GCP, which was IIRC already attempted by one vendor but dropped again as it wasn't trivial to utilize.
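A minimal sketch of that CPU-side serialization, using toy stand-ins rather than real D3D12 objects: `ToyQueue` and `run_serialized` are hypothetical names, and a `threading.Event` plays the role of a fence completion event. The host submits one job, blocks until that job signals completion, and only then submits the next one, possibly to a different queue.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class ToyQueue:
    """Hypothetical stand-in for one host-side queue; runs jobs in submission order."""

    def __init__(self, name, log):
        self.name = name
        self.log = log
        self._worker = ThreadPoolExecutor(max_workers=1)

    def submit(self, job_name):
        """Start a job and return an event that is set when the job completes."""
        done = threading.Event()

        def run():
            self.log.append((self.name, job_name))  # the "GPU work"
            done.set()                              # CPU-visible completion signal

        self._worker.submit(run)
        return done

    def close(self):
        self._worker.shutdown(wait=True)


def run_serialized(schedule):
    """Execute (queue_name, job_name) pairs strictly in order across all queues."""
    log = []
    queues = {}
    for queue_name, job_name in schedule:
        if queue_name not in queues:
            queues[queue_name] = ToyQueue(queue_name, log)
        # Wait on the CPU before touching any other queue.
        queues[queue_name].submit(job_name).wait()
    for queue in queues.values():
        queue.close()
    return log


if __name__ == "__main__":
    print(run_serialized([("gfx0", "shadow"), ("gfx1", "ui"), ("gfx0", "main")]))
```

The execution order always matches the submission order, which is the point: with a single engine behind the queues, nothing is lost by ordering the work on the host.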


DegustatoR

Legend

https://devblogs.microsoft.com/directx/dxr-1-1/

+

https://devblogs.microsoft.com/dire...edback-some-useful-once-hidden-data-unlocked/

None of these features specifically require new hardware. Existing DXR Tier 1.0 capable devices can support Tier 1.1 if the GPU vendor implements driver support.

Reach out to GPU vendors for their timelines for hardware and drivers.

DegustatoR

Legend

Alessio1989

Regular

Deleted member 13524

Guest

DX12 updates detailed!

https://devblogs.microsoft.com/directx/dev-preview-of-new-directx-12-features/

Mesh Shaders, Inline Raytracing...

Now I wonder which GPU architectures can run inline RT and Mesh Shaders

My layman's understanding of this is that the new features will allow for lower precision in raytracing calculations, which AFAIK is the biggest criticism devs make about nvidia's Turing implementations.

This might result in two things:

- Lower end GPUs with lower compute throughput and bandwidth will be able to perform real time raytracing.

- If the Turing GPUs don't support these optimizations then their raytracing performance will be significantly lower than Ampere and RDNA2 (assuming both of these support the latest optimizations).

> My layman's understanding of this is that the new features will allow for lower precision in raytracing calculations, which AFAIK is the biggest criticism devs make about nvidia's Turing implementations.
>
> This might result in two things:
>
> - Lower end GPUs with lower compute throughput and bandwidth will be able to perform real time raytracing.
> - If the Turing GPUs don't support these optimizations then their raytracing performance will be significantly lower than Ampere and RDNA2 (assuming both of these support the latest optimizations).

I think Turing will support it. Just a hunch though.

DegustatoR

Legend

Again:

> https://devblogs.microsoft.com/directx/dxr-1-1/ said:
> None of these features specifically require new hardware.

Deleted member 13524

Guest

> Again:

Of course you don't require new hardware. DXR doesn't even require BVH accelerators and will work on any DX12 GPU.

The question is whether Turing's BVH accelerators can be used within the updated pipeline.

DegustatoR

Legend

> DXR doesn't even require BVH accelerators and will work on any DX12 GPU.

DXR does require BVHs to be built to trace rays through them. How exactly this is accelerated is down to the h/w which runs the API calls.

> The question is whether Turing's BVH accelerators can be used within the updated pipeline.

Turing doesn't have any "BVH accelerators"; it has ray traversal accelerators which work with BVH structures, which on Turing (and Pascal) are built on CPU and CUDA cores.

So far there is no indication that RT shaders which could be called from other shader types in DXR 1.1 will operate in any way differently to the same RT shaders running inside their own shader type. Which means that the same h/w should be able to accelerate them in the same way as previously.

> Of course you don't require new hardware. DXR doesn't even require BVH accelerators and will work on any DX12 GPU.
>
> The question is whether Turing's BVH accelerators can be used within the updated pipeline.

One should specify what "BVH accelerator" means in each case: Turing has BVH traversal accelerators, but not BVH building accelerators like PowerVR has.

vs

DavidGraham

Veteran

On their official Twitter page NVIDIA declared that both DXR 1.1 and Mesh Shaders features are supported ONLY on current GeForce cards.

They also had a developer page on them.

https://news.developer.nvidia.com/dxr-tier-1-1/

> On their official Twitter page NVIDIA declared that both DXR 1.1 and Mesh Shaders features are supported ONLY on current GeForce cards.
>
> They also had a developer page on them.
>
> https://news.developer.nvidia.com/dxr-tier-1-1/

Support doesn’t necessarily mean the hardware can benefit from it. Nvidia also claimed Maxwell and Pascal supported async compute.

DavidGraham

Veteran

> Support doesn’t necessarily mean the hardware can benefit from it. Nvidia also claimed Maxwell and Pascal supported async compute.

Pascal and Maxwell DO support Async Compute, but Maxwell often achieves very little uplift through its use, because its ALU utilization rate is especially high.

I suppose you could be right though: Pascal supports DXR all right but achieves little acceleration from it. However, the context of the tweet is Mesh Shaders and DXR 1.1. We know Turing supports hardware Mesh Shaders natively, so DXR 1.1 is most probably also supported natively on Turing.

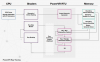

> Pascal and Maxwell DO support Async Compute, but Maxwell often achieves very little uplift through its use, because its ALU utilization rate is especially high.
>
> I suppose you could be right though: Pascal supports DXR all right but achieves little acceleration from it. However, the context of the tweet is Mesh Shaders and DXR 1.1. We know Turing supports hardware Mesh Shaders natively, so DXR 1.1 is most probably also supported natively on Turing.

They support the feature, but Maxwell outright doesn't do async; it just switches from gfx to compute and compute to gfx. Pascal improves on this but is still apparently somewhat limited compared to what GCN (and probably Turing?) can do. Top is Maxwell, middle is GCN, bottom is Pascal.

Alessio1989

Regular

Bottom is a proper high priority compute queue running in between the graphics queue job, middle is normal priority.

> Pascal and Maxwell DO support Async Compute, but Maxwell often achieves very little uplift through its use, because its ALU utilization rate is especially high.
>
> I suppose you could be right though: Pascal supports DXR all right but achieves little acceleration from it. However, the context of the tweet is Mesh Shaders and DXR 1.1. We know Turing supports hardware Mesh Shaders natively, so DXR 1.1 is most probably also supported natively on Turing.

I don't remember any games where AC can be toggled showing any benefit on Maxwell and Pascal. I'm not saying Turing doesn't benefit from DXR 1.1 and/or mesh shaders, just that it's not a certainty until we have some actual data in real games. Nvidia more than anyone has a history of misrepresenting their hardware's capabilities.

DavidGraham

Veteran

> They support the feature, but Maxwell outright doesn't do async, it just switches from gfx to compute and compute to gfx,

We've had this discussion repeatedly here. Maxwell can do Async Compute; the question that matters is whether it actually helps performance. In short, Maxwell does support Async perfectly fine, it just can't dynamically load balance its Compute and Graphics queues on the fly to benefit from it. Besides, its utilization rate is sufficiently high to begin with.

This from a technical perspective is all that you need to offer a basic level of asynchronous compute support: expose multiple queues so that asynchronous jobs can be submitted. Past that, it's up to the driver/hardware to handle the situation as it sees fit; true async execution is not guaranteed. Frustratingly then, NVIDIA never enabled true concurrency via asynchronous compute on Maxwell 2 GPUs. This despite stating that it was technically possible. For a while NVIDIA never did go into great detail as to why they were holding off, but it was always implied that this was for performance reasons, and that using async compute on Maxwell 2 would more likely than not reduce performance rather than improve it.

The issue, as it turns out, is that while Maxwell 2 supported a sufficient number of queues, how Maxwell 2 allocated work wasn’t very friendly for async concurrency. Under Maxwell 2 and earlier architectures, GPU resource allocation had to be decided ahead of execution. Maxwell 2 could vary how the SMs were partitioned between the graphics queue and the compute queues, but it couldn’t dynamically alter them on-the-fly. As a result, it was very easy on Maxwell 2 to hurt performance by partitioning poorly, leaving SM resources idle because they couldn’t be used by the other queues.

Meanwhile not shown in these simple graphical examples is that for async’s concurrent execution abilities to be beneficial at all, there needs to be idle time bubbles to begin with. Throwing compute into the mix doesn’t accomplish anything if the graphics queue can sufficiently saturate the entire GPU. As a result, making async concurrency work on Maxwell 2 is a tall order at best, as you first needed execution bubbles to fill, and even then you’d need to almost perfectly determine your partitions ahead of time.

https://www.anandtech.com/show/10325/the-nvidia-geforce-gtx-1080-and-1070-founders-edition-review/9
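The "idle bubbles" point in the quoted passage can be made concrete with a toy model. This is only a sketch under loud assumptions: the function names are hypothetical, work is measured in "milliseconds of a fully busy GPU", and compute is assumed to fill idle capacity perfectly, which no real GPU does.

```python
def serial_frame_time(gfx_time, compute_work):
    """Graphics runs first, then compute gets the whole GPU."""
    return gfx_time + compute_work


def async_frame_time(gfx_time, compute_work, gfx_utilization):
    """Compute first soaks up whatever the graphics pass leaves idle.

    gfx_utilization is the average fraction of the GPU the graphics
    queue keeps busy (assumed, 0.0 to 1.0). Any compute work that does
    not fit into the idle capacity runs after graphics has finished.
    """
    if not 0.0 <= gfx_utilization <= 1.0:
        raise ValueError("gfx_utilization must be between 0 and 1")
    idle_capacity = (1.0 - gfx_utilization) * gfx_time
    leftover = max(0.0, compute_work - idle_capacity)
    return gfx_time + leftover


if __name__ == "__main__":
    for utilization in (0.5, 0.9, 1.0):
        print(utilization,
              serial_frame_time(10.0, 4.0),
              async_frame_time(10.0, 4.0, utilization))
```

With the graphics queue already saturating the GPU (`gfx_utilization = 1.0`) the two functions return the same time, so even ideal concurrency has nothing to gain.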

There are only two questions about async compute that anyone that's not a driver developer should ask:

1. can GPU X run graphics and compute concurrently?

2. how fast can GPU react once it gets a work of a higher priority?

With the 1st one:

GCN obviously the answer is yes. For Pascal it's also yes. And in fact it's also yes for Maxwell with one giant asterisk that it may be yes on a hardware level but it's a no on driver level so it's a no for all practical purposes.

There is no NV way of doing async and AMD way of doing async. You have a queue that eats draw commands and you have a queue that eats dispatch commands. That's it. You could use 10 compute queues if you wanted to, but that won't help increase performance as internet seems to be convinced this days, it will actually hurt performance even on GCN. If someone does not agree with that you send him coding a small benchmark that will prove what ever point he's trying to make.

With the 2nd one:

That's already a question that basically only developers will deal with. Questions around here on the topic "why would you need async compute if it doesn't run graphics and compute at the same time?" proves that.

https://forum.beyond3d.com/posts/1931938/

> I dont remember any games where AC can be toggled showing any benefit on Maxwell and Pascal.

For Pascal, prominent examples off the top of my head include the TimeSpy test.

Again, NVIDIA had this to say about Async on Maxwell:

> Maxwell GPUs are indeed capable of Async Compute, but it hasn’t been enabled since the Maxwell GM2xx GPUs don’t have dynamic load balancing.

https://www.legitreviews.com/3dmark...performance-tested_184260#0IwideW8dv31FWXI.99

> Im not saying Turing doesnt benefit from DXR 1.1 and/or mesh shaders

I don't think Mesh Shaders are under question at this point at all. It's a DEFINITE Yes for support.


> I dont remember any games where AC can be toggled showing any benefit on Maxwell and Pascal.

I think Doom showed an improvement, IIRC.

> Maxwell does support Async perfectly fine, it's just it can't dynamically load balance it's Compute and Graphics queue on the fly to benefit from it,

Do you know how this has improved with Volta / Turing?

The assumption is that with the new 'fine grained scheduling' it should be good now?

As far as I understand, Maxwell needs to 'divide' the SMs before the work starts, e.g. task A gets 20% of GPU, task B 80%. If one task finishes earlier, there is no way to utilize the SMs that became idle. (Not sure how accurate this is technically.)

GCN can dynamically balance with its ACEs, and it can even run multiple shaders on the same CU.
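To put rough numbers on the 20% / 80% example, here is a toy model of the two behaviours as described above. It is not a description of the actual hardware schedulers: the function names are hypothetical, work is measured in SM-milliseconds, and both tasks are assumed to scale perfectly across SMs.

```python
def static_partition_time(work_a, work_b, total_sms, sms_for_a):
    """Split fixed before execution: SMs freed by the faster task stay idle."""
    sms_for_b = total_sms - sms_for_a
    if sms_for_a <= 0 or sms_for_b <= 0:
        raise ValueError("both tasks need at least one SM")
    return max(work_a / sms_for_a, work_b / sms_for_b)


def dynamic_balance_time(work_a, work_b, total_sms):
    """Idealised dynamic balancing: every SM stays busy until all work is done."""
    return (work_a + work_b) / total_sms


if __name__ == "__main__":
    # 10 SMs, 2 reserved for task A (20%), 8 for task B (80%).
    print(static_partition_time(10, 80, 10, 2), dynamic_balance_time(10, 80, 10))
    # Same split, but the guess was wrong and both tasks are equally heavy.
    print(static_partition_time(40, 40, 10, 2), dynamic_balance_time(40, 40, 10))
```

In this model a reasonable split costs 10 ms against 9 ms, while a bad guess costs 20 ms against 8 ms, which matches the claim that a poorly chosen partition can easily hurt.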

"You could use 10 compute queues if you wanted to, but that won't help increase performance as internet seems to be convinced this days, it will actually hurt performance even on GCN."

Actually, in Vulkan the number of queues is fixed, and for GCN I get one GFX+compute queue and two pure compute queues, so I can only enqueue 3 different tasks concurrently. Also, only the gfx queue offers full compute performance; the other two seem halved even if there is no gfx load, and priorities given through the API seem to be ignored. This seems to be what AMD considered practical. Very early drivers had more queues, IIRC.
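For reference, a sketch of how that queue layout would be read from the `queueFlags` / `queueCount` pairs that `vkGetPhysicalDeviceQueueFamilyProperties` reports. The bit values are the ones from the Vulkan headers; the family list itself is made-up sample data mirroring the layout described above, not a dump from a real driver, and `count_queues` is a hypothetical helper.

```python
# Bit values as defined for VkQueueFlagBits in the Vulkan headers.
VK_QUEUE_GRAPHICS_BIT = 0x1
VK_QUEUE_COMPUTE_BIT = 0x2
VK_QUEUE_TRANSFER_BIT = 0x4


def count_queues(families):
    """Tally queues by capability from (queueFlags, queueCount) pairs."""
    totals = {"graphics+compute": 0, "compute-only": 0}
    for flags, count in families:
        has_graphics = bool(flags & VK_QUEUE_GRAPHICS_BIT)
        has_compute = bool(flags & VK_QUEUE_COMPUTE_BIT)
        if has_graphics and has_compute:
            totals["graphics+compute"] += count
        elif has_compute:
            totals["compute-only"] += count
    return totals


# Assumed sample data: one universal queue plus two compute queues.
SAMPLE_FAMILIES = [
    (VK_QUEUE_GRAPHICS_BIT | VK_QUEUE_COMPUTE_BIT | VK_QUEUE_TRANSFER_BIT, 1),
    (VK_QUEUE_COMPUTE_BIT | VK_QUEUE_TRANSFER_BIT, 2),
]

if __name__ == "__main__":
    print(count_queues(SAMPLE_FAMILIES))
```

The application can only pick from what the driver reports here; it cannot create additional queues beyond each family's `queueCount`.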

I did some testing with small workloads, which is where I see the most benefit because one task alone cannot saturate the GPU. Results on GCN were close to ideal. (Forgot to test on Maxwell.)

But there is one more application of AC that is rarely mentioned because it happens automatically:

If you enqueue multiple tasks to just one queue, but they have no dependencies on each other (no barriers in between), then GCN can and does run those tasks async. Likely also on DX11.

This is very powerful because it avoids the disadvantages of using multiple queues, which are: additional sync necessary across queues, and the need to divide command lists to feed multiple queues. (Big overhead that can cut the benefit, which hurts especially on small tasks where we need it the most.)
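A toy model of that single-queue behaviour, as a sketch only: `BARRIER` and `queue_time` are hypothetical names, each number is the duration of one small dispatch, and dispatches between two barriers are assumed to overlap perfectly because none of them can fill the GPU on its own.

```python
BARRIER = "barrier"


def queue_time(commands):
    """Total time for one queue where only barriers force serialization.

    Dispatches between two barriers run concurrently, so each group
    costs as much as its longest dispatch.
    """
    total = 0.0
    longest_in_group = 0.0
    for command in commands:
        if command == BARRIER:
            total += longest_in_group   # wait for the whole group to drain
            longest_in_group = 0.0
        else:
            longest_in_group = max(longest_in_group, command)
    return total + longest_in_group


if __name__ == "__main__":
    independent = [3.0, 2.0, 4.0]
    dependent = [3.0, BARRIER, 2.0, BARRIER, 4.0]
    print(queue_time(independent), queue_time(dependent))
```

Under these assumptions three independent dispatches finish in the time of the longest one, while the same dispatches separated by barriers take the sum of their durations, all without a second queue or any cross-queue sync.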

I see some potential to improve low level APIs here. Again: we need the ability to generate commands dynamically directly on the GPU, including those damn barriers that are currently missing from DX Execute Indirect, VK Conditional Draw and even NV device generated command buffers.
