Yeah.. I couldn't get any further than AnandTech's quotes on PPU acceleration:

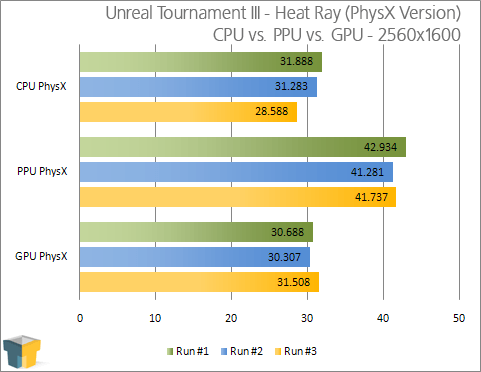

And with the GPU performing worse than the PPU version, I can only assume performance improvements are nil.

But that was not what you said.

You said: "HARDWARE physx is only enabled in those three maps."

Even if there aren't any performance improvements, that doesn't mean hardware PhysX isn't used.

Does Cell Factor run PhysX on the GPU yet? Manju Hegde said their goal was to support all PPU functions on the GPU solver, but as far as I know that's not yet the case. He later admitted that some functions (rigid bodies) will probably never be GPU accelerated.

Do we know why Cell Factor doesn't run on the GPU? Since it's essentially a marketing tool for Ageia, it could just have some extra checks to make sure it is running with a real Ageia PPU installed. When I tried to run it, it simply refused to switch to hardware mode. There was no indication that it was broken or anything.

Also, even if GPUs don't support all features, why wouldn't nVidia just add CPU code to remain compatible? I see no reason why they shouldn't, especially since they already have a full CPU solver anyway. And I can see many reasons why they should... It would mean that developers could seamlessly enable GPU acceleration, rather than only enabling it when there are only simplified physics in the game. Some acceleration is better than no acceleration, you'd get the best of both worlds.
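To make the fallback idea concrete, here's a rough sketch of what I mean by per-feature dispatch. This is purely hypothetical: none of these names come from the PhysX SDK, and the set of GPU-supported features is an assumption for illustration (rigid bodies are left out only because that's the feature under discussion here).

```python
# Hypothetical sketch: none of these names are part of the PhysX SDK.
# The supported set below is an assumption made up for illustration.
GPU_SUPPORTED = {"particles", "cloth", "fluids"}


def choose_backend(feature, gpu_available):
    """Return 'gpu' when the feature has a GPU path, otherwise fall back to 'cpu'."""
    if gpu_available and feature in GPU_SUPPORTED:
        return "gpu"
    return "cpu"


def plan_scene(features, gpu_available=True):
    """Map every feature a scene uses to a backend, so no feature is refused outright."""
    return {feature: choose_backend(feature, gpu_available) for feature in features}


# A scene mixing a GPU-capable feature with one that has no GPU path.
plan = plan_scene(["particles", "rigid_bodies"])
```

The point is that the game never has to care: anything without a GPU path just lands on the CPU solver, and everything else gets accelerated.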

I find all this rather circumstantial.

Besides, your article doesn't say "will probably never be GPU accelerated". It says "however: the port of rigid bodies has not yet been made and this feature may not, for the moment at least, be accelerated by the GPU."

"For the moment" is something quite different from "probably never".

This topic has more info on it:

http://developer.nvidia.com/forums/index.php?showtopic=2649

It seems that only one feature was missing at the time of writing ("D6 joints only support angular drives. They do not support linear drives or SLERP drives").

PhysX SDK 3.0 is supposed to have full support.