
800MHz or 1066MHz RAM for a Quad Q6600???

- Thread starter ragser


Aren't you a little confused...

What you call 800MHz has a bus speed of 400MHz (the speed it actually runs at).

It's also known as 800MHz because it's DDR, double data rate (it transfers twice as much per clock as SDR).

Because Intel runs this memory in dual channel, 2 × 800MHz is also referred to as 1600MHz.

It's also called PC2-6400, as this is the bandwidth.

Unless I've got it wrong...

Yes, you're so wrong it hurts..

DDR2-800 (PC2-6400) has a bus speed of 200MHz

Double-data rate = 400MHz

Dual channel = 800MHz. Not 1600MHz

It's called PC2-6400 because the 800MHz speed is multiplied by the bus width, which is 8 bytes.

Ergo:

DDR2-1066 (PC2-8500) has a bus speed of 266MHz

Double-data rate = 533MHz

Dual channel = 1066MHz

When the 1066MHz is multiplied by the bus width, which again is 8 (not 4), you get 8533, and rounding that to the nearest hundred gives PC2-8500.
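The module-rating arithmetic in this post is easy to check. The Python sketch below assumes a single 64-bit (8-byte) DIMM channel and treats the PC2 label as the peak transfer rate in MB/s rounded to the nearest hundred; the helper names are illustrative only, and dual channel is not modelled.

```python
# Hypothetical helpers: derive a DDR2 module's PC2 label from its data rate.
# Assumes one 64-bit (8-byte) DIMM channel; the label is the peak rate in
# MB/s rounded to the nearest hundred.

BYTES_PER_TRANSFER = 8  # 64-bit data path of a single DIMM


def peak_mb_per_s(data_rate_mts: float) -> float:
    """Peak bandwidth of one module: transfers per second x bytes per transfer."""
    return data_rate_mts * BYTES_PER_TRANSFER


def pc2_label(data_rate_mts: float) -> str:
    """Round the peak bandwidth to the nearest hundred, e.g. 8533 -> PC2-8500."""
    rounded = int(round(peak_mb_per_s(data_rate_mts) / 100)) * 100
    return f"PC2-{rounded}"


if __name__ == "__main__":
    # DDR2-1066 is really 1066.67 MT/s (two transfers per 533.33 MHz I/O clock)
    for rate in (800, 1000, 3200 / 3):
        print(f"DDR2-{int(rate)}: {peak_mb_per_s(rate):.0f} MB/s -> {pc2_label(rate)}")
```

Running it lists DDR2-800, DDR2-1000 and DDR2-1066 as PC2-6400, PC2-8000 and PC2-8500 respectively.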

And as far as the Q6600 goes, don't jack around with the FSB; just set that thing to 400MHz and either drop your multiplier down or turn your voltage way the hell up.

Wouldn't it be the opposite, alby?

Because if you lower the speed, the timings become tighter.

I didn't say timings (how the memory is accessed by the northbridge); I said latency (how quickly information within the memory can be accessed by the CPU). I think this is where the confusion sets in...

Memory timings are effectively latency on the memory bus. Thus, increasing your timings from say 4-4-4-10 to 5-5-5-12 would result in higher latency from the northbridge interface to the memory module being accessed. If everything else remains equal (FSB speed, memory speed, chipset timings) then a higher memory bus latency will result in lower memory performance for the rest of the system.

But Intel platforms have a front side bus -- the interface from the CPU to the northbridge. The latency here is set by the northbridge "performance value" (technically called tRD). While this bus serves as the primary I/O bottleneck for the entire system, you can do a bit of latency-hiding trickery if you get the external buses going fast enough.

So, if you ramp your memory bus from the lock-step 1:1 multiplier (a 333MHz FSB getting 667MHz RAM) to, let's say, a 1:2 multiplier (a 333MHz FSB getting 1333MHz RAM), then the timings at the memory-bus end of things will be at least partially hidden, because the northbridge is acting as the memory controller.

Using my example: 4-4-4-10 timings on DDR2-667 vs 5-5-5-18 timings on DDR3-1333, both operating in dual channel on a 333 FSB... The 1333MHz set, even though it has more relaxed timings, still has a solid chance of performing better simply because the memory bus is operating at twice the speed, which can effectively cut its timings down to 2.5-2.5-2.5-9 when measured in DDR2-667 clock cycles (i.e. in absolute time).

Obviously, simple math is your friend before assuming that turning memory speed up beyond that of the FSB is actually going to make a difference. Running RAM about 20% faster (800 vs 667) with about 20% looser timings (5-5-5-12 vs 4-4-4-10) doesn't do you any good and will result in higher heat and power draw. And all you're really doing is reducing latency, which, in terms of overall bandwidth, isn't going to do a whole lot...
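Both comparisons above come down to converting cycle counts into time. This is a minimal Python sketch, assuming the memory clock is half the quoted DDR data rate (so one cycle lasts 2000 / data rate ns); it covers only the DRAM side and ignores FSB and northbridge (tRD) overhead, and the helper names are hypothetical.

```python
# Hypothetical helpers: express memory timings in nanoseconds so kits at
# different speeds can be compared. Assumes memory clock = data rate / 2.
from typing import List, Sequence


def cycle_ns(data_rate_mts: float) -> float:
    """Duration of one memory clock cycle in ns (2000 / data rate in MT/s)."""
    return 2000.0 / data_rate_mts


def timings_ns(data_rate_mts: float, timings: Sequence[int]) -> List[float]:
    """Convert CL-tRCD-tRP-tRAS from clock cycles to nanoseconds."""
    return [t * cycle_ns(data_rate_mts) for t in timings]


def equivalent_cycles(data_rate_mts: float, timings: Sequence[int],
                      reference_mts: float) -> List[float]:
    """Re-express timings in clock cycles of a reference (slower) kit."""
    ref = cycle_ns(reference_mts)
    return [ns / ref for ns in timings_ns(data_rate_mts, timings)]


def rounded(values: Sequence[float]) -> List[float]:
    """Round to two decimals to hide floating-point noise in the output."""
    return [round(v, 2) for v in values]


if __name__ == "__main__":
    ddr2_667 = 2000 / 3   # "667" is really 666.67 MT/s
    ddr3_1333 = 4000 / 3  # "1333" is really 1333.33 MT/s

    print(rounded(timings_ns(ddr2_667, (4, 4, 4, 10))))                    # [12.0, 12.0, 12.0, 30.0]
    print(rounded(equivalent_cycles(ddr3_1333, (5, 5, 5, 18), ddr2_667)))  # [2.5, 2.5, 2.5, 9.0]
    print(rounded(timings_ns(800, (5, 5, 5, 12))))                         # [12.5, 12.5, 12.5, 30.0]
```

The second line reproduces the 2.5-2.5-2.5-9 figure, and the third shows CL5 at 800 (12.5ns) ending up slightly slower than CL4 at 667 (12ns).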

So, a 100% increase in memory speed (like my last example of using DDR3-1333) might net you a ~20-30% reduction in access times compared with DDR2-667 at 4-4-4-10 if both are on a 333 FSB, which will slightly increase your available bandwidth as well (~10% maybe?). Does that tangibly affect your system's performance enough to justify the extra heat and stress on your northbridge? I suppose it might, especially if you're looking for every last ounce of performance.

If you're more interested in pure bandwidth numbers, you're left only with turning up the FSB speeds as high as you can get them stable and setting the memory at whatever speed makes sense.

caveman-jim



Intel's FSB is quad-pumped to generate the CPU's FSB clock. So 266 × 4 = the 1066 FSB the processor is advertised with (266 × a 9x multiplier = CPU speed). At a 1:1 memory ratio, the 266 is doubled to give 533, i.e. the minimum RAM speed needed to run a 1066 FSB processor (unless the mobo supports memory ratios below 1:1 or unlocks the memory clock from the FSB clock).

So at 1:1, 266MHz RAM is 533MHz effective.

If you up your FSB to 333 (quad-pumped, that's now 1333, like a Wolfdale), then 1:1 gives you 667 (because it's not really 333, it's 333.3 and 666.6, rounded to the nearest whole number).

An FSB of 400MHz (1600MHz quad-pumped) gives you 800MHz effective DDR, i.e. the rated speed of PC2-6400.
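The clock relationships in this post can be written out as a short Python sketch. It assumes the simple model stated above: CPU clock = FSB × multiplier, rated FSB = FSB × 4 (quad-pumped), and DDR2 data rate at 1:1 = FSB × 2. The `clocks` helper is illustrative, not a BIOS or monitoring-tool API.

```python
# Hypothetical helper for the Core 2 clock arithmetic in the post above.
# Model (as described there): CPU = FSB x multiplier, rated FSB = FSB x 4
# (quad-pumped), DDR2 data rate at a 1:1 ratio = FSB x 2.
from typing import Dict


def clocks(fsb_mhz: float, multiplier: float) -> Dict[str, float]:
    """Return CPU clock, quad-pumped FSB rating and 1:1 DDR2 data rate."""
    return {
        "cpu_mhz": fsb_mhz * multiplier,
        "fsb_rated": fsb_mhz * 4,
        "ddr2_1to1": fsb_mhz * 2,
    }


if __name__ == "__main__":
    # 266.67, 333.33 and 400 MHz base clocks at the stock 9x multiplier
    for fsb in (800 / 3, 1000 / 3, 400):
        c = clocks(fsb, 9)
        print(f"FSB {fsb:.2f} MHz: CPU {c['cpu_mhz']:.0f} MHz, "
              f"FSB-{c['fsb_rated']:.0f}, DDR2-{c['ddr2_1to1']:.0f} at 1:1")
```

For example, `clocks(400, 8)` gives a 3200MHz CPU clock, FSB-1600 and DDR2-800 at 1:1, while `clocks(355, 9)` gives 3195MHz, FSB-1420 and DDR2-710. The stock case prints FSB-1067 only because 1066.67 is rounded for display.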

If you anticipate overclocking much higher than a 400MHz FSB, then it might pay to invest in DDR2-1000 (PC2-8000) or DDR2-1066 (PC2-8500), as it will give you more headroom; it becomes more likely that your CPU or mobo, rather than your memory, is what limits you.

IMO PC2-6400 is a good match for a 1066MHz FSB processor, as most quality 2GB and 4GB kits can stretch to 1000MHz, and not many 1066MHz FSB processors get into the 475MHz+ arena.


What? No way in hell!

You're getting Intel's quad pumped FSB mixed up with DDR memory!

Indeed. DDR2-800 really does run at a 400MHz bus speed (double data rate gives you the 800MHz "effective"), and running a pair of sticks in dual-channel mode at 400 FSB could theoretically give you 1600MHz "effective" -- but it doesn't always work that way.

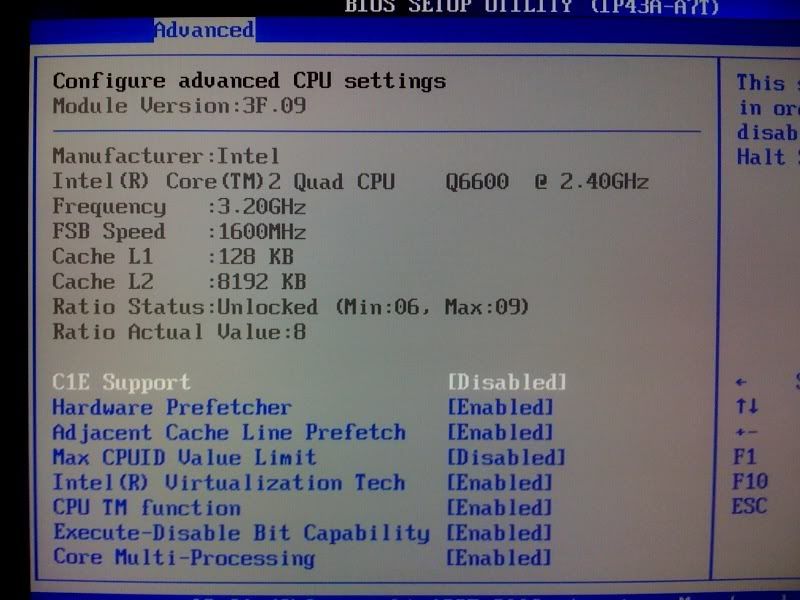

I am fairly new to OC, so please correct me wherever I am wrong.

My mobo is a TP43D2-A7.

It says it supports FSB 1600/1333/1066.

Does that mean that for a stock Q6600, with my FSB at 266 and 4 cores, it is 266*4 = 1066 FSB?

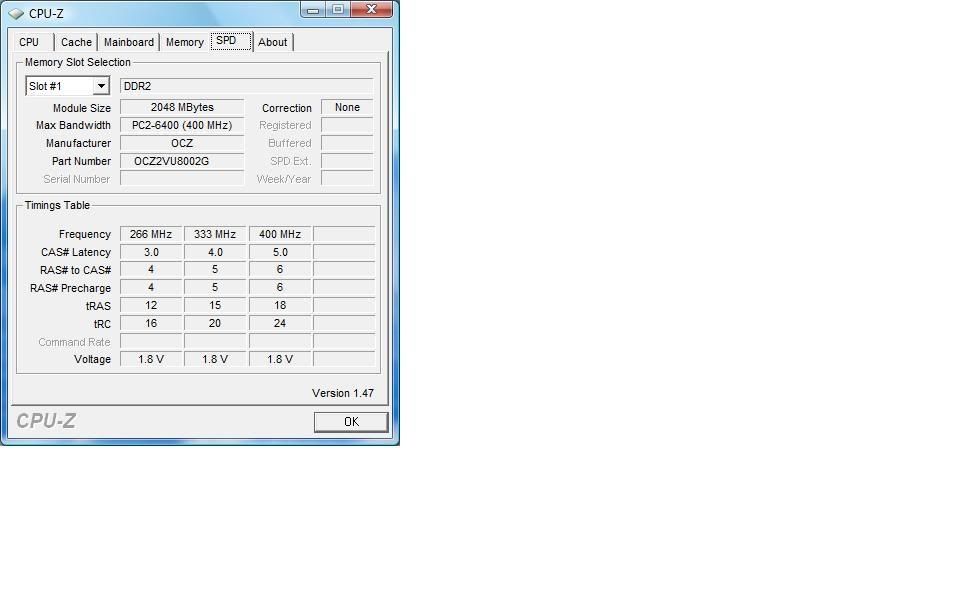

The memory I have is OCZ2VU8004GK - DDR2-800 (PC2-6400).

http://www.newegg.com/Product/Product.aspx?Item=N82E16820227195

When I OC'd, I set my FSB to 355 and the multiplier to 9, which gives me a ~3.2GHz CPU clock, while my FSB is 355*4 = 1420 (is this OK?).

(Disabled C1E and EIST)

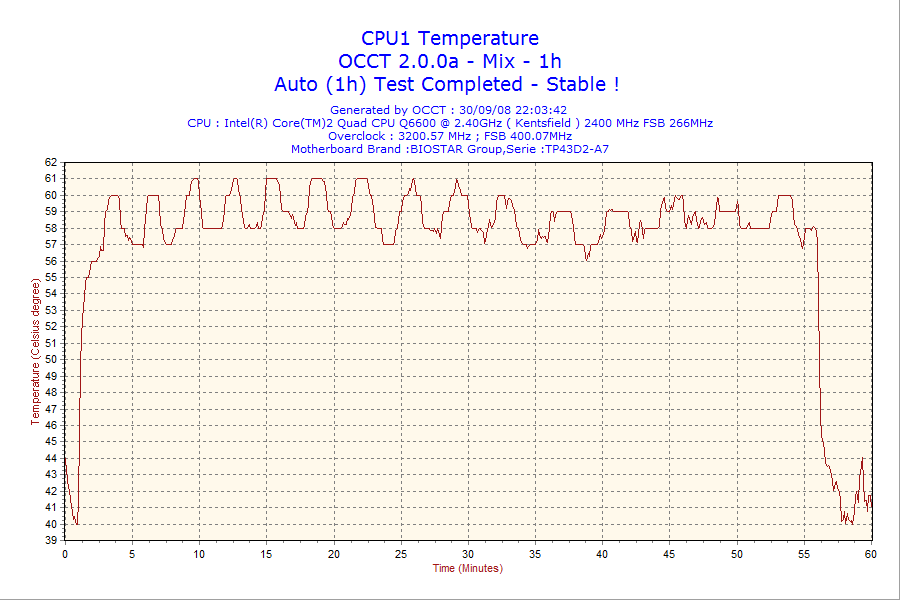

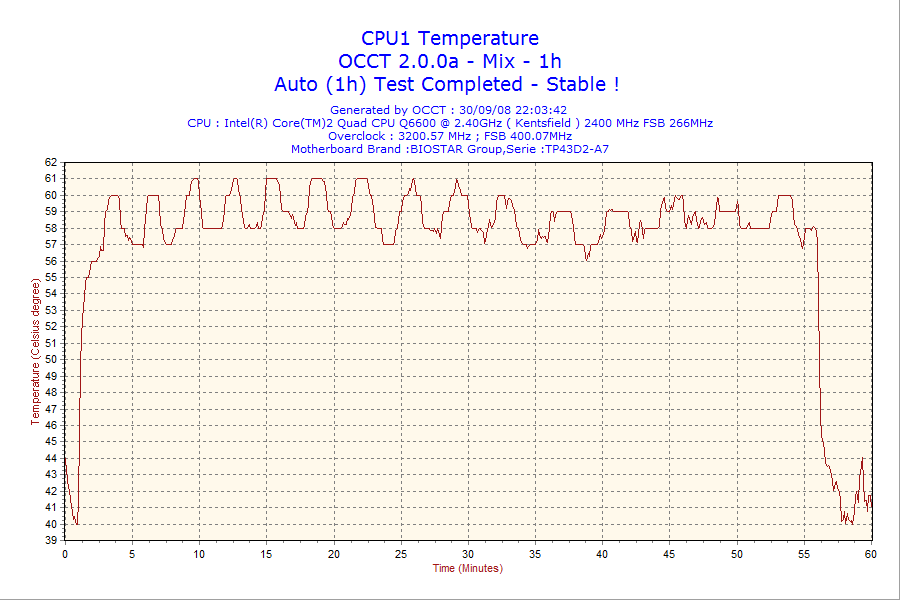

I ran OCCT (1 hr) and Prime95 for 12 hours, and the temperature on core 1 (the hottest) never exceeded 61°C; the other cores stayed between 58-60°C.

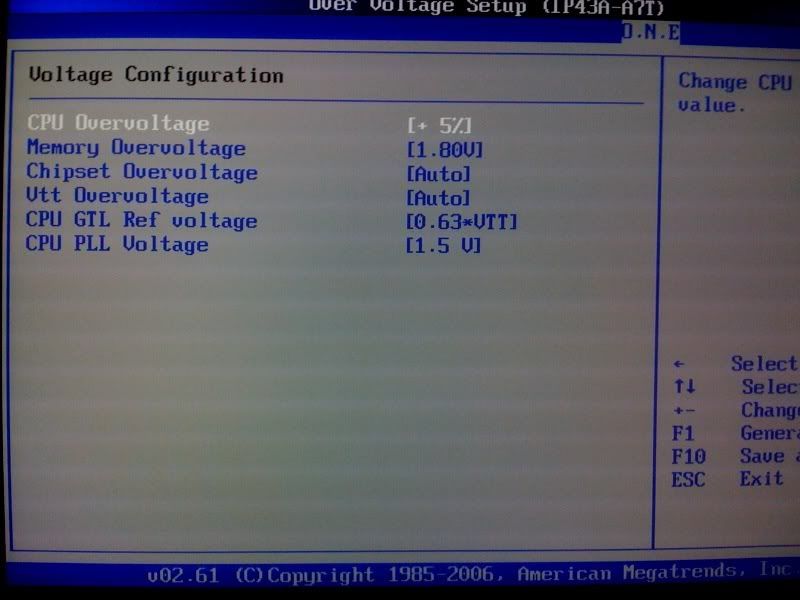

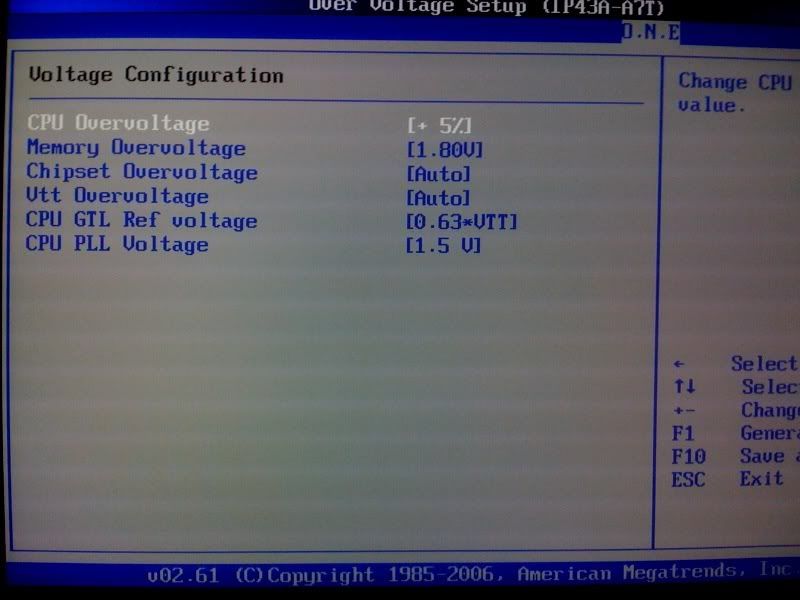

The mobo only lets me increase the vcore in increments of 5/10/15%, so I limited it to 5%, and under Prime95 it shows 1.3V vcore.

I have not played around with the memory timings/voltage.

I haven't quite understood the FSB:memory ratio concept and don't understand the advantages of going 1:1 or any other way.

But from what I have read so far, it seems like it would make more sense for me to

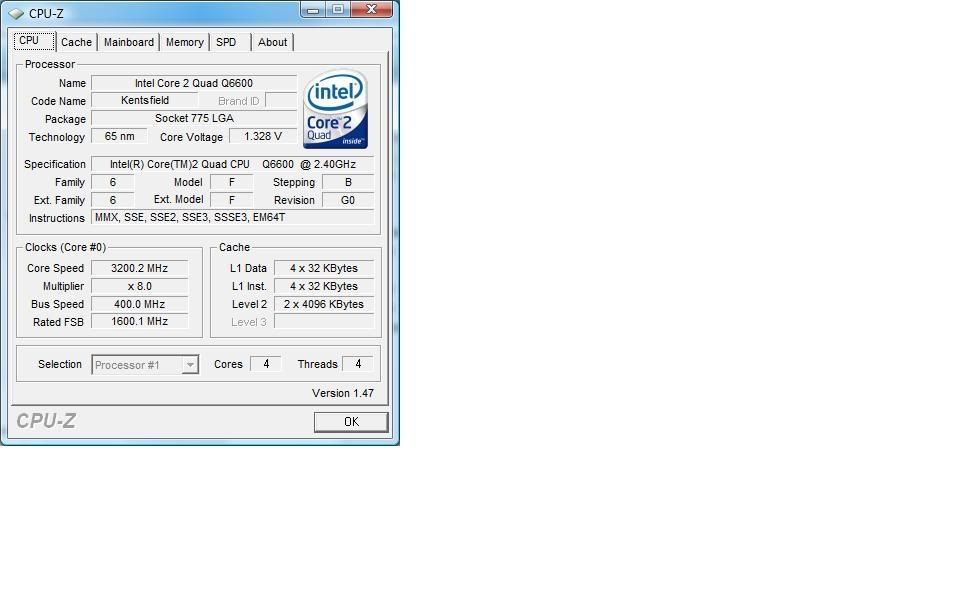

Set my FSB to 400MHZ since my mobo does support 1600MHZ FSB and set my multiplier down to *8 giving me 3.2 GHZ with the same vcore.

From there I can increase my multiplier to check if 3.6 GHZ is stable and if needed step up the vcore %

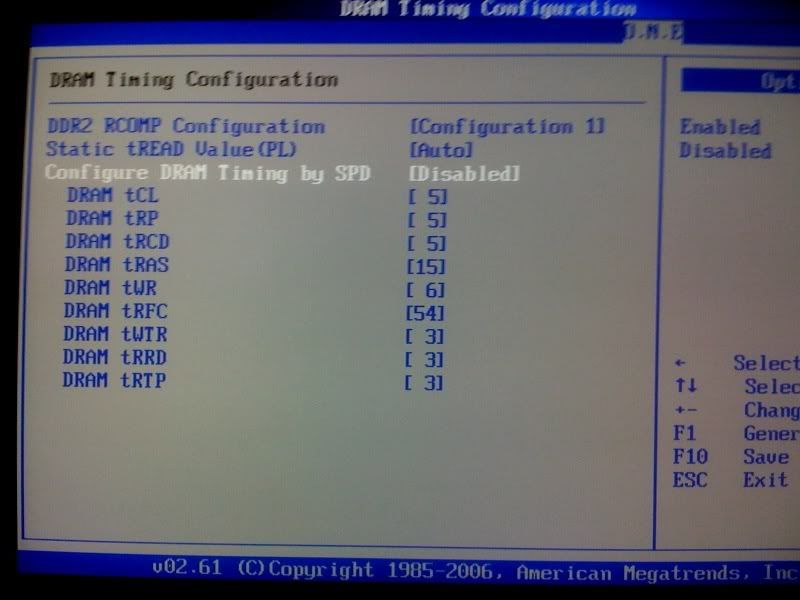

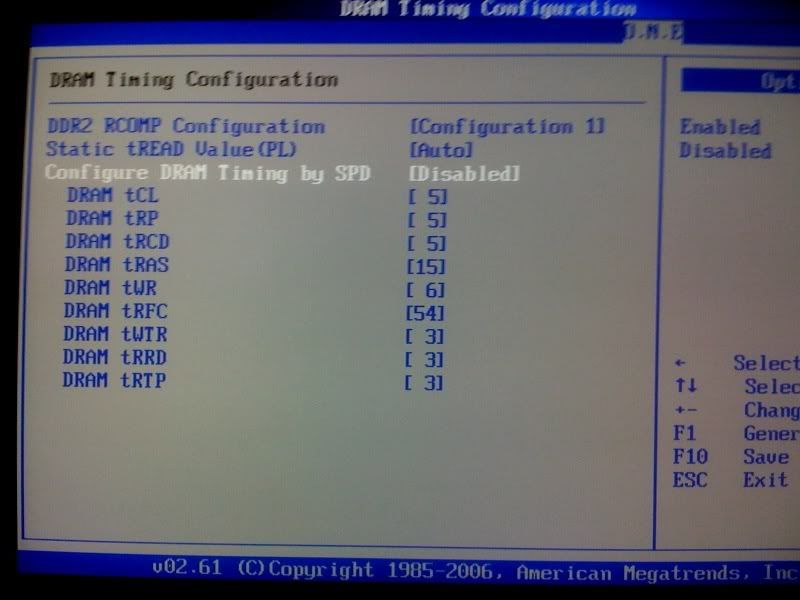

I will change my memory timings to 5-5-5-15 @ 800MHz, 1.8V.

By setting my FSB to 400MHz, regardless of the multiplier, I seem to achieve a 1:1 ratio at the PC2-6400 standard speed.

On a side note, I am running Vista and an ATI 4850 (no OC, stable under FurMark stability tests).

Also, is there any advantage to 355*9 = 3.2 vs 400*8 = 3.2? Since they both give the same clock, does overclocking the FSB more versus stepping the multiplier up/down have any advantages or disadvantages?


That was a lot of posts, but if I understand correctly, you're simply asking whether a higher FSB is better than a lower FSB -- or at least, what the pros and cons are, right?


It ultimately depends on what your memory is capable of... If you run your processor at 355*9, your RAM would be doing 710MHz (when set to the 1:1 ratio). What kind of timings can your memory use at that speed, versus running at the full 800MHz?

If you can run 4-4-4-12 timings at 710MHz, you'll have better bandwidth at 355*9. If you are stuck at 5-5-5-15 timings either way, then the 400*8 setting would net you better results, as the memory would pick up some usable speed.

And I sense a bit of confusion around the memory ratio (1:1, etc.) bit... The bottom line is, the only time you want to raise your ratio above 1:1 is when your memory can run fast enough to offset the looser timings it will need at that speed. A 20% gain in speed is easily cancelled out by a 20% increase in timings, but a 50% increase in speed with a 20% increase in timings would actually result in a slight reduction of overall latency.
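The rule of thumb above reduces to one ratio: the change in absolute latency is the timing increase divided by the speed increase. Here is a hedged Python sketch that looks at CAS latency only and ignores bandwidth and FSB effects; the function names are made up for illustration.

```python
# Hypothetical helpers for the "speed gain vs timing increase" rule of thumb.
# Only CAS latency is considered; bandwidth and FSB effects are ignored.


def latency_factor(speed_gain: float, timing_gain: float) -> float:
    """Relative absolute latency after the change (1.0 = unchanged, <1.0 = lower)."""
    return timing_gain / speed_gain


def compare_cas(rate_a: float, cl_a: int, rate_b: float, cl_b: int) -> float:
    """CAS latency of setting B relative to setting A, in absolute time."""
    return latency_factor(rate_b / rate_a, cl_b / cl_a)


if __name__ == "__main__":
    print(round(latency_factor(1.2, 1.2), 3))  # 1.0: 20% faster, 20% looser
    print(round(latency_factor(1.5, 1.2), 3))  # 0.8: 50% faster, 20% looser
    # 355 x 9 at 1:1 (DDR2-710, CL4) vs 400 x 8 at 1:1 (DDR2-800, CL5)
    print(round(compare_cas(710, 4, 800, 5), 3))  # 1.109: ~11% more CAS latency at 800
```

Under these assumptions, DDR2-800 at CL5 has roughly 11% more CAS latency than DDR2-710 at CL4, which is why the answer hinges on what timings the kit can hold at each speed.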

Hope that made sense


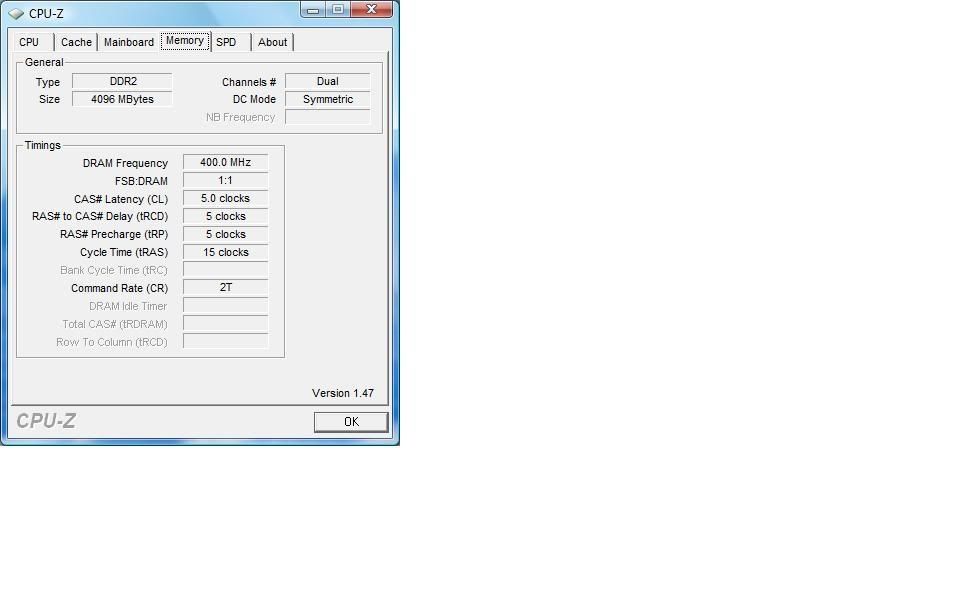

ok I have played around with the new settings

400*8 for the CPU and 5-5-5-15 (1.8v) at 800 MHZ for the memory

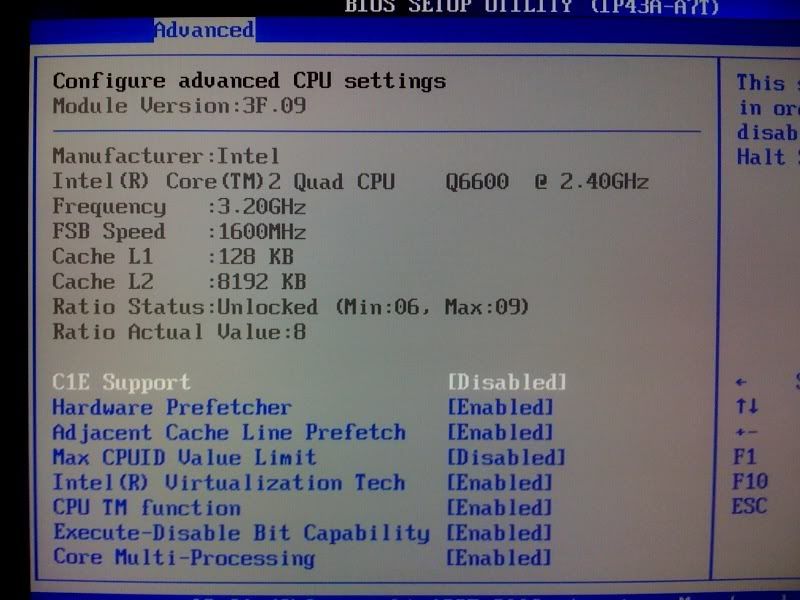

here are my results. I noticed a really weird thing though , everest and hardware monitor and even futuremark think my processor is running at 3.6 GHZ!!!! it showed up as 450*8 and 1800 FSB....how is that possible ?

I checked it twice and its 400*8 = 3.2 GHZ

i have verified this through OCCT, CORE temp and CPU-Z. however once when i started CPU-Z it didnt seem to load correctly and also showed 3.6ghz but the memory etc was greyed out so i restarted it and it was back at 3.2 ...weird eh.....ahy theories on why this is happening.

also please let me know based on the results below if it is looking right

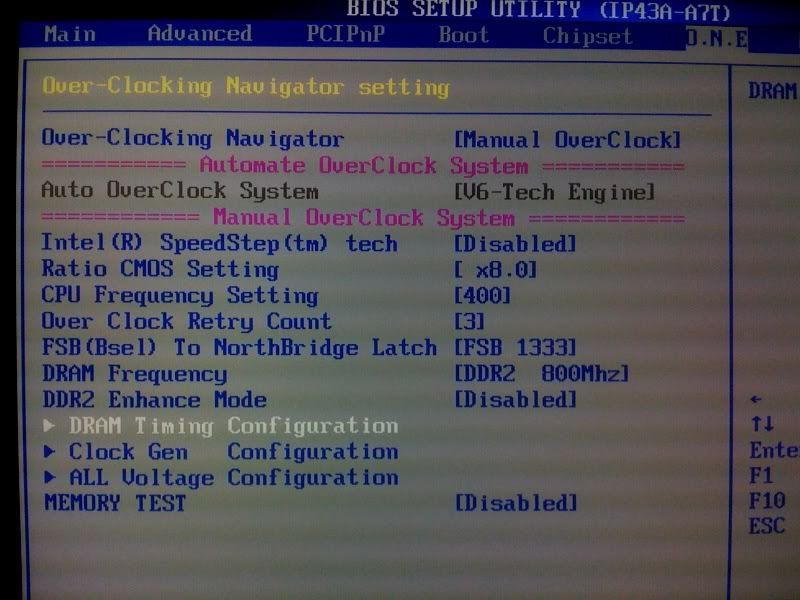

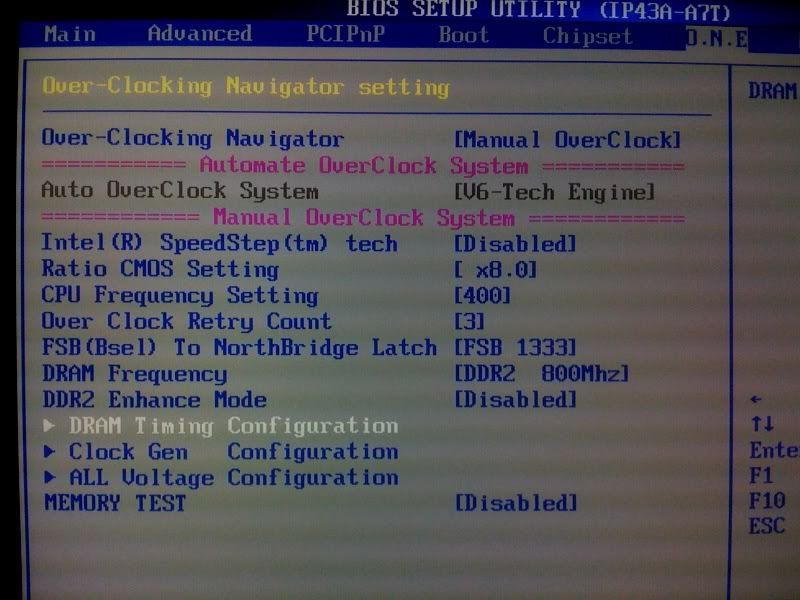

Here are my BIOS tweaks. I was not completely sure about the FSB to northbridge latch, but it's at max now. I also read somewhere that DDR2 enhance should be disabled, but I don't know why.

I have disabled DRAM timing by SPD; is that OK? If I don't, CPU-Z shows 5-6-6-15 under Memory.

See here how Hardware Monitor shows it at 3.6GHz; please shed some light on why it's doing this!

Here are the results from core 1 after 1 hour under OCCT; the rest were similar, at 58°C.


I don't know, but I want to know where you got your desktop background!

...

Unknown Soldier



Again, the FSB is quad-pumped, so it's actually only running at 266MHz. Once you start overclocking, though (with the Q6600 it's easy), you get 333 FSB (3GHz CPU) or 356 FSB (3.2GHz). That's if you leave it at the standard 9x multiplier. With the Q6600 you have the option to set the multiplier anywhere between 6x and 9x.
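Working backwards from a target clock shows which FSB each multiplier needs and what that implies for the memory at 1:1. A minimal Python sketch, assuming the 6x-9x multiplier range mentioned above, a DDR2 data rate of 2 × FSB at 1:1, and a DDR2-800 kit; `fsb_options` is a hypothetical helper.

```python
# Hypothetical helper: FSB needed per multiplier for a target core clock.
# Assumptions: Q6600 multipliers 6x-9x, DDR2 data rate at 1:1 = 2 x FSB,
# and a kit rated for DDR2-800 (PC2-6400).

RATED_DDR2_MTS = 800


def fsb_options(target_mhz: float, multipliers=range(6, 10)):
    """Return (multiplier, fsb_mhz, ddr2_at_1to1, within_rating) tuples."""
    rows = []
    for mult in multipliers:
        fsb = target_mhz / mult
        ddr2 = 2 * fsb
        rows.append((mult, fsb, ddr2, ddr2 <= RATED_DDR2_MTS))
    return rows


if __name__ == "__main__":
    for target in (3200, 3600):
        for mult, fsb, ddr2, ok in fsb_options(target):
            status = "within" if ok else "above"
            print(f"{target} MHz @ {mult}x: FSB {fsb:.1f} MHz, "
                  f"DDR2-{ddr2:.0f} ({status} the DDR2-{RATED_DDR2_MTS} rating)")
```

With these assumptions, 3.6GHz at 9x needs a 400 FSB (DDR2-800 at 1:1, within spec), whereas at 8x it needs 450 (DDR2-900, above a DDR2-800 rating).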

At 400, your DDR2-800 (PC2-6400) is running at a 1:1 ratio, but your CPU might not be able to take it. My old B3 struggled to hit 3.5GHz. My new G0 struggled to hit 3.4GHz, but that was on my old P5W Deluxe. I've now bought a new X48 board and am hoping to try it out at the weekend.

Please note that the default CPU cooler is not adequate above 3GHz, so I suggest getting a new one: either the Thermalright Ultra-120 Extreme (my new buy), plus a fan, or the Zalman CNPS9700L, which I've had great success with.

US


My mobo only allowed enough room for the Arctic 7 Pro cooler; it did bring my temperatures down around 10-15°C compared to stock.

It does look like it's not completely stable: OCCT runs fine for the 1-hour test, but I have run Prime95 a few times, and once core 3 failed after 5 hours and another time core 4 failed after 3 hours.

I am trying to rerun them, but I'm not sure whether that means something is wrong or it's a rare occurrence.

