Today, just like the crypto-mining mania, there's this "AI" mania. I know people who are buying 5090s not because they are gamers but because they want to do edge AI, and they are also more likely to be willing to pay more. I've also heard rumors that some people in China are replacing the 5090's memory with chips of twice the size, making them 64GB, and rumors of 3GB modules with the potential for 96GB. Together with the ban on AI chip sales to China, I guess this is just going to get even worse.

There are 4GB GDDR7 chips?


NVIDIA GeForce RTX 50-series Blackwell Availability

- Thread starter pcchen


DegustatoR

Legend

Technically the standard goes up to 8GB/chip, but right now we're only getting a small number of expensive 3GB chips.

4, 6 and 8 should all be possible in the future though.

That's what I thought. I'm wondering how they're fitting 64GB on a 5090. I thought clamshell required some PCB considerations.


arandomguy

Veteran

I'm guessing it's referring to doubling VRAM by making the cards double-sided (clamshell), not to using 4GB chips, which don't exist.

While the standard allows for it, whether it's technically and/or financially practical to deploy them during GDDR7's lifespan is debatable. The GDDR6 spec allowed for 1GB, 1.5GB, 2GB, 3GB, and 4GB devices, but only 1GB and 2GB were ever deployed in the market.
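As a rough sanity check on the capacities mentioned in this thread, here is a minimal Python sketch of the usual GDDR capacity arithmetic. It assumes each memory device has a 32-bit interface, that clamshell mode puts two devices on each 32-bit channel (each driving half of it), and the public bus widths of 512-bit for the 5090, 384-bit for the 4090D and 256-bit for the 4080 Super. `vram_gb` is a hypothetical helper, and the model ignores whether a given PCB or GPU firmware actually supports the layout.

```python
# Simplified GDDR capacity model (assumption: 32-bit devices; clamshell puts
# two devices on each 32-bit channel, each driving half of it).
DEVICE_WIDTH_BITS = 32  # per-device interface width in normal (non-clamshell) mode


def vram_gb(bus_width_bits: int, device_gb: int, clamshell: bool = False) -> int:
    """Total VRAM in GB for a bus width and per-device density (hypothetical helper)."""
    channels = bus_width_bits // DEVICE_WIDTH_BITS   # one 32-bit channel per device normally
    devices = channels * (2 if clamshell else 1)     # clamshell doubles the device count
    return devices * device_gb


# Bus widths assumed from public specs; densities per the thread (2GB today, 3GB rumored).
configs = {
    "RTX 5090 (stock)": (512, 2, False),
    "RTX 5090 clamshell, 2GB": (512, 2, True),
    "RTX 5090 clamshell, 3GB": (512, 3, True),
    "RTX 4090D 48GB": (384, 2, True),
    "RTX 4080 Super 32GB": (256, 2, True),
}

for name, (bus, density, clam) in configs.items():
    print(f"{name}: {vram_gb(bus, density, clam)} GB")
```

This prints 32, 64, 96, 48 and 32 GB respectively: the 64GB 5090 and the 48GB/32GB 40-series mods all follow from doubling the device count via clamshell with existing 2GB parts, while 96GB would need both clamshell and 3GB devices.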

Keep in mind that in China we aren't talking about people modding these at home with a soldering iron. It's companies with proper equipment and trained professionals, and they've already done this at scale with the 4xxx series.

They are making custom PCBs? And wouldn't the firmware also have to be changed? Or does it autodetect when two modules are present on a channel?


arandomguy

Veteran

I haven't looked into this in detail. From what I understand, this became public knowledge when companies started making these cards available to the public. It's not a situation where some enthusiast modded one and documented exactly how they did it.

Nvidia gaming GPUs modded with 2X VRAM for AI workloads — RTX 4090D 48GB and RTX 4080 Super 32GB go up for rent at Chinese cloud computing provider

Same GPU but with twice the GDDR6X (www.tomshardware.com)

Chinese data centers refurbing and selling Nvidia RTX 4090D GPUs due to overcapacity — 48GB models sell for up to $5,500

Companies are letting go of their idle GPUs for instant profits. (www.tomshardware.com)

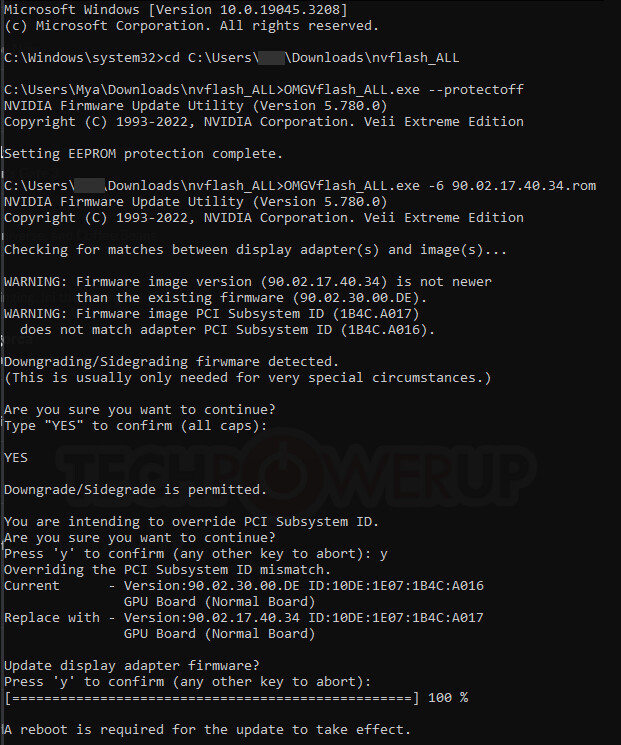

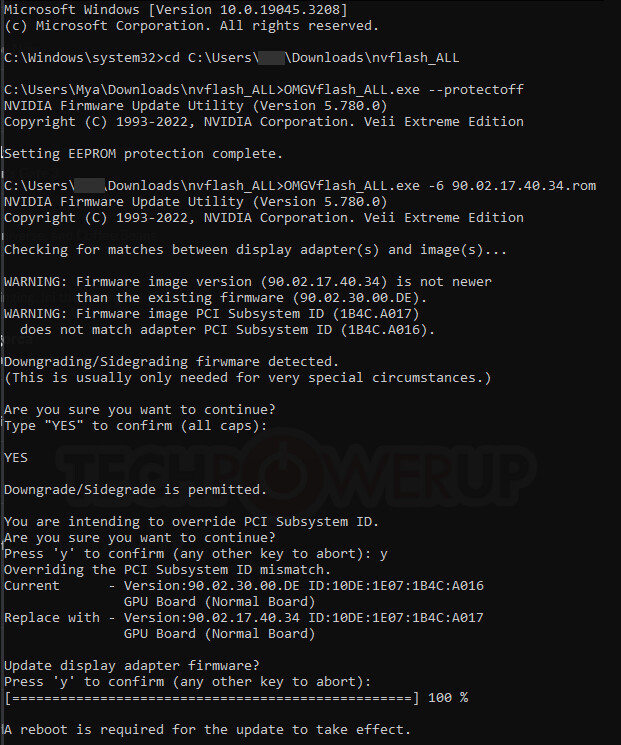

In terms of firmware, it's worth noting that Nvidia's firmware signing was, I believe, broken (at least to some degree) in 2023. So even if firmware-level modifications are required, that may not be a barrier in itself.

NVIDIA BIOS Signature Lock Broken, vBIOS Modding and Crossflash Enabled by Groundbreaking New Tools

You can now play with NVIDIA GeForce graphics card BIOS like it's 2013! Over the last decade, NVIDIA had effectively killed video BIOS modding by introducing BIOS signature checks. With GeForce 900-series "Maxwell," the company added an on-die security processor on all its GPUs, codenamed... (www.techpowerup.com)

I've seen both custom PCBs and reused old 3090 PCBs being used to make the 4090 franken-cards. Apparently the 4090 is pin-compatible with the 3090, and since the 3090 was a clamshell design when it was introduced, you just swap the 4090 GPU and the newer 16Gbit-density GDDR6X chips onto the 3090 PCB, flash the firmware, and away you go.


I suspect the firmware is modified/customized, but they apparently work just fine out of the box with standard Nvidia drivers.

Testing the 4090 48GB

5070 and 5070 Ti debut on the April Steam survey with pretty decent numbers, especially for the 5070.

Is the laptop 4060 being the most popular GPU new? When did it dethrone the 3060?

In April.

Availability in the US (Newegg/Micro Center) seems decent now, except for the 5090. It took about 3 months to get there for the 5080 and a bit less for the 5070s. Not bad compared to other launches, considering all the doom and gloom. Now let's see how sticky these prices are over the next few months.

5080 is starting at $1400, 5070 Ti at $900, 5070 at $600 and 5060 Ti 16 GB at $480. The 40% markup on the 5080 is the standout.
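To put the 40% figure in context, here is a minimal Python sketch that computes each card's markup over MSRP. The MSRPs used ($999, $749, $549 and $429) are assumed US launch MSRPs and are not stated in the post, and `markup_pct` is a hypothetical helper.

```python
# Hypothetical helper: percentage by which a street price exceeds a reference MSRP.
def markup_pct(street: float, msrp: float) -> float:
    return (street / msrp - 1) * 100


# Street prices from the post; MSRPs are assumed US launch MSRPs (not from the post).
prices = {
    "RTX 5080": (1400, 999),
    "RTX 5070 Ti": (900, 749),
    "RTX 5070": (600, 549),
    "RTX 5060 Ti 16GB": (480, 429),
}

for card, (street, msrp) in prices.items():
    print(f"{card}: +{markup_pct(street, msrp):.0f}% over ${msrp}")
```

With those assumed MSRPs the 5080 lands at about +40%, while the other three sit between roughly +9% and +20%, which is why the 5080 stands out.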


GhostofWar

Regular

I think this is the most appropriate place to post this. I wasn't expecting that outcome, but I haven't been looking at GPU prices recently and I'm not in the USA.

DegustatoR

Legend

^^ TL;DV: the 5070 and 5070 Ti have better perf/price than the 9070/XT due to current retail prices.

If anyone has been following availability and pricing over the last few months, this isn't news, at least for the USA.

The 9070 XT is selling at the same price level as the 5070 Ti, while the 9070 costs at least $100 more than the 5070.


I’m anxiously awaiting the flood of angry YouTube videos about 9070 pricing and availability. Any day now.

DegustatoR

Legend

5090 is also starting to hit MSRPs outside of US.

I think it's fair to expect US market to reach MSRPs for all cards aside from 5090 in a month or two.

Let's see if the collective Steves notice that outside of some "Q&A" videos.


Doom: The Dark Ages is the next game that's unoptimized for nVidia users: https://www.computerbase.de/artikel...s_in_wqhd_uwqhd_und_ultra_hd_mit_hwraytracing

The 5090 is 47% faster than the 9070XT with "hardware" raytracing. I think it is quite clear that these developers have no clue how to optimize their engines for modern nVidia GPUs and are wasting so much potential. 100 FPS at 1440p with rasterization and simple raytracing on a 120 TFLOPs, 1.8 TB/s GPU is a joke.

I don't think nVidia should put much wafer capacity into gaming for the next few years. It doesn't matter to them, and game developers don't care about anything anymore.


DavidGraham

Veteran

It's 80% faster at "native" 4K; expect this advantage to grow once the path tracing update is live.

Doom: The Dark Ages Benchmarks & PC Performance Analysis

Powered by id Tech 8 Engine, it's time now to benchmark Doom: The Dark Ages and examine its performance on the PC.

www.dsogaming.com


I think the game is probably optimised fine for NV hardware; it's just that the RT here, even on "Ultra Nightmare", is not exactly high-end stuff. RT reflections are in, but they are pretty simple and do not apply to rough materials. Don't forget, the RT effects here were designed to run on old RDNA 1.5/2 consoles, so they have to be ultra-fast, simple-material stuff. The only issue is that, at launch, the RT settings do not scale much higher than that. Path tracing presumably changes that later.


DegustatoR

Legend

CB.de has started using upscaling in all of their benchmarks, and while this is likely closer to how people actually play games these days, it diminishes their GPU testing, as everything above mid-range ends up CPU-limited to various degrees.

If Doom runs similar to The Great Circle, then this one has a very high CPU load in its default console RTGI mode, and you basically have to use the PC-exclusive PT mode to put the bottleneck back onto high-end GPUs.

That being said, the 9070 XT placing above the 5070 Ti is abnormal even with heavy CPU limitation.


Something does look wrong with Doom on Blackwell right now. I see the 4070 matching the 5070 and the 4080 beating the 5080, which should probably never happen.
