
AMD RDNA4 Architecture Speculation

- Thread starter Frenetic Pony


Pixel fillrate makes no sense... 10% higher than N33 but performs >2x better?

I was assuming N48 would have 128 ROPs to hit ~400 GP/s, so a bit under N31.

Yeah probably wrong.
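The ~400 GP/s guess comes from the usual peak-fillrate arithmetic: ROP count times clock. Below is a minimal sketch, assuming each ROP writes one pixel per clock; the 3.0 GHz clock is an assumed value for illustration, not a confirmed N48 spec.

```python
def pixel_fillrate_gpix(rops: int, clock_ghz: float) -> float:
    """Peak pixel fillrate in Gpixels/s, assuming one pixel per ROP per clock."""
    return rops * clock_ghz

# Hypothetical inputs: 128 ROPs at an assumed ~3.0 GHz boost clock.
print(pixel_fillrate_gpix(128, 3.0))  # 384.0, i.e. "~400 GP/s"
```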

arandomguy

Veteran

Hmm... The 9070 XT beat the 5070 Ti in both TFLOPS and TOPS. I wonder what the price will be?

Going by the information currently out there, a $699 MSRP seems likely.

arandomguy

Veteran

I'm guessing they changed it? The videocardz article currently shows 128 ROPs, but based on the pixel fillrate numbers it still works out to 64.
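Working backwards from a quoted fillrate to a ROP count is the same formula inverted: fillrate divided by clock. A minimal sketch; the 160 GP/s input is the 9070 figure quoted elsewhere in this thread, and the 2.5 GHz clock is a hypothetical value chosen for illustration, not a published spec.

```python
def implied_rops(fillrate_gpix: float, clock_ghz: float) -> float:
    """ROP count implied by a peak fillrate, assuming one pixel per ROP per clock."""
    return fillrate_gpix / clock_ghz

# Hypothetical: a quoted 160 GP/s at an assumed 2.5 GHz boost clock.
print(implied_rops(160.0, 2.5))  # 64.0
```

If the same fillrate were paired with 128 ROPs, the implied clock would be only 1.25 GHz, which is why a spec sheet listing both numbers looks inconsistent.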

> 64 ROPs? Seriously? That can't be right, even the 7800 XT has 96. I was hoping for 128, but at ~3 GHz 96 would probably be fine.

Polaris halved the number of ROPs compared to Fiji and yet can be faster in some modern workloads (e.g.). Also, the exact ROP configuration isn't known. RDNA 4 is said to have changed TMUs, so maybe ROPs were updated, too (just a guess, not a hint).


> Why the desire for more ROPs? They don't seem very important these days. All rendering is reconstructed from low resolutions, MSAA is dead, etc.

If ROPs don't matter, then why is everyone increasing ROP throughput every generation?

> Polaris halved the number of ROPs compared to Fiji and yet can be faster in some modern workloads (e.g.). Also, the exact ROP configuration isn't known. RDNA 4 is said to have changed TMUs, so maybe ROPs were updated, too (just a guess, not a hint).

That seems to be due to some artificial limitation in the benchmark for cards with 4 GB of VRAM and under.

Polaris also had quite a few architectural improvements and other changes that helped it perform much more efficiently than Hawaii and Fiji.

> If the number 160 GP/s for the 9070 is correct, that'd be enough for overdrawing 4K 120Hz more than 160 times each frame. I think one could say that's more than enough.

Then one wonders why the RTX 5080 losing 8 ROPs causes a ~14% loss in performance in some situations?

> That seems to be due to some artificial limitation in the benchmark for cards with 4 GB of VRAM and under.

It's not VRAM related. Even the Radeon RX 480 (4 GB) is faster (in this test) than the 4 GB Fury X.

> Polaris also had quite a few architectural improvements and other changes that helped it perform much more efficiently than Hawaii and Fiji.

Doesn't that also apply to RDNA 4?

> Then one wonders why the RTX 5080 losing 8 ROPs causes a ~14% loss in performance in some situations?

The performance loss (14%) is sometimes several times higher than the percentage of missing ROPs (4.5%). So it seems that along with the disabled ROPs, some datapath (or cache?) is also disabled or reduced.
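The "several times higher" comparison is just the ratio of the observed loss to the fraction of ROPs removed. A small sketch of that arithmetic; the thread does not state the total ROP count, so the 176 used here is an assumption, picked only because 8 disabled ROPs out of 176 gives the quoted 4.5%.

```python
def missing_fraction(disabled: int, total: int) -> float:
    """Fraction of ROPs removed."""
    return disabled / total

# Assumption: 176 total ROPs, the count for which 8 disabled ROPs is ~4.5%.
frac = missing_fraction(8, 176)
perf_loss = 0.14  # the ~14% worst-case loss mentioned in the thread

print(f"missing ROPs: {frac:.1%}")                 # missing ROPs: 4.5%
print(f"loss / missing: {perf_loss / frac:.1f}x")  # loss / missing: 3.1x
```

If losses scaled linearly with ROP count, the ratio would be at most about 1x; a ratio near 3x is what prompts the guess that something beyond the ROPs themselves was cut. The arithmetic alone can't say what.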

> It's not VRAM related. Even the Radeon RX 480 (4 GB) is faster (in this test) than the 4 GB Fury X.

From the linked page:

"The 3DMark Steel Nomad requirements for the PC are moderate to low. Windows 10 or 11 is required, apart from that the graphics card must support DirectX 12 with feature level 12_0, which is available from a GeForce GTX 900 and a Radeon R7 260 or an R9 290 (the Radeon R9 270 is not included). A graphics card memory of 6 GB is also required."

They don't specify how much VRAM many of those cards have... so one has to assume they are using the SKU with the most VRAM, which is why they perform better.

Using that synthetic test as proof of anything (other than that old cards are old) is pointless.

> Doesn't that also apply to RDNA 4?

We don't know... at least not yet.

Even if it does, it likely isn't in the same ballpark as the low-hanging fruit from a decade ago.

> Even if it does, it likely isn't in the same ballpark as the low-hanging fruit from a decade ago.

I've been hearing the "low-hanging fruit" argument for about the last 20 years. That alone should disprove it. Game requirements are still changing: polygons are getting smaller, MSAA is becoming irrelevant (unfortunately), AI performance is getting more important, and ray-tracing support is more and more widespread. It makes sense that new architectures may significantly rebalance their resources.

Do not put too much hope or hate into one single number here.

> It's not VRAM related. Even the Radeon RX 480 (4 GB) is faster (in this test) than the 4 GB Fury X.

The ComputerBase test shows the 8 GB RX 5500 XT as 2.47x as fast as the 4 GB version. I'd say that's a strong indicator.

But for the RX 9070 with its 16 GB, that should be of no concern.


If the number 160 GP/s for the 9070 is correct, that'd be enough for overdrawing 4K 120Hz more than 160 times each frame. I think one could say that's more than enough.
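The "more than 160 times" figure checks out: divide the peak fillrate by the pixels per second a 4K 120 Hz target needs. A minimal sketch; it assumes the 160 GP/s number from the post is a sustained peak and ignores blending, MSAA, and any other cost per pixel.

```python
def overdraw_budget(fillrate_gpix: float, width: int, height: int, fps: int) -> float:
    """How many times each pixel could be written per frame at peak fillrate."""
    pixels_per_second = width * height * fps
    return fillrate_gpix * 1e9 / pixels_per_second

# 3840 x 2160 x 120 Hz is ~0.995 Gpixels/s, so 160 GP/s covers it ~160 times.
print(round(overdraw_budget(160.0, 3840, 2160, 120), 1))  # 160.8
```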

Isn't lots of fillrate still helpful for bursty workloads like the depth or G-buffer passes and shadow maps? Maybe fillrate is no longer bound by VRAM bandwidth on GPUs with large caches. Just a guess, though.
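One way to see why large caches could matter here: peak fillrate translates directly into memory traffic, and that traffic can exceed what VRAM delivers. A rough sketch, assuming uncompressed render targets, 4-byte colour and 32-bit depth, and the 160 GP/s figure from the thread; real GPUs compress colour and depth, so these are upper bounds, not measurements.

```python
def fill_bandwidth_gbs(fillrate_gpix: float, bytes_per_pixel: int) -> float:
    """Memory traffic in GB/s if every pixel the ROPs emit goes to DRAM uncompressed."""
    return fillrate_gpix * bytes_per_pixel

FILLRATE = 160.0  # GP/s, the 9070 figure quoted in the thread

# Assumed per-pixel costs (illustrative only).
colour_only = fill_bandwidth_gbs(FILLRATE, 4)         # RGBA8 write
with_depth = fill_bandwidth_gbs(FILLRATE, 4 + 4 + 4)  # RGBA8 write + 32-bit depth read and write
print(colour_only, with_depth)  # 640.0 1920.0
```

If numbers like these exceed the card's VRAM bandwidth, the ROPs can only run near peak while the render target stays resident in on-die cache, which is the scenario the post is guessing at.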


Well, other than vertex computations, almost all shader computations end up with a pixel write-out one way or another, so I think one can look at this as the percentage of time spent in the ROPs. If that's, say, 5%, then doubling the ROPs gives you at most a 2.5% increase in performance, while spending the silicon on other areas could give you more.

I don't really know the numbers, but my instinct is that more than 160 times 4K @ 120Hz for a mid-range GPU is more than enough. I could be wrong, of course.
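The "at most 2.5%" estimate is Amdahl's law applied to the share of frame time spent in the ROPs. A minimal sketch, assuming ROP time is a fixed 5% share that doesn't overlap with other work (in practice it often does overlap, which shrinks the gain further).

```python
def amdahl_speedup(fraction: float, factor: float) -> float:
    """Overall speedup when `fraction` of frame time is made `factor` times faster."""
    return 1.0 / ((1.0 - fraction) + fraction / factor)

s = amdahl_speedup(0.05, 2.0)          # 5% of time in ROPs, ROP throughput doubled
print(f"frame time saved: {1 - 1 / s:.1%}")  # frame time saved: 2.5%
print(f"performance gain: {s - 1:.2%}")      # performance gain: 2.56%
```

Even infinitely fast ROPs would cap the gain at 1 / 0.95, about 5.3%, under the same assumption.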

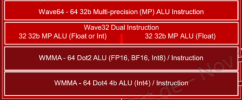

> Wow, INT4 support too. AMD all aboard the AI train. Choo choo! The teraflop spec implies dual issue is still a thing.

RDNA3 already had INT4 support for WMMA ops. But the TOPS rates quoted in those RDNA4 specs are too high even ignoring sparsity. Dedicated WMMA units?
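The dual-issue inference works by comparing a quoted TFLOPS figure against CUs × lanes × 2 (FMA) × clock. A sketch, assuming 64 FP32 lanes per CU as in earlier RDNA generations; the 64 CU / 3.0 GHz configuration is hypothetical, not a confirmed RDNA4 SKU.

```python
def peak_fp32_tflops(cus: int, clock_ghz: float, dual_issue: bool) -> float:
    """Peak FP32 TFLOPS from CU count and clock."""
    lanes_per_cu = 64   # assumption: 2 x SIMD32 per CU, as in RDNA1-3
    flops_per_fma = 2   # a fused multiply-add counts as two FLOPs
    issue = 2 if dual_issue else 1
    return cus * lanes_per_cu * flops_per_fma * issue * clock_ghz / 1000.0

# Hypothetical config: 64 CUs at 3.0 GHz.
print(round(peak_fp32_tflops(64, 3.0, dual_issue=False), 1))  # 24.6
print(round(peak_fp32_tflops(64, 3.0, dual_issue=True), 1))   # 49.2
```

A spec sheet figure close to the second number rather than the first is what suggests dual issue is still being counted.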

> RDNA3 already had INT4 support for WMMA ops. But the TOPS rates quoted in those RDNA4 specs are too high even ignoring sparsity. Dedicated WMMA units?

RDNA3 unexpectedly did not double throughput going from BF16/FP16 to INT8.
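Both points in this exchange come down to per-format throughput per CU per clock: whether INT8 doubles FP16, and whether a sparse TOPS figure, once halved, still exceeds what the dense rate allows. A sketch with hypothetical per-CU rates chosen to show the "INT8 equals FP16" pattern described above; these are not confirmed specs, and the 2x sparsity factor is the common marketing convention, assumed here.

```python
# Hypothetical dense ops per clock per CU (illustrative, not confirmed specs).
RATES = {"FP16": 512, "INT8": 512, "INT4": 1024}

def peak_tops(cus: int, ops_per_clk_per_cu: int, clock_ghz: float, sparse: bool = False) -> float:
    """Peak tera-ops/s; structured sparsity is assumed to be quoted as 2x dense."""
    dense = cus * ops_per_clk_per_cu * clock_ghz / 1000.0
    return dense * 2 if sparse else dense

cus, clock = 64, 3.0  # hypothetical configuration
for fmt, rate in RATES.items():
    print(fmt, round(peak_tops(cus, rate, clock), 1), round(peak_tops(cus, rate, clock, sparse=True), 1))
print("INT8/FP16:", RATES["INT8"] / RATES["FP16"])  # 1.0 means INT8 does not double FP16
```

To test "too high even ignoring sparsity", halve the quoted sparse figure and compare it with the dense estimate; if it is still well above, the per-clock rate must be higher than assumed (for example, from wider or dedicated matrix hardware).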


DegustatoR

Legend

> RDNA3 already had INT4 support for WMMA ops. But the TOPS rates quoted in those RDNA4 specs are too high even ignoring sparsity. Dedicated WMMA units?

See PS5 Pro.
