Very interesting read, TampaM!

There's a lot of wrong information on the DC. A lot of places (like the Sega Retro wiki) say that the PVR in the DC can do things that are exclusive to the Neon 250, like have 2D acceleration or display resolutions like 1600x1200.

I can confirm there is a lot of misinformation on segaretro, they seem to be known to be somewhat unreliable.

A lot of people take this for granted, which is why in a lot of YouTube videos and comments you read stuff like "DC natively renders everything at 1600x1200 internally and then just outputs it at 640x480" and similar claims.

1600x1200 is a PC resolution and absurdly high for a gaming console from 1998. The only source segaretro gives is a web archive containing an age-old article from the website "sega technical pages", which I used to read back then and which has been down for ages now. So it makes sense they would confuse it with the Neon 250, whose specs were altered a lot from the PowerVR CLX2 used in the Dreamcast.

But I think using a bit of logical thinking is enough to disprove the "renders at 1600x1200 internally".

If that were true - why would the Dreamcast's VGA signal output only 640x480?

The VGA box was made to connect to PC monitors, which were perfectly capable of displaying resolutions a lot higher than 640x480 in the DC's days. Most of them went up to 1280x1024, some even to 1600x1200.

So why limit VGA output to 640x480 and go through all the hassle of using an absurdly high resolution which costs a ton of resources, then going through the process of downsampling it, and then only output it as 640x480? Makes no sense at all.

Also, when using a VGA box you can easily see with your own eyes that there is no AA at all - aside from 4 games I know of (excluding maybe homebrew) that do horizontal 2xSSAA in the form of 1280x480.

1600x1200 is not even an integer multiple of 640x480 (as 1280x960 would be). You would get artifacts downsampling that resolution to 640x480.
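To put numbers on the scale factors, here is a small sketch. The function names are made up for illustration and it only does arithmetic on the resolutions mentioned in this thread; it says nothing about how the hardware actually scales.

```python
from fractions import Fraction

def scale_factors(src, dst):
    """Exact horizontal and vertical scale factors from src down to dst."""
    return Fraction(src[0], dst[0]), Fraction(src[1], dst[1])

def is_integer_multiple(src, dst):
    """True if every output pixel covers a whole number of source samples."""
    sx, sy = scale_factors(src, dst)
    return sx.denominator == 1 and sy.denominator == 1

target = (640, 480)
for src in [(1280, 480), (1280, 960), (1600, 1200)]:
    sx, sy = scale_factors(src, target)
    print(f"{src[0]}x{src[1]} -> {target[0]}x{target[1]}: "
          f"{float(sx)} x {float(sy)}, integer: {is_integer_multiple(src, target)}")
```

1600x1200 comes out at 2.5 in both directions, so each output pixel would straddle partial source samples, while 1280x480 and 1280x960 divide cleanly.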

BTW, segaretro has a lot of other things wrong.

The claim that the DC does up to 5 Million polygons per second ingame, for example. Only source given: an age-old article written for a gaming magazine that claims (!) that the developers claim (!) the game does 5 Million.

I mean what is the average size of memory required for a polygon in the display list? 32 bytes? ~1 vertex per polygon, UV coordinates and what not going into the display lists...

And to my knowledge after reading some of the manuals, you would store it twice in VRAM, so that the Tile Accelerator can write one copy while the rendering core reads the other in parallel, so you get twice the memory usage if I got that right.

So running at 30fps, that alone would use more than 5 MB of the DC's 8 MB VRAM. Plus the 1.17 MB double framebuffers, you have only 1.83 MB left for everything else. Texture data alone is way more than that (VQ texture compression already considered), so 5 Million just can't be true for this game.
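The back-of-the-envelope budget can be written out as a quick sketch. Everything in it is an assumption taken from this post rather than a measured figure: 32 bytes per polygon, 30 fps, two 640x480 framebuffers at 16 bits per pixel, and 8 MB of VRAM. The `list_copies` parameter is a hypothetical knob for the double-storing I described above.

```python
MIB = 1024 * 1024

def vram_budget(polys_per_second=5_000_000, fps=30, bytes_per_poly=32,
                list_copies=1, vram_mib=8,
                width=640, height=480, bytes_per_pixel=2, framebuffers=2):
    """Rough VRAM split between display list, framebuffers and the rest."""
    # Display list size for one frame, times the number of stored copies.
    display_list = polys_per_second / fps * bytes_per_poly * list_copies
    # Assumed 16-bit colour, double buffered.
    framebuffer = width * height * bytes_per_pixel * framebuffers
    remaining = vram_mib * MIB - display_list - framebuffer
    return {
        "display_list_mib": display_list / MIB,
        "framebuffer_mib": framebuffer / MIB,
        "remaining_mib": remaining / MIB,
    }

for copies in (1, 2):
    b = vram_budget(list_copies=copies)
    print(f"list copies={copies}: "
          f"display list {b['display_list_mib']:.2f} MiB, "
          f"framebuffers {b['framebuffer_mib']:.2f} MiB, "
          f"left over {b['remaining_mib']:.2f} MiB")
```

With a single copy of the list the leftover is already under 2 MiB, and if the list really is stored twice under these assumptions, the total would not fit in 8 MB at all.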

The Dreamcast was and is a marvellous piece of hardware, and I think that is the reason why people just take these claims for granted and spread them all around:

They just WANT it to be true (and DC besting the PS2). ; D

Another example for misinformation on segaretro would be the Sega Model 3 technical specs.

It says that Model 3 Step 2, for example, has 6 (!) GPUs in the form of 6 Real3D-pro 1000 GPUs (chip number 315-6060).

Model 3 does not even have a GPU. GPUs were a later thing; the Real3D-pro is an image generating system spanning several PCBs with a dozen specialized chips for different functions, not a GPU. And Model 3 does not use 6 (!) Real 3D image generators, each consisting of several boards. Model 3 instead IS a (slightly modified) Real 3D-pro.

They cannot even count right. There are not 6 chips with number 315-6060 present, as you can tell if you just look at the Model 3 Step 2 PCB! There are only 4, and those are not GPUs, but texturing units!

There is a lot more wrong info in that article. I looked through all the source material, which simply does not support those claims. It does not add up.

So a lot of misinformation here.

people hadn't really settled on what exactly certain terms mean. In the 90s, you might see people calling bilinear filtering a form of antialiasing, which isn't entirely inaccurate, but that's not how people would describe it now.

Yes, in the early 90s, texture filtering was also called "texture antialiasing" in some cases. I mean you could call it that, but only a short time after, nobody would.

SSAA does indeed reduce aliasing on edges, so someone in the 90s might call it edge antialiasing

Hmmmmm... I never heard the term Edge Anti-Aliasing used for supersampling!

I mean SSAA works on the whole image, and there already were AA techniques working on edges only back then.

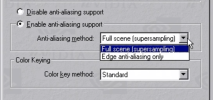

So especially on hardware like the Dreamcast, which also supports SSAA, it would be strange to claim it supported both SSAA/FSAA AND Edge Anti Aliasing, if both were the same.

Well, more on that below!

The Dreamcast has nothing that we would now call hardware edge antialiasing. It just has supersampling.

Good that that is finally cleared up.

The only sources saying DC supported Edge Anti Aliasing would be - again - segaretro and the following document they give as a source:

Here on pages 3 and 21, it says it supports Edge Anti Aliasing as polygon function (right next to "Bamp"-Mapping - lol).

I never found it mentioned for Dreamcast anywhere else in the document or in any other source.

It is possible for the PVR to render at resolutions greater than 1280x480, but it requires software workarounds that make it less efficient. I've done 640x480 with 4x SSAA (1280x960) by rendering the screen in two halves, top and bottom, but this requires submitting geometry twice.

I saw you mentioning this in another thread.

Resubmitting geometry meaning out of the VRAM again? That would cost bandwidth...

It seems like 1280x480 would be relatively (!) cheap on DC compared to other GPU architectures since it was done using the tile buffers, if I understand correctly.

So not much of a bandwidth hit here, and you said it only increases VRAM usage slightly.

But what about fillrate cost?

Perhaps that was the reason why only a handful of commercially released games used it - and the ones that did were perhaps heavily CPU-limited anyway.

That, and also because it would be a suboptimal increase in image quality...

Textures from the time were not shimmering as much as later texture content anyway, and DC had good texture filters in place to handle this.

For edges, the SSAA was effectively ordered grid supersampling, which is not optimal for edge smoothing (a rotated grid with two subpixels would be much more effective, but was only done in later hardware). Also, the horizontal direction would be less important on CRT displays from back then - vertical supersampling would be much more important, but was not done in any game due to the TA buffer limit.

One minor issue with vertical downsampling is that the PVR can't do a 2 pixel box filter, it always does a 3 pixel filter, so there's some slight additional vertical blur, but it's not bad. The user can specify one weight for the center sample, and one weight for the upper and lower sample. Horizontal downsample is always a box filter.

The attached image shows 4x SSAA on the DC. For testing, I deliberately used a different color for the background on both halves to show which render each half belongs to, but it would be seamless if I used the same colors. IIRC, the vertical downsample uses a 50% weight for the center sample, and 25% for the top and bottom samples.
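To get a feel for what that filter does to an edge, here is a plain-Python sketch of the two downsampling steps described above. It is not PVR code: the function names are made up, and the choice of which row counts as the center sample and how the image borders are clamped are assumptions for illustration only.

```python
def downsample_horizontal_box(row):
    """Halve a row with a 2-sample box filter (plain average of each pair)."""
    return [(row[i] + row[i + 1]) / 2 for i in range(0, len(row) - 1, 2)]

def downsample_vertical_3tap(column, center_weight=0.5, side_weight=0.25):
    """Halve a column with a 3-tap filter: upper, center and lower sample.

    Assumption: the center tap sits on every even source row and the
    borders are clamped to the nearest valid row.
    """
    last = len(column) - 1
    out = []
    for y in range(0, len(column), 2):
        upper = column[max(y - 1, 0)]
        lower = column[min(y + 1, last)]
        out.append(side_weight * upper + center_weight * column[y] + side_weight * lower)
    return out

# A hard black-to-white edge, 8 source rows down to 4 output rows.
edge = [0, 0, 0, 0, 255, 255, 255, 255]
print("3-tap 25/50/25:", downsample_vertical_3tap(edge))
print("2-tap box:     ", downsample_horizontal_box(edge))
```

Running the box filter on the same values shows the difference: the box keeps this particular edge perfectly sharp, while the 3-tap filter pulls in a neighbouring sample and softens it slightly, which would match the "slight additional vertical blur".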

That exactly sounds like the way the Dreamcast handles the deflickering on interlace output!

Did you use it for your 4x SSAA?

Was it even feasible to use it for a resource-demanding game, or were you merely testing whether it worked at all?