https://techenclave.com/threads/the-rtx-5090-benchmarks-are-here-early-confirmed-leaks.224832/ Generally expected, but pretty meh.


Something is confusing me. Does DLSS @ 4K perform worse than native 4K in Indiana Jones?

Man from Atlantis

Veteran


The link is unfortunately dead. However, another forum member compared the 5090 results with the previous 4090 results on the same site.

The 5090 FE underwent a FurMark stress test. Apparently, the card doesn’t sound like a vacuum cleaner, but to me, the sound profile is just as important as the dB level. By the way, the GDDR7 hotspot temperatures exceed 90°C.

Also, the onboard power delivery profile is similar to the 4090's: PCIe slot power is reserved for auxiliary loads such as fans, LEDs, PLL, M.2 NVMe, etc., and maxes out at around 20 W, while the rest comes through the 12V-2x6 cable.
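To put the ~20 W slot figure in context, here is a rough sketch of how much the 12V-2x6 cable would then carry. It assumes the card draws its full rated 575 W board power and that the connector is rated for 600 W; neither figure comes from the post, and the function name is hypothetical.

```python
def cable_load(board_power_w: float, slot_power_w: float,
               connector_rating_w: float = 600.0, rail_v: float = 12.0):
    """Split board power between PCIe slot and 12V-2x6 cable.

    Assumptions: everything not drawn from the slot goes through the cable,
    the connector rating is 600 W, and the cable runs at a nominal 12 V.
    Returns (cable watts, fraction of connector rating, total cable amps).
    """
    cable_w = board_power_w - slot_power_w
    return cable_w, cable_w / connector_rating_w, cable_w / rail_v

# Assumed 575 W board power, ~20 W from the slot as reported above.
cable_w, utilisation, amps = cable_load(575, 20)
print(f"cable: {cable_w:.0f} W, {utilisation:.1%} of rating, {amps:.2f} A at 12 V")
```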


DegustatoR

Legend

DLSS4 DLLs are out in CP2077 update 2.21: https://store.steampowered.com/news/app/1091500/view/538843836072329454?l=english

Doesn't seem to be THAT much more expensive to run on Lovelace.

- Added support for DLSS 4 with Multi Frame Generation for GeForce RTX 50 Series graphics cards, which boosts FPS by using AI to generate up to three additional frames per traditionally rendered frame (enabled with GeForce RTX 50 Series on January 30th). DLSS 4 also introduces faster single Frame Generation with reduced memory usage for RTX 50 and 40 Series. Additionally, you can now choose between the CNN model or the new Transformer model for DLSS Ray Reconstruction, DLSS Super Resolution, and DLAA on all GeForce RTX graphics cards today. The new Transformer model enhances stability, lighting, and detail in motion.

- Fixed artifacts and smudging on in-game screens when using DLSS Ray Reconstruction.

- The Frame Generation field in Graphics settings will now properly reset after switching Resolution Scaling to OFF.
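As a quick illustration of what "up to three generated frames per rendered frame" means for the displayed frame rate, here is an idealised sketch. It assumes frame generation itself costs nothing, so the rendered rate stays fixed; in real games the generation overhead lowers the rendered rate somewhat. The function name is hypothetical.

```python
def displayed_fps(rendered_fps: float, generated_per_rendered: int) -> float:
    """Idealised output frame rate with frame generation.

    Assumption: each rendered frame is followed by `generated_per_rendered`
    AI-generated frames and generation has zero cost, so the rendered rate
    is unchanged.
    """
    if not 0 <= generated_per_rendered <= 3:
        raise ValueError("DLSS 4 MFG generates at most 3 frames per rendered frame")
    return rendered_fps * (1 + generated_per_rendered)

for mode, n in [("off", 0), ("2x (single FG)", 1), ("3x", 2), ("4x", 3)]:
    print(f"{mode:>15}: {displayed_fps(60, n):.0f} fps")
```

With 60 rendered frames per second, the four modes come out at 60, 120, 180, and 240 displayed frames per second under this zero-overhead assumption.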


It shouldn't be much about architecture, but about raw power. E.g., a 2060 will probably take a harder penalty than a 2080 Ti, etc.

Dammit... now I have to game this evening and test.

/eyes the wife

I don't know, DLSS in the past has never been one to stress overall compute power differences much at all. Tensor power per SM generally seems to be the biggest factor, and that is architecture-based.

We'll find out pretty quickly, though. My guess is that the relative performance loss even over different architectures stays in a fairly close ballpark, not differing too radically. I really doubt this model is stressing these tensor cores so hard that Turing/Ampere are gonna struggle with it. That is just a guess, though.


The thing that stands out to me is how bad native + TAA looks.

I meant raw tensor power, not shader power. The Transformer model is 4x heavier than the CNN model.

DegustatoR

Legend

5090 FE reviews are online: https://videocardz.com/195043/nvidia-geforce-rtx-5090-blackwell-graphics-cards-review-roundup

Man from Atlantis

Veteran

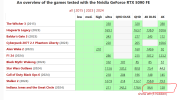

TPU has a nice comparison table showing the performance of CNN and TNN models for the 5090 and 4090. As expected, the 4090 experiences a more significant performance hit when switching from CNN to TNN on DLSS Q/B/P presets.

www.techpowerup.com


NVIDIA GeForce RTX 5090 Founders Edition Review - The New Flagship

NVIDIA's GeForce RTX 5090 is the fastest graphics card ever released. It comes with a whopping 32 GB VRAM, and support for multi-frame generation, which achieves hundreds of FPS easily. We also managed to disassemble the card, and our review includes performance testing without upscaling or...

Right, but simply having more tensor cores on the die has never seemed to improve DLSS performance in any consistent way within a given architecture. They don't seem to act together as one large block working on a single workload, which is why, at least so far, tensor core performance per SM has seemed more relevant. Meaning it's really the architecture that makes the difference.

DegustatoR

Legend

It should be about architecture, because it is supposedly using the newest Blackwell tensor precision and the way the tensor cores are coupled with shading.

In fact, there were changes in tensor features between all RTX generations, so I hope someone will check how it runs on all of them.

Just browsed TPU so far, but it looks like there's some meat on those bones: 35-50% increases at 4K in games where it matters. It's wild that Counter-Strike saw the biggest increase, though. So not bad, but not amazing given the price increase. More than enough for an upgrade from my 3090.

We'll see how the AIB models fare. Not excited about 40 dB fan noise on the FE.


DegustatoR

Legend

Yeah, the results would be okay if it cost the same as the 4090. At $2000, though, that's basically zero perf/price change.
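The perf/price point can be made concrete with a little arithmetic. This sketch assumes launch MSRPs of $1,599 (4090) and $1,999 (5090), and uses the ~35% average 4K uplift from TPU mentioned in this thread; street prices will shift the result. The helper name is hypothetical.

```python
def relative_perf_per_dollar(perf_ratio: float, old_price: float, new_price: float) -> float:
    """Perf/$ of the new card relative to the old one (1.0 = no change)."""
    return perf_ratio / (new_price / old_price)

# Assumed inputs: launch MSRPs in USD and a ~35% average 4K uplift.
rtx_4090_msrp = 1599
rtx_5090_msrp = 1999
uplift_4k = 1.35

ratio = relative_perf_per_dollar(uplift_4k, rtx_4090_msrp, rtx_5090_msrp)
print(f"price increase: {rtx_5090_msrp / rtx_4090_msrp - 1:.1%}")
print(f"perf/$ change:  {ratio - 1:+.1%}")
```

At these MSRPs the price goes up about 25.0% and perf per dollar improves by about 8.0%.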

Almost feels like they should've made a 5080Ti on GB202 to directly replace the 4090 at its price point.


TPU shows a 35% average uplift at 4K.


Let's not mislead here.

The reviews for the lesser Blackwell GPUs are gonna be ugly. It's no wonder Nvidia had to give a small (and insufficient) shave to pricing.

If you want an NVIDIA RTX 50 Series, you're going to have to arm yourself with patience and luck because everything points to supply problems.

Charlietus

Regular

As I wrote some time ago, this is troubling for the future, if it wasn't already clear. It costs 25% (if you can even get the FE cards, which aren't easy to find) to 35%+ more than a 4090, while consuming ~25% more power and having ~25% more cores.

And of course, all of that for ~25-35% more performance at 4K.

There is no efficiency uplift from the architecture itself, and pretty much no perf/watt advancement. Nvidia has hit a wall. That means the low end will continue to suck, while the high end will get minor advancements that consumers will pay higher and higher prices for.

Outside of node shrinks (and those may be coming to an end), the future is bleak.
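The perf/watt claim follows directly from the rounded figures in this post. This sketch takes ~25% more power and a 25-35% 4K performance uplift as given (the poster's approximations, not measured values) and computes the resulting efficiency change. The helper name is hypothetical.

```python
def efficiency_change(perf_ratio: float, power_ratio: float) -> float:
    """Relative perf/W of the new card vs. the old one (1.0 = no change)."""
    return perf_ratio / power_ratio

power_ratio = 1.25  # ~25% more power (rounded figure from the post)
for perf_ratio in (1.25, 1.35):  # the post's 25-35% 4K uplift range
    change = efficiency_change(perf_ratio, power_ratio) - 1
    print(f"+{perf_ratio - 1:.0%} perf at +{power_ratio - 1:.0%} power -> perf/W {change:+.0%}")
```

Under these assumptions, perf/W ranges from unchanged to roughly 8% better, depending on where a game falls in the uplift range.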
