Yeah, the shader count "only" goes up by about 27%, although the clocks drop by around 5%, so the effective CUDA / shading rate seems to only grow by maybe 25% total. The really BIG gain is the memory bandwidth, which is a nearly 80% bump from the 4090. This likely matters less for the typical rasterized scene, but I wager it matters a lot more for FP32 and especially FP64 functions where the loads/stores are significantly larger and will burn through said bandwidth. I wager this extra bandwidth also measurably enhances raytracing performance for the same reasons, but I guess we'll have to wait and see.
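To put rough numbers on the scaling above, here is a minimal Python sketch. The 27% and 5% figures are the post's own estimates. The bus widths and per-pin data rates (384-bit at 21 Gbps for the 4090, 512-bit at 28 Gbps for the 5090) are assumed from the published spec sheets and are not stated in this thread. With those rounded inputs the compounded shading rate lands nearer 21% than 25%, so treat both as ballpark figures.

```python
def compound_scaling(unit_gain: float, clock_change: float) -> float:
    """Relative throughput when unit count and clock both change."""
    return (1.0 + unit_gain) * (1.0 + clock_change)


def bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bytes per transfer times per-pin rate."""
    return bus_width_bits / 8 * data_rate_gbps


# 27% more shaders, about 5% lower clocks (the post's estimates).
shading = compound_scaling(0.27, -0.05)

# Assumed spec-sheet values, not taken from this thread.
bw_4090 = bandwidth_gb_s(384, 21.0)
bw_5090 = bandwidth_gb_s(512, 28.0)

print(f"shading rate: {shading - 1:+.1%}")
print(f"bandwidth: {bw_4090:.0f} -> {bw_5090:.0f} GB/s ({bw_5090 / bw_4090 - 1:+.1%})")
```

The bandwidth ratio from these assumed figures is in line with the "nearly 80%" quoted above.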


I think if you already have a 4090 then the 5090 is probably not that big an upgrade (as always). However, the 4090 is quite bandwidth limited in many games at 4K, so the greatly increased bandwidth of the 5090 may help a lot. Also, if you like to run path-traced games, the 5090 may be a healthy upgrade.

Well Nvidia decided to mask any performance gains in heavy RT titles behind MFG so we’ll get a better sense of the real uplift in a few weeks.

DegustatoR

Legend

In a week, actually.

I'm seriously considering a move from the 4070Ti to a 5080. I reckon I'll get at least 40% more performance there in non-VRAM-limited scenarios, maybe more in titles with heavy RT or that take advantage of the new Blackwell features. And in those titles that hit VRAM limits I'm hoping I'll no longer have to compromise on texture settings.

Plus the 3x FG should allow me to hit my monitor's 144 Hz limit in most games where it's available.

That would be a solid upgrade and get you out of the VRAM concerns. I'm slightly tempted to go from my 4070 to a 5070Ti. The 4070Ti Super is the card I would've gotten this generation had it existed at the time. I've found 12GB to be a limiting factor even on my 4070 in some games.

TBH at this point I'll wait for the 3GB modules and see what happens, whether it's a Super refresh or RTX6000. But I promise I won't sit on the 4070 as long as I sat on the 970. Now, 970 to 4070 was a hell of an upgrade.

> If those die sizes are accurate then Nvidia somehow managed to cram fatter SMs and 4 more of them into the same area. I would be surprised if they didn’t sacrifice L2 to make room and lean more heavily on GDDR7.

I have been keen to find out the L2 cache size for months, and I agree it’s a bit odd to leave it out. They would have had to shrink the relative size of the cache footprint to keep the same ratio on GB202 (i.e. 128MB). That's not impossible (AMD did it with Zen 5), but once we knew the chip was 22% bigger it never seemed likely. My guess is it’s still 96MB (maybe even a bit smaller) and they don’t want silly stories about less cache for more money or some such nonsense. Worth noting that unless they needlessly disable cache on the 5090 again, it’ll probably end up with more overall than the 4090 (even if the amount of L2 per SM is a bit smaller).

Edit: Replied before seeing the post on the next page, no surprises there.
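The 128MB figure falls out of simple proportional scaling. A quick sketch, assuming full-die counts of 144 SMs and 96MB of L2 for AD102 and 192 SMs for GB202 (these counts are assumed from public die specs and are not stated in this thread):

```python
def l2_for_same_ratio(old_l2_mb: float, old_sms: int, new_sms: int) -> float:
    """L2 size needed on the new die to keep L2-per-SM constant."""
    return old_l2_mb * new_sms / old_sms


AD102_L2_MB, AD102_SMS = 96.0, 144  # assumed full-die figures
GB202_SMS = 192                     # assumed full-die figure

needed = l2_for_same_ratio(AD102_L2_MB, AD102_SMS, GB202_SMS)
per_sm_if_unchanged = AD102_L2_MB / GB202_SMS

print(f"L2 needed to hold the ratio: {needed:.0f} MB")
print(f"L2 per SM if it stays at 96 MB: {per_sm_if_unchanged:.2f} MB")
```

If the L2 really does stay at 96MB, the per-SM share drops accordingly, which is the trade-off guessed at above.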

It’s all about what one’s expectations are ultimately - the 4090 probably set an unrealistic bar for generational uplift for many consumers. NVIDIA has made clear that they see software features as equally important selling points, which is a smart move considering transistor costs aren’t going down and generational gains in “pure” performance from node shrinks are diminishing.

It’s why I look at HUB posting that framegen has no effect on “performance”, it’s simply a “frame smoothing” system, and see that the very way people talk about performance needs to shift (and already is). Dismissing frame gen as “nothing more than frame smoothing” is only marginally more helpful than saying it increases performance and also misses how impactful it can be when utilized ideally (when the native framerate is at least 60fps). Vendors now offer appreciable differences (and innumerable permutations thereof) in framerate, latency, and visual quality. It’s getting much harder to purely quantify these cards in “performance” terms across generations and vendors. I think some reviewers are realizing this, like DF, and trying to grapple with how to adjust their process accordingly. I’ll be interested to see how it evolves.

DavidGraham

Veteran

With DLSS4, NVIDIA wants reviewers to shed light on a new performance metric, the "MsBetweenDisplayChange", instead of the old "MsBetweenPresents".

The old metric, "MsBetweenPresents", measures the time between frame presentations inside the GPU and render pipeline; it's how we have always measured fps. The new metric, "MsBetweenDisplayChange", measures the time between frames being displayed on the screen; it's the actual measure of the smoothness of the frames delivered to the end user, and it's what the end user feels in the end.

Igor's Lab did a piece on this and found several differences between the new "Display" and old "GPU" measurements.

www.igorslab.de


With the introduction of new technologies such as DLSS 4 and Multi Frame Generation, MsBetweenDisplayChange is playing an increasingly central role. In scenarios where multiple frames are generated per rendered image, such as Multi Frame Generation, MsBetweenDisplayChange enables the evaluation of the actual image display by ensuring that the additional frames generated are precisely synchronized and evenly displayed. An uneven display would lead to noticeable jerks, even if MsBetweenPresents signals a high frame rate.

MsBetweenDisplayChange is essential for evaluating the actual user experience

A look into the lab: Correctly recording render time, time between actual image changes and variances in benchmarks | Page 2 | igor´sLAB

In order to precisely measure the performance of graphics cards, the use of specialized benchmarking software is essential. These programs log demanding graphics processes in order to test the…
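To make the distinction concrete, here is a minimal sketch of how two interval series can report the same average frame rate while one of them is paced unevenly. The sample values are made up for illustration, and the helper names are hypothetical; only the two metric names come from the discussion above.

```python
from statistics import pstdev


def average_fps(intervals_ms):
    """Average frame rate over a run of frame-to-frame intervals."""
    return 1000.0 * len(intervals_ms) / sum(intervals_ms)


def pacing_jitter_ms(intervals_ms):
    """Population standard deviation of the intervals; 0 means perfectly even."""
    return pstdev(intervals_ms)


# Made-up capture: same total time, different cadence.
ms_between_presents = [10.0] * 6
ms_between_display_change = [5.0, 15.0] * 3

for name, series in [
    ("MsBetweenPresents", ms_between_presents),
    ("MsBetweenDisplayChange", ms_between_display_change),
]:
    print(f"{name}: {average_fps(series):.0f} fps, "
          f"jitter {pacing_jitter_ms(series):.1f} ms")
```

Both series average 100 fps here, but only the display-side series exposes the alternating 5 ms / 15 ms cadence that a viewer would perceive as judder.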

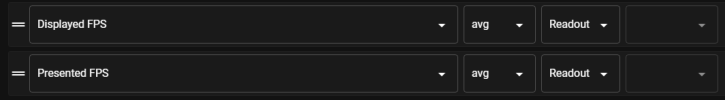

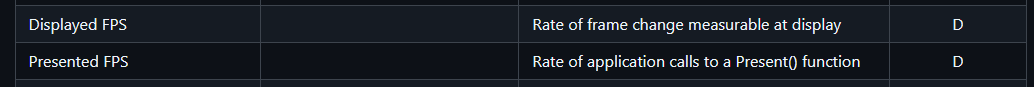

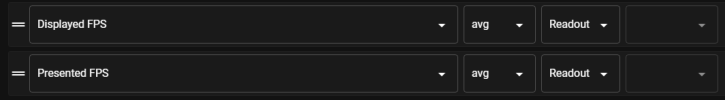

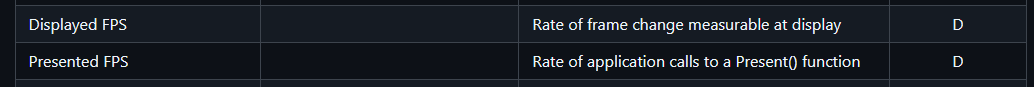

@DavidGraham Interesting article, but I want to clarify that PresentMon 2.3 (I think probably any 2.x version) allows you to measure Displayed FPS or Presented FPS. You can select 1% lows, average etc. I was always curious about that distinction, but now I know.

github.com


They also have Frame Time which is the time between frames on the CPU and Displayed Time which is the time a frame is visible on the display.

A good review of what PresentMon is doing.

PresentMon/README-CaptureApplication.md at main · GameTechDev/PresentMon

Capture and analyze the high-level performance characteristics of graphics applications on Windows. - GameTechDev/PresentMon


> No, I can't. The architecture is different enough to avoid applying previous patterns to how it will perform. Shading cores seem to be different, RT units are different, tensor h/w is different, even memory controllers are different again.

The specs strongly suggested it. Nvidia's own performance claims are confirming it.

You know it. Deep down, you know it, no matter how much you type words on a screen that belie that you know.

> GB203 in particular looks like a straight rehash of AD103 -- similar die size, transistor density, clock rates and a minor bump in SM count. The GDDR7 support is a bright spot for bandwidth bottleneck situations.

Nope, I'm assured by people here that die sizes have absolutely nothing to do with anything at all.

TSMC wafer rates for the latest nodes must be really eye-watering, even for Nvidia.


Why is Displayed FPS not a good measure of render queue bottlenecks (according to Igor)? You can’t display frames faster than you present them so any bottleneck should show up there too.

Wouldn't the spikes be smoothed out though with FG? i.e. if rendered frame 1 takes 10ms and rendered frame 2 takes 20ms, then that would be uneven without FG, but in theory would MFG not be able to slot 3 frames in the middle and display all 5 frames for 6ms each?

Wouldn't that require knowing what frame 2's rendering time will be before it had even started rendering frame 1?

Yes FG can smooth out frametimes somewhat but it can’t improve overall throughput (avg FPS). It makes sense to use display time for 1% lows but I don’t get the comment about bottlenecks not showing up in display metrics.


You generate frames 1.1, 1.2 and 1.3 after frame 2 has already been rendered. So you have all the info you need to meter those 4 frames.
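The 6 ms figure from the thought experiment above can be checked with a small sketch. This is an idealized model of the example in this thread, not a description of how DLSS actually meters frames, and the function name is hypothetical.

```python
def even_display_interval_ms(render_times_ms, generated_frames):
    """Display interval if rendered plus generated frames are paced evenly
    across the time it took to render the real frames."""
    displayed = len(render_times_ms) + generated_frames
    return sum(render_times_ms) / displayed


render_times = [10.0, 20.0]  # frame 1 and frame 2 from the example
interval = even_display_interval_ms(render_times, generated_frames=3)

# Rendered throughput is unchanged by the generated frames.
rendered_fps = 1000.0 * len(render_times) / sum(render_times)

print(f"{interval:.1f} ms per displayed frame")
print(f"{rendered_fps:.1f} rendered fps")
```

The displayed cadence evens out to 6 ms, while the rendered frame rate stays at roughly 67 fps. Because the in-between frames can only be metered once frame 2 exists, this pacing comes at the cost of holding frame 2 back.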


Man from Atlantis

Veteran

Blackwell SM:

GB202

www.hardwareluxx.de


NVIDIA Editors Day: Details zur Blackwell-Architektur und der GeForce RTX 50 - Hardwareluxx

NVIDIA Editors Day: The details on the Blackwell architecture and the GeForce RTX 50 cards.

DegustatoR

Legend

CUDA Driver will continue to support running 32-bit application binaries on GeForce RTX 40 (Ada), GeForce RTX 30 series (Ampere), GeForce RTX 20/GTX 16 series (Turing), GeForce GTX 10 series (Pascal) and GeForce GTX 9 series (Maxwell) GPUs. CUDA Driver will not support 32-bit CUDA applications on GeForce RTX 50 series (Blackwell) and newer architectures.

Man from Atlantis

Veteran