> It kinda works, but in the same session you can get a "calibrated" HDR experience with a value of 2700, and once you complete that you try again, and the calibration now gives you a value of 1500 as the best value for your display. It's so random.

I've never seen this; it sounds like you have dynamic tone mapping on. You need to disable that when calibrating, otherwise the TV is going to continuously tone map the test patterns.


HDR settings uniformity is much needed

- Thread starter RobertR1

- Start date


One of the many things I learned today about the HDR mess!

DavidGraham

Veteran

> It only needs the 2nd monitor disconnected for RTX HDR in games. RTX HDR for video works fine with multiple monitors connected to the NVIDIA GPU.

But I thought NVIDIA added multi-monitor support for RTX HDR 3 months ago!

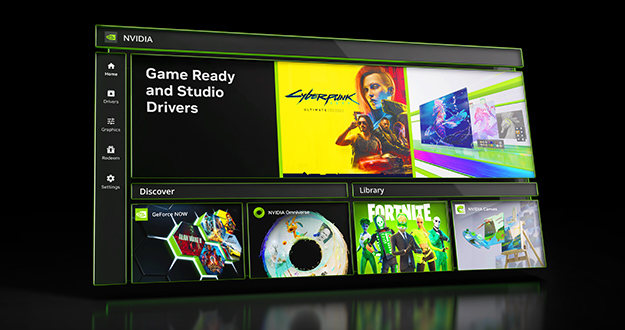

RTX HDR can now be enabled on systems with multiple displays

NVIDIA App Beta Adds G-SYNC Controls, RTX HDR Multi-Monitor Support & More

The essential companion for PC gamers and creators is further enhanced by the addition of new features and user-requested options.

www.nvidia.com

> One of the many things I learned today about the HDR mess!

It doesn’t help that this is never explained, and learning about it would require understanding a lot more about the HDR pipeline than 99% of people do lol. We really need more standardization and clarity on gaming HDR.

Frankly after reading about it for a while I still barely understand how all this interacts lol.

> Btw HDR on Android is also shitty, even on Android 15 on a Google Pixel 6.
>
> It requires a per-app HDR flag. Even Chrome doesn't have HDR enabled.
>
> Instagram HDR is region locked.

Android and Chrome for what? This is misrepresenting. Android TV on the Shield is just fine, providing HDR10/HDR10+/Dolby Vision without issue. Chrome provides HDR10 on Windows devices just fine for YouTube, Kodi, Netflix etc.

> But I thought NVIDIA added multi monitor support for RTX HDR 3 months ago!

Yes, I've been using that with a second monitor attached for months. You may need to check your NVIDIA App version (it is separate from the drivers, and older versions of the NVIDIA App do not seem to auto-update).

> Android and Chrome for what? This is misrepresenting. Android TV on the Shield is just fine, providing HDR10/HDR10+/Dolby Vision without issue. Chrome provides HDR10 on Windows devices just fine for YouTube, Kodi, Netflix etc.

For example, on a Google Pixel 6 with Android 15, opening YouTube in Chrome would not have HDR, while the YouTube app has HDR.

Android TV is just one OS branch (Android TV, the Android watch wear thingy, Android Automotive, Android phones and tablets).

Best OS-level HDR experience by far is on Apple's iPad Pro 2, iPhone and MBP. No user intervention required.

Windows is a disaster as always. I don’t envy anyone not into the AV hobby trying to navigate HDR on the PC. It's compounded by game devs just making up HDR sliders and terminology on the fly. Good luck everyone.

> Best OS-level HDR experience by far is on Apple's iPad Pro 2, iPhone and MBP. No user intervention required.
>
> Windows is a disaster as always. I don’t envy anyone not into the AV hobby trying to navigate HDR on the PC. It's compounded by game devs just making up HDR sliders and terminology on the fly. Good luck everyone.

I don't think Windows is the problem here. Nobody even implements the Windows HDR calibration data anyway; even first-party MS games use the stupid sliders!

> I've never seen this, it sounds like you have dynamic tone mapping on. You need to disable that when calibrating otherwise the TV is going to continuously tone map the test patterns.

Thanks! How do you do that? I am usually fiddling with the display settings but I don't see an option for that on my Samsung TV, nor on my monitor.

I disabled HDR on my 1440p 165Hz HDR 400 monitor. It has mediocre HDR but the SDR is really good.

Plus, I ordered a 360Hz Alienware AW2523HF monitor and it's also good at SDR. Dell is my favourite company since their customer service has always been really good to me, and I am going to use both monitors in SDR mode in a dual monitor setup.

I only enable HDR on my 4K TV, but I use it very little for gaming as of late.

HDR adds some extra input lag and a VRAM footprint, so it's ok on my TV but not on the other displays which have higher refresh rates.


Great investigative work by Tim at Monitors Unboxed on QD-OLED panel dimming in HDR. He even taught the guys at TFTCentral a thing or two!

Still can’t wrap my head around HDR in games. My understanding is that Windows will tone map an SDR game when running in HDR mode such that the game is displayed in standard dynamic range and colors and therefore still looks decent.

I tried this with Borderlands: The Pre-Sequel, which to my knowledge doesn’t support HDR natively. Auto HDR and RTX HDR are disabled. However, it was significantly brighter and colors were more saturated compared to disabling HDR in Windows. What’s more, enabling RTX HDR on top of that doesn’t change anything. It’s almost like Auto HDR is working even when it’s turned off. So confusing.

Is it expected that SDR games get brighter and more saturated without help from Auto HDR or RTX HDR?
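One possible contributor to the brightness part of the question above, sketched under assumptions: in HDR mode the OS has to decide how many nits SDR white gets, and Windows exposes an "SDR content brightness" setting for that. The sketch below decodes an sRGB code value and scales it by a chosen white level. `sdr_pixel_nits` and the white levels used are hypothetical illustrations, not the actual Windows compositor math, and this does not address the saturation change.

```python
def srgb_to_linear(code: int) -> float:
    """Decode an 8-bit sRGB code value (0-255) to linear light in [0, 1]."""
    c = code / 255.0
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def sdr_pixel_nits(code: int, sdr_white_nits: float) -> float:
    """Luminance of a gray SDR pixel when SDR white sits at sdr_white_nits.

    sdr_white_nits is a hypothetical stand-in for whatever level the OS
    brightness setting resolves to; the real mapping is not modeled here.
    """
    return srgb_to_linear(code) * sdr_white_nits


if __name__ == "__main__":
    # Illustrative white levels only, not measurements from any display.
    for white in (80.0, 200.0, 300.0):
        peak = sdr_pixel_nits(255, white)
        mid = sdr_pixel_nits(128, white)
        print(f"SDR white {white:.0f} nits: white={peak:.1f}, mid-gray={mid:.1f}")
```

Under this assumption, the same SDR frame gets brighter or dimmer purely from where SDR white is placed, with no Auto HDR or RTX HDR involved.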


> Still can’t wrap my head around HDR in games. My understanding is that Windows will tone map an SDR game when running in HDR mode such that the game is displayed in standard dynamic range and colors and therefore still look decent.

Dunno how it works internally, but my Alienware AW2523HF displays SDR content by default, and while it's not certified by VESA it also supports HDR.


I calibrated it using Rtings ICC colour profile file and their recommended settings, in SDR mode.

So yesterday, out of curiosity, I enabled Smart HDR on the display's menu and while I hadn't enabled HDR at all in Windows 11 yet, that change in the menu just modified my entire monitor data and ICC profile, including SDR.

Windows was still in SDR mode, so it should have kept the Rtings profile enabled, but it didn't. It treated the monitor as if it were an entirely new display.

I didn't bother and will never bother (I only use HDR on my TV, where it's worth it). I just disabled Smart HDR (it has very mediocre HDR) from the display's settings menu, saved some power consumption, and then Windows automatically re-enabled the Rtings ICC colour profile that I was using.


HDR looks so good when it works though. I didn’t realize how flat SDR looks in comparison. I’m really questioning the need for super bright displays though. Even at 500 nits I find myself squinting at highlights. Can’t imagine why we need 1000 or god forbid 10000 nit monitors.

> HDR looks so good when it works though. I didn’t realize how flat SDR looks in comparison. I’m really questioning the need for super bright displays though. Even at 500 nits I find myself squinting at highlights. Can’t imagine why we need 1000 or god forbid 10000 nit monitors.

To really squint those eyes
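To put the nit figures above in perspective, here is a minimal sketch of the PQ (SMPTE ST 2084) encoding that HDR10 uses, which maps absolute luminance up to 10000 nits onto a 0 to 1 signal. The constants come from the standard; the function name `pq_encode` is just an illustration.

```python
# PQ (SMPTE ST 2084) constants.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_encode(nits: float) -> float:
    """Map absolute luminance in nits (clamped to 0-10000) to a PQ signal in [0, 1]."""
    y = max(0.0, min(nits, 10000.0)) / 10000.0
    yp = y ** M1
    return ((C1 + C2 * yp) / (1.0 + C3 * yp)) ** M2


if __name__ == "__main__":
    for nits in (100, 500, 1000, 10000):
        print(f"{nits:>5} nits -> PQ signal {pq_encode(nits):.4f}")
```

The curve is strongly non-linear: 100 nits already sits at roughly 51% of the signal range and 1000 nits at roughly 75%, so the top quarter of the range is reserved for highlights between 1000 and 10000 nits.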
