DavidGraham

Veteran

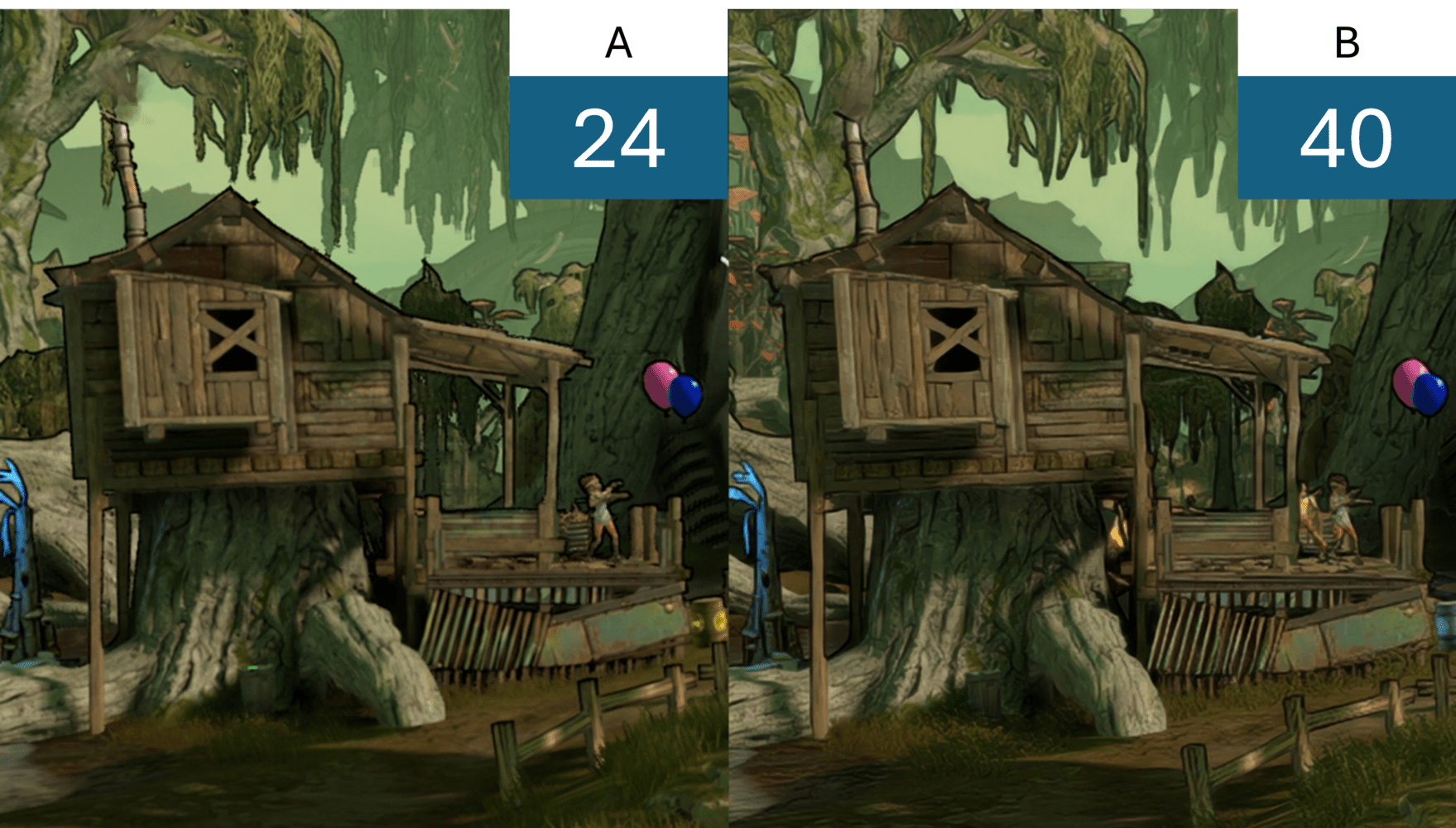

So AutoSR is an AI spatial upscaler? Like an FSR1 + AI kind of solution? I wonder how AutoSR's quality would compare with FSR1's.

Automatic Super Resolution: The First OS-Integrated AI-Based Super Resolution for Gaming - DirectX Developer Blog

Auto SR automatically enhances select existing games, allowing players to effortlessly enjoy stunning visuals and fast framerates on Copilot+ PCs equipped with a Snapdragon® X processor. DirectSR focuses on next generation games and developers. Together, they create a comprehensive SR solution...

devblogs.microsoft.com