Of course they would probably adjust the clocks based on what they've seen from NV, but by how much? You can't magically change silicon, PCB and cooler design overnight to dissipate another 50-100 W. Testing, validation, production and distribution take a lot longer than the roughly 4-6 weeks Ampere has been out.

AMD's done exactly that a few times before and caused some significant heartache for their board partners - most recently:

https://www.anandtech.com/show/15422/the-amd-radeon-rx-5600-xt-review/2

https://www.pcgamer.com/amds-last-minute-5600-xt-bios-update-feels-like-a-bait-and-switch/

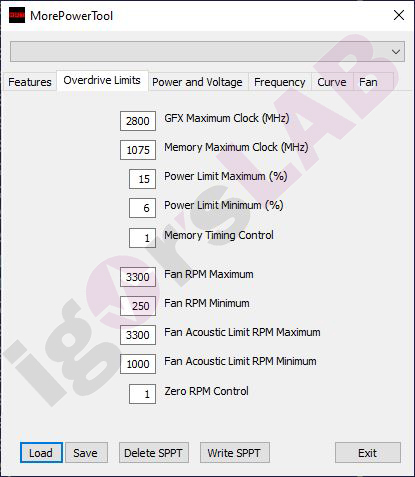

You're right that they can't change the silicon or board layout, but there's an awful lot of latitude to change V/F curves and clocks in the vBIOS.

And not all cards were stable with the 'revised' vBIOS and higher clocks, which also led to some unpleasantness for consumers who bought a 5600 XT and expected it to perform exactly like the review samples did:

https://www.igorslab.de/en/radeon-r...mits-and-benchmark-morepowertool-tutorial/12/