Is it the platform or the developer?

Non-UWP games have plenty of problems of their own. Take the recent Mafia 3 release, for example, or Batman: Arkham Knight at launch if you want a rather extreme example. Assassin's Creed Unity didn't have UWP to blame either.

Some of it was obviously UWP, for something like Tomb Raider when UWP was just starting to be used for AAA games, or Quantum Break at launch, when the developer was releasing its first DX12 game. It's much better now, and many of the problems some people experience with the recent releases could also be because these are the first full PC titles from these developers. It's entirely possible that their non-UWP releases would have had similar issues.

Heck, depending on your criteria (frame time consistency or FPS) and your hardware, the UWP version of Quantum Break runs better than the non-UWP version of Quantum Break.

Regards,

SB

That is a good question, and tbh I think it is a mixture of both.

Regarding Quantum Break, you would expect the DX12 version to perform better than the DX11 one. But remember what a crap storm that game was for the first months: it required patches to both the game and UWP, and it was a nightmare to begin with. Yet again we see problems with other games that look to be a mix of developers getting to grips with UWP and UWP itself.

BTW, which site are you going by regarding Quantum Break?

The Computerbase.de review is a bit confusing. It says the UWP version 'is now better' with the update that improves VSYNC and the related issues, but their charts clearly show that the DX11 version is actually faster than the UWP-DX12 version. This also applies to AMD (apart from the Fury X), albeit with a bit more frame-time variance so far. AMD suffers much more with the 480, but let's see if they can resolve this in under 2 months, compared with what it has taken to get QB-UWP stable with most issues resolved. It is also unclear whether this applies to Hawaii or just Polaris cards; shame we did not have info on the other models.

That said, they also mention that the UWP-DX12 version of QB still suffered hiccups when they were evaluating it against the Steam DX11 version.

And this is comparing an extensively patched UWP-DX12 Quantum Break to a just-launched DX11 version with no further optimisation patches so far.

So perhaps it comes down to perspective regarding Quantum Break and which manufacturer/models one looks at. If only they had kept DX12 for the Steam version as well, as AMD seems to need it for now, while Nvidia is coping well with the Steam DX11 version.

Anyway, I guess time will tell how long it takes to iron out those game issues for both FH3 and Gears of War 4, and whether we will see another UWP update as well (and how often this will need to happen for games).

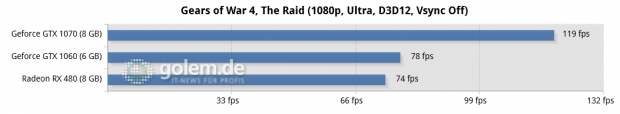

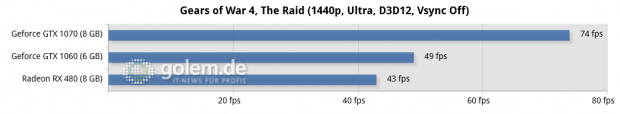

However, another key factor is curveball settings/hardware setups that may have 'side effects' with the UWP version. As Computerbase.de mentions, Gears of War 4 would be perfect if it were on a platform other than UWP-Microsoft Store.

According to them, both it and FH3 are still held back by issues that seem to stem from UWP rather than from the games themselves.

Your first sentence would have merit if Steam were the issue for Mafia 3/Batman/etc. The indications are that the issues I am talking about, which have also been raised by credible review sites, come back to UWP rather than to actual game-related flaws and bugs.

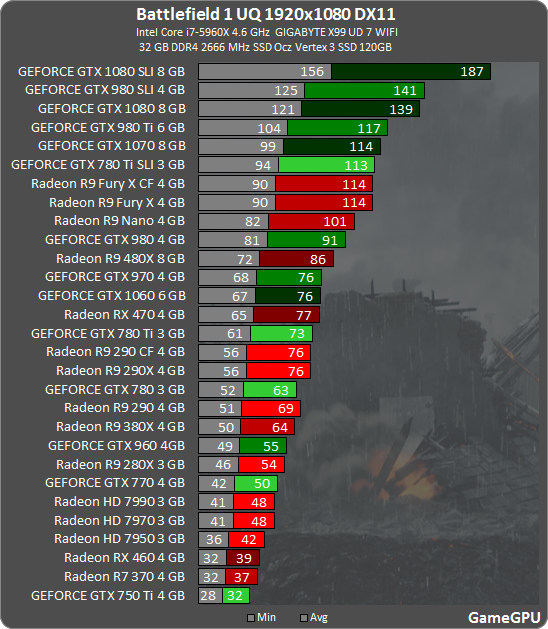

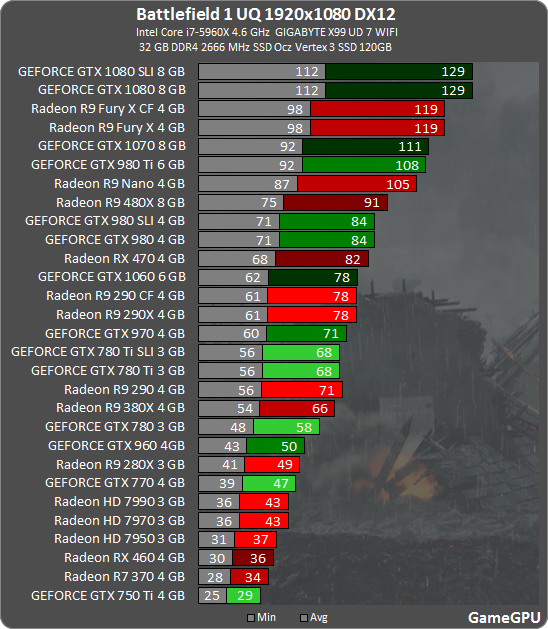

This also fits into the context of DX12 testing and reviewing.

If a game is released on multiple PC 'store' platforms, such as both Steam and UWP-Microsoft Store, which one do reviewers do their testing/analysis on?

There is a likelihood of encountering different behaviour and issues. In theory the platform should be transparent, and maybe at some point UWP-Microsoft Store will achieve that, but until then it is a consideration when testing and evaluating games.

Cheers