Why does Frostbite engine perform relatively better on PS4 than XB1? *spawn

- Thread starter Recop

Anyone can explain why the gap is so large in some Frostbite game ? GPU/Bandwidth bound ? Async compute ?

GPU and bandwidth?

Lol

I think we've debated a lot on how esram should work, and if the textures are being held in system RAM then at most the GPU is getting anywhere between 40-60GB/s of bandwidth to work with. The remaining 192GB/s is for other things, unless they figure out how to put gigs of textures into 32MB.

I'm not sure how high these consoles can scale. It's interesting to see how high the 360 is still going, but it's not wasting all its juice pushing out more pixels like these ones are. It also says a lot about what happens when you launch a console with the best technology at the time: it lasts.

No different if you buy the latest PC setups either.

steveOrino

Regular

Anyone can explain why the gap is so large in some Frostbite game ? GPU/Bandwidth bound ? Async compute ?

The PS4 has a better GPU.

Anyone can explain why the gap is so large in some Frostbite game ? GPU/Bandwidth bound ? Async compute ?

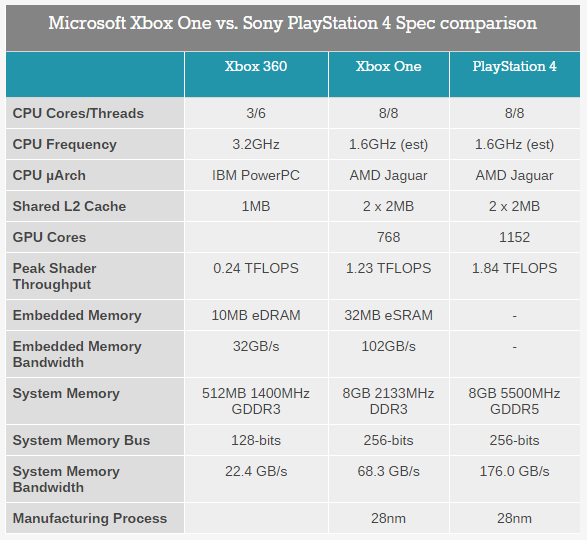

From AnandTech (the only difference is that we now know the X1 CPU is running at 1.75GHz)

It's also possible that Frostbite 3 complements the PS4 architecture more. We've also seen games doing the opposite (Fallout 4, Mad Max), which doesn't mean there isn't a difference between the two. It really depends on the engine and the game; for example, Just Cause 3 is using the same engine as Mad Max and it runs 900p on X1 and 1080p on PS4, whereas Mad Max runs 1080p on both consoles.

192GB/s ? Where did you get that number ? I think the max for eSRAM is around 140-150GB/s and only in some situations.

This topic should be old enough that most in this forum know 192GB/s is the calculated peak of the esram. It can't be 204GB/s because not every cycle can be used in both directions (MS was honest enough to give these numbers). The 140-150GB/s figures were reached in some scenarios by game code (according to an interview with someone from MS). Just like with GDDR5 or DDR3 memory, you only give the peak bandwidth that is theoretically reachable. The theoretical limit of the esram is 192GB/s ... maybe some GB/s more, because I think this was the value before the small upclock to 853MHz.
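For anyone who wants to see how both 192 and 204 could fall out of the same arithmetic, here is a rough sketch. The 128 bytes per cycle per direction and the "writes only land on 7 of every 8 cycles" figures are assumptions for illustration, not numbers taken from this thread; only the 853MHz clock and the 102GB/s-per-direction figure appear above.

```python
# Assumptions (not from the thread): a 128-byte-per-cycle path in each
# direction, and writes able to issue on only 7 of every 8 cycles.
BYTES_PER_CYCLE = 128
WRITE_DUTY = 7 / 8


def esram_peak_gbs(clock_mhz: float) -> dict:
    """Return one-way, naive two-way and duty-limited peak bandwidth in GB/s."""
    one_way = BYTES_PER_CYCLE * clock_mhz * 1e6 / 1e9
    return {
        "one_way": one_way,                      # reads only (or writes only)
        "naive_two_way": 2 * one_way,            # read + write on every cycle
        "duty_limited": one_way * (1 + WRITE_DUTY),  # read every cycle, write 7/8
    }


for mhz in (800, 853):
    figures = esram_peak_gbs(mhz)
    print(mhz, "MHz:", {name: round(value, 1) for name, value in figures.items()})
```

Under those assumptions the duty-limited peak comes out at 192 GB/s for an 800MHz clock and a little under 205 GB/s at 853MHz, which would explain why both numbers keep showing up. Neither says anything about what game code actually sustains.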

Metal_Spirit

Regular

The above Chart is incorrect! Xbox One eSRAM maximum theoretical speed is 204 GB/s. But that number is irrelevant since real life numbers stated by Microsoft point to real life values of something between 140-150 GB/s. Not much different from PS4!

Directly from the article you posted

"the actual theoretical bandwidth ends up being about 204GB per second or 102GB per second in either direction"

I think anandtech is using the second metric for ESRAM b/w.

Even with the esram performance debate, if the GPU is only pushing 50% of the pixels, is it using half the total bandwidth, or are there big hogs which might pull usage closer?

Could the async compute queues be causing the difference? We know the PS4 BF4 release was already using this, and the PS4 has more queue granularity as well as the volatile bit.
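As a quick sanity check on the "50% of the pixels" figure, here is a minimal sketch of the pixel-count ratios for the resolutions mentioned in this thread. It only counts pixels; any cost that does not scale with output resolution is outside what it shows, so it cannot answer the bandwidth question by itself.

```python
# Standard 16:9 render resolutions mentioned in the thread.
RESOLUTIONS = {"720p": (1280, 720), "900p": (1600, 900), "1080p": (1920, 1080)}


def pixel_ratio(low: str, high: str) -> float:
    """Fraction of the pixels in `high` that `low` has to shade."""
    low_w, low_h = RESOLUTIONS[low]
    high_w, high_h = RESOLUTIONS[high]
    return (low_w * low_h) / (high_w * high_h)


for name in ("720p", "900p"):
    print(f"{name} vs 1080p: {pixel_ratio(name, '1080p'):.1%} of the pixels")
```

So 720p is a bit under half of 1080p and 900p is roughly 70% of it.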

The Xbox One has a pretty decent number of compute queues. The PS4's count is one that Sony admits is overkill.

The volatile bit has been described in the case of writing non-graphics compute data back to memory in order to sync with the CPU without wiping the whole L2 and disrupting the graphics context.

The major use cases for compute so far are graphics-related and wouldn't need it. I am curious what examples there are of significant use of such compute. The use of compute for more graphics or different graphics has so far proven more than sufficient to soak up the PS4's capabilities.

Anyone can explain why the gap is so large in some Frostbite game ? GPU/Bandwidth bound ? Async compute ?

Performance is ROP bound and they can't go larger than 720p anyway due to their g-buffer format exceeding ESRAM size.
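To put rough numbers on the g-buffer versus ESRAM size argument, here is a minimal sketch. The layout is hypothetical (four 32-bit colour targets plus a 32-bit depth/stencil buffer), not Frostbite's actual format, and it assumes the whole g-buffer has to sit in the 32MB at once, which an engine may well avoid.

```python
ESRAM_BYTES = 32 * 1024 * 1024  # 32 MB of ESRAM, as discussed in the thread

# Hypothetical layout: bytes per pixel for each render target.
# Four 32-bit colour targets plus one 32-bit depth/stencil buffer.
HYPOTHETICAL_GBUFFER = [4, 4, 4, 4, 4]

RESOLUTIONS = {"720p": (1280, 720), "900p": (1600, 900), "1080p": (1920, 1080)}


def gbuffer_bytes(width, height, bytes_per_pixel_per_target):
    """Total footprint of all render targets at the given resolution."""
    return width * height * sum(bytes_per_pixel_per_target)


for name, (w, h) in RESOLUTIONS.items():
    size = gbuffer_bytes(w, h, HYPOTHETICAL_GBUFFER)
    print(f"{name}: {size / 2**20:.1f} MiB, fits in ESRAM: {size <= ESRAM_BYTES}")
```

With this particular made-up layout 720p and 900p fit and 1080p does not. A fatter layout (more targets, or 64-bit channels) moves the cutoff down, so the real answer depends entirely on the format the engine uses.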

Metal_Spirit

Regular

Directly from the article you posted

I think anandtech is using the second metric for ESRAM b/w.

Yes. But why only speak in reads or writes when they both can be done at once? Unfortunately not exactly at double speed, since real life values in those cases, and in specific operations, stay in the 140 to 150 GB/s range.

Yes. But why only speak in reads or writes when they both can be done at once? Unfortunately not exactly at double speed, since real life values in those cases, and in specific operations, stay in the 140 to 150 GB/s range.

This is correct, but it's worth noting that attempting to read and write simultaneously on most memory results in a huge bandwidth loss, whereas we don't see such an effect for esram. Why it matters could be as simple as writing x = f(x), which is essentially reading values and writing them back over the target. As I understand it with esram, there is a hard limit on writing back to the same memory location you read from because it's using the same memory block, so I think that's why we're seeing 140/150 GB/s. IIRC there are 4x blocks of 8MB sram. If for some reason you were reading from one memory block and writing to another, that's when you'd be able to push to 192/204 GB/s.

I'm not sure exactly how often that would ever be the situation. I would love to know how things change over time when it comes to esram usage in xbox, since it's an interesting development story to read about how engineers develop with/around esram. Fingers crossed there is some breakthrough to read about in the future.
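The x = f(x) pattern is easiest to see with alpha blending, so here is a minimal sketch of it. The function names and the 4 bytes per pixel are assumptions for illustration; it only shows that every blended pixel is one read and one write of the same target, and says nothing about how the ESRAM blocks actually arbitrate those accesses.

```python
def alpha_blend(src, dst, alpha):
    """Classic 'over' blend on one channel: dst is read, then overwritten."""
    return src * alpha + dst * (1.0 - alpha)


def blend_traffic_bytes(pixels, bytes_per_pixel=4):
    """Bytes read from and written to the render target for one blended layer."""
    read = pixels * bytes_per_pixel   # existing destination colour
    write = pixels * bytes_per_pixel  # blended result goes back to the same place
    return read, write


# x = f(x): the target is both the input and the output of the operation.
framebuffer = [0.2, 0.5, 0.8]
framebuffer = [alpha_blend(1.0, dst, 0.25) for dst in framebuffer]
print(framebuffer)

# Traffic for one full-screen blended layer at 1080p.
print(blend_traffic_bytes(1920 * 1080))
```

Reads and writes come out equal here, which is the kind of mixed traffic where the same-block limit described above would bite.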

they can't go larger than 720p anyway due to their g-buffer format exceeding ESRAM size.

Pretty sure this is incorrect considering that Frostbite games have gone to 900p on XBO. I think NFS Rivals (the one released in 2013/2014) uses FB3 and that's running 1080p. Both consoles ran that game at 1080p@30FPS. We could quip about how PS4 wasn't pushed thus parity etc, but that's not what I'm debating here.

There are bottlenecks on xbox one, but not necessarily all of them point towards esram available space and/or bandwidth.


As far as we know the limit is ~145 GB/s only during alpha blend operations. Those being Microsoft numbers.

If they had reached 200 GB/s in a real scenario they would have probably told us.

pretty sure this is incorrect considering that Frostbite games have gone to 900p on XBO. I think NFS Rivals (the one released in 2013/2014) uses FB3 and thats running 1080p. Both consoles ran that game 1080p@ 30FPS. We could quip about how PS4 wasn't pushed thus parity etc, but that's not what I'm debating here. There are bottlenecks on xbox one, but not necessarily all of them point towards esram available space and or bandwidth.

Wouldn't be so sure about the GBuffer layout, they have switched to PBR recently, so GBuffer layout possibly has changed too.

As far as we know the limit is ~145 GB/s only during alpha blend operations. Those being Microsoft numbers. If they had reached 200 GB/s in a real scenario they would have probably told us.

Isn't alpha blend reading and writing back to the same location ?

Wouldn't be so sure about the GBuffer layout, they have switched to PBR recently, so GBuffer layout possibly has changed too

Sorry I don't understand. Is there something about PBR that would change the Buffer size ?

Isn't alpha blend reading and writing back to the same location ?

Depends. Could read one buffer and write to another.

Sorry I don't understand. Is there something about PBR that would change the Buffer size ?

May have different channels and channel formats. Probably can't be assumed the GBuffer is the same format, though it might be.
