The game was just made available to the general public. Now that it's released, we have actual confirmation that DLSS isn't included, as many feared, and mods have already been released. I mean, why wouldn't this thread 'still' be going at this point? Odd comment.

I fail to see how this is relevant at all. The thread was not born out of a simple technical question of how to add it; it was about the corporate politics involved in preventing developers from adding it themselves. It's not a nothingburger simply because modders can technically do it; the ease with which they did just further underscores that the decision not to include it was indeed political.

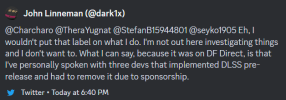

Oh please, this is truly ridiculous at this point. It was never about 'time constraints' or 'priorities being swayed'. The actual reason was always obvious, and was made even more clear when a modder added DLSS within 2 hours, and now that we have confirmation from John of DF that working implementations in other games were actually removed due to AMD's contract.

It wasn't included because AMD didn't want it included, and they were forced to put the onus on Bethesda after the huge backlash and the likely resulting modification of the contract to remove that exclusionary wording. It is not a coincidence that Jedi just got a DLSS patch after all this as well.
