Megadrive1988

Veteran

A post I just read on NeoGAF:


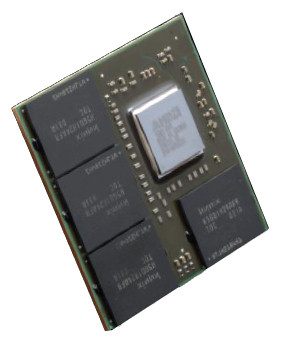

Also, people need to stop worrying about power consumption and how it relates to the Wii U's technical power. By all accounts the Wii U has a dedicated DSP, a dedicated I/O controller, and I believe even an ARM CPU for some OS functions. Not to mention that IBM's modern Power-based CPUs are incredibly efficient.

Consoles like the Xbox 360 and PS3 have had such high power draw partly because they used very high-end parts for their time. The other reason is that they went down a path where single components like the CPU handle an awful lot of functions. The Xbox 360's CPU does I/O, sound, general processing, FPU tasks, SIMD tasks, etc. It is nowhere near as efficient as a dedicated DSP at sound, or as good as a dedicated I/O controller at handling I/O. The Xbox 360's CPU performs many tasks that a processor with significantly lower wattage and clock speed could do, and do better. Nintendo have simply identified which tasks a separate dedicated chip could do better, and put those chips in their console.

With the Wii U using lots of dedicated chips for specific tasks, efficiency goes up and power consumption goes down. Sure, the Wii U won't be a powerhouse, but it does seem like Nintendo have invested significant engineering into making the Wii U's hardware as power-efficient as possible, so that consumers get maximum performance per watt. If BG's suggestion that the Wii U's GPU is around Radeon HD 6570 performance (~600 GFLOPS) is true, the Wii U would actually be quite an engineering achievement. That wouldn't be because it's a powerhouse of a console. It would be because it delivers brilliant performance per watt.
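The GFLOPS numbers thrown around in these threads are theoretical peaks. They are usually computed as shader ALU count × FLOPs per ALU per clock × clock speed, and performance per watt then just divides that peak by power draw. Below is a minimal Python sketch. It assumes 2 FLOPs (one multiply-add) per ALU per clock. The ALU counts, clocks, and wattage in it are hypothetical round numbers chosen for illustration, not Wii U specifications.

```python
def peak_gflops(alus: int, clock_mhz: float, flops_per_alu_per_clock: int = 2) -> float:
    """Theoretical peak GFLOPS = ALUs x FLOPs per ALU per clock x clock (GHz).

    Assumption: the default of 2 counts one multiply-add per ALU per cycle.
    """
    return alus * flops_per_alu_per_clock * clock_mhz / 1000.0


def gflops_per_watt(gflops: float, watts: float) -> float:
    """Performance per watt: peak GFLOPS divided by power draw in watts."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    return gflops / watts


if __name__ == "__main__":
    # Hypothetical GPU configurations; NOT known Wii U hardware.
    for alus, mhz in [(320, 500), (480, 550)]:
        print(f"{alus} ALUs @ {mhz} MHz -> {peak_gflops(alus, mhz):.0f} GFLOPS")
    # Hypothetical 40 W power budget, purely to show the division.
    print(f"{gflops_per_watt(peak_gflops(320, 500), 40.0):.1f} GFLOPS/W")
```

A paper peak like this says nothing about real-world throughput. It is simply how the GFLOPS figures being compared in this discussion are normally derived.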

edit: I'm still expecting ~300 GFLOPS on the low end and ~500 GFLOPS on the high end.