> That's completely untrue. The jump from the 2080 > 3080 > 4080 is larger than the jump from the 2080 Ti > 3090 > 4090.

Those tiers are above what Sony and Microsoft go for, so they're irrelevant.

> There was some major stagnation with the 60 series, but this is the exception, not the norm.

It's the norm, and this is the tier Sony and Microsoft typically aim for.

> The performance differentials from 960 > 1060 > 2060 > 3060 were quite good. It wasn't until the 4060 that it fell apart, but I'm not expecting a repeat of this.

I am.

> We're talking 3-4 years from now, late 2027/early 2028. The 4090 will be as old as the 2080 Ti is now. We'll be at the RTX 60 series and the 4090 will be two generations removed from its top tier status. Do you think a console released today for $500 would have a GPU weaker than the 2080 Ti?

Yes. I suggest you go and look at AMD's power consumption numbers for RDNA3.

With the ~180 W power budget they have, they're not getting a 2080 Ti.

> The PS5 Pro will be released at the end of the year and we're expecting it to have a GPU on the level of a 3080/4070. If that comes to pass but the PS6 is still weaker than a 4090, then it means it won't even be twice the performance of the PS5 Pro, which I find extremely unlikely.

The closest RDNA3 GPU to the 3080/4070 is the 7800 XT, which according to TechPowerUp draws 250 W while gaming.

So unless Sony are willing to pull 300 W (and are able to cool it), you won't get 3080/4070 performance, as they'll need to downclock to reduce power draw, which will reduce performance.

And even then, in RT the 3080/4070 (especially the 4070) will batter the 7800 XT.

> No, they won't. A $500 console released in 2028 will have a GPU that outperforms the top PC one from the year 2022. It would be ridiculous if it didn't. Look at when the PS5 was released, it managed to be close to a 2080 just two years later.

And in ray tracing (which will be the PS5 Pro's and PS6's main focus), the 2080 batters the PS5.

And unless AMD massively increase their RT performance, the PS6 will arguably still struggle to match a 4090.

> Which is nice and all, but in 3-4 years from now there will be significant performance/watt improvements.

AMD have yet to show this; RDNA3 was a step backwards.

> If the trend continues, the PS6 will have a GPU on the level of a 5070 but will be out against the 6060/6070.

Actually, if the current trend continues, the PS6 will be nowhere close to that because of pricing: GPU costs are going up, not down.

Heck, they might only be able to afford a 5050.

> I'm fully expecting it to be not only faster than the 4090 but to also have a much more advanced feature set. That's in gaming only though. It won't come all that close in compute.

> Don't be blinded by the current way the 4090 crushes everything. It looks mighty impressive but it'll be old in 2028.

Again, unless AMD massively increase their RT performance (which, again, will be next gen's focus), they'll struggle to match the 4090.

> The 1080 Ti also seemed unstoppable, yet the consoles managed to have better GPUs less than 4 years later.

Looking at TechPowerUp, the 1080 Ti is at 6600 XT/2070 Super performance in raster, so no, the consoles didn't manage to have better GPUs less than 4 years later.

The argument could easily be made that they're slightly slower than the 1080ti.

You need to come a little bit. GPUs are getting more and more expensive, and next gen is going to be purely about RT performance, maybe even PT performance.

And in RT/PT loads the 4090 is so far ahead of the 7900 XTX and AMD's other efforts it's not even funny. Unless AMD manage to triple RT performance in RDNA4's mid-range offerings, you're not getting 4090 performance in the PS6.

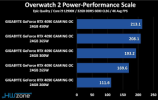

This should put into context how far behind Nvidia AMD are in next-gen RT/PT. Notice that the 2080 Ti you've been talking about beats AMD's current best GPU? The PS5 in RT is the 6600 XT... at 0.5 fps.