Here are some graphs illustrating the performance issues of updating the page table mappings in Vulkan ...

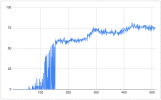


The first graph above depicts the behaviour of the RTX 4090, and the second graph below depicts the 7900 XTX.

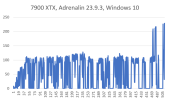


These graphs show how long it takes to complete a vkQueueBindSparse call (UpdateTileMappings in D3D) as the hardware queues become saturated over successive submissions. Each step along the horizontal axis is a new vkQueueBindSparse submission, while the vertical axis represents the total time in milliseconds ...
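A harness for this kind of measurement could look roughly like the following. This is a minimal sketch, not the benchmark used for the graphs: it times each submission on the CPU with `clock_gettime(CLOCK_MONOTONIC)` and takes the submission itself as a hypothetical callback (`submit_fn`), because the post doesn't say how the sparse bindings were set up or whether each sample also waits on a fence. The comment shows one possible way the callback could wrap `vkQueueBindSparse`.

```c
#define _POSIX_C_SOURCE 199309L
#include <stddef.h>
#include <time.h>

/* Hypothetical callback type: one call performs one sparse-binding submission.
 * In a real Vulkan harness it might wrap something like:
 *     vkQueueBindSparse(queue, 1, &bind_info, fence);
 *     vkWaitForFences(device, 1, &fence, VK_TRUE, UINT64_MAX);  (optional)
 *     vkResetFences(device, 1, &fence);
 * Whether the original measurements waited on a fence is an open assumption. */
typedef void (*submit_fn)(void *ctx, size_t iteration);

/* Current monotonic time in milliseconds. */
static double now_ms(void) {
    struct timespec ts;
    clock_gettime(CLOCK_MONOTONIC, &ts);
    return (double)ts.tv_sec * 1000.0 + (double)ts.tv_nsec / 1.0e6;
}

/* Call `submit` `count` times and store the wall-clock duration of each
 * call (everything the callback does, including any fence wait) in out_ms[i]. */
void time_submissions(submit_fn submit, void *ctx, size_t count, double *out_ms) {
    for (size_t i = 0; i < count; ++i) {
        double start = now_ms();
        submit(ctx, i);
        out_ms[i] = now_ms() - start;
    }
}
```

Plotting `out_ms[i]` against `i` produces the same kind of chart as above: submission iteration on the horizontal axis, milliseconds on the vertical axis.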

On the 4090, the first 50 vkQueueBindSparse calls appear to have no performance impact, but the cost of each new call then rises sharply from the 50th to the 150th submission. Just before the 4090 reaches the 300th submission, each new vkQueueBindSparse submission takes over 70 ms to complete ...

On the 7900 XTX, the cost of each new vkQueueBindSparse submission rises sharply from the very start until around the 40th iteration, after which every new vkQueueBindSparse submission takes over 100 ms to finish ...

So these graphs clearly show the performance problems of updating the page table mappings in hardware virtual texturing implementations from both vendors. API calls that update the page table mappings can become prohibitively expensive when issued in succession across many consecutive frames, as is potentially the case with sparse/virtual shadow maps ...
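To put those numbers in perspective, here is a quick frame-budget calculation. The 60 fps target is an assumption for illustration; the post doesn't name a frame rate.

$$
t_{\text{frame}} = \frac{1000\ \text{ms}}{60} \approx 16.7\ \text{ms}, \qquad
\frac{70\ \text{ms}}{16.7\ \text{ms}} \approx 4.2\ \text{frames}, \qquad
\frac{100\ \text{ms}}{16.7\ \text{ms}} \approx 6\ \text{frames}
$$

So once the queues saturate, a single binding update costs more than four frames' worth of time on the 4090 and about six on the 7900 XTX.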