It is fake performance if you claim that it renders at a higher resolution when it's not.

By that definition every game using shadow maps also has fake performance. Upscaling is just another shortcut in a long line of shortcuts.

Actually, you are making a political statement, whether you are aware of it or not. Voting with money always works. If you buy shoes made with child slave labor, even if that was never your intention and even if you are unaware of it, you are literally funding child slavery. Whatever we buy influences the world, because whatever the money flows to grows.

But I guess that is too raw for most. Everyone prefers to see themselves as good rather than as contributing to atrocities.

> So if we are measuring rasterization performance

There is no such thing as rasterization performance anymore, and there hasn't been for a long time. Shadow maps, screen-space shadows, global illumination (probes/screen space), ambient occlusion, screen-space reflections, cubemaps, motion blur, and depth of field are all rendered at a fraction of native resolution (1/2, 1/3, or 1/4). TAA uses past frames to do anti-aliasing, and it's even integrated into the design of ambient occlusion and screen-space reflections to help performance.
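TAA's temporal accumulation can be sketched as an exponential moving average of each pixel's history with the current (jittered) frame. This is a minimal sketch under simplifying assumptions: a single-channel image stored as a flat list, a hypothetical blend factor `alpha` of 0.1, and no motion-vector reprojection or history clamping, both of which real TAA implementations rely on to avoid ghosting.

```python
def taa_accumulate(history, current, alpha=0.1):
    """Blend the current frame into the history buffer, pixel by pixel.

    Computes h' = (1 - alpha) * h + alpha * c for every pixel. Real TAA would
    first reproject `history` using motion vectors and clamp it to the current
    frame's local neighborhood; both steps are omitted in this sketch.
    """
    if len(history) != len(current):
        raise ValueError("history and current frame must have the same size")
    return [(1.0 - alpha) * h + alpha * c for h, c in zip(history, current)]


# A static two-pixel "image": each frame contributes 10% of the new samples,
# so the history converges toward the true values over many frames.
history = [0.0, 0.0]
for frame in range(10):
    history = taa_accumulate(history, [1.0, 0.5])
print(history)  # after n frames each pixel holds value * (1 - 0.9 ** n)
```

Smaller `alpha` values accumulate more samples (smoother, better anti-aliased) but react more slowly to change, which is why real implementations need reprojection and clamping.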

> RT is an additional variable that distorts results

RT distorts what? RT is just another Ultra option available to gamers; you shouldn't isolate RT games from non-RT games at all. You should just treat RT as another graphics preset and test GPUs against it like you do with any other game. Even Hardware Unboxed finally started doing this recently in their main reviews: they bundle RT games together with non-RT games and give an average score.
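One common way to fold RT and non-RT titles into a single average is the geometric mean of per-game performance ratios, which keeps one very high-FPS title from dominating the result. The sketch below assumes that method; individual outlets may aggregate differently, and the game names and FPS figures are hypothetical placeholders, not benchmark data.

```python
import math


def geometric_mean(values):
    """Geometric mean: exp of the arithmetic mean of the logs (values must be > 0)."""
    if not values or any(v <= 0 for v in values):
        raise ValueError("geometric mean needs a non-empty list of positive values")
    return math.exp(sum(math.log(v) for v in values) / len(values))


# Hypothetical average FPS for GPU A and GPU B; illustrative numbers only.
suite = {
    "raster_game_1": (120.0, 100.0),
    "raster_game_2": (90.0, 90.0),
    "rt_game_1": (60.0, 40.0),  # the RT title sits in the same suite as any other game
}

ratios = [fps_a / fps_b for fps_a, fps_b in suite.values()]
relative = geometric_mean(ratios)
print(f"GPU A is {relative:.3f}x GPU B across the whole suite")
```

A useful property: the geometric mean of A/B ratios is exactly the reciprocal of the geometric mean of B/A ratios, so the result doesn't depend on which GPU is treated as the baseline. The arithmetic mean of ratios lacks this property.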

> Lol, they're all generating pixels from samples; the samples are just collected differently. If I switch back and forth between trilinear filtering and anisotropic filtering, which one generates the real pixels, given that they sample differently?

Doesn't matter; it's all rasterized in the end. They are different graphical settings, and nothing more.

> Screen-space effects (depth of field, motion blur, AO, GI, shadows, etc.) are not rasterization, ray/path tracing is not rasterization, and fragment/pixel shading is not rasterization. Rasterization in hardware is just one step that takes polygons (post-culling) and generates fragments to be shaded. If I play a game at 1440p "native" with full-resolution screen-space effects, or a game at 4K "native" with 1/4-resolution screen-space effects, which one has the "real" pixels?

They are all real, just with different graphics settings. The first one is 1440p native; the second is 4K native with low settings. Why is that so difficult? And pixel shading is a primary component of rasterization; without it, rasterization can be considered incomplete. The whole rendering pipeline is considered rasterization by the likes of Hardware Unboxed, Gamers Nexus and so on, and for good reason. After all, techniques like AO and dynamic shadows exist because of rasterization. If we used path tracing, none of those separate techniques would be necessary, because they were developed specifically to improve graphics under rasterization. The final resolution directly influences the rasterization workload, because more pixels need to be produced.

> The way rendering works is you sample data (geometry, textures, materials) and you produce pixels. You feed the data into a pipeline (a collection of algorithms) and pixels come out the other side. You can change the sampling rates, and you can sample spatially and temporally. No matter what, the pixels that come out the other side are as "real" as any other. Subjectively, quality will vary.

You've produced pixels twice in your explanation. But your explanation ignores the different number of pixels produced at different resolutions. A different number of pixels means a different amount of work. As long as you have enough VRAM, detailed textures don't significantly affect the GPU. It's the sampling, i.e. the number of pixels that need to be drawn from said texture, that determines how heavily the GPU is taxed. If you render a quarter of the pixels at 1080p and pretend it's the same as 4K resolution because there's an algorithm in the middle, you're deluding yourself.
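The pixel-count arithmetic behind the 1080p-versus-4K point is easy to check. A minimal sketch, assuming the common convention that a resolution scale applies per axis (so half resolution per axis means a quarter of the pixels), and that per-pixel work scales roughly with pixel count, which ignores geometry and other resolution-independent costs:

```python
def pixel_count(width, height):
    """Number of pixels that must be shaded at a given resolution."""
    return width * height


def scaled_resolution(width, height, axis_scale):
    """Internal render resolution for a per-axis scale factor (e.g. 0.5 = half per axis)."""
    return round(width * axis_scale), round(height * axis_scale)


native_4k = pixel_count(3840, 2160)        # 8,294,400 pixels
internal_1080p = pixel_count(1920, 1080)   # 2,073,600 pixels
print(internal_1080p / native_4k)          # 0.25: a quarter of the pixels

# Half resolution per axis from a 4K output lands exactly on 1080p.
w, h = scaled_resolution(3840, 2160, 0.5)
print(w, h, pixel_count(w, h) / native_4k)
```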

> Doesn't matter; it's all rasterized in the end. They are different graphical settings, and nothing more.

IMHO it's a very rigid and, honestly, sad point of view, in a graphics forum, to classify GPU performance by rasterization alone, which nowadays represents a fraction of what are mostly compute workloads. Twenty years ago this reasoning would have been fine, but in 2023? Seriously?

Also, I find it funny how you include ray/path tracing, as that is a completely different rendering technique with different requirements. You don't really even need pixel shaders for it, just compute shaders. This is where the general distinction between rasterization and path tracing comes from.

> They think RT will be a gimmick until a $300 GPU can run CP2077 in 4K PT at 60fps

I mean, there's a bit of truth to this. Obviously your example is purposely exaggerated, but it's not unreasonable to think that techniques requiring high-end hardware that most people can't afford won't be heavily adopted by developers until that changes. The large majority of hardware RT implementations to date are not visually game-changing. With the RDNA2 consoles as the general AAA baseline, developers are going to be reluctant to use any overly heavy RT effects, and while there's certainly room on PC to do more, there isn't necessarily the financial incentive to put in the great effort needed to go big there.

The truth is that many people are against progress and evolution if it doesn't fit their wishes. They think RT will be a gimmick until a $300 GPU can run CP2077 in 4K PT at 60fps, and they forget that real-time 3D rendering has always been a story of incremental features and performance, starting at the high end and slowly moving down the product stack...

The entire pipeline: data -> transform -> pixels

Rasterization: one step (one transform) in the pipeline that transforms culled polygons into fragments/pixels to be shaded. What comes out of the rasterization stage is a fragment/pixel without materials or lighting.
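The rasterization step described here, turning a projected triangle into a list of covered pixels with no material or lighting information yet, can be sketched with edge functions evaluated at pixel centers. This is a minimal illustration, assuming a triangle already transformed to screen-space pixel coordinates; real hardware adds a top-left fill rule, sub-pixel precision, and tile-based traversal, none of which are modeled here.

```python
def edge(ax, ay, bx, by, px, py):
    """Edge function: twice the signed area of triangle (A, B, P).

    Its sign tells which side of the line A->B the point P lies on.
    """
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)


def rasterize(tri, width, height):
    """Return the (x, y) pixels whose centers are covered by a screen-space triangle.

    The output is pure coverage: fragments with no material or lighting,
    which a later pixel-shading stage would fill in.
    """
    (x0, y0), (x1, y1), (x2, y2) = tri
    if edge(x0, y0, x1, y1, x2, y2) == 0:
        return []  # degenerate (zero-area) triangle covers nothing
    covered = []
    for y in range(height):
        for x in range(width):
            px, py = x + 0.5, y + 0.5  # sample at the pixel center
            w0 = edge(x1, y1, x2, y2, px, py)
            w1 = edge(x2, y2, x0, y0, px, py)
            w2 = edge(x0, y0, x1, y1, px, py)
            # Inside if P is on the same side of all three edges (either winding order).
            if (w0 >= 0 and w1 >= 0 and w2 >= 0) or (w0 <= 0 and w1 <= 0 and w2 <= 0):
                covered.append((x, y))
    return covered


fragments = rasterize(((0, 0), (4, 0), (0, 4)), width=4, height=4)
print(len(fragments), fragments[:4])
```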

Rasterization is a visibility test to see which polygons cover which pixels on the screen. Ray-tracing/path-tracing is a replacement for that visibility test which can also test for the visibility of reflections and lighting.
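Ray tracing's version of that visibility test can be sketched with the well-known Möller-Trumbore ray/triangle intersection: for primary visibility you shoot one ray per pixel and keep the nearest hit. This is an illustrative sketch using plain tuples, not how GPU RT hardware or BVH traversal works; the brute-force loop over every triangle stands in for an acceleration structure.

```python
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def ray_triangle(origin, direction, v0, v1, v2, eps=1e-9):
    """Möller-Trumbore test: distance t along the ray to the hit point, or None."""
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:
        return None  # ray is parallel to the triangle's plane
    inv_det = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv_det  # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(direction, q) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv_det
    return t if t > eps else None  # ignore hits behind the ray origin


def closest_hit(origin, direction, triangles):
    """Primary visibility for one ray: (index, distance) of the nearest hit, or None."""
    best = None
    for i, tri in enumerate(triangles):
        t = ray_triangle(origin, direction, *tri)
        if t is not None and (best is None or t < best[1]):
            best = (i, t)
    return best


near = ((-1.0, -1.0, 5.0), (1.0, -1.0, 5.0), (0.0, 1.0, 5.0))
far = ((-1.0, -1.0, 10.0), (1.0, -1.0, 10.0), (0.0, 1.0, 10.0))
print(closest_hit((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), [far, near]))  # -> (1, 5.0)
```

Unlike the rasterizer, the same function can answer other visibility questions, such as whether a light is visible from a surface point (a shadow ray). This is the sense in which ray tracing extends the visibility test to reflections and lighting.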

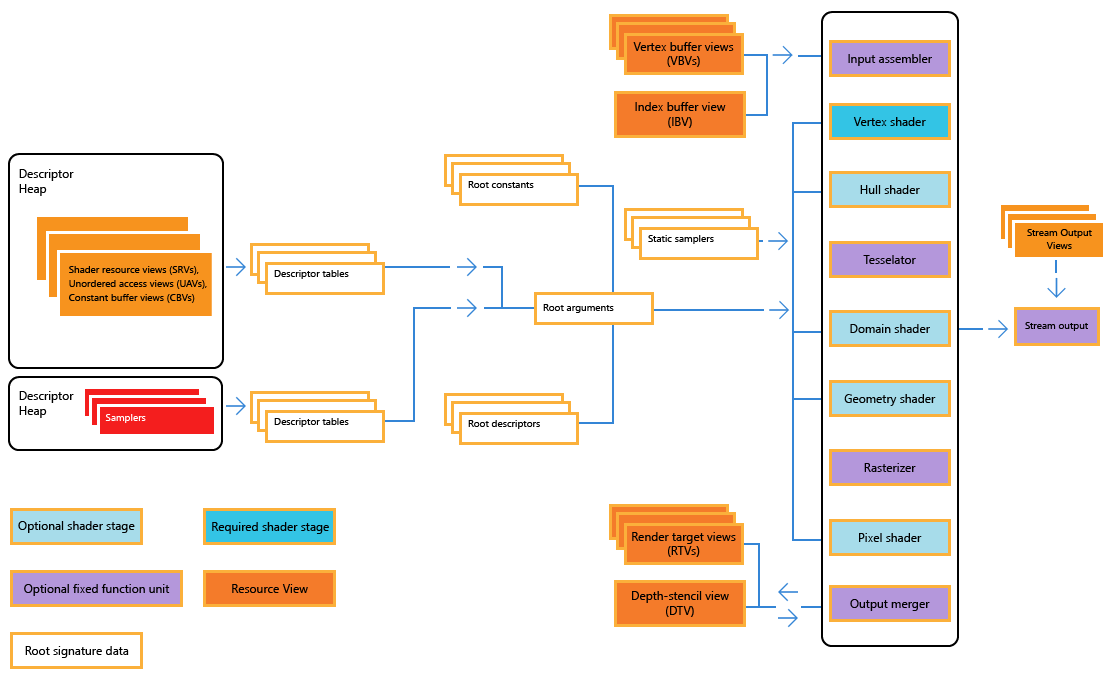

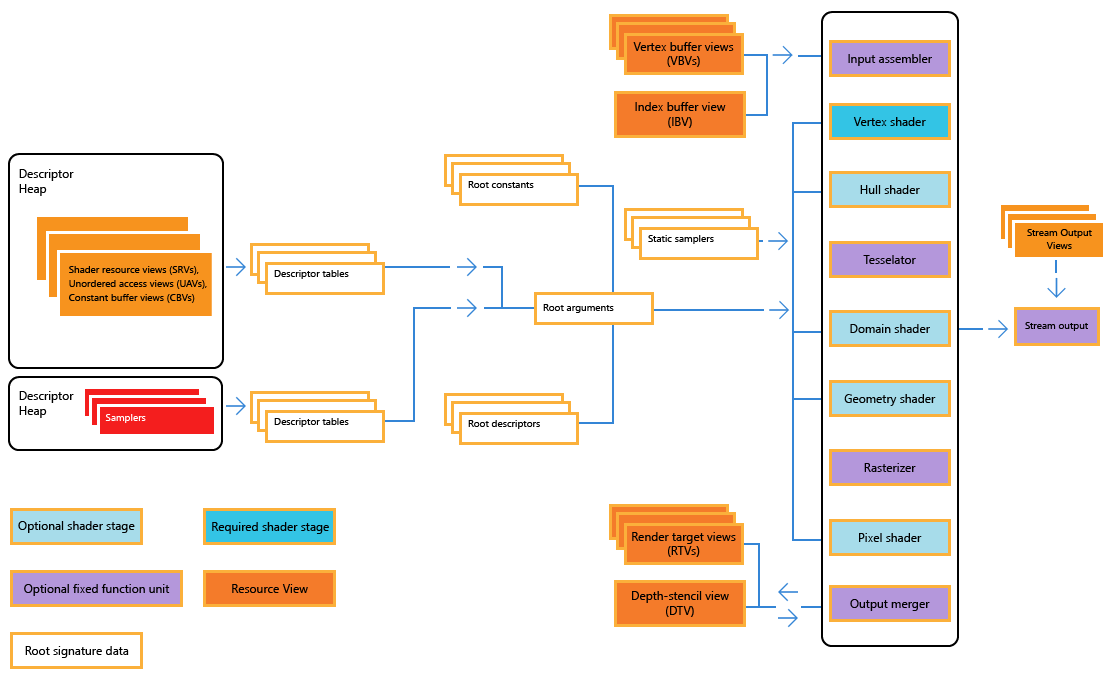

DX12 pipeline: Note that the rasterizer fits in the pipeline after geometry processing and before pixel shading.
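As a rough illustration of where the rasterizer sits, the Direct3D 12 graphics pipeline stages can be listed in order, along with whether each is programmable or fixed-function. This is a simplified, linear view: stream output actually branches off after geometry processing, the tessellation and geometry shader stages are optional, and the mesh-shader pipeline is not shown.

```python
# Simplified, linear view of the Direct3D 12 graphics pipeline (classic, non-mesh-shader path).
D3D12_STAGES = [
    ("Input Assembler", "fixed-function"),
    ("Vertex Shader", "programmable"),
    ("Hull Shader", "programmable"),
    ("Tessellator", "fixed-function"),
    ("Domain Shader", "programmable"),
    ("Geometry Shader", "programmable"),
    ("Stream Output", "fixed-function"),
    ("Rasterizer", "fixed-function"),
    ("Pixel Shader", "programmable"),
    ("Output Merger", "fixed-function"),
]

names = [name for name, _ in D3D12_STAGES]
raster = names.index("Rasterizer")

# Everything before the rasterizer processes geometry; everything after works on fragments.
print("before:", names[:raster])
print("after:", names[raster + 1:])
print(f"rasterizer is 1 of {len(names)} stages")
```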

Edit: Sometimes you'll hear people say "raster pipeline", and they mean a pipeline that includes rasterization. The whole pipeline is not rasterization. For example, you could test primary visibility with ray tracing and then do screen-space reflections, screen-space shadows, etc., and a whole bunch of other stuff. I guess technically a lot of screen-space effects are done with screen-space rays, even if you're testing primary visibility with rasterization.
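The screen-space rays mentioned in that edit can be sketched as a march through the depth buffer: step along the ray in screen space and report a hit once the ray passes behind the stored depth. This is a minimal sketch assuming a depth buffer where larger values are farther away and a fixed step size; real SSR/SSAO implementations add thickness tests, hierarchical depth, and refinement. It also shows the basic limitation of screen-space methods: once the ray leaves the screen, there is no data to test against.

```python
import math


def screen_space_march(depth, start, step, max_steps=64):
    """March a ray through a depth buffer (depth[y][x], larger = farther).

    `start` and `step` are (x, y, z) in pixel coordinates plus view depth.
    Returns the first (x, y) pixel where the ray is at or behind the stored
    depth, or None if the ray leaves the screen or runs out of steps.
    """
    height, width = len(depth), len(depth[0])
    x, y, z = start
    dx, dy, dz = step
    for _ in range(max_steps):
        x, y, z = x + dx, y + dy, z + dz
        ix, iy = math.floor(x), math.floor(y)
        if not (0 <= ix < width and 0 <= iy < height):
            return None  # off-screen: the depth buffer has no information here
        if z >= depth[iy][ix]:
            return (ix, iy)  # ray went behind visible geometry: treat as a hit
    return None


# One-row depth buffer: empty background at depth 10, one close object at x = 5.
row = [10.0] * 8
row[5] = 1.0
print(screen_space_march([row], start=(0.5, 0.5, 1.0), step=(1.0, 0.0, 0.1)))
print(screen_space_march([[10.0] * 8], start=(0.5, 0.5, 1.0), step=(1.0, 0.0, 0.0)))
```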

In the end there are tons of different ways to take samples from data and turn them into pixels, none of which is more "fake" than any other.

> With the RDNA2 consoles being the general AAA baseline, developers are going to be reluctant to use any overly heavy RT effects, and while there's certainly the possibility on PC to do more, there's not necessarily the financial incentive to go big there with some great effort.

We have to agree to disagree. I don't think consoles are the limiting factor in whether studios use full RT effects, since it is just as easy to give gamers the means to scale RT effects back; and if that were true, we wouldn't currently have any RT games. As we have seen, contractual sponsorship obligations may have more to do with studios providing "watered down" RT effects, as a means of concealing IHV architectural weaknesses, than any financial or development hardship. I believe the consensus is that the development workflow required for "bells and whistles" RT effects takes not much more developer effort than providing a "watered down" RT version.

Adding completely different/new RT features is not simple, though. Sure, you can do full-res reflections on PC versus quarter-res on console or something, but it's a whole different thing to add RTAO just for PC, for example. It's nice when devs add PC-only RT features, but I don't think most developers will necessarily feel compelled to do this. It's still going to be viewed as an optional bonus, and I think this sets the tone for how gamers view it as well.

If specific platforms/GPU tiers can't properly enjoy fully enabled RT effects on current hardware, they will fall back to the weaker RT version. Later, once hardware is capable, they can run the fully enabled RT options with minimal developer involvement at most.
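The fallback idea can be sketched as ordered presets: the game ships both a full and a reduced RT configuration, and each system picks the most expensive one its budget allows, so faster future hardware lands on the full preset without code changes. Everything here is hypothetical: the preset names, the per-axis reflection scale, the RTAO flag, and the cost figures are illustrative assumptions. The sketch also does not capture the art and tuning work a second RT path can require.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RtPreset:
    """Hypothetical RT quality preset; fields and values are illustrative only."""
    name: str
    reflection_scale: float   # per-axis resolution scale for RT reflections
    rt_ambient_occlusion: bool
    est_cost_ms: float        # assumed GPU cost of the preset on the target system


# Ordered from most to least expensive.
PRESETS = (
    RtPreset("full", reflection_scale=1.0, rt_ambient_occlusion=True, est_cost_ms=9.0),
    RtPreset("reduced", reflection_scale=0.5, rt_ambient_occlusion=False, est_cost_ms=3.0),
)


def pick_preset(rt_budget_ms, presets=PRESETS):
    """Choose the most expensive preset that fits the budget, else the cheapest one."""
    for preset in presets:
        if preset.est_cost_ms <= rt_budget_ms:
            return preset
    return presets[-1]


print(pick_preset(4.0).name)   # a weaker GPU falls back to the reduced preset
print(pick_preset(12.0).name)  # a faster GPU later gets the full preset unchanged
```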

> Sure, you can do full res reflections on PC versus like quarter res on console or something, but it's a whole different thing to add in RTAO just for PC, for example.

Not really. If your engine already has it, then adding it is trivial, unless you're unwilling to deal with the art issues that may arise from that. If not, it's more complex, but again, if the engine already does some RT, it's not exactly hard.

It is never as simple as just turning on an effect, even if it's built into the engine. As you say, there will be art issues and other tech conflicts that all need to be balanced and ironed out to make everything work as desired. Point is, it is absolutely more work. Very little in game development is ever 'easy'.

> No, raytracing is not more work. It just works. If it were the other way around, no developer would ever have used SSAO, SSRs and some kind of real-time GI.

I mean, that was the promise, but from what I've read from developers, it's definitely not how it works in practice, especially anything involving lighting. The relationship between lighting and other areas of visuals can be fairly sensitive.

When they are calculated is really not relevant.

I don't know which developer has said something like this. 4A Games was very clear about why they went with ray-traced GI in Metro Exodus EE.

And there is no difference between Raytracing and Screenspace. Both are calculated at runtime.

> It is never as simple as just turning on an effect, even if it's built into the engine. As you say, there will be art issues and other tech conflicts that all need to be balanced/ironed out to make everything work as desired. Point is, it is absolutely more work. Very little in game development is ever 'easy'.

Porting a game to PC is more work, yet nobody seems to suggest that you could just not port anything because it's more work. The same thinking applies to RT: you could implement or improve it in your PC version, and it would make the game better, which in turn may help you sell more copies.

When they are calculated is really not relevant.

And I think you're still missing the point here: I'm talking about adding extra RT features specifically for the PC version, meaning devs have to include both non-RT and RT implementations of whatever feature we're talking about. It is absolutely going to be more work. Your idea that RT can just be flicked on like a switch and everything will be fine is simply not how it works in practice.

Once we have more powerful hardware that is available at a mainstream price point, then yes, developers can start to use ray tracing as standard, where it could no longer be viewed as a gimmick and would involve less overall work.

> Porting a game to PC is more work yet nobody seems to discuss the idea that you could just not port anything, because it's more work. Same thinking applies to RT: you could implement/improve it in your PC version, and it will make the game better, which in turn may help you sell more copies.

Porting/developing a game for PC obviously makes sense from a sales perspective. Putting in more work to include high-end options that only a small percentage of an already limited playerbase can actually use, much less will actually want to use, is a VERY different prospect. That's genuinely my whole point here. Like I said, it may make sense for studios that want their game to be a tech showcase title, but otherwise it won't be seen as worth it by many, or even most.