I do. Do you?

I agree. You should be banned, because you haven't provided any evidence to back Steve's claims, yet you dismissed what I've said simply because you don't like it.

So, about Steve's video.

"Fun" stuff first

https://www.techspot.com/article/2583-amd-fsr-vs-dlss/ - Here, in HUB's own previous video, FH5 is faster with DLSS than with FSR2, whereas in HUB's new video DLSS is almost on par with FSR2 ¯\_(ツ)_/¯

https://www.techspot.com/review/2464-amd-fsr-2-vs-dlss-benchmark/ - here DLSS is considerably faster than FSR2.

That's about it for DLSS vs FSR2 comparisons from HUB. So, based on this, they decided that DLSS doesn't matter?

Hitman 3 - 1 fps difference in favour of DLSS in Steve's new video

4 fps difference in HUB's own previous test of H3 here:

Spider-Man: Remastered - no difference in latest video

1 to 2 fps difference in favour of DLSS here in HUB's own test:

Death Stranding - 2-3 fps in favour of DLSS

Again, 4-5 fps here:

FH5 - no difference in the new video

1-2 fps faster in their own previous benchmark:

Other benchmarks

Deathloop - DLSS is noticeably faster in every benchmark out there except HUB's, so the "different pass" argument suddenly doesn't work:

Tom's Hardware (www.tomshardware.com): "Greatly improved image quality, without machine learning hardware"

ComputerBase (www.computerbase.de): "FidelityFX Super Resolution tested: FSR 2.0 image quality and performance / Test system and test methodology"

Spider-Man Remastered (SMR)

ComputerBase (www.computerbase.de): "Spider-Man Remastered tested: AMD's FSR 2.0 and Nvidia DLSS 2.4 in detail / Nvidia DLSS and AMD FSR 2.0 (and IGTI) analyzed"

This confirms HUB's first benchmark: DLSS is 1-2 fps faster.

The same is true for Spider-Man: Miles Morales (SMMM), btw:

https://www.computerbase.de/2022-11..._nvidia_dlss_24_bildqualitaet_und_performance

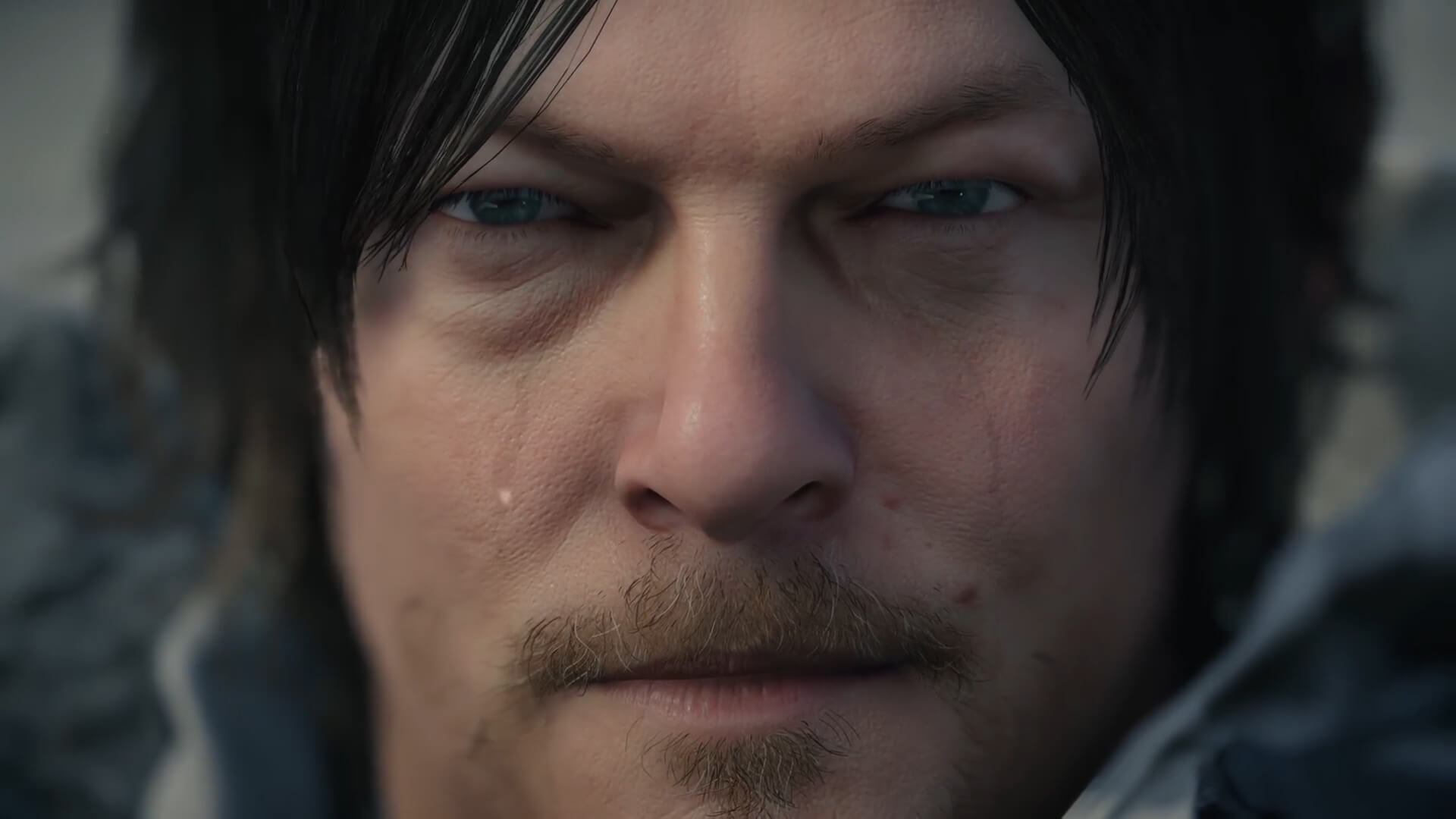

Death Stranding

DSOGaming (www.dsogaming.com): "Death Stranding is the first game that supports all available PC upscaling techniques (DLSS, FSR and XeSS) so we've decided to benchmark them"

Here DLSS is about 7 fps faster, rather than Steve's 2-3.

Destroy All Humans! 2 - Reprobed

DSOGaming (www.dsogaming.com): "Destroy All Humans! 2 - Reprobed uses Unreal Engine 4 and supports both NVIDIA's DLSS and AMD's FSR 2.0, so it's time to benchmark them."

DLSS is slightly faster

Uncharted

ComputerBase (www.computerbase.de): "Uncharted: Legacy of Thieves tested: AMD FSR 2 and Nvidia DLSS 2.4 at a glance / The image quality of AMD FSR 2.x and Nvidia DLSS 2.4"

DLSS is about on par

The only game where DLSS performing worse than FSR2 can be corroborated by other benchmarks is F1 2022:

ComputerBase (www.computerbase.de): "With patch 1.17, F1 22 gains AMD FSR 2.2. In the test, it has to measure up against FSR 1.0 and Nvidia DLSS in image quality and performance."

This is likely because the DLSS integration in F1 titles has always been rather bad.

This trend is typical. I read through most of the benchmarks that get published, and FSR2 being faster on GeForce is the exception, not the rule.

In fact, it is so obvious that most outlets that regularly ran DLSS vs FSR2 benchmarks last year have basically dropped them in favour of testing DLSS on compatible GeForce cards and FSR2 on everything else.

Now the "funniest" part is that Atomic Heart doesn't even support FSR2.

It's using FSR1.

Why did he even include it in the last video? To make his point stronger or something?

What things?

GeForces run FSR2 math on tensor units in parallel with FP32 shading and, if optimized properly, can likely hide the majority of FSR2's cost this way, which of course no one will do.

Radeons run it on the main SIMDs, eating cycles that would otherwise be spent on graphics rendering.

It's not equal no matter how you slice it.
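To put rough numbers on the serial vs parallel argument above, here's a toy frame-time model. It's a sketch under big assumptions: the millisecond figures and the `overlap` fraction are made up for illustration, the function names are hypothetical, and the model says nothing about whether FSR2 is actually scheduled that way on GeForce hardware. It only shows why hiding part of an upscaler pass behind other work would change the fps comparison.

```python
# Toy frame-time model. NOT measured data: all numbers below are hypothetical.

def frame_time_serial(shading_ms: float, upscale_ms: float) -> float:
    """Upscaler shares the same execution units as shading, so the costs add up."""
    return shading_ms + upscale_ms


def frame_time_overlapped(shading_ms: float, upscale_ms: float, overlap: float) -> float:
    """A fraction `overlap` (0..1) of the upscaler cost runs concurrently with
    shading and is hidden; the hidden time can never exceed the shading time."""
    if not 0.0 <= overlap <= 1.0:
        raise ValueError("overlap must be between 0 and 1")
    hidden_ms = min(upscale_ms * overlap, shading_ms)
    return shading_ms + upscale_ms - hidden_ms


def fps(frame_ms: float) -> float:
    """Convert a frame time in milliseconds to frames per second."""
    return 1000.0 / frame_ms


if __name__ == "__main__":
    shading_ms = 10.0  # hypothetical render cost before upscaling
    upscale_ms = 1.5   # hypothetical FSR2 pass cost
    serial = frame_time_serial(shading_ms, upscale_ms)
    overlapped = frame_time_overlapped(shading_ms, upscale_ms, overlap=0.8)
    print(f"serial:     {serial:.2f} ms = {fps(serial):.1f} fps")
    print(f"overlapped: {overlapped:.2f} ms = {fps(overlapped):.1f} fps")
```

With these made-up inputs the serial case lands at about 87 fps and the overlapped case at about 97 fps. The whole difference comes from the assumed `overlap` value, which is exactly the part that would need real profiling to back up.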