There’s only one way to calculate theoretical performance, which is:

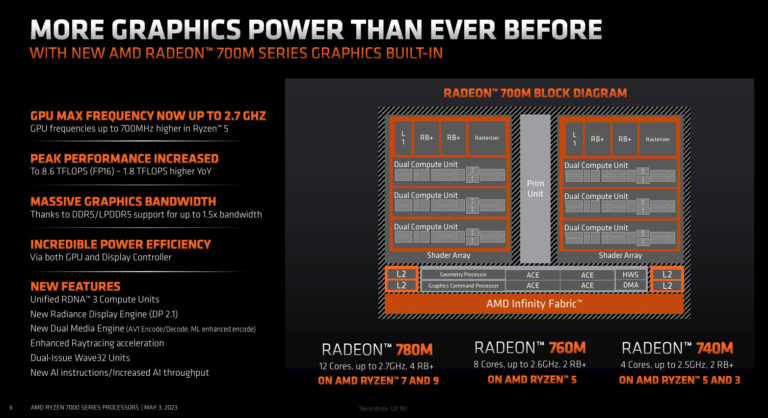

total number of cores (in this case 768 SPs) X 2 (each core performs 2 ops per clock) X clock speed (in MHz) / 1000000 (converting MFLOPS to TFLOPS) = 8.6 TFLOPS

Now let’s replace variables with actual numbers:

768 X 2 X Clk / 1000000 = 8.6

1536 X Clk = 8600000

Clk = 8600000 / 1536

Clk ≈ 5599 MHz
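
For anyone who wants to check the arithmetic, here is a quick Python sketch of the same calculation. The function names are my own (hypothetical), and the default of 2 ops per clock per core is the assumption taken from the formula above, not a confirmed spec.

```python
def theoretical_tflops(shader_count, clock_mhz, ops_per_clock=2):
    """Theoretical throughput in TFLOPS.

    shader_count * ops_per_clock * clock_mhz gives MFLOPS;
    dividing by 1,000,000 converts MFLOPS to TFLOPS.
    ops_per_clock=2 is the assumption used in the post.
    """
    return shader_count * ops_per_clock * clock_mhz / 1_000_000


def required_clock_mhz(target_tflops, shader_count, ops_per_clock=2):
    """Clock speed (MHz) needed to reach target_tflops under the same formula."""
    return target_tflops * 1_000_000 / (shader_count * ops_per_clock)


if __name__ == "__main__":
    # 768 SPs and the advertised 8.6 TFLOPS, as quoted in the post
    clock = required_clock_mhz(8.6, 768)
    print(f"Required clock: {clock:.0f} MHz ({clock / 1000:.2f} GHz)")
```

Changing `ops_per_clock` shows how sensitive the result is to that one assumption: doubling it halves the required clock.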

For the ROG Ally Z1 Extreme to hit 8.6 TFLOPS, the GPU would need to run at a clock speed of ~5.6 GHz. To my knowledge, not even liquid-cooled desktop GPUs reach that clock speed, let alone a handheld GPU. Even allowing for boost clocks, this seems questionable. I'd appreciate your thoughts.