Sega_Model_4

Newcomer

Profit isn’t a four-letter word like so many seem to think it is.

In what way is it worse than what came before?

I have no idea how you think product segmentation causes FOMO.

Outside of the false statements above, it sounds like how you stay in business and pay your employees and owners.

I don't know why you split my text into pieces, since the argument is meant to be read as a whole. The problem is not profit; the problem is adopting practices that are bad for the consumer in order to achieve it. For example, I can sell pure whole milk, or I can water it down to make more product and more money.

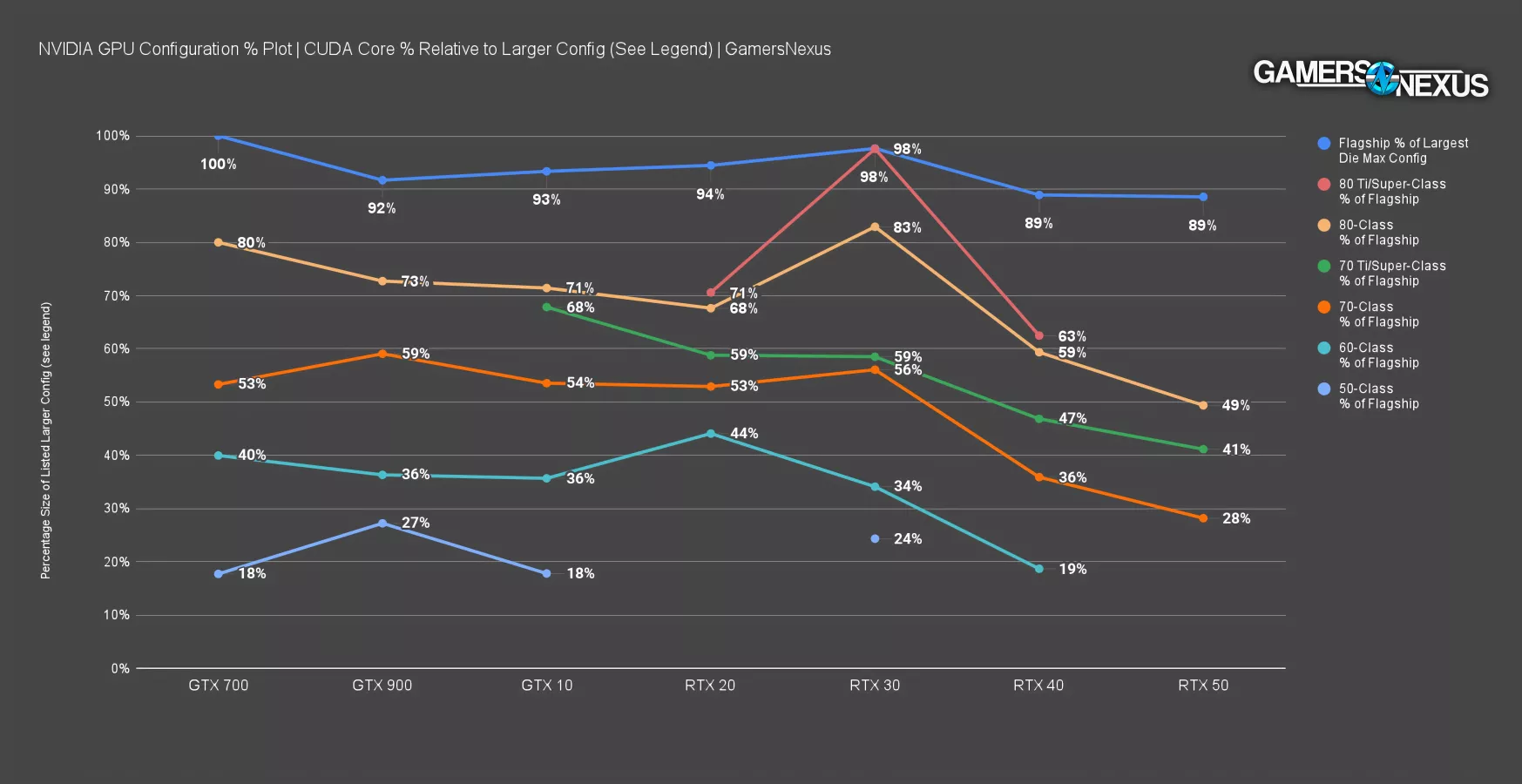

Nvidia makes a worse product today than it did in the past. The chips are cut down very aggressively relative to the flagship, and the performance improvement is small. A 60-series chip used to be half the flagship chip. Today, it is the 80-series chip that is half the flagship.

The segmentation creates FOMO in the following way. You look at the 5060 and see that it has 8 GB of memory. Many games run poorly with that little memory, so you look one segment up. The 5060 Ti 16 GB seems better, but not that much better, and the gap to the 5070 is large while the 5070's price is close to that of the 5060 Ti 16 GB. However, the 5070 only has 12 GB, which can be limiting in future games, and you want a card that will last a good few years. Finally, you end up getting the 5070 Ti, which is 3x the price of the 5060.

It's the same strategy that Apple uses in its products: you always feel the need for something better, and every step up has some shortcoming that pushes you higher and higher.