Next-Generation NVMe SSD and I/O Technology [PC, PS5, XBSX|S]

- Thread starter Shortbread

I thought on PS5 you didn't have to "use" the decompressor; it was done by default by the API / hardware if the format / compressor was right (so Oodle / Kraken)?

Devs need to use the hardware API; after that, decompression is automatic. But if you call the software Oodle API, it will not use the hardware path, from what Fabian Giesen said:

Most ports and cross-generation titles (which is almost everything currently released on Xbox Series X and PS5) don't use the HW decoders at all and do SW decompression on the CPU cores. We're still selling lots of Oodle Data licenses for PS5 and Xbox Series X titles, despite both having free HW decompression. Just because it's available doesn't mean software actually uses it.

Either device has 16GB of RAM and easily reads above 3.2GB/s with decompression, and a good chunk of RAM goes into purely transient memory like GPU render targets etc, so in practice there's a lot less data that actually needs to be resident, especially since many of the most memory-intensive things like high-detail mip map levels don't need to be present for the first frame to render and can continue loading in the background. Any load times significantly above 5 seconds, on either device, have nothing to do with either the SSD or codec speeds and are bottlenecked elsewhere, usually CPU bound.
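The 5-second figure above can be reproduced with simple arithmetic. The sketch below is only a back-of-the-envelope check: the 16GB and 3.2GB/s numbers come from the quote, while the 60% resident fraction is a made-up illustration of "a lot less data actually needs to be resident", not a measured value.

```python
def fill_seconds(resident_gb, read_gb_per_s):
    """Time to stream `resident_gb` of decompressed data at a sustained rate."""
    return resident_gb / read_gb_per_s

RAM_GB = 16.0        # from the quote: total RAM on either console
READ_GB_PER_S = 3.2  # from the quote: read rate with decompression

# Upper bound: filling every byte of RAM from storage, about 5 seconds.
full_fill = fill_seconds(RAM_GB, READ_GB_PER_S)

# Hypothetical: only 60% of RAM must be resident before the first frame.
ASSUMED_RESIDENT_FRACTION = 0.6  # assumption, not from the source
partial_fill = fill_seconds(RAM_GB * ASSUMED_RESIDENT_FRACTION, READ_GB_PER_S)

print(f"full RAM: {full_fill:.1f} s, assumed resident set: {partial_fill:.1f} s")
```

Even the upper bound lands at about 5 seconds, which is why longer load times point to a bottleneck somewhere other than the SSD or the codec.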

EDIT:

@Flappy Pannus Have you seen tons of current-gen exclusives? There are A Plague Tale: Requiem, The Callisto Protocol, Gotham Knights, the Demon's Souls remake, Forspoken, TLOU Part 1 and Ratchet & Clank: Rift Apart. We know, for example, that HFW doesn't use an ID buffer to build a visibility buffer because it is not available on PS4, only on PS4 Pro and PS5. I doubt cross-gen games aren't designed around the last-gen console.

I really wish AMD's DirectStorage sample from their GDC talk was available for download. I'd like to try it out.

Anyway, looking at the results from the video:

You can clearly see that DirectStorage whether utilizing GPU decompression or not, is simply much quicker than without. Just look at the CPU Load Times....massive savings there. As the size of the asset goes up, the scaling improves. The CPU without DirectStorage can't even load the SpaceShuttle asset in the time that they travel through the bounding box and thus never fully loads in time. You can also see that DirectStorage with GPU decompression is over 2x as fast at loading the asset than CPU decompression.

Hopefully they put it up for download soon.

I think this is a bit of a misreading. It isn't a CPU limitation, it's a code limitation. The CPU isn't "busy" in the slow examples at all; the big difference here (and the reason you're seeing large assets gain more) is that we're finally stuffing the disk I/O queue full of parallel things to do.

Really, this issue dates back to games being written for storage based on ATA standards. Until NVMe showed up, every disk I/O request was a serial request -- issue request, wait to complete, repeat. NVMe gives us the ability to issue parallel commands (most commodity drives expose four simultaneous I/O channels) which thus allows a significant increase in I/O capability. And here's the trick: many high performance Windows applications exist which have figured out how to properly queue and pipeline disk I/O to really drive the maximum performance from the I/O stack.

The really big winner here is the IORing work; it allows a developer to skip writing the hard part of pipelining and queueing, and instead use a kernel API call to load up the assets in the fastest way possible. It's not about CPU bypass, it's about finally pushing disks to their full ability without an app developer having to think about making it work.

The main bottleneck being solved is a code problem, not a CPU or disk problem.
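To make the "issue request, wait, repeat" versus "keep the queue full" distinction concrete, here is a minimal sketch in Python. It is not IORing or DirectStorage; it only illustrates the idea of keeping several reads outstanding at once, using a thread pool and `os.pread` (Unix-only). The chunk size, the `in_flight` depth, and all function names are assumptions picked for illustration.

```python
import os
import tempfile
from concurrent.futures import ThreadPoolExecutor

CHUNK = 64 * 1024  # hypothetical request size, not taken from the thread


def read_serial(path, chunk=CHUNK):
    """One request at a time: issue a read, wait for it, repeat."""
    parts = []
    with open(path, "rb") as f:
        while True:
            block = f.read(chunk)
            if not block:
                break
            parts.append(block)
    return b"".join(parts)


def _pread_full(fd, length, offset):
    """Positional read that retries on short reads until EOF or `length` bytes."""
    buf = bytearray()
    while len(buf) < length:
        piece = os.pread(fd, length - len(buf), offset + len(buf))
        if not piece:  # reached end of file
            break
        buf += piece
    return bytes(buf)


def read_queued(path, chunk=CHUNK, in_flight=8):
    """Keep up to `in_flight` positional reads outstanding at once."""
    size = os.path.getsize(path)
    fd = os.open(path, os.O_RDONLY)
    try:
        with ThreadPoolExecutor(max_workers=in_flight) as pool:
            # pread takes an explicit offset, so workers never share a file cursor;
            # pool.map returns results in offset order.
            parts = list(pool.map(lambda off: _pread_full(fd, chunk, off),
                                  range(0, size, chunk)))
    finally:
        os.close(fd)
    return b"".join(parts)


def self_check(size=300_000):
    """Write random bytes to a temp file and confirm both readers agree."""
    data = os.urandom(size)
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(data)
        path = tmp.name
    try:
        return read_serial(path) == data and read_queued(path) == data
    finally:
        os.remove(path)
```

Both functions return the same bytes; the only difference is how many requests the storage stack sees at once. On a small or cached file the queued version will not look faster, so treat this as a picture of the access pattern rather than a benchmark.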

I didn't think I was insinuating that it was a CPU limitation. I thought the takeaway from my post was that it's more a code limitation of the old API than anything else, considering the improvement when using the CPU with DirectStorage.

But yea, that's interesting about IORing. Whatever makes it simpler and easier for developers to implement the better.

What would be the point? If decompression is automated and requires only to be presented the proper format, why would a dev ever go the software route?

Devs are just paying extra to unnecessarily consume cpu cycles and pci-e bandwidth. There has to be a motivating factor.

Because it is additional work on consoles. They want the same code for old gen and current gen. Devs won't buy an additional license for pleasure; this is money, and I suppose it is cheaper than maintaining two versions of the code. In his answer, Fabian Giesen said they have the CPU cycles, enough RAM, and the PCIe bandwidth to do it. Some cross-gen games like HFW or Spider-Man probably use the HW decompressor, but it seems this is not the case all the time.

You've been on this 'the CPU processing savings with DirectStorage doesn't matter' trick for quite a while now, haven't you? Wasn't it you who kept trying to suggest that CPU decompression was still more than enough for modern, high bandwidth applications?

I think this is properly debunked by now. Everything you're saying about parallel I/O queuing being a big advantage is true, but this simply goes alongside the simultaneous advantages of freeing up CPU overhead.

It'd be one thing if we expected modern games to not tax CPU's very hard, much like we saw with most last gen games, but this is definitely going to change(and is already changing). Everything that frees up CPU resources will be welcome. I mean, Microsoft and Sony didn't go out of their way to include dedicated decompression and audio hardware in their consoles for no reason. If the CPU's were gonna be more than enough for all this, why would they bother?

As a matter of fact, I have. Turns out I was right. It was never a CPU bottleneck, or an I/O bottleneck, it was a code bottleneck the whole time -- exactly as I described. This is surprising to literally nobody who actually does this for a living.

Here's a link to me saying exactly as much: https://forum.beyond3d.com/threads/...mart-access-storage.62362/page-2#post-2216377

Actually I spoke even more at length about how CPU cycles really aren't linked to I/O here: https://forum.beyond3d.com/threads/...mart-access-storage.62362/page-2#post-2216382

What YOU might be conflating is me reminding people that removing the CPU from the path of storage to VRAM is functionally impossible in how filesystems non-contiguously map back to volumes, which may non-contiguously map back to logical blocks, which may non-contiguously map back to physical blocks presented by drivers, which then actually may non-contiguously map back to physical topology (eg RAID cards or HBAs.) Here's a link of me saying it: https://forum.beyond3d.com/threads/...mart-access-storage.62362/page-3#post-2255338

Turns out I was exactly right about that too, the CPU still ends up pulling the file into memory via the filesystem (albeit now only one copy in memory!) and then moving it into VRAM. Nowhere in any of the modern DirectStorage slides have we seen any indication of block level reads coming from an NVMe drive to VRAM. And we won't see it until the pile of stuff I described somehow gets solved -- and none of it is easy, nor is it really necessary for high performance I/O.

Actually no, very specifically I said GPU decompression might be the only really significant new thing DirectStorage may provide. Here's me saying exactly as much in the first link I posted above:

I also buy into the GPU decompression conversation being more of the "meat and potatoes" of a newfangled feature being added.

However, that isn't to say that data compression hasn't happened for decades (it has) and that somehow conflating all this new "compression" with the actual performance increases in level load times was a foolish link (it is.) Here's another thread of me saying exactly this: https://forum.beyond3d.com/threads/...mart-access-storage.62362/page-2#post-2216696

As I have stated previously multiple times, I absolutely buy decompression needs can be far more highly optimized. <snip> Now, sustaining that "bandwidth" while decompressing textures needing extra CPU, as specifically linked to the decompression function itself? Yup, I buy that all day.

Looks like your memory is crap.

LOL, what are you even talking about? Everything I said would come to fruition, has.

Look at my first link again:

I suspect what we're really facing here is an API which does all the heavy lifting work for game designers who don't want to put in the code effort, which is truly fine. Making it easier for a dev is a rational and reasonable argument, far more so than making the kernel servicing I/O in some remarkably, game-changing (ha!) faster way. I'm sure the kernel can use more tweaking as all code can; it isn't bottlenecking disk I/O today on the crap storage we find in commodity grade consumer devices like typical NVMe drives.

I wrote that 20 months ago, and guess what: IORing did exactly, precisely what I thought it would.

Step off my far larger nutsack than yours, junior.

Flappy Pannus

Veteran

According to Nvidia GDeflate is a candidate for fixed function hardware. Would be interesting to see if any PC IHVs spend transistors on it in the future.

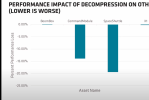

Was thinking about this since I saw those GDC slides from AMD on DirectStorage; this is the hit a 7900XT takes (the Space Shuttle is a 1GB compressed file):

Now this isn't necessarily representative of the 'hit' you can expect in a game, even with how fast it is, decompressing that asset took around 250 ms - so if your rendering engine is waiting on those materials, you're going to get a very large stutter - albeit of course, that depends on how soon/late you're calling it in. So there are ways to manage this in smaller chunks of course, and the resulting GPU hit will be lower as a result.

Still....1GB compressed is not that large, and that's a very powerful GPU.

But yeah, I'm thinking why even 'burden' the GPU with this at all and just have GPU's with fixed function hardware to do this going forward? AFAIK the silicon area actually dedicated to this in the PS5 is quite small.

I don't think that's how it works. If that was the case... the CPU would take longer... and rendering would still have to halt until it's loaded...

Those metrics are simply showing the difference in time... and shouldn't be misunderstood as the time a game will be stopped until those assets are loaded. And if you watch the presentation.. there's no stuttering happening at all. The frametime graph they show (which does show bumps) is in "us", meaning microseconds, not milliseconds.

Flappy Pannus

Veteran

Was editing as you replied. But yes I am obviously not well educated on how modern streaming systems function.

Granted, but isn't that managed today in part by having outsized VRAM requirements - the hit to the CPU is managed by decompressing at a much slower rate and filling up VRAM in anticipation of the assets the player will be encountering, long before they're actually needed?

The 'hope' I've seen from some with DS 1.1 is that this will alleviate some pressure on VRAM by delaying the need to call in these assets from storage until they're closer to actually being needed, and the assets for the section just traversed can be ejected (they can't be held until the exact frame they're going to be pulled in of course, GPU hit or not). My concern is that if there is a noticeable GPU hit when decompressing these large chunks, then you might have periodic GPU spikes during this process. Or you do it very similarly to the way it is now, but we're back to still needing lots of VRAM.

Far, far better than constant high CPU load of course! The way it is now, we need a ton of vram, and also have constant high CPU usage (well, maybe - again I shouldn't be using TLOU as the base when I've critiqued others for doing the same). Even if these usage 'spikes' were to occur, you can manage this from an end user perspective far easier if you get 'stutters' because your GPU usage jumps 15% for a second, just lower your settings vs. getting a new CPU.

I'm just wondering if there's a cost effective way to avoid even this small possibility entirely.

The second graph I posted yes, that much is clear. CPU DS decompression is 1/2 to 1/3 as performant as the GPU in time taken, and I'm sure during that demo it's fully utilized. The "% performance loss" graph is what I'm largely looking at though. That doesn't mean a game with DS GPU is going to be utilizing 20% of your 7900XT constantly, but 1GB of compressed assets is not that large...is it? I have no idea what size chunks PS5 games that fully utilize the IO system are pulling in at one time.

That's interesting data. However I'd say 1GB compressed (so 2GB in VRAM) is a hell of a lot to want to stream in during gameplay over such a small period of time (equivalent to 8GB/s!). That's essentially the same as refreshing the entire 16GB framebuffer of a 4080 in 2 seconds flat. That's not normal streaming behaviour. I assume you'd be streaming that amount of data in much more slowly. Forspoken, for example, had some rather "crazy" streaming of 500MB/s, or 1/16th of that, which would result in a little over 1% performance loss on the 7900XT assuming it's linear. The Matrix city demo was only 150MB if I recall correctly, which tallies well with Nvidia's earlier claims that the performance hit from this is basically unnoticeable.
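For anyone who wants to check the numbers in that paragraph, here is the arithmetic as a small Python sketch. The inputs are the figures quoted in this thread (1GB compressed, roughly 2:1 compression, about 250 ms to decompress, and the roughly 20% hit read off the AMD graph); the linear scaling is the same simplifying assumption the post makes, not a measured relationship.

```python
def decompressed_rate_gb_s(compressed_gb, ratio, seconds):
    """Decompressed output rate implied by an asset size and a decode time."""
    return compressed_gb * ratio / seconds


def linear_gpu_hit_percent(stream_gb_s, ref_gb_s, ref_hit_percent):
    """Scale a reference GPU hit linearly with streaming rate (an assumption)."""
    return ref_hit_percent * stream_gb_s / ref_gb_s


# Figures quoted in the thread for the Space Shuttle asset on a 7900XT.
demo_rate = decompressed_rate_gb_s(compressed_gb=1.0, ratio=2.0, seconds=0.25)

# Time to rewrite a 16GB card's entire VRAM at that rate.
refill_seconds = 16.0 / demo_rate

# Estimated hit at a 500MB/s streaming rate, given a ~20% hit at the demo rate.
hit_at_500mb = linear_gpu_hit_percent(0.5, demo_rate, ref_hit_percent=20.0)

print(demo_rate, refill_seconds, hit_at_500mb)  # 8.0 2.0 1.25
```

So 500MB/s is 1/16th of the demo's 8GB/s, and under the linear assumption the hit drops from about 20% to 1.25%.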

Obviously much faster (within a frame) loads of smaller amounts but with very high throughput would have the potential to hit the performance harder. The limits of modern SSD's is around 14GB/s so if you max that out, it equates to a roughly 40% drop off for a period of a few ms. Although the real world drop off would likely be much smaller as the short time period would make slotting the workload into spare compute resources much easier.

My previous position on this was that it won't happen because it would eliminate the flexibility of the GPU-based approach to use different compression formats. But since we seem to have standardised on GDeflate, I think it does actually make sense for the GPU IHVs to include this on the board as long as it doesn't increase costs too much.

For those who watched the AMD presentation on DirectStorage, it's worth noting the accelerated GPU decompression comes at a notable cost: significant VRAM consumption. Compare these two slides from the AMD presentation; pay attention to the little flow blocks which indicate copies of the asset data: (hint: press play on each one, then press pause as it finally starts to play, and then "x" out of the more videos that shows up so you can see the full slide on pause)

The 15:30 mark:

The 15:55 mark:

In the earlier slide, he calls out up to 5x copies of the asset data in memory: four in main memory, and the final asset data in GPU memory. Now look in the next slide, we still have just as many potential copies of the data, but now 3x of them live in VRAM. It's also worth considering, when the resources are decompressed in main memory, we can elect which sub-resources are transferred over the PCIe bus for inclusion in the next frame -- the whole asset doesn't actually HAVE to be in VRAM. With DirectStorage, the entire parent resource is loaded with all subresources into VRAM in their three buffered / staged states.

So it isn't just a tripling of VRAM consumption from today, because today we can selectively decide which subresources need to move over. No, the entire resource chain is a preallocated blob now, so everything about the resource is potentially moved into VRAM. This means our VRAM consumption (on a per-asset basis) is possibly a whole lot more than 3x what we face today.

GPU decompression is definitely faster; it also comes at a cost and it isn't just GPU cycles.

That's completely ridiculous... There's the staging buffer size, typically 128MB - 256MB, and the size of the asset. The asset is compressed in VRAM.. and decompresses to the size the asset would have been transferred over the PCIe bus the old way..

So essentially you're adding the staging buffer size onto the VRAM.. so it will go up, likely around 1GB at most.. and yet allow you to stream in data much faster.

What’s the definition of a subresource? DirectStorage supposedly supports tiled resources with tile size at 64KB and you can choose to stream / decompress a single tile.

I understand your statement, but also consider the differences. In a prior life, we could pick and choose which very specific pieces of an asset needed to come out of system RAM into the VRAM pool. If you want a hand-wavey example, think of miplevels on a megatexture. No need to move the entire texture and all miplevels as a single pool, when you could move just the specific miplevel and even at block-granularity. Yeah, they came across the PCIe bus, but only those specific parts you needed. Is it still going to be faster to use GPU decompression? Absolutely. Is it going to come at a VRAM cost? Absolutely.
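To put a rough number on the miplevel example, the sketch below sizes the mip chain of a block-compressed texture. The 4096x4096 dimensions and the 16 bytes per 4x4 block (the BC7 block size) are illustrative choices, not figures from the AMD talk, and the function name is made up for this post.

```python
import math


def bc_mip_chain_bytes(width, height, bytes_per_block=16, block_dim=4):
    """Byte size of each mip level of a block-compressed texture, largest first."""
    sizes = []
    while True:
        # Block-compressed formats store whole blocks, so round up per axis.
        blocks_x = math.ceil(width / block_dim)
        blocks_y = math.ceil(height / block_dim)
        sizes.append(blocks_x * blocks_y * bytes_per_block)
        if width == 1 and height == 1:
            break
        width = max(1, width // 2)
        height = max(1, height // 2)
    return sizes


levels = bc_mip_chain_bytes(4096, 4096)   # hypothetical texture
whole_chain = sum(levels)                  # cost of moving every level
top_mip_share = levels[0] / whole_chain    # share taken by the largest level
without_top_two = sum(levels[2:])          # cost if the two largest levels stay out

print(f"{len(levels)} levels, top mip is {top_mip_share:.0%} of the chain")
print(f"all levels: {whole_chain} bytes, minus top two: {without_top_two} bytes")
```

The largest level alone is roughly three quarters of the chain, so being able to leave it (or parts of it) out of VRAM is where most of the saving in the selective approach would come from.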

And consider how much moaning we've all endured about how modern games are so VRAM hungry. 1GB of extra VRAM is meaningful when the overwhelming majority of video cards on the market are 8GB of VRAM or less... Versus system RAM which, bluntly, is a whole lot cheaper and typically larger (or at least, easier to make larger in a pinch.) For a VRAM-constrained system, GPU decompression has a good probability of being worse.

Here's the great news: GPU decompression is just one aspect of DirectStorage; the really BIG part (IMO, of course) of DirectStorage is the IORing API which is what gets all the fantastic loading speed increases. As such, we may see GPU decompression as an optional feature, reserved for those cards with enough VRAM to really make use of it. Nothing really wrong with that, IMO.

Start watching around here:

This precomputed blob stores all the linked resources for the asset, and according to the AMD presentation, those are called subresources (he's talking about these around the 14m mark. Also see the API documentation on-screen, specifically calling out SubResources class.)

Let's say there's a 1GB overhead required for decompression.. surely games which utilize DirectStorage and more specifically GPU decompression, can lean more heavily on the fact that data coming over the PCIe bus is ~half the size it would otherwise be.. and surely their games will be designed around streaming in assets with that guarantee in mind.

And let's consider the different measurements of bandwidth achieved utilizing different sized staging buffers:

A staging buffer of 32MB - 64MB seems to be sufficient for Gen3/4 NVMe drives, which is likely to be more than enough for anything games will be streaming in at runtime. Let's not forget, the goal of these tech demos is to show the max bandwidth attainable utilizing the GPU to decompress.. This one in particular uses a 256MB buffer, and while we can't test it directly yet.. I bet VRAM utilization is quite similar between CPU decompression and GPU decompression. We already know the GPU can decompress at extremely high bandwidths... but nothing about any of these tech demos is considering how to optimally stream in data while utilizing the lowest amount of resources possible.

I'm fairly certain that developers making games with this tech in mind will be able to keep fewer assets resident in memory, decreasing VRAM utilization, and lean more on the increased bandwidth available over the PCIe bus due to the assets still being compressed. These assets also remain compressed in system RAM.. meaning more data can be loaded into system RAM upfront if desired.

That's all fine and great. What happens when you run out of VRAM?

The picture from the AMD deck isn't my material, so I'm not sure why you're arguing with me about it, I'm only here to point it out to anyone who missed it. Funny anecdote: note in the slide deck, the "up to 5x copies" footnote never disappears even when showing the 3x copies in VRAM and 2x copies in main memory.

I'm sorry if you don't like the message; it isn't my message.
