Jawed said: Dunno. I'm still trying to understand why HDR isn't rendered directly into the framebuffer - what is all this compositing stuff?

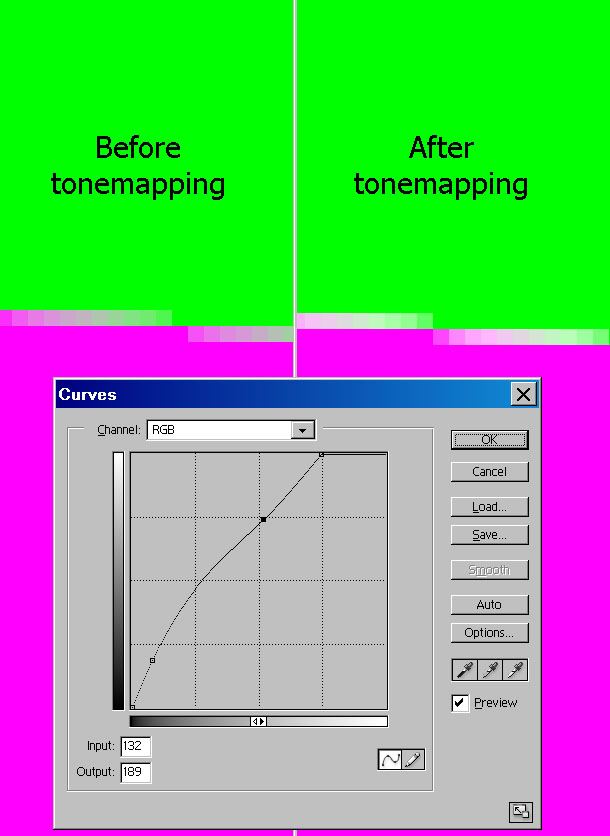

You'd need an FP16 framebuffer. The compositing stuff is mainly adding bloom and/or working around the lack of blending by rendering to different buffers and then combining them at the end. You also need the tonemapping pass at the end. Unless you're just going to use a plain scale operation to reduce the range, you can't express the math, 1 - exp(-exposure * x), with blending, so you'd have to render into a separate buffer and use that as the input for a final pass that goes to the framebuffer.
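To make the final pass concrete, here's a rough CPU-side sketch in Python of what that pass computes per pixel. The names `tonemap` and `final_pass`, the pixel layout (RGB float tuples), and the 8-bit quantization are all just assumptions for illustration; on real hardware this would be a fragment shader reading the HDR buffer as a texture.

```python
import math

def tonemap(x, exposure):
    # Exponential operator from the post: 1 - exp(-exposure * x).
    # Maps [0, inf) into [0, 1), which a fixed blend equation can't do.
    return 1.0 - math.exp(-exposure * x)

def final_pass(hdr_buffer, exposure):
    # Hypothetical final pass: read each HDR pixel (RGB floats),
    # tonemap every channel, and quantize to an 8-bit framebuffer value.
    framebuffer = []
    for pixel in hdr_buffer:
        framebuffer.append(
            tuple(round(255 * tonemap(c, exposure)) for c in pixel)
        )
    return framebuffer

# Made-up HDR values, some well above 1.0.
hdr_buffer = [(0.0, 0.5, 1.0), (4.0, 16.0, 100.0)]
ldr = final_pass(hdr_buffer, exposure=1.0)
print(ldr)
```

The point is that the output depends nonlinearly on the complete HDR value, so everything has to be accumulated in the separate buffer first and only then run through the operator once.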