agent_x007

Newcomer

First of all: hello, everyone!

I know it's a silly (and quite complex) question, but I want to check whether I correctly understand the idea of 3D rendering in the context of GPU architecture.

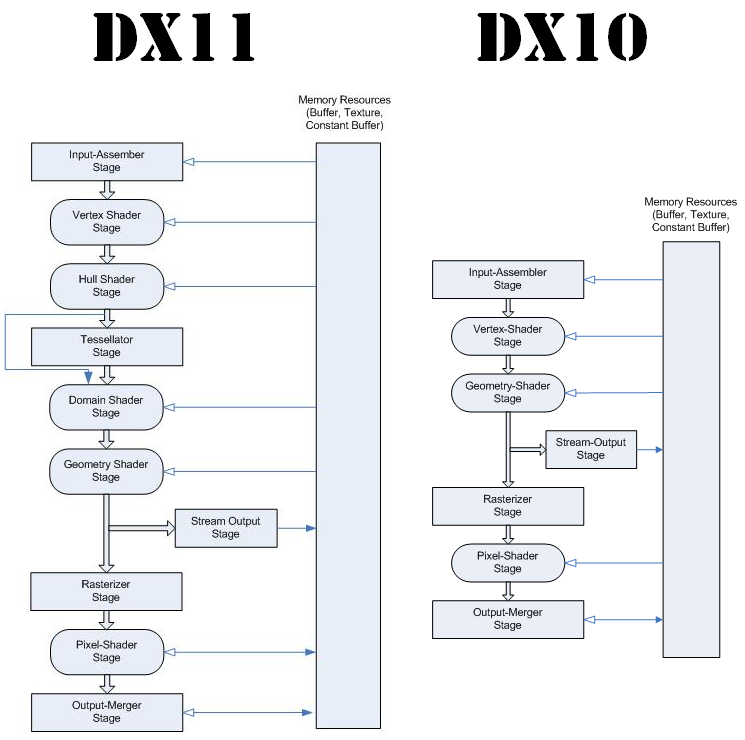

To put it simply, I want to connect these:

With those:

I do know (more or less) how Deferred Rendering works, and since it's quite common in games these days, I want to base my description on it.

So, here's how I think modern GPUs work:

DX10 (as handled by GT200, aka GTX 2xx):

We start at the VS (Vertex Shader), which works on points in space (delivered by the CPU over PCI-e).

The VS transforms them (move, rotate, etc.) any way we want and combines them to form vertices and primitives/objects.
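To make the "move, rotate" part concrete, here is a minimal Python sketch of the kind of math a vertex shader typically runs once per vertex: multiplying the position by a 4x4 matrix. The helper names (`translation`, `rotation_z`, `vertex_shader`) and the single "model" matrix are illustrative assumptions, not any real API.

```python
import math

def mat_mul_vec(m, v):
    """Multiply a 4x4 row-major matrix by a 4-component column vector."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]

def mat_mul(a, b):
    """Multiply two 4x4 row-major matrices (a applied after b)."""
    return [[sum(a[r][k] * b[k][c] for k in range(4)) for c in range(4)] for r in range(4)]

def translation(tx, ty, tz):
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]

def rotation_z(angle):
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0, 0], [s, c, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]

def vertex_shader(position, model):
    """Hypothetical vertex shader: object-space (x, y, z) -> transformed (x, y, z, w)."""
    x, y, z = position
    return mat_mul_vec(model, [x, y, z, 1.0])

# Rotate 90 degrees about Z, then move 5 units along X.
model = mat_mul(translation(5, 0, 0), rotation_z(math.pi / 2))
print([round(c, 6) for c in vertex_shader((1.0, 0.0, 0.0), model)])
```

Real engines usually fold model, view and projection into one matrix so each vertex costs a single matrix-vector multiply.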

The Vertex Shader stage uses the "Streaming Processors" (SPs) in GT200, together with cache and VRAM to move data around.

The next stage, the Geometry Shader (GS), does a similar thing to the VS but on a larger scale (entire vertices, objects/primitives), and it can also create new vertices (the VS can't create new geometry).
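As an illustration of "it can create new vertices", here is a minimal Python sketch of a geometry-shader-like function that receives one triangle and emits four by splitting each edge at its midpoint. The function names and the subdivision scheme are assumptions chosen for the example, not what any particular game's GS does.

```python
def midpoint(a, b):
    """Point halfway between two 3D points."""
    return tuple((p + q) / 2 for p, q in zip(a, b))

def geometry_shader(tri):
    """Hypothetical GS: one input triangle in, four output triangles out.

    All four outputs keep the winding order of the input triangle.
    """
    a, b, c = tri
    ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
    return [(a, ab, ca), (ab, b, bc), (ca, bc, c), (ab, bc, ca)]

tri = ((0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (0.0, 2.0, 0.0))
out = geometry_shader(tri)
print(len(out))
```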

Like the VS, the GS is handled by the SPs.

After all transformations are complete, we rasterise the image (i.e. convert the 3D space of vertices into the 2D space of pixels: triangles in, pixels out) using the GPU's Rasteriser.
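"Triangles in, pixels out" can be sketched with edge functions, a common way to decide which pixel centres fall inside a screen-space triangle. This is a minimal Python sketch assuming y-up coordinates and a counter-clockwise triangle; it brute-forces every pixel and ignores the tie-breaking fill rules real hardware applies to pixels exactly on an edge.

```python
def edge(ax, ay, bx, by, px, py):
    """Signed area test: > 0 if P is to the left of edge A->B."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)

def rasterise(v0, v1, v2, width, height):
    """Return the (x, y) pixels whose centres lie inside a CCW 2D triangle."""
    pixels = []
    for y in range(height):
        for x in range(width):
            px, py = x + 0.5, y + 0.5  # sample at the pixel centre
            w0 = edge(*v1, *v2, px, py)
            w1 = edge(*v2, *v0, px, py)
            w2 = edge(*v0, *v1, px, py)
            if w0 >= 0 and w1 >= 0 and w2 >= 0:  # inside all three edges
                pixels.append((x, y))
    return pixels

covered = rasterise((0, 0), (4, 0), (0, 4), 4, 4)
print(len(covered), covered[:4])
```

The three `w` values are also (unnormalised) barycentric weights, which is what lets the hardware interpolate vertex attributes across the triangle.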

Next, we have to "put wallpaper" on our new pixels to figure out what colour they should have (using the GPU's TMUs), and after that we pass them to the Pixel Shader (PS), which can do interesting stuff to them and takes care of lighting the entire scene (the Deferred Rendering "thing"). The PS again runs on the GPU's SP units.
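The deferred lighting step can be sketched like this: an earlier geometry pass stores per-pixel surface data (a "G-buffer"), and a later lighting pass shades every stored pixel once. This minimal Python sketch assumes the G-buffer holds only albedo (already textured) and a normal, and uses one directional light with a simple Lambertian diffuse term; real G-buffers usually carry more (depth, specular, etc.).

```python
def normalize(v):
    length = sum(c * c for c in v) ** 0.5
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def lighting_pass(gbuffer, light_dir, light_colour):
    """Deferred lighting: shade every stored pixel once, after all geometry is drawn.

    gbuffer maps (x, y) -> (albedo_rgb, normal_xyz), as a hypothetical geometry
    pass would have written it. light_dir points from the surface towards the light.
    """
    l = normalize(light_dir)
    out = {}
    for pos, (albedo, normal) in gbuffer.items():
        n_dot_l = max(0.0, dot(normalize(normal), l))  # Lambertian diffuse term
        out[pos] = tuple(a * c * n_dot_l for a, c in zip(albedo, light_colour))
    return out

gbuffer = {
    (0, 0): ((1.0, 0.5, 0.2), (0.0, 0.0, 1.0)),   # faces the light
    (1, 0): ((1.0, 0.5, 0.2), (0.0, 0.0, -1.0)),  # faces away from it
}
frame = lighting_pass(gbuffer, (0.0, 0.0, 1.0), (1.0, 1.0, 1.0))
print(frame[(0, 0)], frame[(1, 0)])
```

The point of the split is that lighting cost scales with the number of screen pixels, not with how many triangles overlapped each pixel.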

An important thing to note is that SPs operate in blocks, and SPs in the same SM can't do two different things (like PS and VS) at the same time.
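"Operating in blocks" is usually explained as lockstep (SIMD/SIMT) execution: a group of lanes shares one instruction stream, so when lanes want different code paths, the group runs both paths one after the other with some lanes masked off. This is a simplified Python model of that idea, not how any specific GPU schedules work; the 4-lane group is arbitrary (NVIDIA's warps are 32 threads).

```python
def run_lockstep(lanes, condition, if_branch, else_branch):
    """Execute an if/else for a group of lanes the way a SIMD/SIMT unit does.

    All lanes share one instruction stream: if lanes disagree on `condition`,
    the group runs BOTH branches in turn, masking off the lanes that did not
    take each branch. Returns (results, number_of_branch_passes).
    """
    mask = [condition(v) for v in lanes]
    results = list(lanes)
    passes = 0
    if any(mask):
        passes += 1
        results = [if_branch(v) if m else r for v, m, r in zip(lanes, mask, results)]
    if not all(mask):
        passes += 1
        results = [else_branch(v) if not m else r for v, m, r in zip(lanes, mask, results)]
    return results, passes

res, passes = run_lockstep([1, 2, 3, 4], lambda v: v % 2 == 0, lambda v: v * 10, lambda v: -v)
print(res, passes)
```

When all lanes agree, only one pass is needed; divergence costs the group the time of both paths.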

After all that, all that's left is to blend our pixels into something more useful than a bunch of numbers, so we feed them into the ROP unit(s), which give us an image frame as output.
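Two typical ROP jobs are the depth test and blending into the frame buffer. This minimal Python sketch assumes a fixed "less than" depth comparison and standard "source over" alpha blending; in real APIs both the comparison and the blend equation are configurable, and `rop_write` is a hypothetical name.

```python
def rop_write(framebuffer, depthbuffer, x, y, colour, alpha, depth):
    """Hypothetical ROP step: depth test, then 'source over' alpha blending."""
    if depth >= depthbuffer[y][x]:  # not closer than what is stored: discard
        return False
    dst = framebuffer[y][x]
    framebuffer[y][x] = tuple(alpha * s + (1.0 - alpha) * d for s, d in zip(colour, dst))
    depthbuffer[y][x] = depth
    return True

fb = [[(0.0, 0.0, 1.0)]]  # 1x1 frame, currently blue
zb = [[1.0]]              # depth cleared to the far plane
first = rop_write(fb, zb, 0, 0, (1.0, 0.0, 0.0), 0.5, 0.3)   # half-transparent red, in front
second = rop_write(fb, zb, 0, 0, (0.0, 1.0, 0.0), 1.0, 0.8)  # green, behind: rejected
print(fb[0][0], zb[0][0])
```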

Once we have it, we send it through the RAMDAC(s) (a RAMDAC translates "a frame" into something the monitor can understand) to the monitor(s).
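The RAMDAC step boils down to a small lookup table (the "RAM" part, used for palette or gamma correction) followed by a digital-to-analogue conversion per colour channel. This Python sketch assumes 8 bits per channel, a linear DAC and the 0.7 V full-scale level of analogue VGA RGB signals; digital outputs such as DVI-D or HDMI skip the DAC entirely.

```python
def ramdac(pixel_rgb8, lut=None, full_scale_volts=0.7):
    """Convert one 8-bit-per-channel pixel to three analogue voltages.

    lut: optional 256-entry table applied first (identity if omitted).
    """
    lut = lut or list(range(256))  # identity table: no gamma/colour correction
    return tuple(round(lut[c] / 255 * full_scale_volts, 4) for c in pixel_rgb8)

print(ramdac((255, 128, 0)))
```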

I didn't mention culling and clipping, since they take place in almost every stage (culling/clipping reduces the workload for that stage and those after it).
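One common culling step is back-face culling: after transformation, a triangle whose screen-space winding is reversed is facing away from the camera and can be dropped before rasterisation. This minimal Python sketch assumes y-up coordinates and that counter-clockwise means front-facing; real APIs let you choose which winding counts as the front.

```python
def signed_area(v0, v1, v2):
    """Twice the signed area of a 2D triangle; > 0 means counter-clockwise."""
    return (v1[0] - v0[0]) * (v2[1] - v0[1]) - (v2[0] - v0[0]) * (v1[1] - v0[1])

def cull_backfaces(triangles):
    """Keep only front-facing (counter-clockwise) triangles."""
    return [t for t in triangles if signed_area(*t) > 0]

tris = [((0, 0), (1, 0), (0, 1)),  # CCW: front-facing, kept
        ((0, 0), (0, 1), (1, 0))]  # CW: back-facing, culled
print(len(cull_backfaces(tris)))
```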

In DX11, after the Vertex Shader and before the Geometry Shader, we have a Tessellation stage (consisting of the Hull Shader, the Tessellator unit and the Domain Shader). It can create enormous amounts of new geometry REALLY FAST (it's based on a fixed-function setup, like T&L in days past or fast video decoding today).

The Hull Shader takes care of controlling the other stages, the Tessellator... tessellates, and the Domain Shader combines the data gathered from all previous stages (including the Vertex Shader) and prepares it for the Geometry Shader.
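The division of labour between the three tessellation parts can be sketched as three small functions: the hull shader picks a tessellation factor, the fixed-function tessellator generates points in an abstract triangle domain (barycentric coordinates) without knowing anything about the patch, and the domain shader turns each domain point back into a real vertex. This is a simplified Python sketch: the distance-based factor rule and the clamp to 8 are assumptions (DX11 allows factors up to 64), and a real tessellator also outputs the connecting triangles, not just points.

```python
def hull_shader(patch, distance_to_camera):
    """Hypothetical HS: pick a tessellation factor (closer patches get more detail)."""
    return max(1, min(8, int(16 / distance_to_camera)))

def tessellator(factor):
    """Fixed-function step: barycentric (u, v, w) points for a triangle domain
    subdivided `factor` times along each edge. It never sees the patch itself."""
    points = []
    for i in range(factor + 1):
        for j in range(factor + 1 - i):
            u, v = i / factor, j / factor
            points.append((u, v, 1.0 - u - v))
    return points

def domain_shader(patch, uvw):
    """Hypothetical DS: map a domain point to a position on the patch."""
    a, b, c = patch
    u, v, w = uvw
    return tuple(u * pa + v * pb + w * pc for pa, pb, pc in zip(a, b, c))

patch = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
factor = hull_shader(patch, distance_to_camera=4.0)
vertices = [domain_shader(patch, p) for p in tessellator(factor)]
print(factor, len(vertices))
```

In practice the domain shader would also apply something like a displacement map here, which is where the extra detail actually comes from.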

VS, HS, DS, GS and PS are all handled by CUDA Cores (a new marketing term, since SPs can't handle the HS and DS stages, i.e. GT200 is not DX11-capable).

CUDA Cores (CCs) are present in Fermi-, Kepler- and Maxwell-based cards.

CCs have the same limitations as SPs had (they work in groups, and CCs in the same group can't handle different tasks).

The other stages are pretty much the same as in DX10 (although they have more capabilities than the old versions).

From the GPU's perspective, it's worth noting that all DX10 GPUs have only one Rasteriser (the "thing" that converts 3D to 2D). DX11-based ones can have 4 or even 5 of them, working in parallel.
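One way to keep several rasterisers busy at once is to give each of them ownership of a set of screen-space tiles, so a triangle is sent to every rasteriser whose tiles its bounding box touches. This Python sketch only illustrates that idea: the 16-pixel tiles, the diagonal interleaving and the function names are assumptions, not any particular GPU's scheme.

```python
def owner(tile_x, tile_y, num_rasterisers):
    """Interleave screen tiles across rasterisers (a hypothetical mapping)."""
    return (tile_x + tile_y) % num_rasterisers

def tiles_touched(bbox, tile_size):
    """Tiles overlapped by a triangle's inclusive pixel bounding box (x0, y0, x1, y1)."""
    x0, y0, x1, y1 = bbox
    return [(tx, ty)
            for ty in range(y0 // tile_size, y1 // tile_size + 1)
            for tx in range(x0 // tile_size, x1 // tile_size + 1)]

def distribute(bboxes, tile_size=16, num_rasterisers=4):
    """Return, per rasteriser, the indices of the triangles it must work on."""
    work = {r: set() for r in range(num_rasterisers)}
    for i, bbox in enumerate(bboxes):
        for tx, ty in tiles_touched(bbox, tile_size):
            work[owner(tx, ty, num_rasterisers)].add(i)
    return work

# Triangle 0 fits in one tile; triangle 1 spans 2x2 tiles.
work = distribute([(0, 0, 10, 10), (20, 20, 40, 40)])
print({r: sorted(ids) for r, ids in work.items()})
```

Each rasteriser then only generates pixels inside its own tiles, so large triangles get split across units while small ones cost only one.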

OK, that's it (I THINK I got it right).

I don't need ALU- or register-level detail here; I just want to know whether my thinking (and my understanding of it all) is correct.

PS. One other thing:

I know Immediate/Direct Rendering (DR) differs from Deferred Rendering in the lighting stage: in DR it takes place early (i.e. in the Vertex Shader).
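Lighting computed per vertex and lighting computed per pixel generally give different images, which is one reason the stage where lighting happens matters. This minimal Python sketch compares the two for a single pixel halfway along an edge whose two end normals point away from each other, using a simple Lambertian term and a light shining straight on; the values are chosen only for illustration.

```python
import math

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def lambert(normal, light_dir):
    """Diffuse term max(0, N.L) with both vectors normalised."""
    return max(0.0, sum(n * l for n, l in zip(normalize(normal), normalize(light_dir))))

def lerp(a, b, t):
    return tuple((1 - t) * x + t * y for x, y in zip(a, b))

n0, n1 = (-1.0, 0.0, 1.0), (1.0, 0.0, 1.0)  # normals at the two ends of an edge
light = (0.0, 0.0, 1.0)
t = 0.5                                     # the pixel halfway along the edge

# Per-vertex (in the vertex shader): light the two ends, then interpolate the result.
per_vertex = (1 - t) * lambert(n0, light) + t * lambert(n1, light)
# Per-pixel (in the pixel shader): interpolate the normal, then light it.
per_pixel = lambert(lerp(n0, n1, t), light)
print(round(per_vertex, 3), round(per_pixel, 3))
```

The per-vertex result misses the bright spot in the middle of the edge, which is why per-pixel lighting became the norm once pixel shaders were fast enough.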

But are there any other differences like this, or other things that make the GPU handle it differently?

Thanks for all responses.

I know it's a silly (and quite complex) question, but I want to see if I understand 3D rendering idea in context of GPU architecture, right

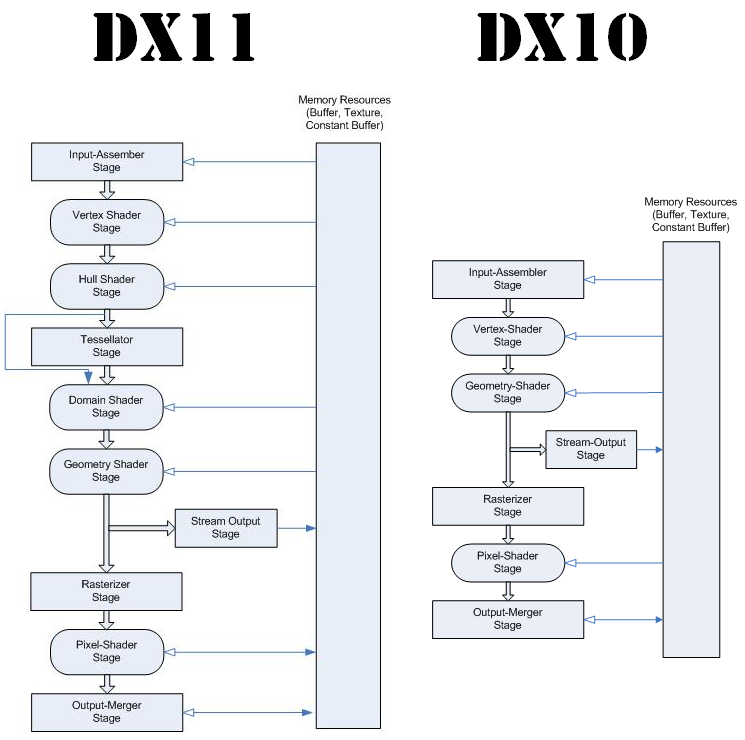

Simple way to put it - I want to join these :

With those :

I do know (more-less) how Deffered Rendering works (and since it's quite common in games these days I want to base on it).

SO, here's how I think a modern GPUs work :

DX10 (using/handled in GT200 aka. GTX 2xx) :

We start at VS (Vertex Shader), that works on points in space (delivered by CPU through PCI-e).

VS will transform them (move, rotate, etc.) any way we want and combine them together to form vertices and primitives/objects.

Vertex Shader stage uses "Streaming Procesors" (SPs) in GT200 combined with cache and VRAM to move data around.

Next stage, Geometry Shader (GS), does similar thing to VS, but it does it on larger scale (entire vertices, objects/primitives) + it can create new vertices (VS can't make new geometry).

Like VS, GS is handled by SPs.

After we complete all transformations, we rasterise the image (ie. change 3D space of vertices, to 2D space of pixels - triangles in, pixels out) using Rasteriser in GPU.

Next, we have to "put wallpaper" on our new pixels, to figure out what colour they should have now (using GPU TMU's), and after that we pass them on to Pixel Shader (PS) that can do interesting stuff to them and take care of lighting entire scene (Deffered Rendering "thing"). PS is again done by SP unit's in GPU.

Important thing to note is that SP's operate in blocks and if they are in the same SM, they can't do two different things (like PS and VS) at the same time.

After all that, all what's left is to blend our pixels into something more useful than some numbers, so we input them to ROP unit(s) (which give us a image frame as ouput.

Once we have it, we send it through RAMDAC(s) (RAMDAC translates "a frame", into something monitor can understand), to monitor(s).

I didn't mentioned culling and cutting since it takes place in almost every stage (culling/cutting reduces workload for this stage, and those after it).

In DX11, before Geometry Shader and after Vertex Shader we have a Tessalation stage (that consists of Hull Shader, Tesselator unit and Domain Shader). It can create Enourmous amounts of new geometry REALLY FAST (it's based on fixed function setup, like T&L in days pased or fast Video Decoding today

Hull Shader takes care of controling other stages, Tesselator... tesselates, and Domain Shader combines data gathered from all previous stages (including Vertex Shader) and prepares it for Geometry Shader.

VS, HS, DS, GS and PS, are all handled by Cuda Core's (new marketing term, since SP's can't handle HS and DS stages ie. GT200 is not capable of DX11).

Cuda Core's (CC's) are present in Fermi, Kepler and Maxwell based cards.

CC's have the same limitations as SP's had (working in groups, the CC's of the same group can't hadle different tasks).

Other stages are pretty much the same as the ones in DX10 (altho they do have more capabilities than old versions).

From GPU perspective It's worth noting that all DX10 GPU's have only one Rasteriser ("thing" that changes 3D to 2D). DX11 based ones can have 4 or even 5 of these, working in parallel.

OK, that's it (I THINK I got it right).

I don't need ALU or Register detail level here - I just want to know if my thinking (and understanding of it all), is correct.

PS. Also one other thing :

I know Immediate/Direct Rendering (DR) differs from Deffered Rendering in Lighting stage - in DR it takes place early (ie. in Vertex Shader).

But are there any other this type of things or other stuff, that make GPU handled it differently ?

Thank's for all responds.