Jawed

Legend

Over here:

http://www.beyond3d.com/forum/showpost.php?p=830639&postcount=16

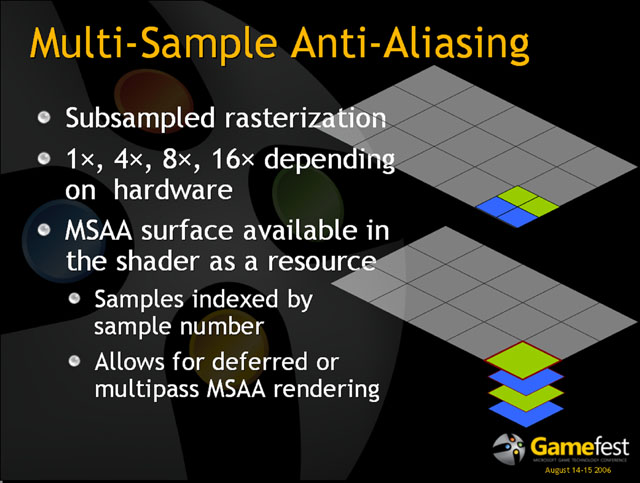

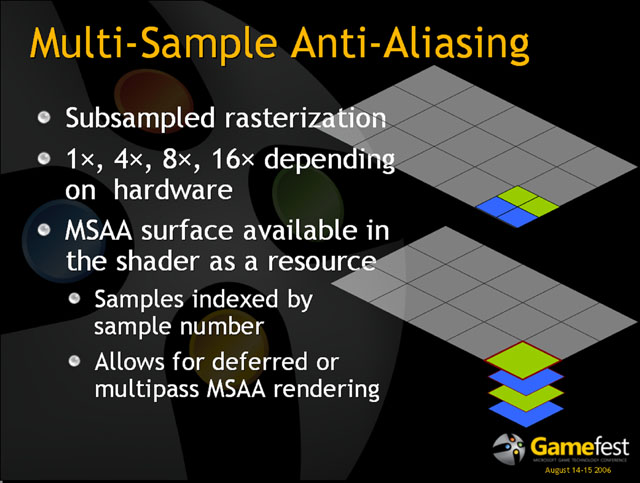

the subject of MSAA with deferred shading has come up.

So, how would D3D10 allow MSAA to be implemented in a deferred shading engine?

How would it perform, and is it likely to be viable any time soon?

Would MSAA in such games be a compelling reason to upgrade to Vista?

Or is deferred shading destined to be nothing more than a medium-term fad, a bit like stencil shadowing?

Jawed
