You'd still want more resolution for certain objects than others. You could use deep shadow maps, but with a non-linear projection their accuracy goes out the window the further away your light is from the object, because the renderer assumes that the further away something is, the less important it is.

When I say "tools" I mean the entire offline rendering pipeline. The whole "want more resolution for certain objects" issue shouldn't even be relevant, because modern shadow mapping techniques can pretty easily attain subpixel resolution; that's my point. If the ones in your renderer/tools don't attain that, there's a problem.
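To make "subpixel resolution" concrete, here is a rough back-of-the-envelope sketch. It does not implement any particular shadow mapping technique; it only compares the world-space width of one shadow map texel against the width of one screen pixel at the same surface point. The function names and parameters are made up for illustration, and it assumes plain perspective projections for both light and camera and ignores surface orientation, which matters a lot in practice.

```python
import math

def footprint(distance, fov_deg, resolution):
    """World-space width covered by one texel (or pixel) at `distance`
    from a perspective projection with the given field of view.
    Hypothetical helper; ignores surface slope relative to the view."""
    return 2.0 * distance * math.tan(math.radians(fov_deg) / 2.0) / resolution

def texel_to_pixel_ratio(light_dist, light_fov_deg, shadow_res,
                         cam_dist, cam_fov_deg, screen_res):
    """Ratio of shadow texel width to screen pixel width at one point.
    A value below 1.0 means the shadow map is subpixel at that point."""
    texel = footprint(light_dist, light_fov_deg, shadow_res)
    pixel = footprint(cam_dist, cam_fov_deg, screen_res)
    return texel / pixel

# Example: a point 10 units from both light and camera, 90 degree frusta,
# a 2048 shadow map against a 1024-pixel-wide view.
ratio = texel_to_pixel_ratio(10.0, 90.0, 2048, 10.0, 90.0, 1024)
print("texel/pixel ratio:", ratio)
```

A ratio above 1.0 somewhere in the view is the case where you would want to redistribute shadow map resolution rather than just raise it everywhere.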

2k shadow maps won't eliminate aliasing in volumes or assets such as feather/fur (which very few game studios even try implementing).

That's the geometry itself aliasing, not the shadow map projection. "Should be using LOD" is the high level answer, but in practice you can special-case these situations and brute force them separately offline, since they really are not the same as the rest of the rendering workload.

The more you want to approach realism, the more you will bias towards brute force programming IMO.

When I say "brute force" here, I mean as an alternative to a better algorithm that produces the same results, not as an alternative to approximations. Certainly I agree that there's less reason to make approximations offline than real-time, and indeed sometimes real-time research does migrate into offline, but I'd like to see bidirectional use more often than happens currently. It's possible the economic constraints in the two spaces are just too different though.

How would you implement area lights in real-time?

You need multi-layered shadow maps at the very least, of course. But let's not pretend area shadows are going to be cheap enough for real-time in ray tracers either! With area lights it's definitely a toss-up over which is ultimately going to be better if/when they commonly come to real-time rendering, but we're quite a ways off there.

How about transparent shadows from a light refracting in something like ice?

That's caustics, and there are rasterization-based ways to do it (see Chris Wyman's work), but indeed you end up with fairly incoherent rays depending on the object. Same situation as above really... no one is saying rasterization can solve all the same things that ray tracing can, just that it does just fine with conventional hard shadows. Thus ray traced shadows only really get interesting when you're using a *lot* of rays, i.e. still not practical for a long time yet.

I have yet to see multiple shadow intensities that produce different soft shadows based on the light falloff, penumbra, and umbra settings.

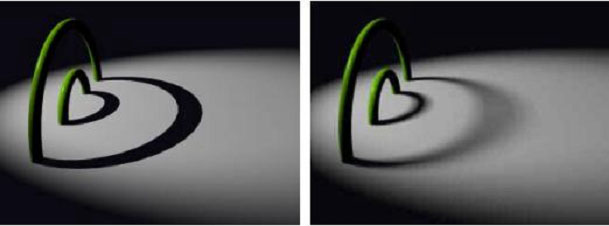

There are a few games that use filter-based plausible approximations, but fundamentally you don't have enough data to do it artifact-free in a single-layer shadow map of course. Same problem as depth of field, with an indirection thrown in to make it even more fun.
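As one example of what a filter-based approximation can look like, here is a minimal sketch of the penumbra estimate used in percentage-closer soft shadows (PCSS). I'm not claiming this is what any particular game ships; it's just a well-known instance of the idea. The function names are made up for illustration, and depths are assumed to be linear distances from the light.

```python
def average_blocker_depth(depth_samples, receiver_depth):
    """Average the shadow map depths that are closer to the light than
    the receiver. Returns None when nothing blocks the receiver.
    Hypothetical helper; depths are linear distances from the light."""
    blockers = [d for d in depth_samples if d < receiver_depth]
    if not blockers:
        return None
    return sum(blockers) / len(blockers)

def penumbra_width(receiver_depth, blocker_depth, light_size):
    """Similar-triangles penumbra estimate:
    (d_receiver - d_blocker) * light_size / d_blocker."""
    return (receiver_depth - blocker_depth) * light_size / blocker_depth

# Example: shadow map samples around one receiver at depth 8.
samples = [2.0, 4.0, 10.0]
blocker = average_blocker_depth(samples, receiver_depth=8.0)
if blocker is not None:
    # The result would be used to scale the shadow filter radius.
    print("estimated penumbra width:", penumbra_width(8.0, blocker, light_size=1.0))
```

Note that the two blockers at depths 2 and 4 get blended into a single average depth. That collapse is exactly the missing-data problem: a single-layer map can't tell you what sits behind the first occluder, so the estimate is plausible rather than correct.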

I agree though that while it seems the offline render world is in the stone age, we really have time to brute force things. That's why they look so good.

No doubt, and I'm not criticising the results or the method. I'm just explaining why your constraints and conclusions are not necessarily right for real-time.