Fair enough. I guess I should wait for actual evidence before accusing them of purposely slowing down ATI cards. All we have for now is a slowdown without any explanation.

Big Bertha EA said:

ChrisW said: It seems obvious to me that PS 3.0 was not going to be enough to match ATI's PS 2.0 speed, so they had to find additional ways to force ATI cards to run even slower.

Any proof to this accusation?

Seems like a pretty significant claim that if proven true would be VERY difficult for Crytek to defend, dontcha think?

ATI & Nvidia Game Tampering??

- Thread starter Dimahnbloe

That's because "the rest of sound cards" (=Creative + compatibles) provided absolutely no help with 3D-localization whatsoever. EAX at the time was weakly glorified reverb. The occlusion/reflection model that A3D provided was vastly superior and actually provided a sonic representation of the 3D environment of the player. Owners of other cards weren't "screwed over", their cards simply couldn't achieve anything of the kind.

ChrisW said: Not only did he go out of his way to favor nVidia, he also screwed over the entire rest of the sound card industry in favor of one manufacturer...Aureal. He didn't give a flying crap about what card you owned, he was only going to support A3D and the rest of sound cards were forced to use plain stereo sound.

digitalwanderer said: Seconded. Speculation is all well and good, but no accusations should be levelled until the patch is out and we hear what they have to say.

And ATI.

1) I don't think it is at all reasonable to equate HL2 with anything that might be suspected for Far Cry and nVidia. The accusation of favoritism was made on the basis of a FUD campaign portraying the performance disparity Valve showed between NV3x and R3xx cards as being a lie. It wasn't...and this is shown by the program suspected of favoring nVidia to boot!

2) As far as Carmack/id, there was a (big) issue with beta Doom 3 benchmarks, but it comes with lots of other indications that show something else entirely (overwhelmingly so in my estimation, at least towards the actual game coding).

...

3) Has everyone else forgotten about nVidia's on-the-rail driver enhancements when considering who is actually behind anomalous Far Cry performance, or were the nVidia supplied demos to websites not on-the-rail and I missed it?

What ATi has to say about the seeming slowdown from the 1.2 patch do you mean? Are you implying that it's something on their end like an optimization that the 1.2 patch broke? :|

DaveBaumann said:

digitalwanderer said: Seconded. Speculation is all well and good, but no accusations should be levelled until the patch is out and we hear what they have to say.

And ATI.

hothardware thinks that patch 1.2 is pretty good for X800, no drops compared to 1.1.

1-2 FPS more actually.

http://www.hothardware.com/viewarticle.cfm?articleid=550

I can't say i've noticed any difference either with my X800 pro (CAT 4.6). Who reported a 15 fps drop btw?

That never stopped about all other games based on the Quake3 engine from having EAX support. Are there any other Quake 3 engine games out there that don't have EAX support?

Entropy said: That's because "the rest of sound cards" (=Creative + compatibles) provided absolutely no help with 3D-localization whatsoever. EAX at the time was weakly glorified reverb. The occlusion/reflection model that A3D provided was vastly superior and actually provided a sonic representation of the 3D environment of the player. Owners of other cards weren't "screwed over", their cards simply couldn't achieve anything of the kind.

ChrisW said: Not only did he go out of his way to favor nVidia, he also screwed over the entire rest of the sound card industry in favor of one manufacturer...Aureal. He didn't give a flying crap about what card you owned, he was only going to support A3D and the rest of sound cards were forced to use plain stereo sound.

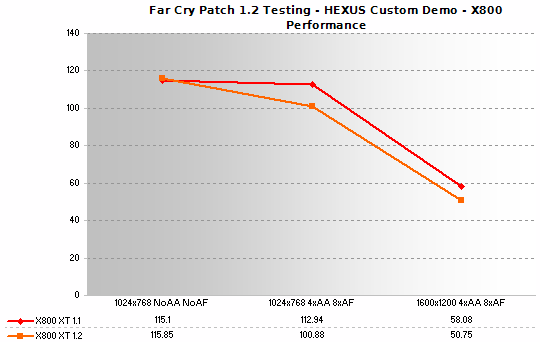

This is what I'm talking about:

IbaneZ said: hothardware thinks that patch 1.2 is pretty good for X800, no drops compared to 1.1.

1-2 FPS more actually.

http://www.hothardware.com/viewarticle.cfm?articleid=550

I can't say i've noticed any difference either with my X800 pro (CAT 4.6). Who reported a 15 fps drop btw?

It looks like ATI cards are losing about 10 fps with the new path. Perhaps they screwed up the benchmarks? Anyway, if this is true, I'm sure ATI users will want their 10 fps back or at least an explanation as to what happened to them.

Just to play the devil's advocate for a moment, lemme throw on me green-colored lenses for a second....

Did you notice how there is no change in fps without AA/AF applied? The loss only occurs when there is AA/AF applied, mayhaps something in this new patch breaks something in an ATi AA/AF optimization? Mebbe one we do NOT know about yet?

The Dig removes his green-coloured lenses. (And he knows he spelled "color/colour" two different ways, he likes to keep people guessing.)

ChrisW said: It looks like ATI cards are losing about 10 fps with the new path. Perhaps they screwed up the benchmarks? Anyway, if this is true, I'm sure ATI users will want their 10 fps back or at least an explanation as to what happened to them.

There was some confusion amongst reviewers about what AA mode was being applied by different flavours of cards.

Going from 2x to 4x, or from no AA to 4x, could be one big explanation of why the results seem disparate.

We can't trust reviewers anymore..

ChrisW said: That never stopped about all other games based on the Quake3 engine from having EAX support. Are there any other Quake 3 engine games out there that don't have EAX support?

Entropy said: That's because "the rest of sound cards" (=Creative + compatibles) provided absolutely no help with 3D-localization whatsoever. EAX at the time was weakly glorified reverb. The occlusion/reflection model that A3D provided was vastly superior and actually provided a sonic representation of the 3D environment of the player. Owners of other cards weren't "screwed over", their cards simply couldn't achieve anything of the kind.

ChrisW said: Not only did he go out of his way to favor nVidia, he also screwed over the entire rest of the sound card industry in favor of one manufacturer...Aureal. He didn't give a flying crap about what card you owned, he was only going to support A3D and the rest of sound cards were forced to use plain stereo sound.

Hmmm, why support a technology that isn't worth much? Carmack was trying to help get real 3D audio out. I really miss A3D, and unfortunately since A3D died, EAX has become and stayed absolute garbage. Creative has no interest in making a real 3D audio card. I was hoping Nvidia was going to with Soundstorm, but they seem to have decided to fade that. Maybe, hopefully, with PCI-E they will make another one to take on Creative directly (it's nice to have that DICE chip there). Basically the only reason you want to work with a Creative card is if you want to have special effects done to the audio, which Carmack had no interest in (he was interested, though, in having real 3D audio). I wonder what Trent Reznor would have done with those audio effects if given the option. Notice how, for Doom 3, as a result of Creative, Carmack has now had to make an entire 3D audio sound engine on the CPU. It would have been much more ideal if he could have had the sound card doing that. Oh, and I might as well give a link about the engine doing the 3D sounds for Doom 3.

"Touching on what PC Gamer Magazine had written -- The Doom 3 physics engine will generate the sound effects. It will do this by calculating the sound velocity, where it occurs and what things around it will affect how this sound is produced on a particular object this is happening to. Things like energy absorption, reflection and mixing is taken into account through sound occlusion. For example a bullet ricocheting off a wall would sound different based on the type of wall, whether that wall is in a hallway, closed room and the shape of the room etc. With all these complex computations going on, one would think this game would require some hefty processing power. At least this writer firmly believes Doom 3 Surround is really going to bring the gaming experience to life like never before."

http://www.planetdoom.com/doom3/articles/d3sound.shtml

Heh, why not rose-colored lenses.

digitalwanderer said: Just to play the devil's advocate for a moment, lemme throw on me green-colored lenses for a second....

Bouncing Zabaglione Bros.

Legend

Cryect said: I was hoping Nvidia was going to with Soundstorm, but they seem to have decided to fade that. Maybe, hopefully, with PCI-E they will make another one to take on Creative directly (it's nice to have that DICE chip there).

Nvidia are bringing Soundstorm back with Nforce 4, Via have Envy, and Intel/MS have a new sound system standard in progress. There's always alternatives to the awful Creative products.

Bouncing Zabaglione Bros. said: Nvidia are bringing Soundstorm back with Nforce 4, Via have Envy, and Intel/MS have a new sound system standard in progress. There's always alternatives to the awful Creative products.

I hope that's true. I just did a quick check and the news seems to come from The Inquirer (which I do find to be somewhat trustworthy ^_^). But so far, from within Nvidia, TheInq is wrong.

From Seawolf over at nforcershq, who seems to have contacts potentially at Nvidia.

"Annnnd here's NVIDIA:

Quote:

There may be some truth in there, but none of it has anything to do with audio. Makes me wonder how old this guy's data is.

So there is NO SOUNDSTORM 2 at the moment as I said..."

http://www.nforcershq.com/forum/viewtopic.php?t=49270

Envy is alright, and I'm waiting to decide on the new Intel one. Heh, I just really like being able to hook up to my receiver for audio by DD5.1 instead of analog.

I dunno, it just seemed to be the color for that position.

Cryect said: Heh, why not rose-colored lenses.

radar1200gs

Regular

IMO you don't need to ask crytek anything. You just need to ask ATi why they haven't supported SM3.0.

DaveBaumann said:

digitalwanderer said: Seconded. Speculation is all well and good, but no accusations should be levelled until the patch is out and we hear what they have to say.

And ATI.

I'm quite confident that crytek have done no wrong - they have simply written to the DX9 spec. Those cards that match the spec benefit, those that don't take a hit.

----------

www.nvnews.net/vbulletin/attachment.php?attachmentid=6733

Save_The_Nanosecond.ppt, slide 31 notes

Steer people away from flow control in ps3.0 because we expect it to hurt badly. (Also it’s the main extra feature on NV40 vs R420 so let’s discourage people from using it until R5xx shows up with decent performance...)

radar1200gs said: IMO you don't need to ask crytek anything. You just need to ask ATi why they haven't supported SM3.0.

Been there, done that, we know why. Let's move on, shall we?

radar1200gs said: I'm quite confident that crytek have done no wrong - they have simply written to the DX9 spec. Those cards that match the spec benefit, those that don't take a hit.

Hmmm??? So they enable a feature that shouldn't be doing a thing for the codepath that ATI cards are using, but because something changed that supposedly shouldn't affect ATI cards, they should have a speed loss? Is that what you are saying?

SM3 shouldn't affect the ATI cards at all is what people are saying. There shouldn't be a speed loss or a speed gain from the inclusion of SM3 code for cards that don't support it (assuming nothing else has changed, which supposedly it hadn't).

Edit: Just want to make sure you know I'm not arguing about whether ATI should or should not have supported SM3. I just want to know how a feature that supposedly isn't being used on a card will slow it down.

radar1200gs

Regular

My guess (and it is only a guess) is that Crytek rewrote the shaders for SM3.0 and lets HLSL do the fallback to SM2.0.

If ATi cards are having issues with the new shaders I'd suggest the fallback translation is where the problem lies.

It's already been noted in other threads that the MS HLSL compiler isn't particularly intelligent in these cases, ignoring optimizations that could be done and producing straight SM2.0 code.

That means ATi probably have to do some work on their HLSL optimizing compiler, to get the performance back to where it was.

According to Anand, the requirements they have will not fit into SM2.0's model. HLSL does not provide auto multipassing or anything like that; it's still up to the developer to tell HLSL what to do if it can't operate within a particular card's shader capabilities.

radar1200gs

Regular

Is the patch purely a SM3.0 patch, or does it fix other bugs in the game also?

If it is a required patch, there may be some cause for concern; if it is an optional SM3.0 showcase patch, you can avoid the slowdowns simply by not applying it.
