...with the Advanced - not Pro - upgrade.

Don't worry about that. Advanced and Pro are the same save for some command-line tools; Pro is meant for enterprise use.

DegustatoR

Legend

DavidGraham

Veteran

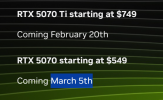

Apparently the 5070Ti is the same performance as the 4080 Super.

I've a hard time believing that given the relatively tiny jump from there to the 5080 but significant price increase. If true though I would very likely get one - mostly for the 16GB VRAM but the performance boost would be nice too.

You could *try* to get one.

I got a feeling these are gonna fly off the shelves like nothing we've seen yet.

DegustatoR

Legend

$750 to $1000 is a +33% price increase, but it's the same chip and the same VRAM and all, so the performance difference should be somewhat small, likely less than the difference in price.

One thing to ponder upon is that 5070 and 5070Ti prices are likely set with knowledge of where Navi 48 will be and they are the most likely reason why AMD has scrapped their RDNA4 launch at CES.

So a smaller perf gap can be a result of these cards actually having some competition.

arandomguy

Veteran

I'm guessing it's from the VideoCardz article - https://videocardz.com/newz/geforce...ender-benchmark-7-6-faster-than-4070-ti-super

But the "source" they use shows a 4070 ti super scoring higher.

With Geekbench in general I'm not sure how people are actually judging representative scores whenever they cite from the database.

As for the Blender results cited, they show the 5080 as 20% faster than the 5070 Ti. But the 4080 Super is also 20% faster than the 4070 Ti Super. This time around the 5070 Ti is $50 cheaper (at least by official MSRP), so the value differential is higher, but not dramatically so.
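The value comparison above can be checked with a few lines of arithmetic. This is a rough sketch, not a benchmark: the prices are the rounded MSRPs implied by the posts ($750 and $1000 for the 5070 Ti and 5080; $800 and $1000 for the 4070 Ti Super and 4080 Super are assumed from the "$50 cheaper" remark), the 1.20 performance ratio is the Blender figure quoted above, and the function names are made up for illustration.

```python
def price_premium(lower_price, higher_price):
    """Fractional price increase going from the cheaper to the pricier card."""
    return higher_price / lower_price - 1.0


def relative_value(lower_price, higher_price, perf_ratio):
    """Perf-per-dollar of the pricier card relative to the cheaper one.

    perf_ratio is pricier-card performance divided by cheaper-card performance.
    A result below 1.0 means the pricier card is the worse value.
    """
    return perf_ratio / (higher_price / lower_price)


# Assumed rounded MSRPs (USD) and the ~20% Blender gap cited in the thread.
blackwell = {"lower": 750, "higher": 1000, "perf_ratio": 1.20}  # 5070 Ti -> 5080
ada = {"lower": 800, "higher": 1000, "perf_ratio": 1.20}  # 4070 Ti Super -> 4080 Super

for name, gen in (("Blackwell", blackwell), ("Ada", ada)):
    premium = price_premium(gen["lower"], gen["higher"])
    value = relative_value(gen["lower"], gen["higher"], gen["perf_ratio"])
    print(f"{name}: +{premium:.0%} price, {value:.2f}x perf per dollar")
```

Under these assumptions the 80-class card delivers roughly 0.90x the perf per dollar of the 70 Ti-class card this generation, versus roughly 0.96x last generation, which is consistent with "higher, but not dramatically so".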

For context if it does follow the same product stack targets it's worth keeping in mind the 4080 Super was only just under 20% faster on aggregate than the 4070 Ti Super according to the newest TPU numbers at 4k - https://www.techpowerup.com/review/gpu-test-system-update-for-2025/2.html

including RT numbers - GPU Test System Update for 2025 (TechPowerUp)

NVIDIA definitely runs tensor and fp32 ops concurrently, especially now with their tensor cores busy almost 100% of the time (doing upscaling, frame generation, denoising, HDR post processing, and in the future neural rendering).

Recent NVIDIA generations have become increasingly better at mixing all 3 workloads (tensor + ray + fp32) concurrently. I read somewhere (I can't find the source now) that ray tracing + tensor are the most common concurrent ops, followed by ray tracing + fp32 and tensor + fp32.

Concurrent execution of CUDA and Tensor cores

Yes, that is what it means. I don’t know where you got that. If the compiler did not schedule tensor core instructions along with other instructions, what else would it be doing? NOP? Empty space? Maybe you are mixing up what the compiler does and what the warp scheduler does. The warp... (forums.developer.nvidia.com)

I need help understanding how concurrency of CUDA Cores and Tensor Cores works between Turing and Ampere/Ada?

There isn’t much difference between Turing, Ampere and Ada in this area. This question in various forms comes up from time to time, here is a recent thread. It’s also necessary to have a basic understanding of how instructions are issued and how work is scheduled in CUDA GPUs, unit 3 of this... (forums.developer.nvidia.com)

Informative threads. Confirms my understanding of how instruction issue works on Nvidia.

It's interesting that Nvidia never talks about the uniform math pipeline while AMD marketing makes a big deal about the scalar pipe. Presumably they perform similar functions.

Will we see a future where high end gaming PCs have to be connected to 240V outlets like you use for your dryer? A PC with a 5090 and 14900K could already use half the continuous capacity (80%) of a 120V 20A breaker.

Nah, running those lines is actually a lot more expensive than regular cables, and presumably you’d need to have a bunch so people could plug things in anywhere they wanted. Also a 20A outlet can run 1800W continuously (80% of max load).

And if somehow 1900W (20A at 80% derate for code is 16A at 120V nominal, which is ~1920W) isn't enough, then the same 20A wiring for US code should also support the 6-20 receptacle, which is two 20A hots and a ground rather than a single 20A hot, a neutral and a ground. It would take up one additional space in your breaker box, but would swap the 5-20 receptacle you have today for a 6-20 receptacle, which would provide double the voltage and thus double the available wattage. If/when we get to the point where 3800W continuous isn't enough for your favorite PC gaming session, well, then we have a larger problem! Although, 1800W as a ceiling isn't as far off as some might think.

The real challenge is most outlets used for PC gaming aren't a single receptacle on a single breaker. As an example, all the outlets within my office connect to a single 20A breaker, and my office includes a Bambu X1C 3D printer, another bigass Fedora Linux PC with its own 27" Dell Ultrasharp monitor, a small drinks fridge, and two LED lamps. Today, the office breaker regularly sees ~1100W continuous load with "spikes" to 1300W with only my gaming rig and my Linux rig both folding 24/7. When I crank up the 3D printer, the circuit will absolutely get to 1500W sustained for an hour or two or more. And when the beer fridge compressor kicks on? Another 50-60W depending. Also, while that Dell Ultrasharp is awake, it draws another ~100W just by itself (it's an old model).

Yes, the room stays nice and warm. And I'm within a few hundred watts of looking at dropping a 240V outlet into my own office without even talking about a new video card.
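For anyone who wants to redo the breaker math from this post, here is a minimal sketch. It assumes the 80% continuous-load derate and the nominal 120V/240V figures quoted above, plus the ~1500W sustained office load mentioned in the post; the function names are hypothetical, and this is back-of-the-envelope arithmetic, not electrical advice.

```python
def continuous_watts(breaker_amps, volts, derate=0.8):
    """Continuous capacity of a circuit after applying the derate factor."""
    return breaker_amps * derate * volts


def headroom_watts(breaker_amps, volts, sustained_load_watts, derate=0.8):
    """Continuous capacity left over once the existing sustained load is subtracted."""
    return continuous_watts(breaker_amps, volts, derate) - sustained_load_watts


cap_5_20 = continuous_watts(20, 120)  # 20A breaker, 120V (5-20 receptacle)
cap_6_20 = continuous_watts(20, 240)  # 20A breaker, 240V (6-20 receptacle)
left_over = headroom_watts(20, 120, 1500)  # office circuit with the 3D printer running

print(f"5-20 continuous capacity: {cap_5_20:.0f} W")
print(f"6-20 continuous capacity: {cap_6_20:.0f} W")
print(f"Headroom at 1500 W sustained: {left_over:.0f} W")
```

With those inputs the 120V circuit tops out at 1920W continuous and the 240V one at 3840W, leaving about 420W of headroom at a 1500W sustained load, which matches the "within a few hundred watts" remark.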

Faith Ekstrand (@gfxstrand@mastodon.gamedev.place)

Next up: Bindless UBOs. On NVIDIA, bindless UBOs use a 64-bit descriptor with 40 bits of base address and 14 bits of size / 4. Those can be referenced directly from ALU instructions and should get nearly the same shader perf as bound cbufs. The real trick here, though, is that they require the...

Hugo Devillers (@gob@mastodon.gamedev.place)

@gfxstrand This reads like hell. What the hell was nvidia smoking? Is this stuff only meant to be used in a prologue and/or maybe even vestigial ? The whole notion of "uniformity" seems like it should be a lost cause once you have hardware that may "spontaneously" diverge and reconverge.

According to an open source driver developer who writes drivers for Nvidia HW, their UGPRs have really complex rules and stringent restrictions for usage ...

I assume it explains why their hardware is unable to optimize the divergent indexing of constant buffers (cbuffer{float4} load linear/random) in this perftest: divergent resource access breaks the "uniform control flow" condition that must hold to make use of UGPRs. They speculate that it's mainly designed for address calculations at the start of a program ...

Flappy Pannus

Veteran

DegustatoR

Legend

Why would they make anything on an AD102 when it's EOL since November and there's GB202 in production?

They might be obligated due to datacenter / prosumer stuff to keep sufficient supply available for quite some time longer though? If there is a 96GB version it's not GeForce for sure.

Good point. I know Micron talked about creating 32 Gbit GDDR6 modules, but I can't find anywhere suggesting they ever did or they have any for sale. The spec does allow for such a capacity...

raytracingfan

Newcomer

Every article on the high VRAM 4090 I can find implies it's a Chinese factory modification, not an official Nvidia SKU.

Oh yeah 100% it's pure aftermarket. They have to use a different PCB because the "stock" 4090 card doesn't have a spot for clamshell-mounted DRAM chips. The rumored 4090-96GB would be the same design but ostensibly with 32Gbit chips (instead of the 16Gbit ones available today.) The trick is, 32 Gbit chips don't seem to exist...

The discussion presupposes that it's possible to fit 96 GB on an AD102, which it just isn't. 12 memory channels, two DRAMs per channel, 2 GB per DRAM = 48 GB.

A GB202 with 96 GB makes sense and is almost certainly gonna be the RTX 6000 Blackwell

Yeah pretty obvious, 3GB Modules + 512-bit bus = 48GB/96GB. That said I expect RTX 60 GeForce to use 3GB modules across the board (maybe 6090 has 42GB and a 6090 Ti with 48GB? And hopefully RDNA 5/UDNA Gen 1 has a top end option as well).
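The capacity arithmetic in this exchange can be written down as a small helper. A minimal sketch, assuming one 32-bit interface per DRAM package (so a 384-bit AD102 has the 12 channels mentioned above and a 512-bit bus has 16) and that clamshell mounting doubles the package count; `vram_capacity_gb` and its parameters are hypothetical names, not from any vendor tool.

```python
def vram_capacity_gb(bus_width_bits, module_gb, clamshell=False, channel_width_bits=32):
    """Total VRAM in GB for a given bus width and per-package capacity.

    Assumes one DRAM package per 32-bit channel, or two per channel
    when the board uses clamshell mounting.
    """
    channels = bus_width_bits // channel_width_bits
    packages = channels * (2 if clamshell else 1)
    return packages * module_gb


# AD102: 384-bit bus -> 12 channels.
print(vram_capacity_gb(384, 2, clamshell=True))  # 16 Gbit (2 GB) packages, clamshell
print(vram_capacity_gb(384, 4, clamshell=True))  # would need 32 Gbit (4 GB) packages

# 512-bit bus with 3 GB packages, single-sided and clamshell.
print(vram_capacity_gb(512, 3))
print(vram_capacity_gb(512, 3, clamshell=True))
```

Under these assumptions a 384-bit clamshell board reaches 48 GB with 2 GB packages and would only reach 96 GB with 4 GB packages, while a 512-bit bus with 3 GB packages gives 48 GB single-sided and 96 GB in clamshell.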

Following on that, I don't expect 32 Gbit/4GB modules to appear until like RTX 70, UDNA Gen 2/RDNA 6 and maybe Xe4 dGPU if that's 2028.