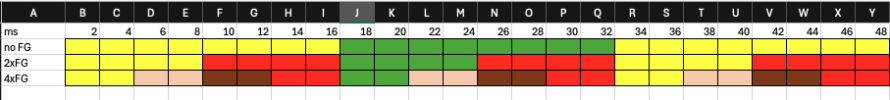

@Cappuccino Frame gen is both smoothing and motion blur reduction.

I think maybe it’s time that reviewers define performance. I guess the standard they look for is something like average smoothness, frame pacing, latency, power consumption and motion blur, all controlled for image quality, which includes in-game settings, resolution and upscaling.

That’s the best I can come up with to articulate the expectation so far. So if you improve some of the performance metrics without regressing the others, you have increased overall performance. You can lower image quality to increase performance, but when comparing performance, image quality should be very close to equal.
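The "improve some metrics without regressing the others" rule is what's usually called Pareto dominance. Here's a minimal Python sketch of it, assuming every metric is one where lower is better and that image quality has already been matched between the two configurations being compared. The `Measurement` fields and the numbers in the usage lines are hypothetical placeholders, not real benchmark results.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class Measurement:
    """Hypothetical per-configuration metrics; lower is better for every field.
    Image quality is assumed to be held equal and is not a field here."""
    avg_frame_time_ms: float  # average smoothness (also a rough motion blur proxy)
    frame_pacing_ms: float    # frame pacing; how it is computed is an assumption
    latency_ms: float         # end-to-end input latency
    power_w: float            # power consumption


def dominates(a: Measurement, b: Measurement) -> bool:
    """True if a is no worse than b on every metric and strictly better on at least one."""
    pairs = [(getattr(a, f.name), getattr(b, f.name)) for f in fields(Measurement)]
    no_worse = all(x <= y for x, y in pairs)
    strictly_better = any(x < y for x, y in pairs)
    return no_worse and strictly_better


# Hypothetical numbers: capped trades a bit of frame time for latency and pacing.
capped = Measurement(avg_frame_time_ms=7.2, frame_pacing_ms=0.4, latency_ms=18.0, power_w=250.0)
uncapped = Measurement(avg_frame_time_ms=6.9, frame_pacing_ms=1.1, latency_ms=24.0, power_w=320.0)
print(dominates(capped, uncapped))  # False
print(dominates(uncapped, capped))  # False
```

When neither configuration dominates the other, this rule alone can't call one "more performance"; you'd need some weighting of the metrics, which is exactly the tricky part.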

I think the point of defining performance this way is that it doesn’t rely on frame rate. We now have frame gen, which increases frame rate but causes a latency regression, so a definition based on frame rate alone would get it wrong. It would be better to measure latency and all of the other performance metrics directly. In some cases that will still mean frame time, as with average smoothness and motion blur.
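As a sketch of measuring things directly rather than reading off a single FPS number, the Python below derives average frame time, average FPS and a simple pacing figure from a list of per-frame times. The pacing measure (mean absolute change between consecutive frame times) is one assumed choice among several, and `frame_time_metrics` is a hypothetical helper. Latency can't be recovered from frame times at all and would need its own measurement.

```python
from statistics import mean


def frame_time_metrics(frame_times_ms: list[float]) -> dict[str, float]:
    """Summarize a frame-time trace (milliseconds per frame)."""
    if len(frame_times_ms) < 2:
        raise ValueError("need at least two frame times")
    avg = mean(frame_times_ms)
    # Assumed pacing metric: how much each frame time differs from the previous one.
    deltas = [abs(b - a) for a, b in zip(frame_times_ms, frame_times_ms[1:])]
    return {
        "avg_frame_time_ms": avg,
        "avg_fps": 1000.0 / avg,
        "pacing_ms": mean(deltas),
    }


# Two made-up traces with the same average FPS but very different pacing.
print(frame_time_metrics([10.0, 10.0, 10.0, 10.0]))  # pacing_ms 0.0, avg_fps 100.0
print(frame_time_metrics([5.0, 15.0, 5.0, 15.0]))    # pacing_ms 10.0, avg_fps 100.0
```

Both traces report 100 FPS, which is why an average alone can hide a pacing regression.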

I do think it’s still tricky. If I run uncapped and my GPU hits 100%, that decreases frame times but increases latency. If I frame cap, frame times increase slightly but latency drops and frame pacing improves. Is that better or worse performance? Same with Reflex: lower latency, but slightly higher average frame time and worse frame pacing.