Frame generation won't help in slowdown of the game engine...

Lossless Scaling. FGx2-x20 & upscaling on ANY GPU! Miniguide page 11

- Thread starter Cyan

Tested 2D games like Contra Anniversary Collection on Steam.

On my 165Hz monitor, it was tremendous.

I've managed to multiply the framerate of Contra (1987 arcade version) up to x11 while letting the game run the typical arcade gameplay all arcade machines had, without artifacts.

It's night and day: the high framerate means that you don't see jaggies on the edges of the sprites, and it looks much smoother. The so-called motion clarity.

From x11 onwards some artifacts appeared because the monitor refresh rate can't keep up with the game and you see ghosting on the characters in certain animations.

On the TV at 4K 60fps, it's easy to see artifacts because there's little to multiply. The maximum without too many problems is FGx4. In FGx4 the game runs much smoother and has almost no artifacts.

Those extra frames generate some antialiasing effect, but it's not as pronounced as on the 165Hz monitor, which looks much smoother and also provides a greater antialiasing effect.

Mind blowing, now with up to Frame Generation x20.

Lossless Scaling - LSFG 3 - Steam News

Happy anniversary, LSFG!

LSFG 3 is built on a new, efficient architecture that introduces significant improvements in quality, performance, and latency.

Key improvements include:

- Better quality:

Reduced flickering and border artifacts, with noticeable enhancements in motion clarity and overall smoothness.

- Lower GPU load:

A 40% reduction for X2 mode compared to LSFG 2 (non-performance mode). Over 45% reduction for multipliers above X2 compared to LSFG 2 (non-performance mode).

The "Resolution Scale" feature remains an excellent way to further reduce GPU load. For instance, setting it to 90% roughly aligns with the LSFG 2 "Performance" mode.

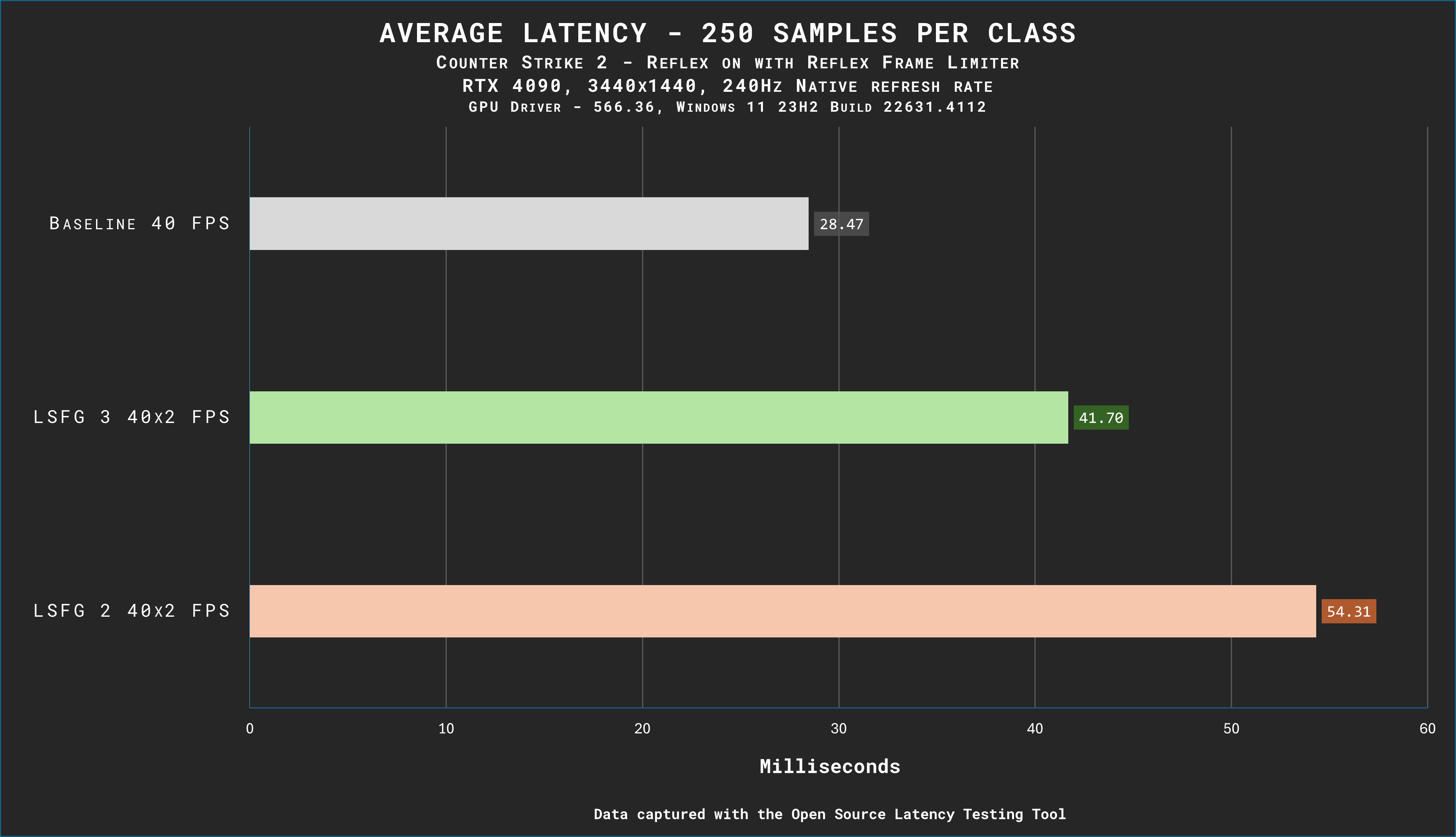

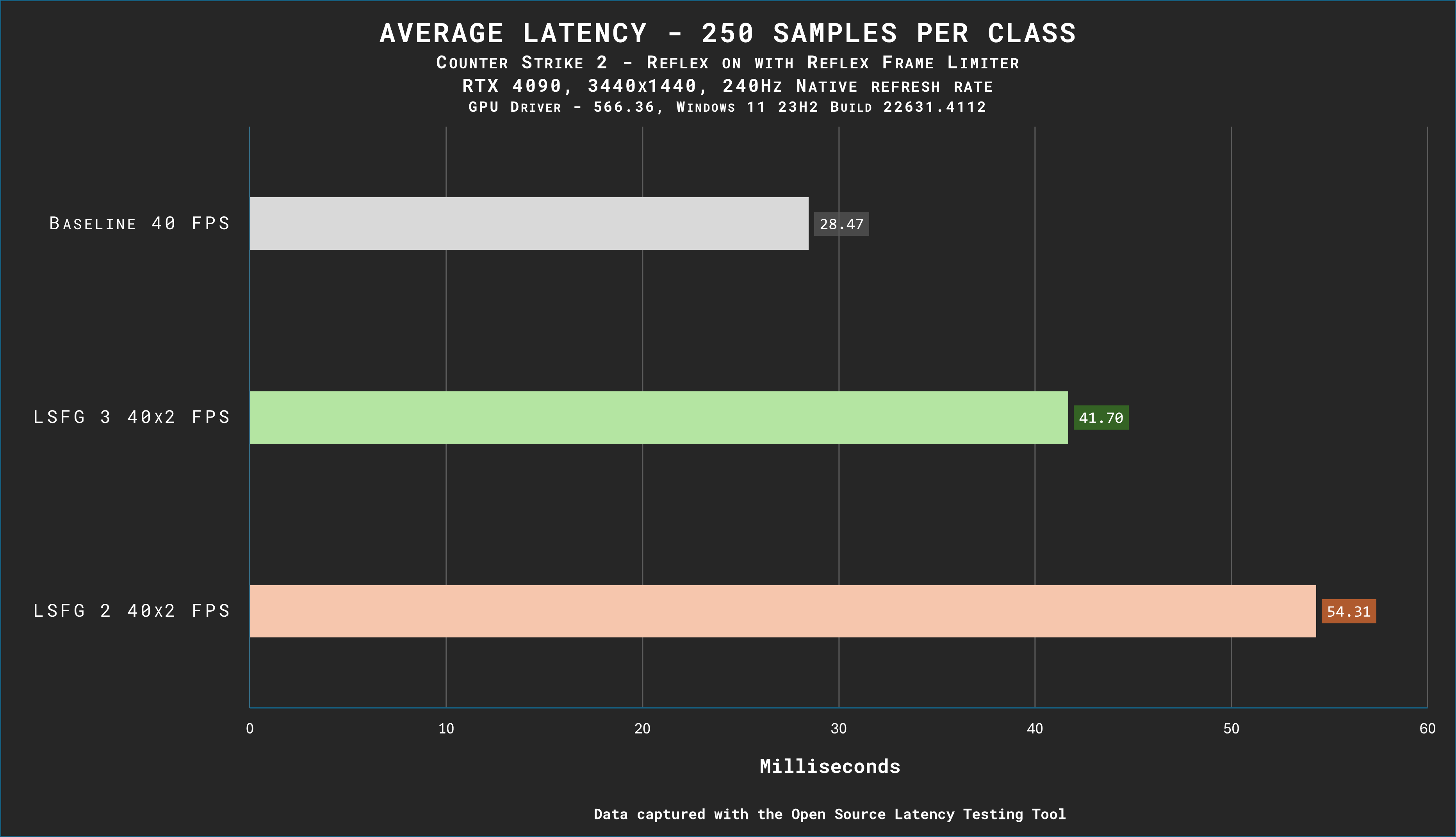

- Improved latency:

Latency testing with the OSLTT tool (at 40 base FPS, X2) shows approximately 24% better end-to-end latency compared to LSFG 2.

- Unlocked multiplier:

LSFG 3 also introduces an unlocked multiplier, now capped at X20. While this offers greater flexibility, the following recommendations apply for optimal results:

Base framerate: A minimum of 30 FPS is required (40 FPS or higher is preferred, with 60 FPS being ideal) at 1080p.

For best overall experience, locking the game framerate is recommended. This helps to avoid 100% GPU load (reducing its impact on latency) and ensures smoother framepacing.

For higher resolutions, use a higher base framerate than recommended, or use the "Resolution Scale" option to downscale the input to 1080p:

For 1440p, set it to 75%.

For 4K, set it to 50%.

Higher multipliers (e.g., X5 or above) are best suited for high refresh rate setups, such as:

48 FPS × X5 for 240Hz.

60 FPS × X6 for 360Hz.

60 FPS × X8 for 480Hz.
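
As a rough illustration of the recommendations above, this sketch computes the output framerate for a base framerate and multiplier, and the Resolution Scale percentage that brings a given vertical resolution down to 1080p. The function names are made up for this example and are not part of Lossless Scaling. The X2 to X20 range is taken from the announcement.

```python
def output_fps(base_fps: float, multiplier: int) -> float:
    """Framerate after frame generation: base framerate times the multiplier."""
    if not 2 <= multiplier <= 20:  # the announcement mentions X2 up to X20
        raise ValueError("multiplier must be between 2 and 20")
    return base_fps * multiplier


def resolution_scale_for_1080p(display_height: int) -> int:
    """Resolution Scale (%) that downscales the input to 1080 lines."""
    return min(100, round(1080 / display_height * 100))


# The high refresh rate pairings listed in the announcement.
for base, mult in [(48, 5), (60, 6), (60, 8)]:
    print(f"{base} FPS x{mult} -> {output_fps(base, mult):.0f} FPS")

# 1440p and 4K (2160 lines) give the 75% and 50% values quoted above.
for height in (1080, 1440, 2160):
    print(f"{height}p -> Resolution Scale {resolution_scale_for_1080p(height)}%")
```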

By CptTombstone:

----------------------------------------------

We’re also happy to announce the Lossless Scaling 3.0 UI Update Beta! To join the beta testing, follow these steps:

Open Steam.

Navigate to Lossless Scaling in your library.

Right-click and select Properties.

Go to the Betas tab and select "beta" from the dropdown.

Your feedback on the new UI is invaluable as we continue to improve!

----------------------------------------------

On a side note, the DXGI Capture API is working again in Windows 11 24H2, thanks to the KB5046617 update. Much appreciated, Microsoft.

Pretty awesome IMO. I might have to spend the seven bucks just to check this out on my GTX 1070MQ laptop. Could breathe some new life into it for sure!

Pretty impressive that it's doing all of this without access to motion vectors.

Now that you mention it, utilities like this could bring new life to many devices, or extend the capabilities of future handhelds with high refresh rate displays. The new 2025 GPD Win Mini, for instance, has a 120Hz VRR display, but you aren't going to run many games at that framerate natively.

In the end you are internally running those games at the original framerate -so, you aren't breaking the game- but the extra smoothness certainly helps and makes those games actually more enjoyable.

Latency for these recommendations (48 FPS × X5 for 240Hz, 60 FPS × X6 for 360Hz, 60 FPS × X8 for 480Hz) would be interesting to see vs baseline.

The graphs included in the announcement seem to indicate it's one additional (generated) frame of latency over the base framerate, approximately matching how DLSS-FG behaves.

Math:

- At 40FPS, the latency is 28.5ms

- At 80FPS, we assume the latency is 14.25ms. (The assumption being input latency is coupled to frametime, because if it wasn't, we wouldn't be having this conversation.)

- We are still subject to the raw underlying frames, so 28.5ms is still the absolute floor, but...

- If we add 14.25 (one generated frame at 80fps) to 28.5 (the underlying "true" rasterized framerate), we get 42.75ms -- which is actually slightly higher than the measured 41.8ms indicated on the graph.

Again, this roughly follows DLSS-FG performance as well, which adds one (generated) frame of additional latency in similar circumstances.
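
The estimate above can be written out as a small sketch. It uses the same assumption as the post (latency scales with frametime, so one generated frame at the output rate costs the base latency divided by the multiplier) and the figures read from the announcement's graph. The function name is hypothetical, and this is back-of-the-envelope arithmetic rather than a model of how LSFG works internally.

```python
def estimated_fg_latency_ms(base_latency_ms: float, multiplier: int = 2) -> float:
    """Base latency plus one generated frame at the output framerate.

    Assumption from the post: latency is coupled to frametime, so one
    output frame costs base_latency_ms / multiplier.
    """
    one_generated_frame_ms = base_latency_ms / multiplier
    return base_latency_ms + one_generated_frame_ms


base_latency = 28.5  # ms at 40 FPS, as quoted in the post
measured = 41.8      # ms at 40 FPS with X2, as quoted in the post

estimate = estimated_fg_latency_ms(base_latency, multiplier=2)
print(f"estimated: {estimate:.2f} ms, measured: {measured:.1f} ms")
print(f"difference: {estimate - measured:.2f} ms")
```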

Tested the quite demanding Bright Memory Infinite with Raytracing at the highest settings. Here's the experience and tips for achieving a PERFECT stable high framerate (165fps in my case):

- Optimal settings/ideal experience: Set the game's internal resolution scaling and Lossless Scaling (LS) to 50%. This provided a stable 41fps (locked via Rivatuner), and with FGx4, it consistently hit 165fps ALL the time.

- If FGx4 struggles to maintain 165fps (e.g., 41/152 fps instead of 41/164), it's likely due to GPU or CPU limitations. Reduce either the game's or LS's rendering scale (avoid using any specific upscalers in LS, as they consume resources). This happened to me and I fixed it that way.

- Lowering LS's rendering scale below 100% significantly helps achieve a consistent 165fps output.

- Locking the game at 55fps (1/3 of 165Hz) didn't work due to the high RT demands. At max settings with RT at 1440p, I managed a peak of 52fps, but it wasn't stable and not enough to hold 55fps. Perhaps reducing LS scaling to 25% might help.
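
The 41fps and 55fps locks in the tips above come from dividing the refresh rate by the multiplier. A minimal sketch of that arithmetic, with a made-up helper name, assuming you want the largest whole-number lock whose multiplied output does not exceed the refresh rate:

```python
def base_fps_lock(refresh_hz: int, multiplier: int) -> int:
    """Largest whole base framerate whose output stays at or below refresh_hz."""
    if multiplier < 1:
        raise ValueError("multiplier must be at least 1")
    return refresh_hz // multiplier


refresh = 165  # the monitor used in the post
for mult in (2, 3, 4):
    lock = base_fps_lock(refresh, mult)
    print(f"FGx{mult}: lock at {lock} fps -> {lock * mult} fps output")
```

For FGx4 this gives a 41fps lock and 164fps output, matching the "41/164" figure above.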

It's worth the 7 dollars. My laptop has a 1660 Ti in it with a 120Hz screen, and LS helps smooth things out quite a bit. I'm not disappointed that I spent the money on it.

Tested Castlevania Anniversary Collection at FGx10 by playing Super Castlevania IV. The difference is staggering: the animations feel super fluid, both yours and the enemies'. It actually improves how the game feels, since the transitions between animations aren't as sharp and abrupt as in the original.

Here the author of the video uses RDR2, Cyberpunk, etc., and pushes LS to the limit, using a 20fps base framerate in some cases, and even a 5fps base. With FGx8 and a 45fps base, he gets RDR2 to 360fps.

He also locks a game at 30fps, multiplies it by x20 and gets 520fps xDD.

He even plays YouTube videos at 30fps (480p, so the video runs at 30fps) and compares it to 30 x FGx20 = 600fps.

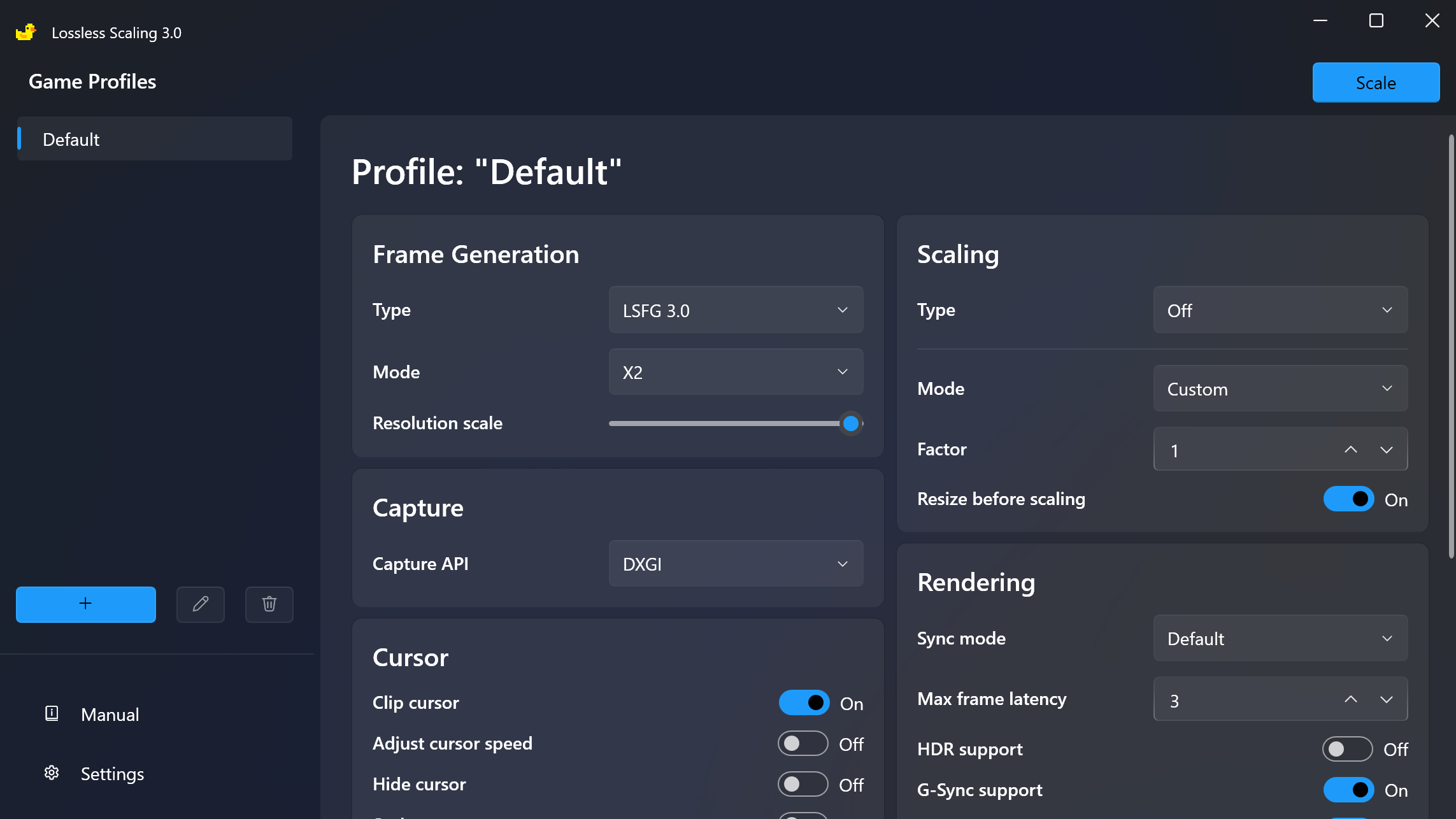

This is how I've set LS. It works fine this way for me.

I have a separate profile for Bright Memory Infinite: Highest RT entails a big performance hit, so I've set the Resolution Scale to less than 100%.

There is another profile for 2D games, with which I use Frame Generation x11. Beyond that my monitor shows artifacts, as it can't keep up with the LS animation speed.

It's worth it because when you play a SNES game or any 2D game the difference is night and day: you don't see the abrupt transitions between animations typical of old games anymore.

I have the Sync Mode set to Off -Allow Tearing- because my monitor has Freesync and in the Intel Graphics app I already have the options configured to not allow tearing.

I don't use any upscaling method in LS, since they use resources and I don't like any of the default upscalers -there are many, and some of them aren't bad-.

When I need extra performance from upscaling I just use the Resolution Scale option.

I have Integer Scaling and the new Intel Low Latency enabled in the Intel Graphics Center app and both do a good job.

Hope this helps someone else.

GhostofWar

Regular

Well, after seeing your screenshot I wondered why my UI was still the old one, then noticed I was still on 2 as well. I worked out that I needed to select the beta branch and got the 3 update. So I went and did some tests again, but it didn't seem to change my results. A bit more context so we know how I've set things up: I have a HyperSpin launcher setup. It launches my SNES/Mega Drive ROMs into RetroArch, which has bezels/overlays configured and uses a CRT shader. Amiga stuff launches into FS-UAE arcade mode, which has its own cover-flow-style UI. MAME launches MAME ROMs and uses overlays/bezels and a CRT shader. I also have a Taito Type X emu which I haven't used for a while, and that seems to not work with Windows 11, so that's irrelevant.

I couldn't get LS to work on SNES and Mega Drive/Genesis. I quickly tried skipping my front end and loading straight into RetroArch, and it didn't seem to change anything. I tried both WGC and DXGI.

Didn't have any better luck with FS-UAE, didn't get it working there either.

Which left me with MAME, and this worked fine. I tried a bunch of stuff, from Galaga/Galaxian/Scramble/Asteroids to Mortal Kombat/Operation Wolf/Punisher. Let's start with the good: the latency wasn't an issue for anything that didn't have any kind of slowdown, and I noticed the increased clarity pretty easily. This helped it get a tiny bit closer to a CRT because of the decreased motion blur, I guess. Vector games benefited from this pretty well too.

Now, anything that has slowdowns I didn't like at all. I think the increased input lag from FG, with the input lag getting worse from the performance problems, made it unplayable for me. I think there is a way to stop MAME from emulating the base hardware performance slowdowns, but I can't remember it, so I didn't try that. Metal Slug, Xain'd Sleena, Double Dragon and Pac-Land were all things I could remember having slowdowns in the arcades, so I tried those. When the performance struggles, the image manages to not fall apart, but the input is bad. It obviously suffers without FG as well, but it still stays somewhat playable.

I'm going to see if I can beat any of my high scores on Scramble/Galaxian/Elevator Action/Rally-X and a few others and see if it helps or hinders my performance. I do like the increased motion clarity, so hopefully the latency doesn't have an impact, even if I didn't really notice it in the quick tests.

I was also only using x2 mode: my monitor is 144Hz and the base frame rates were 60, so 120 was my best option.

Since you have a 144Hz monitor, you probably can scale well beyond x2 and even reach x8-x10 in 2D games without encountering artifacts. I've personally pushed it up to x10 (and even x11 in some cases), and the improvement in animation smoothness is quite the sight, not to mention motion clarity.

With 2D games experimenting is easier. I tried at 4K 60fps on my TV and the artifacts are quite noticeable at FGx4, while on the 165Hz display the margin is a lot more ample.

Regarding overclocking in MAME, as far as I know, it’s possible to overclock the original arcade hardware through MAME, though I’m not entirely sure about the specific steps involved.

As for why this doesn't work with other emulators, it might be because they typically run in fullscreen mode. Lossless Scaling (LS) requires the game to run in either windowed or borderless windowed mode to function properly. I'm not very familiar with RetroArch: I've tried it a few times, but its interface confused me, so I stopped using it. However, pressing Alt + Enter in your emulator might switch it from fullscreen to windowed mode.

If your monitor supports FreeSync or G-Sync, you can set LS Sync Mode to "Off (Allow Tearing)" to minimize latency. From there, you can use your GPU's native drivers to limit the framerate.

If you have an option to enable Reflex for all games, it helps. I use the new Low Latency mode setting in the Intel Graphics app, which can be set to Off, On, and On+Boost -this last one uses more CPU, so I just use the On option-.

GhostofWar

Regular

Interesting, I didn't think I would get much out of going above my refresh rate, so I'll try that. Yeah, it's a G-Sync monitor and I have the low latency set in the drivers to On+Boost. If I'm going to try like x8 or more, is there any reason to set a limit on the frame rate?

I think when turning on overlays in RetroArch it uses fullscreen mode, so I might have a play with that, set one up in windowed or borderless windowed mode and try again. I think I've set up MAME in fullscreen mode as well and that works, although I'm probably not going to play around there just to try to break it lol.

I don't really use the default RetroArch UI. I did to set up the controllers and some settings when setting it all up, but now the HyperSpin frontend is set to run RetroArch with command line options to specify the ROM and a couple of other settings, and it just goes straight into the game. I'm not a huge fan of the default UI on RetroArch myself either.

The reason to set a limit on the framerate is basically to achieve some consistency. That said, I unlocked the framerate, and in 2D games I didn't find much of a difference in gameplay between 165fps and 234fps (the max my rig can run Contra Anniversary Collection at); there wasn't any screen tearing at all.

Although if you multiply by 8, or 10, etc., LS generates frames at that rate regardless of your display's refresh rate. On 2D games the animation is going to be much smoother until your screen starts to do crazy things 'cos it can't keep up.

There is a point where either your CPU (The Witcher 3 with RT on, for instance) or your GPU becomes the limit. In Forza Horizon 5, a game with excellent Vsync options (1/4, 1/3, 1/2, 1/1), it's easier for me to lock the game at 41fps and enable LS FGx4 at Ultra settings (except RT, which I set to Off) than to lock it at 55fps and set LS to FGx3: I can't get the game running at 55/165fps with Ultra settings, but 41/164fps is entirely possible.

The Witcher 3 with RT on is another example of the limitations of my CPU (Ryzen 3700X): I can barely get 33fps with RT on, with the GPU resting on its laurels.
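
The trade-off in the Forza Horizon 5 and Witcher 3 examples (a lower lock with a higher multiplier when the hardware can't hold the higher lock) can be sketched as a small search. The helper below is hypothetical and only encodes the reasoning from these posts: try multipliers from x2 upwards and take the first one whose lock the game can actually sustain.

```python
from typing import Optional, Tuple


def pick_multiplier(refresh_hz: int, sustainable_fps: int,
                    max_multiplier: int = 20) -> Optional[Tuple[int, int]]:
    """Return (multiplier, base lock) for the smallest usable multiplier.

    sustainable_fps is the framerate the game can hold stably, which you
    have to measure yourself. It is an input here, not something computed.
    """
    for multiplier in range(2, max_multiplier + 1):
        lock = refresh_hz // multiplier
        if lock <= sustainable_fps:
            return multiplier, lock
    return None  # even the highest multiplier needs more than the game delivers


# 165Hz monitor: a game peaking around 52fps can't hold the 55fps lock for x3.
print(pick_multiplier(165, 52))
# A CPU-limited game at about 33fps would need x5.
print(pick_multiplier(165, 33))
```

Lower multipliers are tried first because, going by the posts in this thread, a higher base framerate shows fewer artifacts and adds less latency.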

I wonder if the Resolution Scale option in Lossless Scaling only affects the FG-generated frames. Decreasing the resolution scale certainly increases performance, but the game still looks clean even if you go as low as 25%, as if the actual frame rendered by the game isn't upscaled at all, but native.

Interesting testing, keep it up!
