Frenetic Pony

Veteran

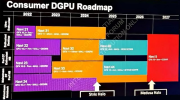

RDNA4 is out in less than 6 months! And we've got info on RDNA5 already:

DGF: A Dense, Hardware-Friendly Geometry Format for Lossily Compressing Meshlets with Arbitrary Topologies (a hardware-compressed mesh format that's raytracing-friendly, already praised by the lead designer of Nanite)

"the largest technical leap you will have ever seen in a hardware generation" (PR hype, but multiple leaks point towards Xbox going w/AMD again)

"RDNA5 to feature entirely chiplet arch" (according to patents)

"Medusa Point Zen 6/RDNA5 in 2026" (Zen 6/RDNA5 already scheduled for laptops/mobile in 2026)

Supposedly targeting late 2025 for desktop versions, though who knows if that date will be hit or if somewhere in the CES to Computex 2026 window is more likely. Still, that's a heck of a lot of info, implied and leaked (yes, Medusa has come up as a codename more than once and looks all but guaranteed to be the actual one). Feels like enough to start a thread.

Another leak points towards some sort of increased AI instruction throughput. Maybe it's the Block FP16 format AMD is so fond of (a whole block of values in a matrix multiply shares a single exponent), which is already shipping in XDNA2 and has confirmed support in MI350.
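
For anyone unfamiliar with block floating point, here's a rough Python sketch of the idea: one exponent is shared by a whole block of values, and each value is stored as a small signed integer mantissa. The 8-bit mantissa width, picking the exponent from the largest magnitude, and round-to-nearest are assumptions for illustration only, not AMD's actual Block FP16 layout.

```python
import math

def to_block_fp(values, mantissa_bits=8):
    """Quantize a block of floats to (shared_exponent, integer mantissas).

    Assumptions (illustrative, not AMD's real format): the shared exponent comes
    from the largest magnitude in the block, mantissas are signed ints of
    `mantissa_bits` bits including sign, rounding is round-to-nearest.
    """
    max_mag = max(abs(v) for v in values)
    if max_mag == 0.0:
        return 0, [0] * len(values)
    # frexp gives max_mag = m * 2**e with 0.5 <= m < 1, so every |v| < 2**e
    _, shared_exp = math.frexp(max_mag)
    limit = 2 ** (mantissa_bits - 1) - 1           # e.g. 127 for 8 bits
    scale = 2.0 ** (shared_exp - (mantissa_bits - 1))
    # clamp because rounding can push the largest value one step past the limit
    mantissas = [max(-limit, min(limit, round(v / scale))) for v in values]
    return shared_exp, mantissas

def from_block_fp(shared_exp, mantissas, mantissa_bits=8):
    """Decode back to floats: every mantissa uses the same power-of-two scale."""
    scale = 2.0 ** (shared_exp - (mantissa_bits - 1))
    return [m * scale for m in mantissas]

def block_dot(a, b, mantissa_bits=8):
    """Dot product where the multiply-accumulate runs purely on integers."""
    ea, ma = to_block_fp(a, mantissa_bits)
    eb, mb = to_block_fp(b, mantissa_bits)
    acc = sum(x * y for x, y in zip(ma, mb))       # integer MACs only
    # the two block scales are applied once at the end, not per element
    return acc * 2.0 ** ((ea - (mantissa_bits - 1)) + (eb - (mantissa_bits - 1)))

exp, mants = to_block_fp([1.0, 0.5, -0.25, 0.01])
print(exp, mants)                    # 1 [64, 32, -16, 1]
print(from_block_fp(exp, mants))     # [1.0, 0.5, -0.25, 0.015625]
print(block_dot([1.0, 0.5], [1.0, 0.5]))  # 1.25
```

The appeal for hardware is visible in block_dot: the inner loop is cheap integer multiply-accumulate, and the exponent math happens once per block instead of once per element. The tradeoff is precision for small values sitting in a block with a large outlier (0.01 comes back as 0.015625 above).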