That seems a really poor oversight IMO. We've had dev talks highlighting the need for CPU-optimised engine design for years now, along with the development of parallelisable entity-based engines. Even I, in my own efforts, have designed my game around concepts that can be parallelised if/when the need arises. For mainstream studios not to be doing this when it's their paid job, while independent modders are able to hack it in themselves, is poor form indeed.

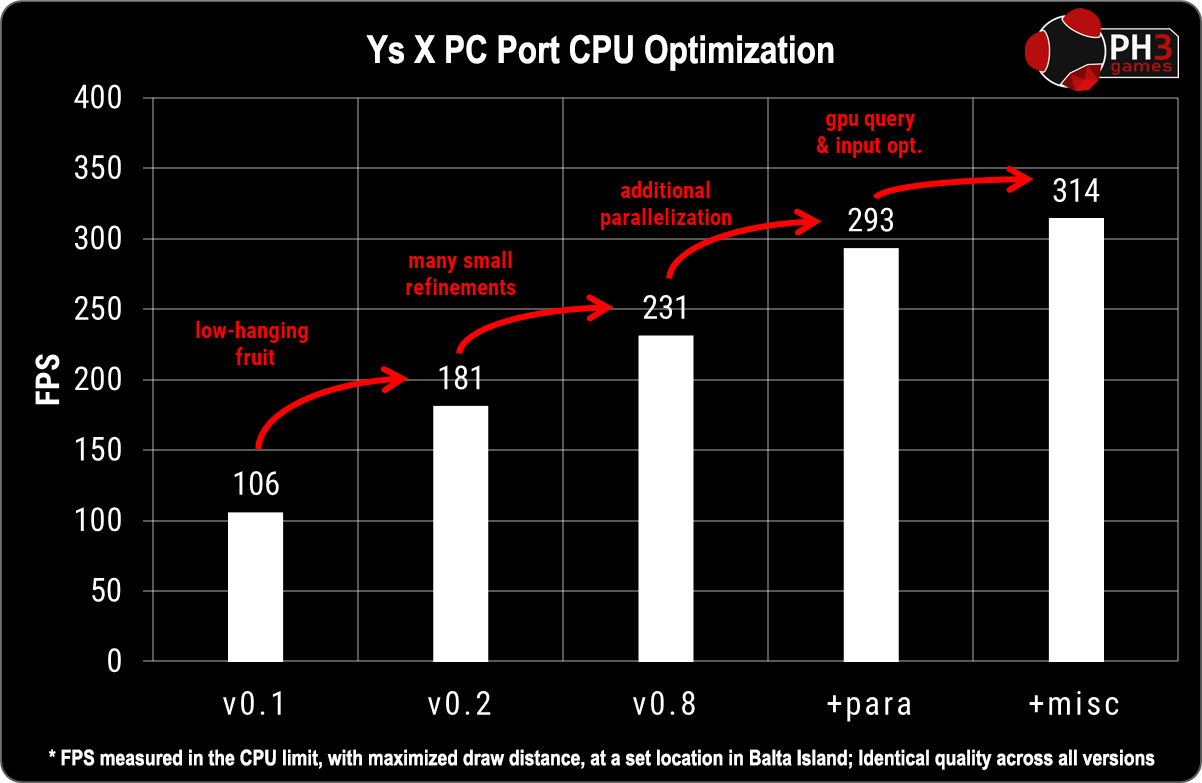

I feel there ought to be an 'Industry Award' review at the end of the year that highlights the highs and lows of games; "The Foundries" or something. Ys X would win a Wooden Anvil for not parallelising its main code, and Durante et al. would get a Golden Hammer for doing it and showing what's possible and what devs should be doing.

Without shedding light on poor practice, it'll be difficult to pressure devs to amend their ways. When they are named and shamed, they might reconsider their priorities.

Note: 'Devs' means the whole studio, including the execs making decisions that affect everything. The finger isn't being pointed at the engineers, as we don't know whether it's their call, or whether they've requested an engine update and the execs are refusing. Something somewhere in the studios needs to change when CPU utilisation in released games is this poor.