DLSS, XeSS and FSR certainly don’t produce the same output... It would be great if Microsoft could dictate some sort of minimum error or SNR metric that upscalers would be required to meet

I don't think it's a different level of abstraction. This is no different to anisotropic filtering or MSAA.

If image upscaling is an extension of MSAA, I'd expect all upscaling methods to be precisely defined, just like bilinear/trilinear/anisotropic texture filtering - so there would be detailed specifications on DirectX Specs and a WARP12 implementation, just as happened with other major features introduced in the last ten years. Judging by earlier examples, the common API would certainly draw on prior proprietary technologies, but it won't simply copy previous designs to the letter.
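To make the "minimum error or SNR metric" idea from the quote concrete, here is a rough sketch of how a vendor upscaler's output might be checked against a reference image (for instance one produced by a reference implementation). Everything here is an assumption for illustration: the function names, the use of PSNR as the metric, and the 40 dB threshold are hypothetical and not taken from any DirectX specification.

```python
import math

def psnr(reference, candidate, peak=1.0):
    """Peak signal-to-noise ratio in dB between two images.

    Images are flat sequences of floats in [0, peak] with equal length.
    """
    if len(reference) != len(candidate) or len(reference) == 0:
        raise ValueError("images must be non-empty and the same size")
    # Mean squared error over all samples.
    mse = sum((r - c) ** 2 for r, c in zip(reference, candidate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10((peak * peak) / mse)

def meets_minimum(reference, candidate, min_db=40.0):
    """Hypothetical conformance check: pass if PSNR reaches the threshold."""
    return psnr(reference, candidate) >= min_db
```

A real conformance test would probably need a perceptual or temporal metric as well, since PSNR on single frames says nothing about flicker or ghosting.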

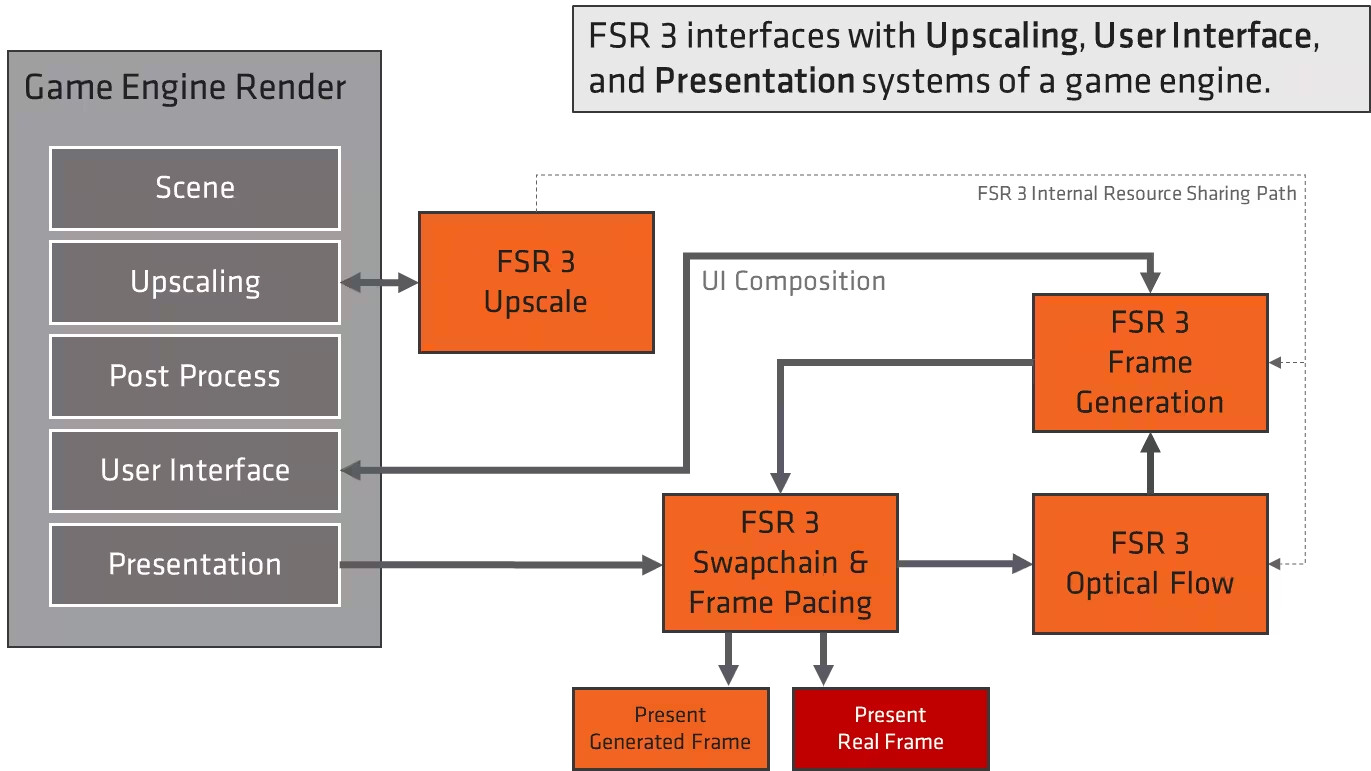

As for motion frame generation, it's still an evolving technology and there is no universally accepted post-processing algorithm to serve as a reference implementation. Current designs are much like Phong/Gouraud color/lighting systems in the early days of 3D graphics - once hardware became more powerful, these were superseded by superior methods like textures and shader programs (which in turn are being superseded by global illumination techniques based on ray/path tracing, which require much higher levels of processing performance).

Ideally, post-processing should be user-pluggable, so there would be a standard DirectML-based reference and proprietary driver-based implementations, each with a predefined set of quality/performance controls, as well as custom post-processing callbacks for those developers who want finer control over quality/performance...
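Roughly what I mean by "user-pluggable", as a toy sketch rather than a proposal for the actual API. All names (`UpscaleSettings`, `UpscalerRegistry`, `reference_upscale`) are made up for illustration, and the nearest-neighbour function is only a stand-in for whatever a standard reference implementation would do:

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Frame = List[List[float]]  # grayscale image as rows of samples

@dataclass
class UpscaleSettings:
    scale: int = 2             # integer upscale factor
    quality: str = "balanced"  # hypothetical quality/performance preset

Upscaler = Callable[[Frame, UpscaleSettings], Frame]
PostCallback = Callable[[Frame], Frame]

def reference_upscale(frame: Frame, settings: UpscaleSettings) -> Frame:
    """Nearest-neighbour stand-in for a standard reference implementation."""
    s = settings.scale
    # Repeat every pixel s times horizontally and every row s times vertically.
    return [[px for px in row for _ in range(s)] for row in frame for _ in range(s)]

class UpscalerRegistry:
    """Holds the reference path plus any driver-provided implementations."""

    def __init__(self) -> None:
        self._impls: Dict[str, Upscaler] = {"reference": reference_upscale}

    def register(self, name: str, impl: Upscaler) -> None:
        self._impls[name] = impl

    def run(self, frame: Frame, settings: UpscaleSettings,
            prefer: str = "reference",
            post: Optional[PostCallback] = None) -> Frame:
        # Fall back to the reference path if the preferred one is missing.
        impl = self._impls.get(prefer, reference_upscale)
        out = impl(frame, settings)
        # Optional developer-supplied post-processing callback.
        return post(out) if post is not None else out
```

The point is only that the application picks an implementation by name, always has the reference path to fall back on, and can hook its own callback in after the upscale.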