Its comparison necessitates a bit of analysis, though.

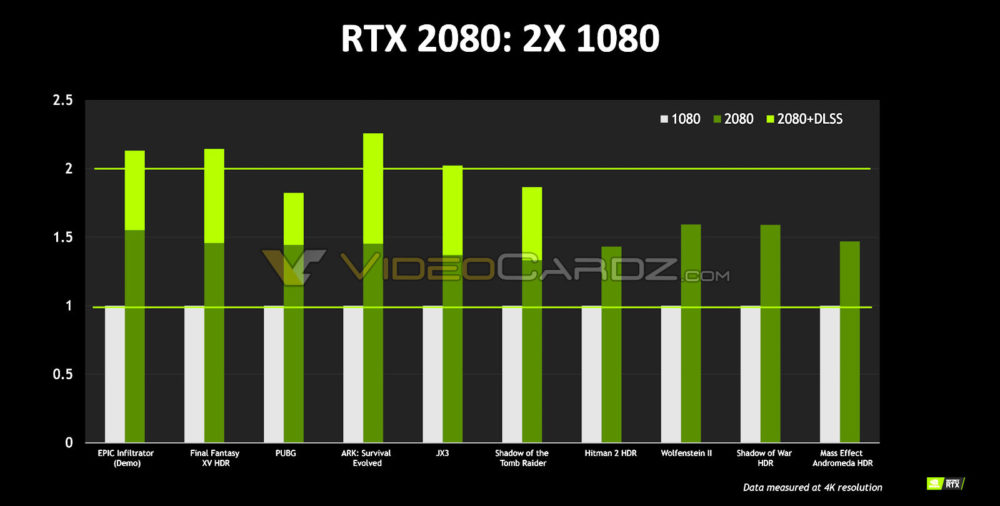

Right out of the gate, we see that six of the 10 tested games include results with Deep Learning Super-Sampling enabled. DLSS is a technology under the RTX umbrella that requires developer support. It purportedly improves image quality through a neural network trained on 64 jittered samples of a very high-quality ground truth image. This capability is accelerated by the Turing architecture’s tensor cores and is not yet available to the general public (although Tom’s Hardware had the opportunity to experience DLSS, and it was quite compelling in the Epic Infiltrator demo Nvidia had on display).

The only way for performance to increase using DLSS is if Nvidia’s baseline was established with some form of anti-aliasing applied at 3840x2160. By turning AA off and using DLSS instead, the company achieves similar image quality, but benefits greatly from hardware acceleration to improve performance. Thus, in those six games, Nvidia demonstrates one big boost over Pascal from undisclosed Turing architectural enhancements, and a second speed-up from turning AA off and DLSS on. Shadow of the Tomb Raider, for instance, appears to get a ~35 percent boost from Turing's tweaks, plus another ~50 percent after switching from AA to DLSS.
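
To put rough numbers on that two-step gain, here is a quick back-of-the-envelope sketch in Python. It assumes the ~35 percent and ~50 percent figures we eyeballed from Nvidia's chart. Because the chart is normalized to Pascal, the second figure could be read either as extra percentage points over the same Pascal baseline or as a gain on top of the already-faster Turing result, so the sketch computes both. The function names are ours, purely for illustration.

```python
def additive_speedup(arch_gain: float, dlss_gain: float) -> float:
    """Both gains are expressed against the same Pascal baseline (1.0x)."""
    return 1.0 + arch_gain + dlss_gain


def compounded_speedup(arch_gain: float, dlss_gain: float) -> float:
    """The DLSS gain is applied on top of the Turing-without-DLSS result."""
    return (1.0 + arch_gain) * (1.0 + dlss_gain)


# Approximate values read off the chart; these are assumptions, not measurements.
arch_gain = 0.35  # Turing vs. Pascal, anti-aliasing on in both cases
dlss_gain = 0.50  # further gain after swapping anti-aliasing for DLSS

print(f"additive reading:   {additive_speedup(arch_gain, dlss_gain):.2f}x Pascal")
print(f"compounded reading: {compounded_speedup(arch_gain, dlss_gain):.2f}x Pascal")
```

Either way, the combined result lands somewhere between roughly 1.85x and a little over 2x Pascal's performance in that title, with the DLSS step contributing a large share of it.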

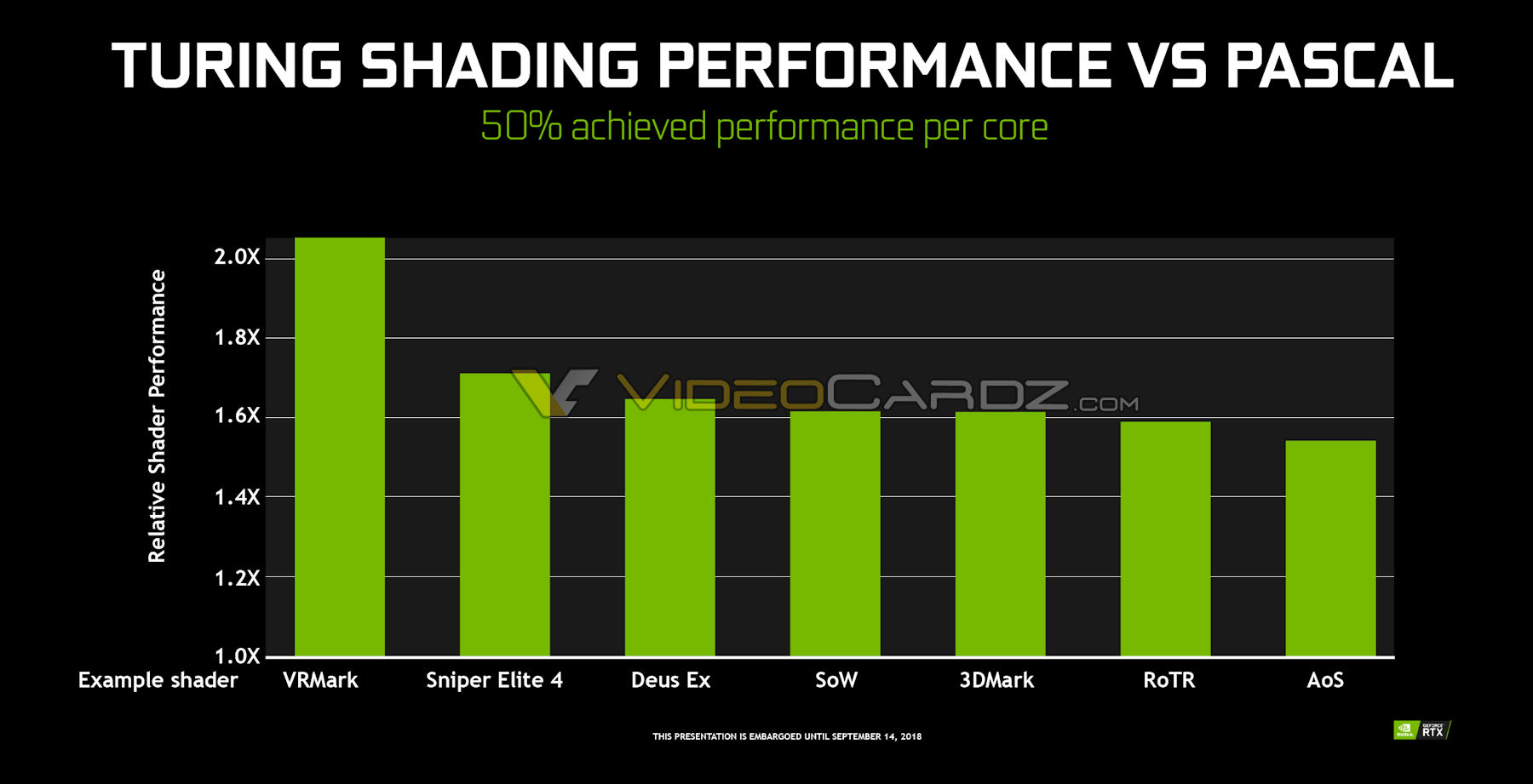

In the other four games, improvements to the Turing architecture are wholly responsible for gains ranging between ~40 percent and ~60 percent. Without question, those are hand-picked results. We’re not expecting to average 50 percent higher frame rates across our benchmark suite. However, enthusiasts who previously speculated that Turing wouldn’t be much faster than Pascal due to its relatively lower CUDA core count weren’t taking the underlying architecture into account. There’s more going on under the hood than the specification sheet suggests.