The GeForce GTX 980 (non-Ti) also had 4 GB of memory. I know several people with a 1440p display + GTX 980. We also have them at work. I also know people with a GTX 970 + 1440p display (and that card had "only" 3.5 GB of usable memory). I don't agree that a 4 GB GPU + 1440p is a bad pairing. Current games run just fine.

Lots of people are still using a GeForce GTX 680, usually paired with a 1080p display. All new games must be designed to work perfectly at 1080p with a 2 GB GPU. Popular Nvidia flagship GPUs had just 2 GB of memory a few years ago. Devs just can't ignore those. The GTX 680 is roughly as powerful as the PS4 Pro.

At the time of the GTX 480/5870 (2009-2010), 512MB cards were the norm and almost all games were designed around that limit. There was only one game that ever needed more than 1GB of VRAM on PC, and that was GTA IV: it needed 1.5GB at 1080p, at a time when 1080p wasn't as widespread as it is today. There was also COD Modern Warfare 2, which required roughly 1GB or more of VRAM at 1080p, as well as Arma 2, but those were nothing special.

By the time the GTX 580/6970 came out, 2GB+ cards had become the standard at the high end (NV later released 3GB versions of the GTX 580), but you didn't really need that much VRAM unless you wanted to play at ultra-widescreen resolutions or with heavily modded games. So outside of GTA IV, all was fine and dandy.

That changed around 2011 with Rage, which required 1.5GB of VRAM at the highest texture level to prevent streaming issues, and later with the advent of Max Payne 3, which pushed the same memory usage as Rage and GTA IV. But by that time we had the GTX 680/7970, and 3GB/4GB cards were a reality (4GB GTX 680s). However, 1.5GB cards were more than enough, and you only needed more if you planned to use resolutions above 1080p, at least in those 3 games (GTA IV, Max Payne 3, Rage).

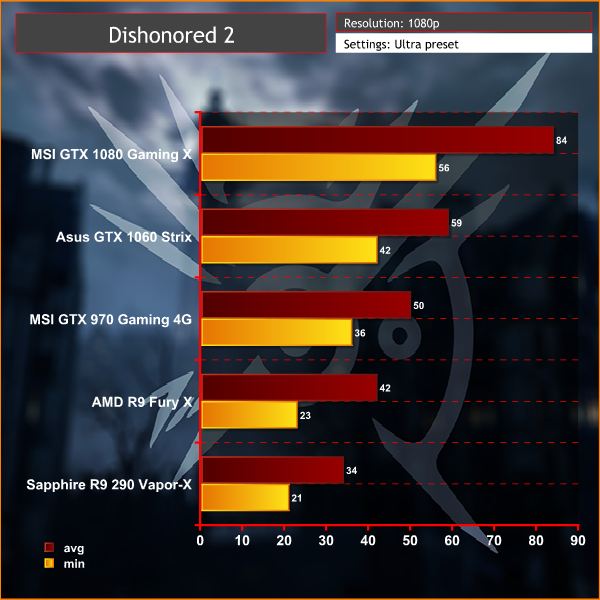

In 2013, things got out of hand with the release of the likes of COD Ghosts. Now we enter the territory where video games use crazy amounts of VRAM irrespective of the user's resolution, and they use it just to store textures. At that time we saw the necessity of 3GB and 4GB cards. BF4 recommended 2GB+ cards for large maps, Arma 3 did the same, and then Thief did the same thing as well! Then we had Watch_Dogs, Wolfenstein, Titanfall, Daylight, Dead Rising 3, Ryse, COD Advanced Warfare, Dying Light, Dragon Age Inquisition, Far Cry 4, The Evil Within, Just Cause 3, COD Black Ops 3, and Evolve all do the same, sometimes even pushing past the 3GB limit into 4GB! Then we pushed further into the territory of 4GB+ games like Shadow Of Mordor, Batman Arkham Knight, Assassin's Creed Unity, Rise Of The Tomb Raider, Mirror's Edge Catalyst, Rainbow Six Siege, Doom, GTA V, and many of the AAA games released in 2016: Dishonored 2, Gears Of War 4, Forza Horizon 3, Deus Ex Mankind Divided, COD Infinite Warfare. Some of these games already push 7GB+ of VRAM utilization!

In my personal experience, having 16GB of system RAM can sometimes negate the need for more than 3GB or 4GB of video RAM at 1080p, but that depends on the game (I tried this with Shadow Of Mordor, Batman Arkham Knight, and Assassin's Creed Unity), and sometimes even on the level within those games. But for those who seek the maximum visual quality in the current roster of PC games, 4GB sadly is not enough.