You conveniently left out the second part of Timothy's statement thankyouverymuch.

Developers can selectively super-sample alpha-test with TXAA if they wanted to improve quality

This is no MLAA.

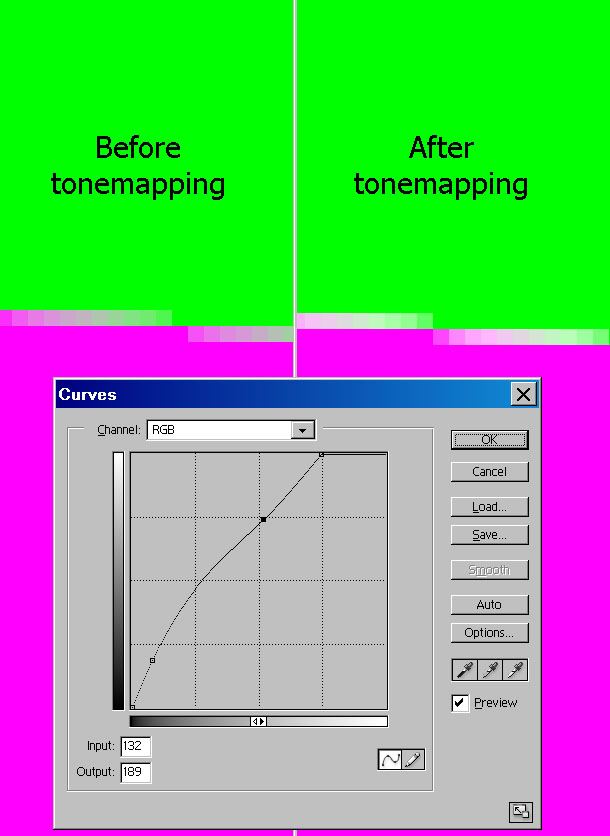

Because doing pre-tonemap or post-tonemap resolve is completely independent of MSAA itself as a method. Yes, initially MSAA resolve used purely fixed function hardware and this happened pre-tonemapping, so in the DX9 era you would have fundamental problems with MSAA and HDR, but that hasn't been a limitation for a large number of hardware generations now, so the quality constraint is largely artificial.
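To make the ordering point concrete, here is a rough sketch of the two resolve orders for a single edge pixel. Everything in it is an illustrative assumption on my part: the simple Reinhard operator, the 4x sample count, the sample values, and the function names. It is not what any particular game or TXAA actually does.

```python
def reinhard(x):
    """Simple Reinhard operator (assumed here for illustration): maps [0, inf) to [0, 1)."""
    return x / (1.0 + x)


def resolve(samples):
    """Plain box-filter resolve: average the sub-samples of one pixel."""
    return sum(samples) / len(samples)


def tonemap_post_resolve(samples):
    """Average the HDR samples first, then tone map the result (the old fixed-function order)."""
    return reinhard(resolve(samples))


def tonemap_pre_resolve(samples):
    """Tone map every sample first, then average the displayable values."""
    return resolve([reinhard(s) for s in samples])


# Hypothetical 4x MSAA edge pixel: two samples on a very bright HDR surface,
# two samples on a black background.
edge_pixel = [100.0, 100.0, 0.0, 0.0]

print(tonemap_post_resolve(edge_pixel))  # close to 1.0: the edge pixel is almost full white
print(tonemap_pre_resolve(edge_pixel))   # close to 0.5: a proper halfway edge gradient
```

With the averaging done in HDR space the bright samples swamp the dark ones, so the edge pixel ends up nearly as bright as the surface itself and the edge looks un-antialiased. Tone mapping per sample keeps the 50% coverage visible in the final image, and nothing about MSAA as a method forces the first order.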

If I went away and created, say, a new texture compression technique, and then I wrote a paper for publication comparing the results of my new technique with a set of results that I obtained by running the worst available compressors for existing techniques, and then claimed victory, then during the review of my paper someone would (hopefully) point out that my methodology was fundamentally flawed, and that perhaps a fairer comparison would be in order if I wanted to be taken seriously.

If I then pointed out as justification that a lot of games had used the useless compressors, even though better ones had been available for 5 years, then I would also sincerely hope that such an explanation would not hold any water with the reviewers.

I don't see that this is any different, and I don't see why poor comparisons of this nature deserve to get a free ride. In some ways it seems worse if such a comparison is made in a more public, less technical arena like a blog, where people may be less likely to understand what is really going on, and are therefore more likely to take the presented information purely at face value.

I would perhaps not be feeling so critical if it was pointed out in the context of the comparison that it was completely unnecessary for the MSAA shot to look anything like as bad as it does, but there is nothing of this nature.

Yes, that's why I used definition and resolution - you simply cannot apply movie-style techniques to interactive game content, except maybe in narrative cut scenes. Movies and games are two entirely different beasts, like books and a debate - one being static, the other interactive. If you used writing-style language throughout a discussion, chances are people would consider you quite odd.

Take for example the defocus/depth of field that directs your attention to or highlights a particular part of a scene. In movies, that works well, since it's literally a rigidly scripted sequence of events that the writer and director laid out that way deliberately. In a game trying to create a convincing environment, however, all this focusing stuff has not yet worked nearly as well (of course I can only speak for my personal impression), since, with a few exceptions, the game cannot know where you're looking.

In a game, you use tricks to convey a high definition of detail, but your source material is limited in resolution - even the multi-million-polygon models in 3D-modelling programs are. Normally, you derive a fairly convincing overall representation of your object from that plus normal and whatnot maps, which you apply later on in....maximum details with little or no texture aliasing. When you upsample this, Nyquist turns in his grave, as does his theorem, and you have more detail available until shimmering starts. You already paid for that through upsampling, remember. And now you downsample again without adjusting the possibly higher level of detail first, that is, you're using inferior source material.

And that's what the "too blurry" moaners - at least I - do not like about this.

With regard to TXAA and the screenshots posted in the opening posts: assuming they are legit, it seems to me there's neither super- nor (A2C-) multisample-AA going on, otherwise those fences would not look as broken as they do. As it stands, to me those shots look like there's only an FXAA/MLAA-like filter and no higher-resolution, aka higher-quality, source material from which the downsampling took place.

I compiled two different parts of the scene from the shots in the opening post to show what I mean - they are enlarged by a factor of 2 with no resampling.

http://imgur.com/TgFL2

Regardless of intentions, he really should have shown both cases (tone map pre- and post-resolve). If he still wants to make a correctness argument on the latter, he can do that by showing cases in which it fails and TXAA looks better, but comparing only to the tone map post-resolve case and then hand-waving an argument about how pre-resolve is wrong is a bit weak IMHO.
