> No, they always use an overclocked 750Ti (+200MHz on the GPU and +400MHz on the VRAM), so it's not much faster even on paper, and Nvidia usually beats AMD handily if we compare cards with the same amount of FLOPS.

Even with that overclock, and assuming the card can sustain 200MHz over its rated boost clock at all times, the PS4 still holds many advantages over the 750Ti on paper. Here are the PS4's figures as a percentage of the overclocked 750Ti's:

- Pixel Fill Rate: 125%
- Texel Fill Rate: 112%
- Geometry Rate: 62%
- Memory Bandwidth: 166%
- Shader Flops: 112%
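The fill-rate and FLOPS rows follow directly from unit counts multiplied by clock speed. Below is a minimal sketch that reproduces those three rows. It assumes the commonly quoted reference specs, none of which are stated in the post: a PS4 GPU at 800MHz with 32 ROPs, 72 TMUs and 1152 shaders, and a GTX 750 Ti with a 1085MHz rated boost (plus the 200MHz overclock), 16 ROPs, 40 TMUs and 640 shaders. The geometry and memory bandwidth rows depend on figures this sketch doesn't model.

```python
# Assumed reference specs (not taken from the post): clock, ROPs, TMUs, shaders.
PS4 = {"clock_mhz": 800, "rops": 32, "tmus": 72, "shaders": 1152}
GTX_750TI_OC = {"clock_mhz": 1085 + 200, "rops": 16, "tmus": 40, "shaders": 640}


def throughput(gpu):
    """Theoretical peak rates: functional unit count x clock in GHz."""
    ghz = gpu["clock_mhz"] / 1000
    return {
        "pixel_fill_gpix_s": gpu["rops"] * ghz,
        "texel_fill_gtex_s": gpu["tmus"] * ghz,
        # 2 FLOPs per shader per clock (one fused multiply-add)
        "shader_gflops": gpu["shaders"] * 2 * ghz,
    }


def ratios(a, b):
    """Each of a's peak rates as a rounded percentage of b's."""
    ta, tb = throughput(a), throughput(b)
    return {metric: round(100 * ta[metric] / tb[metric]) for metric in ta}


if __name__ == "__main__":
    for metric, pct in ratios(PS4, GTX_750TI_OC).items():
        print(f"{metric}: {pct}%")
```

Under those assumptions this prints 125% for pixel fill and 112% for both texel fill and shader FLOPS. The last two match because the PS4 has 1.8x the TMUs and 1.8x the shaders of the 750 Ti.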

Note the massive memory bandwidth advantage. Yes, Nvidia is often more efficient than AMD in terms of both FLOP utilisation and memory bandwidth, but with advantages like those above, we'd still expect the PS4 to handily beat the 750Ti in pretty much every situation, even if it weren't punching above its weight at all. What we actually see is the 750Ti quite often keeping up with the PS4 and occasionally being outperformed by a fairly narrow margin. So where is the 60%?

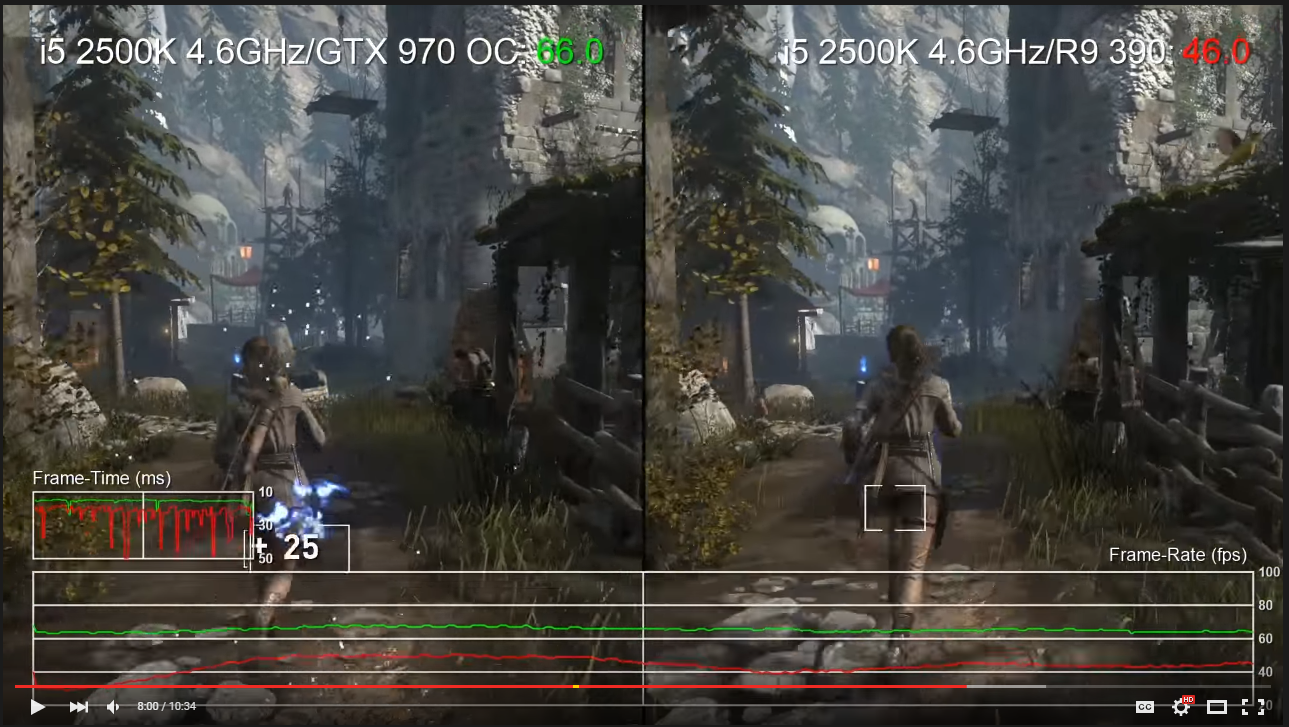

> And if you take Rise of the Tomb Raider, a ~1550 GFLOPS 'flops efficient' Nvidia card is beaten easily by a ~1300 GFLOPS 'not flops efficient' AMD card.

That 'not flops efficient AMD card' is also connected to a theoretical 272GB/s of memory bandwidth, compared to 92.8GB/s on the 750Ti OC. Add the fact that the game would have been architected specifically around GCN rather than Maxwell, and it'd be a very poor situation indeed if it couldn't beat the 750Ti. The problem is that ROTTR is a one-off; in most games the XBO actually performs worse than the 750Ti. So where does that leave the theory that consoles punch above their weight by 60%?
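The two bandwidth figures above can be recovered with the usual peak-bandwidth formula: transfers per second times bytes per transfer. This sketch assumes the 750 Ti's stock memory runs at 5400MT/s on a 128-bit bus, with the "+400MHz" overclock read as +400MT/s effective. It also assumes the Xbox One pairs DDR3-2133 on a 256-bit bus with the commonly quoted 204GB/s eSRAM peak. Simply summing the two Xbox One pools is a theoretical best case, not a measured figure.

```python
def peak_bandwidth_gb_s(effective_mt_s, bus_width_bits):
    """Theoretical peak bandwidth in GB/s: MT/s x bytes per transfer / 1000."""
    return effective_mt_s * (bus_width_bits / 8) / 1000


# Assumption: GTX 750 Ti at 5400 MT/s stock, +400 MT/s overclock, 128-bit bus.
gtx_750ti_oc = peak_bandwidth_gb_s(5400 + 400, 128)

# Assumption: Xbox One DDR3-2133 on a 256-bit bus plus a 204 GB/s eSRAM peak.
xbo_ddr3 = peak_bandwidth_gb_s(2133, 256)
xbo_total = xbo_ddr3 + 204

if __name__ == "__main__":
    print(f"750 Ti OC: {gtx_750ti_oc:.1f} GB/s")
    print(f"XBO DDR3 + eSRAM: {xbo_total:.1f} GB/s")
    print(f"XBO / 750 Ti OC: {xbo_total / gtx_750ti_oc:.1f}x")
```

On these assumptions the XBO has roughly 2.9x the 750 Ti OC's theoretical bandwidth, which is the gap the argument relies on.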

> And you are mistaken about eSRAM. It is only there to alleviate the low main memory bandwidth; it's not miracle hardware. Dozens of comparisons with PS4 multiplats have shown this.

No one claimed anything about it being 'miracle hardware', but like it or not, it does give the XBO a massive bandwidth advantage over the 750Ti (and R7 360). ROTTR was originally an XBO exclusive, so it would certainly have been optimised around that throughput. Comparisons to the PS4 don't change that fact. Even if the eSRAM only gives the XBO real-world memory throughput similar to the PS4's, that's STILL massively more than the 750Ti or R7 360 can manage.

Comparing completely mismatched parts to prove a console advantage doesn't really prove anything. I don't doubt that games will be built for the strengths of the consoles' architectures, so a game that started life as an XBO exclusive is going to be built around very high memory bandwidth. Unless you're comparing against a PC GPU with similar capabilities (which the 750Ti/R7 360 lack, even though they may be faster in other respects), you're not proving the console punches above its weight. You're just proving that a GPU which is less capable on paper (even if only in one key respect) struggles more in the real world in a game that depends on that capability.

When you can demonstrate that the PS4 consistently performs in line with an R9 280 (and the XBO with something around an R7 270), only then will you be proving that consoles are generally punching above their weight by about 60%. And I haven't seen that demonstrated in even one game, never mind most.
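To put the "60%" claim in raw numbers, here is a rough sketch of the shader throughput a PC card would need to match each console if the claim held. It assumes the commonly quoted console GPU configs, which the post doesn't state: 18 GCN compute units at 800MHz for the PS4 and 12 at 853MHz for the XBO. It also assumes 64 shaders per CU and 2 FLOPs per shader per clock. It only computes the targets and makes no claim about the R9 280's or R7 270's exact specs.

```python
def gcn_gflops(compute_units, clock_mhz):
    """Peak FP32 GFLOPS for a GCN GPU: CUs x 64 shaders x 2 FLOPs x GHz."""
    return compute_units * 64 * 2 * clock_mhz / 1000


# Assumed console configurations (not from the post).
ps4 = gcn_gflops(18, 800)
xbo = gcn_gflops(12, 853)

UPLIFT = 1.6  # "punching above their weight by about 60%"

if __name__ == "__main__":
    for name, gflops in [("PS4", ps4), ("XBO", xbo)]:
        print(f"{name}: {gflops:.0f} GFLOPS; a 60% uplift implies ~{gflops * UPLIFT:.0f} GFLOPS on PC")
```

That works out to roughly 2.9 TFLOPS for the PS4 and 2.1 TFLOPS for the XBO, which is the class of PC card the consoles would have to keep pace with.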