
Indiana Jones and the Great Circle [XBSX|S, PC, XGP, PS5]

- Thread starter cheapchips


Just started up the game for the first time. A few notes:

- MachineGames needs a new logo video. Watching it on an OLED was cringe... low-res and pixelated, and not true black, unlike e.g. the LucasArts or NVIDIA logo videos.

- Film grain was on (25%) by default. Get. That. Shit. Away. From. Default. On. The few people who enjoy it can choose to enable it.

- Same with chromatic aberration. Get. That. Shit. Away. From. Default. On.

- Default resolution was set to 1080p. Please copy it from the desktop resolution; that should not be complicated.

> Is the FOV adjustable?

Yes (90º is the default and the minimum setting).

> Is the FOV adjustable?

Looks like that is PC only. It seriously should be added on Xbox.

> On Series X here. Anyone else getting severe motion sickness with this game? I don't normally get motion sickness, but there's something going on here. I've turned off motion blur and tweaked a few other settings, but when I move around even slightly there's a ton of blur.
>
> I want to play this game but may have to pass on it.

Have you tried playing the game from farther away from the screen? Motion sickness is one of the worst feelings I've experienced. I remember a rally game that I liked, but I had to lower the resolution a lot to make it run smoothly, and I ended up getting motion sickness.

I also felt that when playing Heretic and Hexen.

On a different note, here's a review from a guy who only ever reviews games after completing them 100% on the highest difficulty.

Okay, flying a little bit close to the sun here, but while eating lunch and being irritated that certain cvars wouldn't apply, either in the console or when added to TheGreatCircleConfig.local, with the message '<cvar> can only be set on the command line', I cracked the game's executable open with a hex editor. Eventually I realized that it accepts cvars as command-line input, but prefixed with a "+" rather than the '-' or '--' most programs use. I should have known; I seem to remember this has been id Tech's way since the dawn of time. For the adventurous, there's a ton of neat stuff in plaintext in the .exe file, along with descriptions of what each one does/changes.

Big thank-you to MachineGames for not Denuvo-ing this, which instantly moved the game from a maybe-purchase to a definite purchase in my book.

Anyway, we are now officially path tracing happily on a 3080 10GB.

Step 1) Create a shortcut to TheGreatCircle.exe in "C:\Program Files (x86)\Steam\steamapps\common\The Great Circle" and add the command line argument "+pt_supportVRAMMinimumMB 9000"

Step 2) Start the game and enable path tracing via the cvar "rt_pathtracingenabled 1" in the console.

Step 3) Enjoy, and hope you don't run out of VRAM!
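The '+' convention from step 1 can be sketched as a small Python helper that assembles the text for the shortcut's Target field. This is a hypothetical helper for illustration, not part of the game or any tool. It assumes the default Steam library path quoted in step 1 (yours may differ) and only covers the command-line cvar, since step 2 enables rt_pathtracingenabled from the in-game console instead.

```python
from pathlib import PureWindowsPath

# Default Steam library location quoted in step 1; yours may differ.
GAME_EXE = PureWindowsPath(
    r"C:\Program Files (x86)\Steam\steamapps\common\The Great Circle\TheGreatCircle.exe"
)


def build_launch_args(cvars: dict) -> list[str]:
    """Hypothetical helper: turn {"name": value} into id Tech-style
    ["+name", "value", ...] arguments ('+' prefix, not '-' or '--')."""
    args: list[str] = []
    for name, value in cvars.items():
        args.extend([f"+{name}", str(value)])
    return args


def shortcut_target(exe: PureWindowsPath, cvars: dict) -> str:
    """Text for a Windows shortcut's Target field. The executable path
    is wrapped in double quotes because it contains spaces."""
    return " ".join([f'"{exe}"', *build_launch_args(cvars)])


if __name__ == "__main__":
    print(shortcut_target(GAME_EXE, {"pt_supportVRAMMinimumMB": 9000}))
```

The printed line is the quoted executable path followed by `+pt_supportVRAMMinimumMB 9000`, which is what step 1 describes. Further `+name value` pairs would be appended the same way, assuming the engine accepts those cvars on the command line.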

For initial testing, I'd set some of the other settings, like texture pool size and shadows, to 'low' for a margin of safety, and disable DLSS, which does take up some extra VRAM.

It definitely works, and there's certainly room for further optimization; hopefully the community can settle on a collection of settings that makes the 3080 10GB reasonably happy with it all enabled.

Some courageous soul might even want to try it on an 8GB card; unfortunately I just don't have one to test with.

Path tracing off:

Path tracing on: (it is definitely enabled, you can see from the lighting/shadow differences, as well as the performance hit)


A few more observations, this game may work a lot better when forced into doing so on cards with 4GB or 6GB of VRAM than previously thought.

Just for fun, I moved up to native 1440p with the texture pool on 'high' and path tracing enabled on the 3080 10GB.

The game seems completely indifferent to grossly overflowing its VRAM pool. Overall frame times are remarkably stable, with no hitching or hiccups, and not a hint of engine instability, crashing, or missing effects, textures, models, etc.

Enabling dynamic resolution scaling, forced to a 30fps target via cvar, was a surprisingly playable experience. If I were playing on the big screen, that's probably how I'd play it on my 3080, or at 40fps if I had a 120Hz container available.
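The choice of 30fps on the big screen, or 40fps given a 120Hz container, comes down to frame pacing: on a fixed-refresh display, a capped frame rate paces evenly only when it divides the refresh rate, so every frame stays on screen for the same whole number of refreshes. A small Python sketch of that arithmetic, assuming a 60Hz TV for the 30fps case and a fixed-refresh display without VRR (the function names are illustrative):

```python
from fractions import Fraction
from typing import Optional


def frame_budget_ms(target_fps: int) -> float:
    """Milliseconds available to render each frame at the target rate."""
    return 1000 / target_fps


def refreshes_per_frame(refresh_hz: int, target_fps: int) -> Optional[int]:
    """How many display refreshes each frame is held for, or None when the
    cap does not divide the refresh rate evenly (frames would alternate
    between different hold times, i.e. uneven pacing)."""
    ratio = Fraction(refresh_hz, target_fps)
    return int(ratio) if ratio.denominator == 1 else None


# 30fps on an assumed 60Hz TV, 40fps on 60Hz, and 40fps in a 120Hz container.
for hz, fps in [(60, 30), (60, 40), (120, 40)]:
    n = refreshes_per_frame(hz, fps)
    pacing = f"each frame held for {n} refreshes" if n is not None else "uneven pacing"
    print(f"{fps}fps @ {hz}Hz: {frame_budget_ms(fps):.1f} ms budget, {pacing}")
```

40fps gives a 25 ms budget instead of 33.3 ms, and it only paces evenly when the output runs at 120Hz (three refreshes per frame).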

I sure am glad I have a PCIe 4.0 motherboard, though: with my 3080 in PCIe 4.0 x16 mode, I saw 80% bus utilization in GPU-Z at some points.
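To put the 80% figure in perspective, here is a rough Python estimate of PCIe link bandwidth from the per-lane transfer rates and 128b/130b encoding. It gives theoretical one-direction numbers that ignore protocol overhead, and it assumes GPU-Z's bus interface load reading maps linearly onto link bandwidth, which may not be exactly how that reading is derived.

```python
# Per-lane signalling rate (GT/s) and line-code efficiency per PCIe generation.
PCIE_GENS = {
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
}


def link_bandwidth_gbs(gen: int, lanes: int) -> float:
    """Theoretical one-direction bandwidth in GB/s, before protocol overhead."""
    rate_gt, efficiency = PCIE_GENS[gen]
    return rate_gt * lanes * efficiency / 8  # 8 bits per byte


gen4 = link_bandwidth_gbs(4, 16)
gen3 = link_bandwidth_gbs(3, 16)
print(f"PCIe 4.0 x16: {gen4:.1f} GB/s, so 80% load is about {0.8 * gen4:.1f} GB/s")
print(f"PCIe 3.0 x16: {gen3:.1f} GB/s at 100%")
```

Under those assumptions, 80% of a PCIe 4.0 x16 link (about 25 GB/s) is more than a PCIe 3.0 x16 link can carry at all (about 15.8 GB/s).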

It sure was getting a workout, but I guess that's the point.

Having said all that, my worries about running out of VRAM with DLSS were entirely unfounded. At 1440p with DLSS Performance, even trampling all over the VRAM pool, it runs great, nearly as well as my 3090 does at the same internal resolution (720p), at around 50-70fps.
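The "720p internal" figure follows from DLSS Performance rendering at half the output resolution on each axis. A Python sketch using the commonly cited default per-axis scale factors for each DLSS mode (individual games can override these, so treat the table as an assumption):

```python
# Commonly cited default per-axis render scales for DLSS Super Resolution modes.
DLSS_SCALE = {
    "Quality": 2 / 3,
    "Balanced": 0.58,
    "Performance": 0.5,
    "Ultra Performance": 1 / 3,
}


def internal_resolution(width: int, height: int, mode: str) -> tuple[int, int]:
    """Approximate internal render resolution before DLSS upscaling."""
    scale = DLSS_SCALE[mode]
    return round(width * scale), round(height * scale)


print(internal_resolution(2560, 1440, "Performance"))  # 1440p output
print(internal_resolution(3840, 2160, "Quality"))      # 4K output
```

The same table matches the later report of DLSS Quality upsampling to 4K from 1440p: Quality at 3840x2160 renders internally at 2560x1440.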


great find!Okay, flying a little bit close to the sun here, but while eating lunch and being irritated that certain cvars wouldn't apply in the console, or if you added them to TheGreatCircleConfig.local with a message of '<cvar> can only be set on the command line', I cracked the game's executable open with a hex editor. Eventually I realized that it accepts cvars as command line input, but not when prefixed with '-' or '--' as in most programs, but with a "+". I should have known, I seem to remember this has been IDTech's way since the dawn of time. For the adventurous, there's a ton of neat stuff in plaintext in the .exe file along with descriptions of what it does/changes.

Big thank-you to MachineGames for not Denuvo-ing this, which moved the game instantly from maybe-purchase to a definitely-purchase in my books.

Anyway, we are now officially path tracing happily on a 3080 10GB.

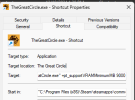

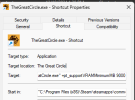

Step 1) Create a shortcut to TheGreatCircle.exe in "C:\Program Files (x86)\Steam\steamapps\common\The Great Circle" and add the command line argument "+pt_supportVRAMMinimumMB 9000"

View attachment 12594

Step 2) Start the game and enable path tracing via the cvar "rt_pathtracingenabled 1" in the console.

Step 3) Enjoy, and hope you don't run out of VRAM!

I'd set some of the other settings to 'low' like the texture pool size and the shadows to get a margin of safety for initial testing, as well as disabled DLSS which does take up some extra VRAM.

It definitely works, and there's certainly room for further optimization; hopefully the community can settle on a collection of settings that makes the 3080 10GB reasonably happy with it all enabled.

Some courageous soul might even want to try it on an 8GB card; unfortunately I just don't have one to test with.

Path tracing off:

View attachment 12596

Path tracing on: (it is definitely enabled, you can see from the lighting/shadow differences, as well as the performance hit)

View attachment 12598

It didn't work for me though, but it was exciting just trying. I used the same settings as you but for 16GB of VRAM.

That being said, I got the game to run better. I locked it to a fairly stable 41fps and used Lossless Scaling for x4 frame generation.

It works very well. However, when a section with vegetation appears (usually in more open-world areas), the framerate plummets into the 30s.

60fps with RTX 3060-optimised settings (some I found in a random YouTube video) worked very well too, except in those areas I mentioned.

> x4 frame generation.

Does that mean that for every frame it generates 4 additional frames?

> Does that mean that for every frame it generates 4 additional frames?

I think it's 3 generated frames, so 4x the framerate. When I was playing Space Hulk: Vengeance of the Blood Angels, a game locked to 15 FPS, 4x gave me 60.
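The arithmetic in this exchange, as a Python sketch: an N-times frame-generation mode adds N-1 generated frames for every rendered one, so the displayed rate is N times the base rate. This is the idealized case; it ignores the GPU time the generation itself costs, which in practice pulls the base rate down somewhat.

```python
def frame_generation(base_fps: float, factor: int) -> dict:
    """Idealized N-times frame generation: factor - 1 generated frames are
    shown for every rendered frame, so the displayed rate is base * factor.
    Ignores the GPU cost of generating frames, which lowers base_fps in practice."""
    if factor < 1:
        raise ValueError("factor must be at least 1")
    return {"generated_per_rendered": factor - 1, "output_fps": base_fps * factor}


print(frame_generation(15, 4))  # the 15 FPS-locked Space Hulk example
print(frame_generation(41, 4))  # the 41fps lock with Lossless Scaling x4
```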

> Is there an explanation for why path tracing is only enabled on Nvidia cards?

Even the highest-end NVIDIA card has problems doing path tracing with DLSS and FG at 4K (I know my 4090 choked at certain points, so I had to reduce settings), so perhaps AMD cards will come later, but I fear at far worse performance, just like in e.g. Cyberpunk 2077.

Simply put, AMD's performance tanks badly when multiple RT effects are done at the same time, and they have a very small market share with their 7900 XTX, so I suspect the developer's effort/benefit calculus doesn't favour focusing on that.

> Is there an explanation for why path tracing is only enabled on Nvidia cards?

Tough to say. I suppose it could be using some Vulkan extension that only Nvidia supports, or supports correctly.

When it initializes, the engine prints a bunch of output to the console, also saved to 'qconsole.log', that gives some hints as to what it might be doing. On my system it lists all of these extensions, including VK_NV_ray_tracing_invocation_reorder (SER) and VK_EXT_opacity_micromap (OMMs). That makes me think some of them are extensions it only uses if available, though, given that I have a 3080 and a 3090, neither of which supports SER or OMMs in hardware, and the FullRT/PT mode works just fine.

Or do the Ampere drivers expose the extensions but just do nothing when they're invoked, or fall back to doing the work in a shader?

```
-------------------------
[2] Vulkan Device Info:
[2] -------------------------
[2] AS Pool: 3072 MB
[2] OMM Pool: 200 MB
[2] Vendor : NVIDIA
[2] GPU : NVIDIA GeForce RTX 3090
[2] VRAM : 24326 MiB
[2] Driver : 566.14 (8d838000)
[2] VK API : 1.3.289
[2]
[2] -------------------------
[2] Device layers: 3
[2] -------------------------
[2] - VK_LAYER_NV_optimus (00403121, 00000001) - "NVIDIA Optimus layer"
[2] - VK_LAYER_VALVE_steam_overlay (004030cf, 00000001) - "Steam Overlay Layer"
[2] - VK_LAYER_VALVE_steam_fossilize (004030cf, 00000001) - "Steam Pipeline Caching Layer"
[2] -------------------------
[2] Device Extensions
[2] -------------------------
[2] + VK_KHR_swapchain
[2] + VK_NV_fragment_shader_barycentric
[2] + VK_KHR_dedicated_allocation
[2] + VK_NV_dedicated_allocation_image_aliasing
[2] + VK_KHR_8bit_storage
[2] + VK_KHR_16bit_storage
[2] + VK_KHR_shader_float16_int8
[2] + VK_EXT_descriptor_indexing
[2] + VK_EXT_shader_subgroup_ballot
[2] + VK_EXT_sampler_filter_minmax
[2] + VK_KHR_buffer_device_address
[2] + VK_KHR_spirv_1_4
[2] + VK_KHR_shader_float_controls
[2] + VK_KHR_dynamic_rendering
[2] + VK_EXT_memory_budget
[2] + VK_KHR_draw_indirect_count
[2] + VK_EXT_extended_dynamic_state
[2] + VK_EXT_depth_clip_enable
[2] + VK_EXT_robustness2
[2] + VK_EXT_subgroup_size_control
[2] + VK_KHR_driver_properties
[2] + VK_EXT_calibrated_timestamps
[2] + VK_EXT_hdr_metadata
[2] + VK_EXT_memory_budget
[2] + VK_EXT_mesh_shader
[2] + VK_EXT_full_screen_exclusive
[2] + VK_KHR_deferred_host_operations
[2] + VK_KHR_pipeline_library
[2] + VK_KHR_ray_tracing_pipeline
[2] + VK_KHR_acceleration_structure
[2] + VK_KHR_ray_query
[2] + VK_KHR_synchronization2
[2] + VK_NV_ray_tracing_invocation_reorder
[2] + VK_EXT_conservative_rasterization
[2] + VK_EXT_depth_clip_control
[2] + VK_EXT_opacity_micromap
[2] + VK_KHR_push_descriptor
[2] + VK_NVX_binary_import
[2] + VK_NVX_image_view_handle
[2] + VK_NV_low_latency
[2] + VK_NV_optical_flow
[2] + VK_KHR_external_semaphore
[2] + VK_KHR_timeline_semaphore
[2] + VK_KHR_maintenance4
[2] + VK_KHR_external_memory_win32
[2] + VK_KHR_external_memory
[2] + VK_KHR_external_semaphore_win32
[2] + VK_NVX_binary_import
[2] + VK_NVX_image_view_handle
[2] + VK_KHR_buffer_device_address
[2] Initializing Vulkan subsystem
```
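To check a log like this for the two extensions discussed above, a short Python parser can pull the `[2] + VK_` lines out of qconsole.log. It is a sketch that assumes the line format shown in this excerpt; other builds may log differently. As the edits below this log point out, a listed extension only shows that the driver exposes it, not that the GPU accelerates it in hardware.

```python
import re

# Matches extension lines such as "[2] + VK_KHR_ray_query" (layer lines use "-").
EXT_LINE = re.compile(r"^\[\d+\]\s+\+\s+(VK_\w+)\s*$")

# Extensions of interest and the short names used in the thread.
INTERESTING = {
    "VK_NV_ray_tracing_invocation_reorder": "SER",
    "VK_EXT_opacity_micromap": "OMM",
}


def device_extensions(log_text: str) -> list[str]:
    """Extension names in log order, with repeated entries dropped."""
    seen: dict[str, None] = {}
    for line in log_text.splitlines():
        match = EXT_LINE.match(line.strip())
        if match:
            seen.setdefault(match.group(1), None)
    return list(seen)


def report(log_text: str) -> dict[str, bool]:
    """Whether each extension of interest is listed as exposed."""
    exposed = set(device_extensions(log_text))
    return {label: name in exposed for name, label in INTERESTING.items()}


# A trimmed excerpt in the format above; for a real run, pass the contents
# of qconsole.log instead (its location is an assumption).
SAMPLE = """[2] Device Extensions
[2] -------------------------
[2] + VK_KHR_swapchain
[2] + VK_EXT_memory_budget
[2] + VK_EXT_memory_budget
[2] + VK_NV_ray_tracing_invocation_reorder
[2] + VK_EXT_opacity_micromap
"""

print(report(SAMPLE))
```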


I don't have any RT-capable non-Nvidia cards to test with, but along with looking for an adventurous soul to try enabling PT/FullRT on an 8GB card, someone with an Arc or AMD card could try something like this: https://github.com/cdozdil/vulkan-spoofer

It mentions it doesn't work with Doom Eternal, which is also id Tech, but then links to a different version that does. Worth a shot to see what happens, anyway.

Edit: I think I answered my own question shortly after posting. Apparently the Nvidia drivers happily expose the extension for OMMs all the way back to Turing: https://vulkan.gpuinfo.org/listdevi...sion=VK_EXT_opacity_micromap&platform=windows

And SER all the way back to Pascal (!): https://vulkan.gpuinfo.org/listdevi...y_tracing_invocation_reorder&platform=windows

Curious. I wonder what actually happens under the hood on those cards without hardware support.

Edit 2: After poking through the Nvidia documentation (https://d29g4g2dyqv443.cloudfront.net/sites/default/files/akamai/gameworks/ser-whitepaper.pdf), it's confirmed on page 12 that on cards without hardware support for SER, it just does nothing.

OMMs, surprisingly, actually seem to work and do something useful on older cards, at least as alluded to by Nvidia in the FAQ about hardware support at the bottom of this page: https://developer.nvidia.com/rtx/ray-tracing/opacity-micro-map/get-started

Edit 3: One other reason it might be Nvidia-only could be the use of Nvidia's Neural Radiance Caching. Not sure if anyone else noticed "NRC_Vulkan.dll" in the game's install directory; right-click on it, go to Properties and check its 'Details' tab, and that's definitely what it's for.

Nvidia mentions here in their documentation that the training pass runs on the tensor cores: https://github.com/NVIDIAGameWorks/RTXGI/blob/main/docs/NrcGuide.md There were rumours that CP2077 was going to get an NRC implementation, but I can't find any information about it actually being implemented. Does this make Indiana Jones the first NRC-enabled title?


DavidGraham

Veteran

I was going to postulate that they could be using the same technique as in Wolfenstein: Youngblood, which was also NVIDIA-exclusive. It was running NVIDIA's proprietary Vulkan RT extensions at the time (because AMD had no Vulkan RT support or RT-capable GPUs back then).

They could also enable support for AMD hardware once FSR support is implemented (the developer already stated they are adding FSR support later as well as DLSS3.5 Ray Reconstruction).


VFX_Veteran

Regular

> Even the highest-end NVIDIA card has problems doing path tracing with DLSS and FG at 4K (I know my 4090 choked at certain points, so I had to reduce settings),

I am not seeing this. With my 4090 setup on Supreme, DLSS Quality and FG on, upsampled to 4K from 1440p, I'm in the 70-90FPS range all the time. I'm still in the Vatican area, though, so I don't know about the rest of the game. Performance is stellar, probably the best implementation of path tracing I've seen in any PT game. Very stable.

I was curious whether this game would run on my laptop with a GTX 1660 (Ti?), and a quick search turned up a couple of videos of it failing to launch with a Vulkan error. And yes, I know the 1660 doesn't really support ray tracing, but the card does let me enable path tracing in Cyberpunk at single-digit framerates, so I thought I might try to get a Series S-type experience out of it. Sadly, it looks like that's not on the table. I probably won't even try it myself until someone else gets it to launch, because I'd have to clear off some stuff to be able to install the game to begin with.

arandomguy

Veteran

Unless they've left in some sort of hidden support for non RT this isn't going to be something user "hackable."

Nvidia implemented DirectX 12 DXR support for 1xxx and 16xx cards via compute shaders. This is why you can run RT on those cards with games using DirectX 12.

Indiana Jones uses Vulkan.


> I am not seeing this. With my 4090 setup on Supreme, DLSS Quality and FG on, upsampled to 4K from 1440p, I'm in the 70-90FPS range all the time. [...]

I might have had other stuff running in the background; will check again.

Liking the game, but I dislike the switch to 3rd person for climbing; it really breaks the immersion.

cheapchips

Veteran

> Liking the game, but I dislike the switch to 3rd person for climbing; it really breaks the immersion.

I thought they did that well. It's so snappy it doesn't take me out of the experience.

What bugs me is the vignetting when you're crouched. They need something, but on one level in particular it turns the corners an ugly blue. I can't take my eyes off it.

Somehow, the constant biscotti munching doesn't stop me feeling like Indiana Jones.

> I thought they did that well. It's so snappy it doesn't take me out of the experience.
>
> What bugs me is the vignetting when you're crouched. [...]

Yeah, they could have used a simple symbol, just like when you are "spotted", instead of doing it like that... it bugs me too.

Found out you get XP for taking pictures of stray cats in the Vatican, so I'm spending too much time looking for cats.

I do like that not every baddie gets auto-alerted when you have a little "ruffle", and I'm laughing a little at how MANY people I can smack down without them seeming able to warn others about a rogue priest handing out "hand-cookies".