Evolve Benchmarking | Data-Driven Business Growth

Unlock insights with Evolve Benchmarking. Optimize performance, drive growth, and stay ahead with data-driven strategies.

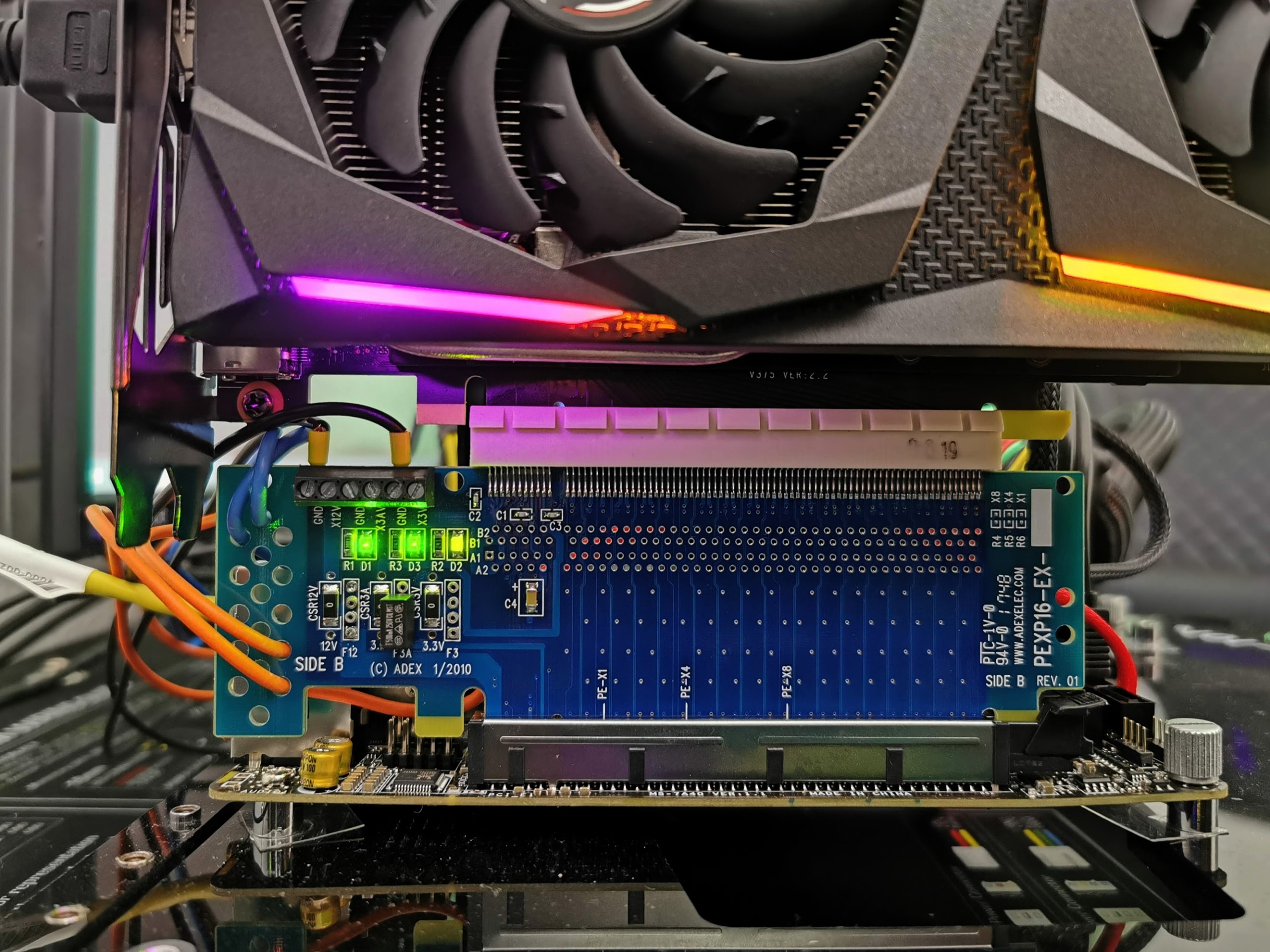

New GPU benchmark. It's not free, but you can buy it from a few different stores.

Traverse Research Introduces Evolve: The Next-Gen GPU Benchmark Suite

Traverse Research, one of the leading companies in graphics research and development, has unveiled Evolve, a groundbreaking GPU benchmark suite engineered to redefine how we measure and analyze graphics performance.

GPU technology has evolved rapidly, and the market now spans devices with very different form factors, power envelopes, and performance characteristics, so a one-size-fits-all benchmark is no longer sufficient. That makes it more important than ever for a benchmark to give deep insight into exactly what is happening during a measurement. Evolve addresses this challenge with a multifaceted approach. As an industry first, it introduces workgraph performance evaluation, pioneering a new way to assess the next generation of GPU workloads. In total, it provides seven distinct performance scores:

- Energy Consumption – Measuring power efficiency to optimize performance-per-watt.

- Ray Tracing Capabilities – Evaluating real-time ray tracing performance.

- Rasterization Performance – Assessing traditional rendering capabilities.

- Compute Performance – Measuring general-purpose GPU computing strength.

- Driver Effectiveness – Analyzing how effectively software leverages hardware.

- Acceleration Structure Build

- Workgraphs Performance (world-first evaluation)