You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

gamervivek

Regular

980Ti had a lot of headroom, AIB cards were ~20% faster out of the box, impossible to think of today.

www.techpowerup.com

www.techpowerup.com

www.techpowerup.com

www.techpowerup.com

Gigabyte GTX 980 Ti XtremeGaming 6GB Review

Gigabyte's new GTX 980 Ti XtremeGaming is highly overclocked, yet more affordable than other GTX 980 Ti variants. It also comes with a quiet triple-slot, triple-fan cooling solution that stops the fans in idle and provides great temperatures.

Zotac GeForce GTX 980 Ti AMP! Extreme 6GB Review

Zotac's GeForce GTX 980 Ti Amp! Extreme is one of the fastest custom-design GTX 980 Ti cards out there, yet comes at a relatively affordable price increase - unlike such competitors as the MSI Lightning or ASUS Matrix.

DegustatoR

Legend

Why? Ada's RT features materialized in less than a year from its launch.New Blackwell features for RT? Will be waiting a while to see those in games if ever.

It would of course help if these features would be pushed as a DX update instead of being an NvAPI thing.

TopSpoiler

Regular

Just a guess, I think this is the most likely structure of the 'redesigned SM'.

research.nvidia.com

research.nvidia.com

GPU Subwarp Interleaving | Research

Raytracing applications have naturally high thread divergence, low warp occupancy and are limited by memory latency. In this paper, we present an architectural enhancement called Subwarp Interleaving that exploits thread divergence to hide pipeline stalls in divergent sections of low warp...

US11934867B2 - Techniques for divergent thread group execution scheduling - Google Patents

Warp sharding techniques to switch execution between divergent shards on instructions that trigger a long stall, thereby interleaving execution between diverged threads within a warp instead of across warps. The technique may be applied to mitigate pipeline stalls in applications with low warp...

patents.google.com

Why? Ada's RT features materialized in less than a year from its launch.

It would of course help if these features would be pushed as a DX update instead of being an NvAPI thing.

This will be Nvidia’s fourth crack at HWRT and I’m guessing the low hanging fruit has already been picked. Maybe it’s an evolution of existing features like SER but in general more invasive changes will see even less adoption. These things really should be going through DirectX at this point.

That was just (mostly) the norm back then. Kepler GPU's generally had a fair chunk of headroom for overclocking compared to stock core/memory specs as well. And the highest end parts usually had the most, given their stock specs tended to be more conservative for binning purposes.980Ti had a lot of headroom, AIB cards were ~20% faster out of the box, impossible to think of today.

Gigabyte GTX 980 Ti XtremeGaming 6GB Review

Gigabyte's new GTX 980 Ti XtremeGaming is highly overclocked, yet more affordable than other GTX 980 Ti variants. It also comes with a quiet triple-slot, triple-fan cooling solution that stops the fans in idle and provides great temperatures.www.techpowerup.com

Zotac GeForce GTX 980 Ti AMP! Extreme 6GB Review

Zotac's GeForce GTX 980 Ti Amp! Extreme is one of the fastest custom-design GTX 980 Ti cards out there, yet comes at a relatively affordable price increase - unlike such competitors as the MSI Lightning or ASUS Matrix.www.techpowerup.com

D

Deleted member 2197

Guest

Speculation ...

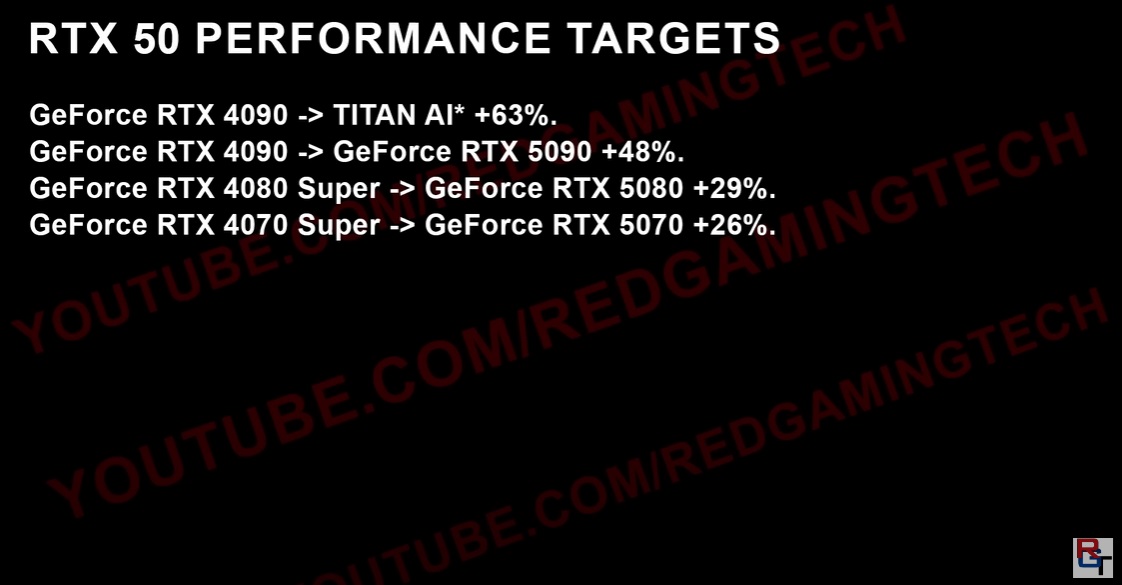

Recent rumours have suggested that Nvidia’s planned RTX 5090 will not be making full use of their flagship “Blackwell” silicon. Now, it seems clear why Nvidia isn’t using the full potential of Blackwell with the RTX 5090. Nvidia has a stronger GPU in the works, a new TITAN-class product called the TITAN AI.

Nvidia’s RTX 5090 reportedly features 28GB of GDDR7 memory over a 448-bit memory bus. This is not the full potential of Nvidia’s GB202 silicon, which reportedly features a 512-bit memory bus. If this is true, Nvidia’s planned Blackwell TITAN could feature up to 32GB of GDDR7 memory.

Below is a report from RedGamingTech that claims that Nvidia has a new TITAN GPU in the works. This GPU is reportedly around 63% faster than Nvidia’s RTX 4090. If the data below is correct, this New GPU will be around 10% faster than Nvidia’s RTX 5090.

DavidGraham

Veteran

It's more like a 55% increase now (the 3070 is 55% faster than 1080Ti at both 1080p and 1440p, and 3070 is equal to 2080Ti), timestamped below.1080Ti -> 2080Ti was a 33% increase in performance

I could believe it at $4000.

RGT dude got ripped!

Well yea, gaps over time tend to grow, especially once you get to like two generations behind with Nvidia products where driver optimization support tends to stop. And it was ever more the case with the switch to RTX/DX12U stuff where the 1080Ti was gonna fall farther behind after....yea, eight years. lolIt's more like a 55% increase now (the 3070 is 55% faster than 1080Ti at both 1080p and 1440p, and 3070 is equal to 2080Ti), timestamped below.

But people aren't buying GPU's for how they'll perform 5+ years down the line.

DavidGraham

Veteran

I am well aware of that, but that's not the case here, the 1080Ti is still on the level of 5700XT/Radeon VII, but the Turing advantage grew over time in the new games.Well yea, gaps over time tend to grow

The benchmark suite has no ray tracing tests. Only rasterization.the switch to RTX/DX12U stuff where the 1080Ti

"The Turing advantages grew over time in the new games"I am well aware of that, but that's not the case here, the 1080Ti is still on the level of 5700XT/Radeon VII, but the Turing advantage grew over time in the new games.

The benchmark suite has no ray tracing tests. Only rasterization.

Yes, that's basically what I was saying.

Also, a game like Alan Wake 2 absolutely uses ray tracing. Software ray tracing, but still something modern architectures are gonna handle better. Also of course support for mesh shaders.

I'm not entirely sure what point you're trying to make here. Sure, if Blackwell includes loads of future-orientated features that will get taken advantage of down the line and improve what's initially a disappointing performance improvement, cool. But that doesn't make it worthwhile in the moment if it's not a good leap otherwise.

Frenetic Pony

Veteran

I could believe it at $4000.

RGT dude got ripped!

"Titan AI" is a great name, Nvidia should take it.

Either way this at most would be numbers given to the PR team, no engineer would ever write "4090 -> +63%". It makes no sense, why is the 40XX series faster than 50XX? (Alligator > eats the bigger one, thanks gradeschool math) and "performance %+" is meaningless to an engineer, percentage faster in what regard, that's something consumers talk about like it's a simple number everyone can settle on, not an engineer.

I wonder if anyone would even be providing PR much of anything 5 months ahead of launch.

Frenetic Pony

Veteran

Now that I think about it, I'd bet "AI, AI AI AI! Ayyyyyeeee" *breath "Eyyyyeeeee" will be Nvidia's big pitch for consumer Blackwell

It's hot as shit, well ok the bottom has started falling out as of this writing (consolidation starting/even the analysts starting to realize how big a bubble this is/etc.) but when planning consumer Blackwell AI is hot as shit.

Nvidia, doing their thing, will pitch it up and down left right and center so it can be a "Feature differentiator" so they can justify charging a far higher price for performance ratio than the competition.

DLSS4 will probably have predictive frame generation (no need to wait for the next frame before generating an in between frame. Not invented by Nvidia by any means and can run on a phone but don't tell their fanbase that). There'll be in ram neural net compressed textures to save ram! (Excuse to not increase ram size for lower end SKUs, literally can't work on UE5/Call of Duty/etc. Won't show up anywhere but a few Nvidia sponsored games). Use a neural net to upscale *(texture magnification, happens when screen pixel density > texel density, neural nets could do this somewhat better in realtime) old game textures, claim it automatically remasters old games! I'm sure I'm missing a few more possibilities.

It's hot as shit, well ok the bottom has started falling out as of this writing (consolidation starting/even the analysts starting to realize how big a bubble this is/etc.) but when planning consumer Blackwell AI is hot as shit.

Nvidia, doing their thing, will pitch it up and down left right and center so it can be a "Feature differentiator" so they can justify charging a far higher price for performance ratio than the competition.

DLSS4 will probably have predictive frame generation (no need to wait for the next frame before generating an in between frame. Not invented by Nvidia by any means and can run on a phone but don't tell their fanbase that). There'll be in ram neural net compressed textures to save ram! (Excuse to not increase ram size for lower end SKUs, literally can't work on UE5/Call of Duty/etc. Won't show up anywhere but a few Nvidia sponsored games). Use a neural net to upscale *(texture magnification, happens when screen pixel density > texel density, neural nets could do this somewhat better in realtime) old game textures, claim it automatically remasters old games! I'm sure I'm missing a few more possibilities.

DegustatoR

Legend

It makes zero sense to launch anything AI for less than what current AI solutions are selling.

It does make sense to sell non-HBM GPUs for AI as long as you're supply limited on CoWoS though - and TSMC's latest quarterly earning conference call strongly implied those supply limits were improving but not fully resolving any time soon if demand remains as expected. Also NVIDIA can price the server variants using these chips for whatever the market will tolerate, just like they did for L40/AD102.It makes zero sense to launch anything AI for less than what current AI solutions are selling.

512-bit GDDR7 would let them go all the way to 96GiB on inference cards, and if they can combine that with NVLink like they had on RTX 3090 (and removed on 4090 presumably to improve differentiation for H100, seems like that might have hurt them slightly given it prevented from selling them for servers as much while H100 was unobtanium for much of that time) you'd end up with a very strong 192GiB dual-card solution for AI inference (but without the ability to efficiently scale above 192GiB due to limited NVLink bandwidth/no NVSwitch/etc.) - that's idle speculation on my part though and maybe too optimistic.

Given that I'd be quite surprised if RTX 5090 wasn't an AI powerhouse because they need the performance for that market even if it was a gimmick for gaming. But with the consumer cards initially limited to 448-bit and 28GiB GDDR7 (and no NVLink?), they wouldn't be worth deploying in most datacenters due to lack of memory. On the other hand, I hope they can tweak the design so they don't waste too much area on AI for everything below GB202...

On the other hand, I hope they can tweak the design so they don't waste too much area on AI for everything below GB202...

Wouldn’t it be easier to tape out a dedicated GDDR7 AI GPU? It’s not like they’re short on cash.

vjPiedPiper

Newcomer

Just a guess, I think this is the most likely structure of the 'redesigned SM'.

GPU Subwarp Interleaving | Research

Raytracing applications have naturally high thread divergence, low warp occupancy and are limited by memory latency. In this paper, we present an architectural enhancement called Subwarp Interleaving that exploits thread divergence to hide pipeline stalls in divergent sections of low warp...research.nvidia.com

US11934867B2 - Techniques for divergent thread group execution scheduling - Google Patents

Warp sharding techniques to switch execution between divergent shards on instructions that trigger a long stall, thereby interleaving execution between diverged threads within a warp instead of across warps. The technique may be applied to mitigate pipeline stalls in applications with low warp...patents.google.com

Sounds like maybe there starting to make it a bit more superscalar/pipelined.

ie. a single SM can work on multiple ops at once, not OOO but the first step.

That’s fair, at NVIDIA’s new scale it makes sense - the biggest obstacle short-term is probably hiring fast enough and replacing people early retiring, so it may not be realistic in this timeframe yet.Wouldn’t it be easier to tape out a dedicated GDDR7 AI GPU? It’s not like they’re short on cash.

On the other hand, it probably makes sense to have a strong AI card with display connectors for some markets including Quadro, and to the extent that a lot of inference workloads are often bandwidth limited, and that GB200 has ~5TB/s of bandwidth per ~800mm2 of GPU area which there is absolutely no way GDDR7 will match, even 1/4th the tensor core performance of GB200 per mm2 would probably keep it quite memory limited a lot of the time without hurting gaming perf/mm2 much.

Chiplets would definitely make this a lot easier *shrugs*

AFAIK (and I really need to double check this), NVIDIA’s L1 cache is still in-order for all misses. That is, if all of a warp’s load requests hit in the L1, it can reschedule earlier than an older warp reques that missed - but if a single thread misses in the L1, then it might have to wait until another warp’s data comes back from the far DRAM controller even though it hit in the L2 and could reschedule much sooner.Sounds like maybe there starting to make it a bit more superscalar/pipelined.

ie. a single SM can work on multiple ops at once, not OOO but the first step.

A lot of these patents therefore make little sense in the context of the current architecture and the bigger question imo is how their cache hierarchy will evolve in Blackwell.