Wouldn't this hold for Zen and Zen 2 as well?

a whitelisting for Renoir (Zen 2) so it looks like this is supported for Zen 2

It's not in the master yet, and their vendor/device IDs do not match the PCIe Root Ports on my Zen 2 processor (Ryzen 5 3600). Overall this doesn't look like a fully tested or widely supported feature that's ready for production deployment.

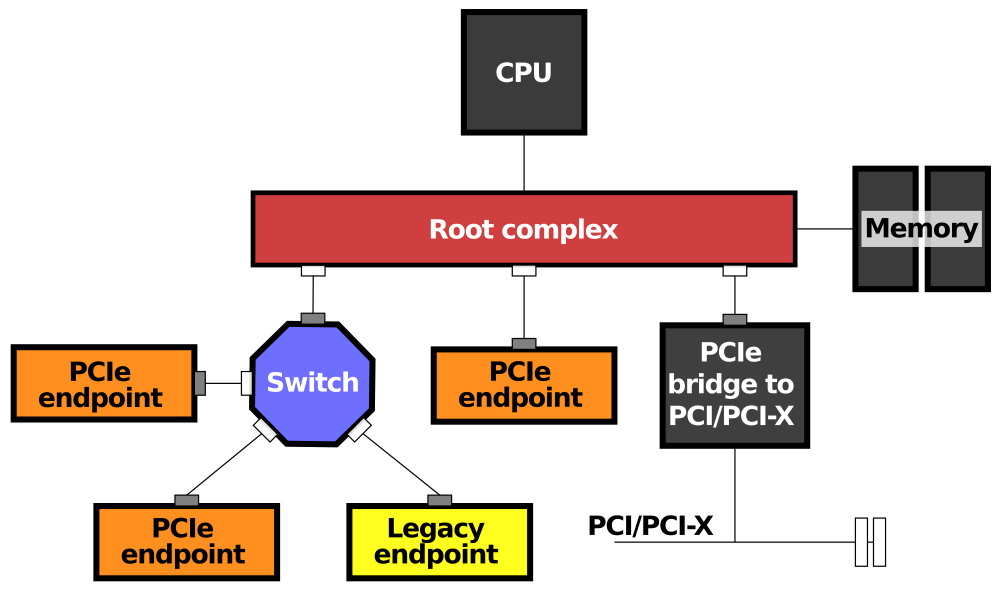

The problem is that a PCIe Root Complex is not required to route peer-to-peer transactions either within or beyond its hierarchy tree, and it does not expose its P2P capabilities as a discoverable configuration option.

Only PCIe Switches (virtual PCI-PCI bridges) are required to support P2P unconditionally - and even then it was primarily intended for multifunction devices and embedded/industrial markets, where the limited lanes from the CPU are typically split between several microcontrollers which talk to each other directly, saving the limited memory and CPU bandwidth (look at Xilinx, Broadcom, etc). This is the motivation given in the original commit request for the P2PDMA component, and the latest version only supports devices connected to the same root port or the same upstream port.
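To make the "same root port or same upstream port" condition concrete, here is a rough Python sketch of the check. This is not the kernel's actual code (which also has to deal with ACS settings and the host bridge whitelist); the `parent` map and all node names in it are made up for illustration.

```python
def bridges_above(parent, device):
    """Bridges between `device` and the root complex, nearest first."""
    chain = []
    node = parent.get(device)
    # Stop before the root complex itself (the node whose parent is None).
    while node is not None and parent.get(node) is not None:
        chain.append(node)
        node = parent.get(node)
    return chain


def share_port_below_root(parent, a, b):
    """True if `a` and `b` sit under a common root port or switch upstream port."""
    return bool(set(bridges_above(parent, a)) & set(bridges_above(parent, b)))


# Hypothetical topology: child -> parent. Names are illustrative only.
parent = {
    "root_complex": None,
    "root_port_a": "root_complex",
    "root_port_b": "root_complex",
    "switch_up": "root_port_b",
    "switch_down_0": "switch_up",
    "switch_down_1": "switch_up",
    "nvme": "root_port_a",
    "gpu": "switch_down_0",
    "nic": "switch_down_1",
}

print(share_port_below_root(parent, "gpu", "nic"))   # True: both below switch_up
print(share_port_below_root(parent, "nvme", "gpu"))  # False: only the root complex is shared
```

In this simplified model, two devices that meet only at the Root Complex fail the check, which is exactly the case where routing is not guaranteed.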

The PCIe spec assumes implementations may incorporate a virtual or physical PCIe Switch within the Root Complex to enable software-transparent routing of P2P transactions, but that is not required either.

been unable to find confirmation one way or the other as to whether the NVMe and GPU share the same host port/bridge from the CPU

No, they are on separate Root Ports. Here's how the PCI device tree looks on my AMD X570 / Ryzen 5 3600 system with RX 5700 XT graphics and GAMMIX S11 SSD (Device Manager - View - Devices by connection):

Code:

⯆ PCI Bus

AMD SMBus

⯆ PCI Express Root Port

⯆ Standard NVM Express Controller

XPG GAMMIX S11 Pro

⯆ PCI Express Root Port

⯆ PCI Express Upstream Switch Port

⯆ PCI Express Downstream Switch Port

⯆ PCI Express Upstream Switch Port

PCI Express Downstream Switch Port

PCI Express Downstream Switch Port

⯆ PCI Express Downstream Switch Port

⯆ Intel(R) I211 Gigabit Network Connection

PCI Express Downstream Switch Port

⯆ PCI Express Downstream Switch Port

AMD PCI

AMD PSP 11.0 Device

⯆ AMD USB 3.10 eXtensible Host Controller - 1.10 (Microsoft)

⯆ USB Root Hub (USB 3.0)

Generic USB Hub

⯆ AMD USB 3.10 eXtensible Host Controller - 1.10 (Microsoft)

⯆ USB Root Hub (USB 3.0)

⯆ Generic SuperSpeed USB Hub

⯆ USB Attached SCSI (UAS) Mass Storage Device

JMicron Generic SCSI Disk Device

⯆ PCI Express Downstream Switch Port

⯆ Standard SATA AHCI Controller

WDC WD4003FZEX-00Z4SA0

⯆ PCI Express Root Port

⯆ PCI Express Upstream Switch Port

⯆ PCI Express Downstream Switch Port

⯆ AMD Radeon RX 5700 XT

BenQ EW3270U

⯆ High Definition Audio Controller

⯆ AMD High Definition Audio Device

3 - BenQ EW3270U (AMD High Definition Device)

⯆ PCI Express Root Port

AMD PCI

⯆ PCI Express Root Port

AMD PCI

⯆ AMD USB 3.10 eXtensible Host Controller - 1.10 (Microsoft)

⯆ USB Root Hub (USB 3.0)

⯆ USB Composite Device

⯈ USB Input Device

⯈ USB Input Device

⯆ USB Composite Device

⯈ USB Input Device

⯈ USB Input Device

⯈ USB Input Device

⯆ High Definition Audio Controller

⯆ Realtek(R) Audio

Realtek Asio Component

Realtek Audio Effects Component

Realtek Audio Universal Service

Realtek Digital Output (Realtek(R) Audio)

Realtek Hardware Support Application

Headphones (Realtek(R) Audio)

PCI Encryption/Decryption Controller

⯆ PCI Express Root Port

Standard SATA AHCI Controller

⯆ PCI Express Root Port

Standard SATA AHCI Controller

PCI standard host CPU bridge

PCI standard host CPU bridge

PCI standard host CPU bridge

PCI standard host CPU bridge

PCI standard host CPU bridge

PCI standard host CPU bridge

PCI standard host CPU bridge

PCI standard host CPU bridge

PCI standard host CPU bridge

PCI standard host CPU bridge

PCI standard host CPU bridge

PCI standard host CPU bridge

PCI standard host CPU bridge

PCI standard host CPU bridge

PCI standard host CPU bridge

PCI standard host CPU bridge

PCI standard host CPU bridge

PCI standard host CPU bridge

PCI standard host CPU bridge

PCI standard host CPU bridge

PCI standard host CPU bridge

PCI standard host CPU bridge

PCI standard host CPU bridge

⯆ PCI standard ISA bridge

Direct memory access controller

Programmable interrupt controller

System CMOS/real time clock

System speaker

System timer

Note that chipset-based I/O controllers and the primary PCIe x16 slot (which can share lanes with the secondary x16 slot) are actually connected through a PCIe Switch - which manifests as an Upstream Switch Port and multiple Downstream Switch Ports - and the Ethernet controller is connected through another lower-level switch to allow multiple Ethernet ports.

Other Root Ports and devices are not routed through a switch.
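As a quick illustration, the paths for three of the devices can be written out and the switch levels counted. This is only a sketch: the lists below are my reading of the tree above (bridges from the Root Port down to each device), and `switch_levels` is a helper name made up for this example.

```python
# Bridge chain from the Root Port down to each device, as read off the tree above.
paths = {
    "XPG GAMMIX S11 Pro (NVMe)": [
        "PCI Express Root Port",
    ],
    "AMD Radeon RX 5700 XT": [
        "PCI Express Root Port",
        "PCI Express Upstream Switch Port",
        "PCI Express Downstream Switch Port",
    ],
    "Intel(R) I211 Gigabit Network Connection": [
        "PCI Express Root Port",
        "PCI Express Upstream Switch Port",
        "PCI Express Downstream Switch Port",
        "PCI Express Upstream Switch Port",
        "PCI Express Downstream Switch Port",
    ],
}


def switch_levels(path):
    """Count how many switches a path crosses (one upstream port per switch)."""
    return path.count("PCI Express Upstream Switch Port")


for device, path in paths.items():
    print(f"{device}: {switch_levels(path)} switch level(s)")
```

The NVMe drive hangs directly off its own Root Port with no switch in between, while the GPU sits behind one switch on a different Root Port, so the two only meet at the Root Complex.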

I truly appreciate you going to such lengths here, because I was expecting to have to write something like this in response. What it doesn't really zero in on, though, is all of the higher-level inter-driver I/O in the kernel itself, which is the reason why the harder you push I/O, the less real CPU time you have left over. The Windows 10 kernel does balance its CPU usage based on a number of hardware factors, unlike back in the days of Windows 95/98/2000, where pushing a few IDE drives could leave almost no free CPU time for the user at all.
