Intel has gone through some hard times lately, but they have interesting things like vPro and now 18A, a technology that Microsoft is interested in and has signed a deal with Intel for.

www.pcgamer.com

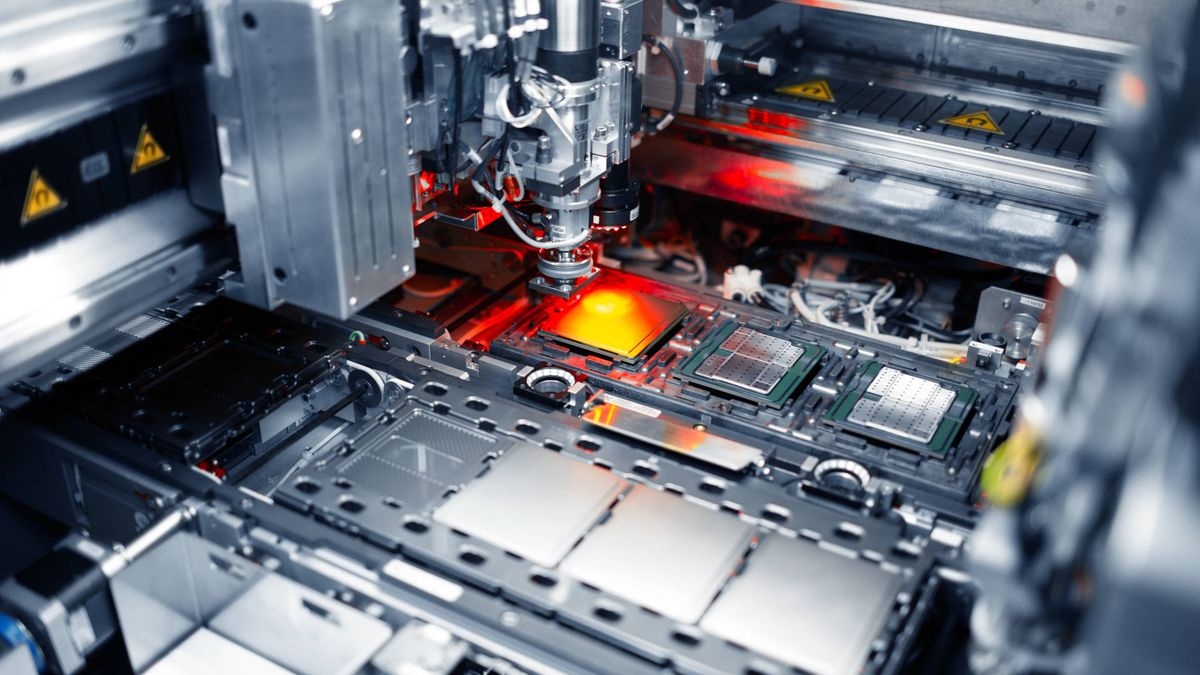

Intel CEO admits 'I've bet the whole company on 18A'

Pat Gelsinger resisted the idea last year, now he won't deny everything is riding on Intel's most advanced node yet.