PS5 Pro *spawn

- Thread starter chris1515

Ok, so ignoring the obviously contradicting specs, we can still look at some interesting things about the upscaling (I would also ignore the alleged 300 TOPS number, BTW), but this does actually seem real:

"no per title training needed... ~2ms to upscale 1080p to 4K"

How does it fare compared to DLSS / FSR? Notably the "no per title training needed" part.

DLSS has not used per-game training since version 2.0. Same with XeSS. So that would be the industry standard at this point. FSR2 does no training as it has no ML component. A 2 millisecond frame-time cost would have to be considered in relative terms: modern DLSS costs, I think, roughly less than 1 millisecond at 4K on a 3090? It is less than about half a millisecond on a 4090.

Back when DLSS 2.0 came out, Nvidia put out the performance cost of DLSS 2.0 on the GPUs at the time. The model though now is faster on Ampere and Lovelace due to optimisations and using sparsity.

It being 2 milliseconds on PS5 Pro at 4K would either imply a much larger/complex model than DLSS 2 (possible) or that there is less ML grunt in the PS5 pro than what we saw in Turing if it is similarly complex to DLSS 2.0 (also possible).
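To put the ~2 ms figure in relative terms, here is a minimal sketch of the arithmetic. The 30 and 60 fps targets are assumptions picked for illustration (the slide only gives the ~2 ms cost and the 1080p to 4K case), and the helper names are hypothetical.

```python
def frame_budget_ms(fps):
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def upscale_share(cost_ms, fps):
    """Fraction of the frame budget consumed by a fixed upscaling cost."""
    return cost_ms / frame_budget_ms(fps)


def pixel_ratio(src, dst):
    """How many output pixels the upscaler produces per input pixel."""
    return (dst[0] * dst[1]) / (src[0] * src[1])


if __name__ == "__main__":
    # Assumed frame-rate targets; only the 2 ms cost comes from the slide.
    for fps in (30, 60):
        share = upscale_share(2.0, fps)
        print(f"{fps} fps: 2 ms is {share:.0%} of a {frame_budget_ms(fps):.1f} ms frame")
    # 1080p to 4K quadruples the pixel count.
    print(pixel_ratio((1920, 1080), (3840, 2160)))
```

So the same 2 ms is a noticeably bigger slice of a 60 fps frame than of a 30 fps one, which is why the cost only means something next to the frame rate being targeted.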

Has MLID ever been correct about undisclosed hardware or had any valid inside info on products that were 8-12 months out?

It just seems to me anyhow, he just cherry-picks bits & pieces from well-known and published tech roadmaps and then sprinkles them with his wishful thinking from certain published patents.

The fact that RAM/bus size and bandwidth weren't disclosed, but everything else was (from his supposed source), sounds like BS.

I get Kepler is backing some of it, but Kepler has been incorrect on certain things himself.

MLID's track record for PlayStation leaks is absolutely atrocious. He also claimed that the original PS5 would unlock more CUs before launch and that the GPU would be more advanced than the one in the XSX, having special RDNA3 features (turns out the opposite was true).

That said, some of the notes on the document he showed do seem a bit too 'clever' for him to have come up with, given how little he actually understands of the things he talks about. Though, somebody else slightly more informed could easily have come up with this and sent it to him. Given how many things this guy gets wrong, either he's constantly making stuff up, or he does little vetting of his sources (probably a heavy mix of both, really).

This is the research for Spectral Super-Resolution:

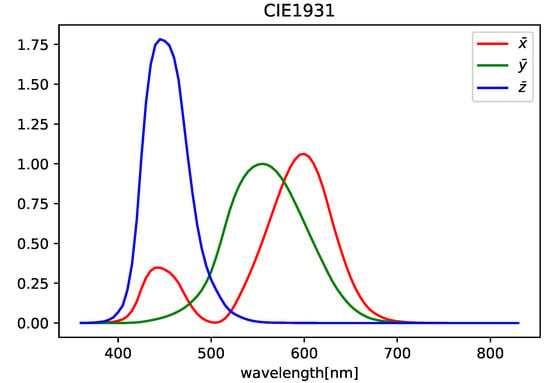

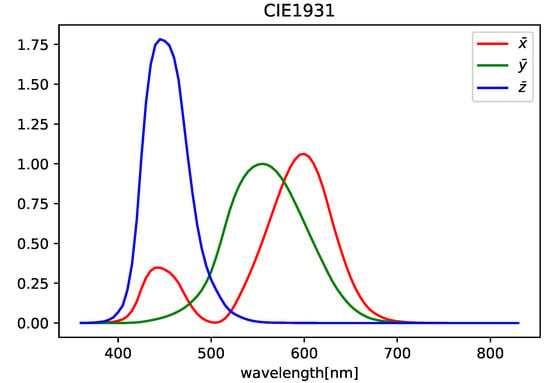

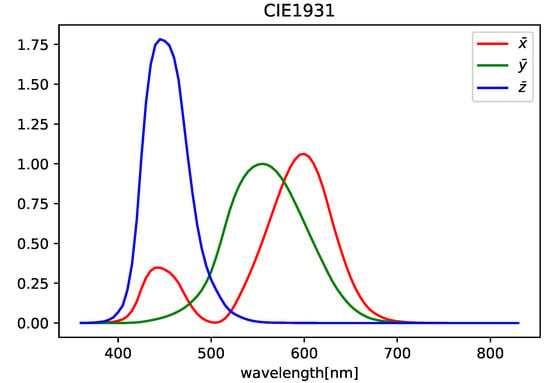

Spectral Super-Resolution for High Dynamic Range Images

The images we commonly use are RGB images that contain three pieces of information: red, green, and blue. On the other hand, hyperspectral (HS) images retain wavelength information. HS images are utilized in various fields due to their rich information content, but acquiring them requires...

I doubt he can invent this.

I heard the PSSR acronym on Discord before MLID made the video. I was sure they had another deep learning technology before, but one outdone by anything Nvidia has. Here at least it seems comparable.

EDIT: Likewise, I am sure that, like the I/O Complex, it will be very easy for developers to use because it is hidden behind a Sony library, with lower-level access for the best first- or third-party teams on the technology side.

In other words, the information was already out there. That constitutes a vast majority of the things that MLID actually gets 'right': when it's not actually exclusive information, or he is just making sensible guesses based on other rumors/information...

When it comes to genuine 'could not have known/guessed it' sort of exclusive information, he's almost always wrong. From my knowledge, he's gotten a few real Intel slide leaks, but that's basically it.

Yes, the information was there, but in fragments, not as complete as this. I only knew the acronym, for example, and people told me it may be the name of PlayStation's deep learning technology.

Interesting, but those cards still have dedicated tensor cores, right, with more grunt than even the latest RDNA3 GPUs? What about compared to TAAU and FSR2 (quality mode!) working in a similar 1080p to 4K setup? That is what this leaked PS5 Pro upscaling is being compared to, as it would make sense on an AMD console.

BTW, the results do look really good on the alleged leaked slide. Notably the aliasing on Clank's mouth and the overall sharper assets.

That seems to be talking about 'colour resolution' and wouldn't be applicable to upscaling 2D images.

From what I understand, the training is different: here it separates the luminance and chrominance passes, and this is done not in RGB space but in light wavelength (spectral) space. After that, inference is an upscale like all the other methods, and the base is TAAU. The implementation on Sony's side is probably different from the research paper, even for the spectral part of what Sony is doing.
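For anyone unsure what separating luminance from chrominance means in practice, here is a minimal sketch of the usual luma/chroma split used in video (BT.709 weights). This is only an illustration of the general idea. It is not the wavelength-space representation from the paper, and nothing here is known about Sony's implementation. The function names are made up for the example.

```python
# BT.709 luma weights. Standard video constants, not anything from the leak.
KR, KG, KB = 0.2126, 0.7152, 0.0722


def rgb_to_ycbcr(r, g, b):
    """Split an RGB pixel (components in [0, 1]) into luma and two chroma values."""
    y = KR * r + KG * g + KB * b      # brightness-like term
    cb = (b - y) / (2 * (1 - KB))     # blue-difference chroma, in [-0.5, 0.5]
    cr = (r - y) / (2 * (1 - KR))     # red-difference chroma, in [-0.5, 0.5]
    return y, cb, cr


def ycbcr_to_rgb(y, cb, cr):
    """Inverse of rgb_to_ycbcr."""
    r = y + cr * 2 * (1 - KR)
    b = y + cb * 2 * (1 - KB)
    g = (y - KR * r - KB * b) / KG
    return r, g, b


if __name__ == "__main__":
    # A neutral grey carries no chroma: all of its information is in the luma term.
    print(rgb_to_ycbcr(0.5, 0.5, 0.5))
    # Pure red has low luma and a strong red-difference component.
    print(rgb_to_ycbcr(1.0, 0.0, 0.0))
```

Once the two are split, a pipeline is free to treat the luma channel more carefully than the chroma channels, which is the general trick behind chroma subsampling in video.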

EDIT: And the title seems clear

Spectral Super-Resolution for High Dynamic Range Images

EDIT: OK, I understand what you are saying: basically, it is not for image upscaling directly. I agree, but in the end this is a research topic, not a patent. And MLID would not have been able to find this.

EDIT2: The only Sony patent that may be linked to PS5 Pro and AI is this one, which uses AI for raytracing:

US20220309730A1 - Image rendering method and apparatus - Google Patents

An image rendering method includes: selecting at least a first trained machine learning model from among a plurality of machine learning models, the machine learning model having been trained to generate data contributing to a render of at least a part of an image, where the at least first...

patents.google.com

That could mean he found a fancy-sounding term and threw it out there. This would be suddenly out-of-the-blue tech from Sony if true.

Apparently there's a Sony patent on upscaling that no-one understands?

Alex's take at ~5 mins sounds typically patent (just patent an idea) and unrelated.

Also, could the iSize ML streaming tech be repurposed? They have tech for streaming higher quality video. Maybe that's ML upscaling that'd work directly with rendering?

It's allegedly using WMMA tech from RDNA4

Again, PSSR is not something he found himself or that people invented. I know AI programmers who are trying to understand what it could be. From what I understand, humans are more sensitive to luminance errors than to colour errors, and this may be the basis of the upscaling technology. Spectral super-resolution research is being done in Japan.

And the patent is linked to VR, not upscaling, according to Fafalada, an ex-game dev on Era.

EDIT:

Microbenchmarking AMD’s RDNA 3 Graphics Architecture

Editor’s Note (6/14/2023): We have a new article that reevaluates the cache latency of Navi 31, so please refer to that article for some new latency data. RDNA 3 represents the third iteratio…

chipsandcheese.com

Maybe dual issue will work better on consoles.

On the other hand, VOPD (vector operation, dual) does leave potential for improvement. AMD can optimize games by replacing known shaders with hand-optimized assembly instead of relying on compiler code generation. Humans will be much better at seeing dual issue opportunities than a compiler can ever hope to. Wave64 mode is another opportunity. On RDNA 2, AMD seems to compile a lot of pixel shaders down to wave64 mode, where dual issue can happen without any scheduling or register allocation smarts from the compiler.

It’ll be interesting to see how RDNA 3 performs once AMD has more time to optimize for the architecture, but they’re definitely justified in not advertising VOPD dual issue capability as extra shaders. Typically, GPU manufacturers use shader count to describe how many FP32 operations their GPUs can complete per cycle. In theory, VOPD would double FP32 throughput per WGP with very little hardware overhead besides the extra execution units. But it does so by pushing heavy scheduling responsibility to the compiler. AMD is probably aware that compiler technology is not up to the task, and will not get there anytime soon.
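As a rough sketch of what "double FP32 throughput" means on paper, the peak numbers can be worked out as below. Assumptions: 2 CUs per WGP, 64 FP32 lanes per CU, and one fused multiply-add counted as two FLOPs. The 30 WGP count is the rumoured figure, and the 2.0 GHz clock is purely a placeholder, not a leaked number. The function name is made up for the example.

```python
def peak_fp32_tflops(wgps, clock_ghz, dual_issue=False,
                     cus_per_wgp=2, lanes_per_cu=64):
    """Theoretical peak FP32 throughput in TFLOPS.

    Assumes every lane retires one FMA (2 FLOPs) per clock, and that dual
    issue, when it applies, doubles that. Real shaders rarely sustain either.
    """
    flops_per_lane_per_clock = 2             # FMA = multiply + add
    issue_width = 2 if dual_issue else 1     # VOPD pairs two ops per lane
    flops_per_clock = (wgps * cus_per_wgp * lanes_per_cu
                       * flops_per_lane_per_clock * issue_width)
    return flops_per_clock * clock_ghz * 1e9 / 1e12


if __name__ == "__main__":
    # Placeholder clock of 2.0 GHz; only the 30 WGP figure comes from the rumour.
    print(peak_fp32_tflops(30, 2.0))                   # single issue
    print(peak_fp32_tflops(30, 2.0, dual_issue=True))  # ideal dual issue
```

The dual-issue figure is exactly twice the single-issue one, and it is only reached if every instruction can be paired, which is the scheduling burden the article says falls on the compiler (or on hand-written assembly).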

If the specifications known so far about the PS5 Pro are true, does it make sense to release such a model almost solely for theoretically better upscaling? Compared to the base model, the compute difference is quite moderate, and the base model can also upscale, which games do more and more often. So why would the difference be spectacular from the point of view of an average user?

Again, you don't understand why dual issue does not work on PC. On console they will make the effort. The difference between PS5 Pro and PS5 is huge, but they won't fully push the PS5 Pro, for sure. And in RT the PS5 Pro is much better than PS5 and XSX.

[Tom Henderson] PS5 Pro specs and release window details (codenamed Trinity, 30WGPs, 18000mts memory speed, November 2024 target) Rumor - Sony

54 CU is most definitely the active amount of CUs. I would bet good money that 60CUs most definitely refers to 3 shader engines, each with 20 CUs. There will be 2Cus disabled per shader engine for a total of 54 active CUs. In PS4 BC mode, only one shader engine will be active. In PS4 Pro and PS5...

I predicted ~35 last summer. If someone can post in that thread, please bump my post so I can look like I'm an insider.

I'm perma banned.

LMAO.

"Maybe dual issue will work better on consoles."

I do not base it on theoretical assumptions. I need results.

However, if that's the case, a pro model might make sense.

"So why would the difference be spectacular from the point of view of an average user?"

It won't be. It'll be a limited improvement only noticeable by some but importantly something some people will spend a premium on. Plus potentially higher framerates which are preferable for the gaming core. No different to selling top of the range GPUs versus high end GPUs. The differences aren't normally in line with the price delta.

I don't understand how this relates to the question asked. Nor where 'dual issue' comes in.

Because he said the difference in compute power is low. If the Sony SDK/API team makes the effort to hand-tune the code generated for dual issue, rather than leaving that part to the compiler, then the dual-issue performance will be there. And I am sure they will do it. This is a software problem, not a hardware problem.

And it is not up to game devs to solve the problem but the SDK team; it is easier to solve this way.
